-

This week’s release introduces new detections for denial-of-service attempts targeting React CVE-2026-23864 (https://www.cve.org/CVERecord?id=CVE-2026-23864 ↗).

Key Findings

- CVE-2026-23864 (https://www.cve.org/CVERecord?id=CVE-2026-23864 ↗) affects the `react-server-dom-parcel`, `react-server-dom-turbopack`, and `react-server-dom-webpack` packages.
- Attackers can send crafted HTTP requests to Server Function endpoints, causing server crashes, out-of-memory exceptions, or excessive CPU usage.

| Ruleset | Rule ID | Legacy Rule ID | Description | Previous Action | New Action | Comments |
| --- | --- | --- | --- | --- | --- | --- |
| Cloudflare Managed Ruleset | | N/A | React Server - DOS - CVE:CVE-2026-23864 - 1 | N/A | Block | This is a new detection. |
| Cloudflare Managed Ruleset | | N/A | React Server - DOS - CVE:CVE-2026-23864 - 2 | N/A | Block | This is a new detection. |
| Cloudflare Managed Ruleset | | N/A | React Server - DOS - CVE:CVE-2026-23864 - 3 | N/A | Block | This is a new detection. |

-

Paid plans can now have up to 100,000 files per Pages site, increased from the previous limit of 20,000 files.

To enable this increased limit, set the environment variable `PAGES_WRANGLER_MAJOR_VERSION=4` in your Pages project settings.

The Free plan remains at 20,000 files per site.

For more details, refer to the Pages limits documentation.

-

You can now store up to 10 million vectors in a single Vectorize index, doubling the previous limit of 5 million vectors. This enables larger-scale semantic search, recommendation systems, and retrieval-augmented generation (RAG) applications without splitting data across multiple indexes.

Vectorize continues to support indexes with up to 1,536 dimensions per vector at 32-bit precision. Refer to the Vectorize limits documentation for complete details.

-

Cloudflare Rulesets now includes `encode_base64()` and `sha256()` functions, enabling you to generate signed request headers directly in rule expressions. These functions support common patterns like constructing a canonical string from request attributes, computing a SHA256 digest, and Base64-encoding the result.

| Function | Description | Availability |
| --- | --- | --- |
| `encode_base64(input, flags)` | Encodes a string to Base64 format. Optional `flags` parameter: `u` for URL-safe encoding, `p` for padding (adds `=` characters to make the output length a multiple of 4, as required by some systems). By default, output is standard Base64 without padding. | All plans (in header transform rules) |
| `sha256(input)` | Computes a SHA256 hash of the input string. | Requires enablement |

Encode a string to Base64 format:

    encode_base64("hello world")

Returns: `aGVsbG8gd29ybGQ`

Encode a string to Base64 format with padding:

    encode_base64("hello world", "p")

Returns: `aGVsbG8gd29ybGQ=`

Perform a URL-safe Base64 encoding of a string:

    encode_base64("hello world", "u")

Returns: `aGVsbG8gd29ybGQ`

Compute the SHA256 hash of a secret token:

    sha256("my-token")

Returns a hash that your origin can validate to authenticate requests.

Compute the SHA256 hash of a string and encode the result to Base64 format:

    encode_base64(sha256("my-token"))

Combines hashing and encoding for systems that expect Base64-encoded signatures.

For more information, refer to the Functions reference.
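To check expected header values outside of a rule, the flag behavior described above can be approximated locally. The following is a minimal TypeScript sketch, not Cloudflare's implementation: `encodeBase64` is a hypothetical helper name, the URL-safe alphabet is assumed to be the RFC 4648 one (`-` and `_`), and combining both flags in one string is an assumption.

```typescript
// Sketch only: approximates the documented behavior of
// encode_base64(input, flags). `encodeBase64` is a hypothetical helper.
function encodeBase64(input: string, flags: string = ""): string {
  // UTF-8 encode, then map each byte to a Latin-1 character so btoa accepts it.
  const bytes = new TextEncoder().encode(input);
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }

  // btoa produces standard-alphabet Base64 with padding.
  let encoded = btoa(binary);

  // "u": switch to the URL-safe alphabet (assumed to be RFC 4648 "-" and "_").
  if (flags.indexOf("u") !== -1) {
    encoded = encoded.replace(/\+/g, "-").replace(/\//g, "_");
  }

  // Padding is only kept when "p" is present, matching the documented default.
  if (flags.indexOf("p") === -1) {
    encoded = encoded.replace(/=+$/, "");
  }
  return encoded;
}

console.log(encodeBase64("hello world"));      // aGVsbG8gd29ybGQ
console.log(encodeBase64("hello world", "p")); // aGVsbG8gd29ybGQ=
```

The two printed values match the examples listed in this entry; anything beyond those examples should be verified against a real rule.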

-

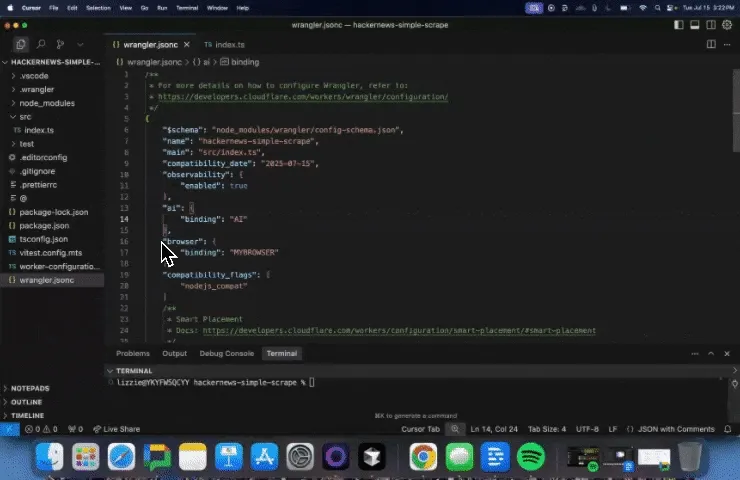

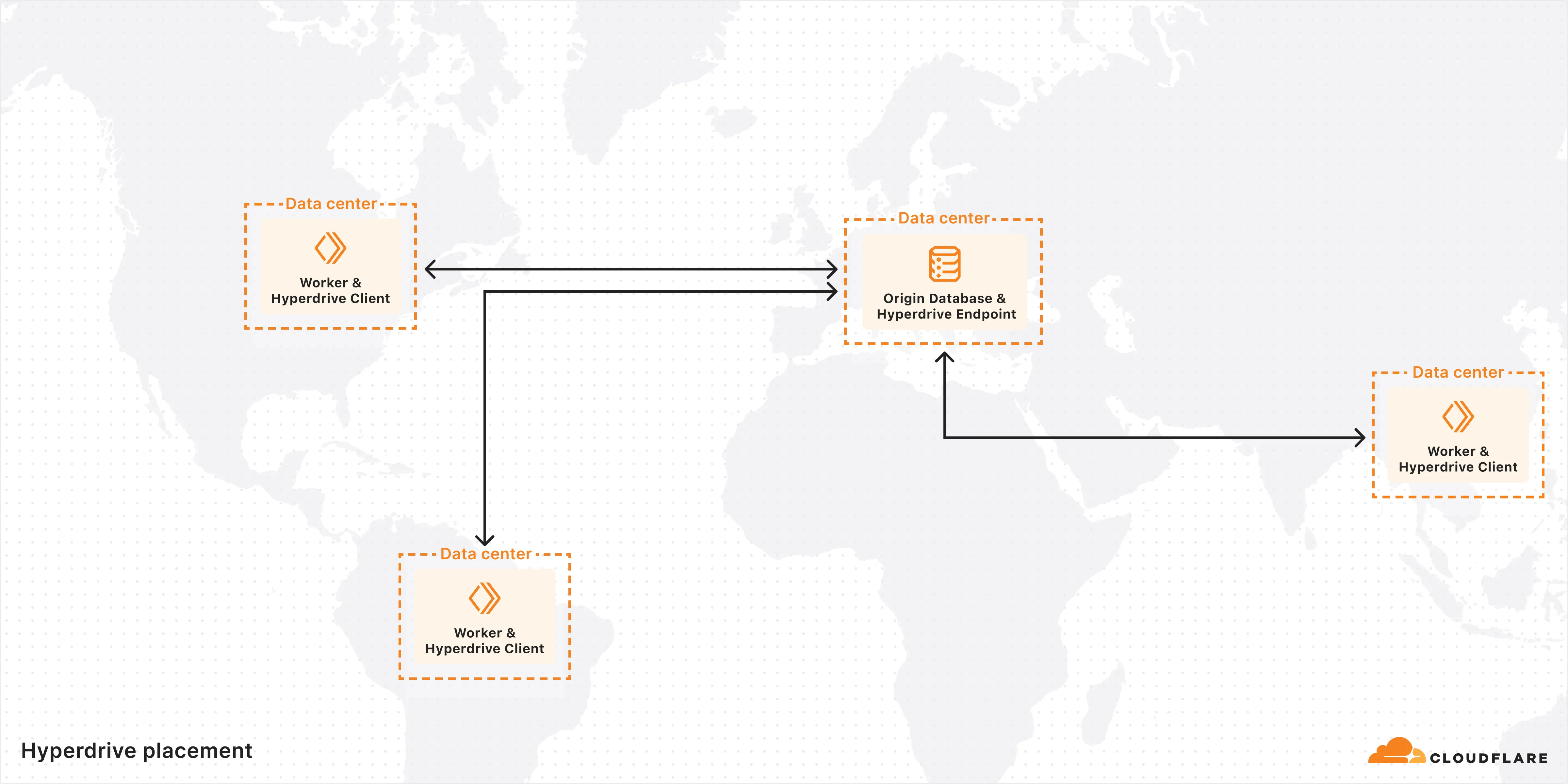

You can now configure Workers to run close to infrastructure in legacy cloud regions to minimize latency to existing services and databases. This is most useful when your Worker makes multiple round trips.

To set a placement hint, set the `placement.region` property in your Wrangler configuration file:

    {
      "placement": {
        "region": "aws:us-east-1",
      },
    }

    [placement]
    region = "aws:us-east-1"

Placement hints support Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure region identifiers. Workers run in the Cloudflare data center ↗ with the lowest latency to the specified cloud region.

If your existing infrastructure is not in these cloud providers, expose it to placement probes with `placement.host` for layer 4 checks or `placement.hostname` for layer 7 checks. These probes are designed to locate single-homed infrastructure and are not suitable for anycasted or multicasted resources.

    {
      "placement": {
        "host": "my_database_host.com:5432",
      },
    }

    [placement]
    host = "my_database_host.com:5432"

    {
      "placement": {
        "hostname": "my_api_server.com",
      },
    }

    [placement]
    hostname = "my_api_server.com"

This is an extension of Smart Placement, which automatically places your Workers closer to back-end APIs based on measured latency. When you do not know the location of your back-end APIs or have multiple back-end APIs, set `mode: "smart"`:

    {
      "placement": {
        "mode": "smart",
      },
    }

    [placement]
    mode = "smart"
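If you generate Wrangler configuration from code, the four placement options above can be modeled as a union type. This is a sketch under the assumption that the options are mutually exclusive; the `Placement` type and `describePlacement` helper are hypothetical names for illustration and are not part of Wrangler.

```typescript
// Hypothetical model of the `placement` block; assumes exactly one option is set.
type Placement =
  | { mode: "smart" }
  | { region: string }
  | { host: string }
  | { hostname: string };

// Summarizes which placement strategy a config object selects.
function describePlacement(placement: Placement): string {
  if ("mode" in placement) {
    return "Smart Placement based on measured latency";
  }
  if ("region" in placement) {
    return "near cloud region " + placement.region;
  }
  if ("host" in placement) {
    return "near layer 4 probe target " + placement.host;
  }
  return "near layer 7 probe target " + placement.hostname;
}

console.log(describePlacement({ region: "aws:us-east-1" }));
```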

-

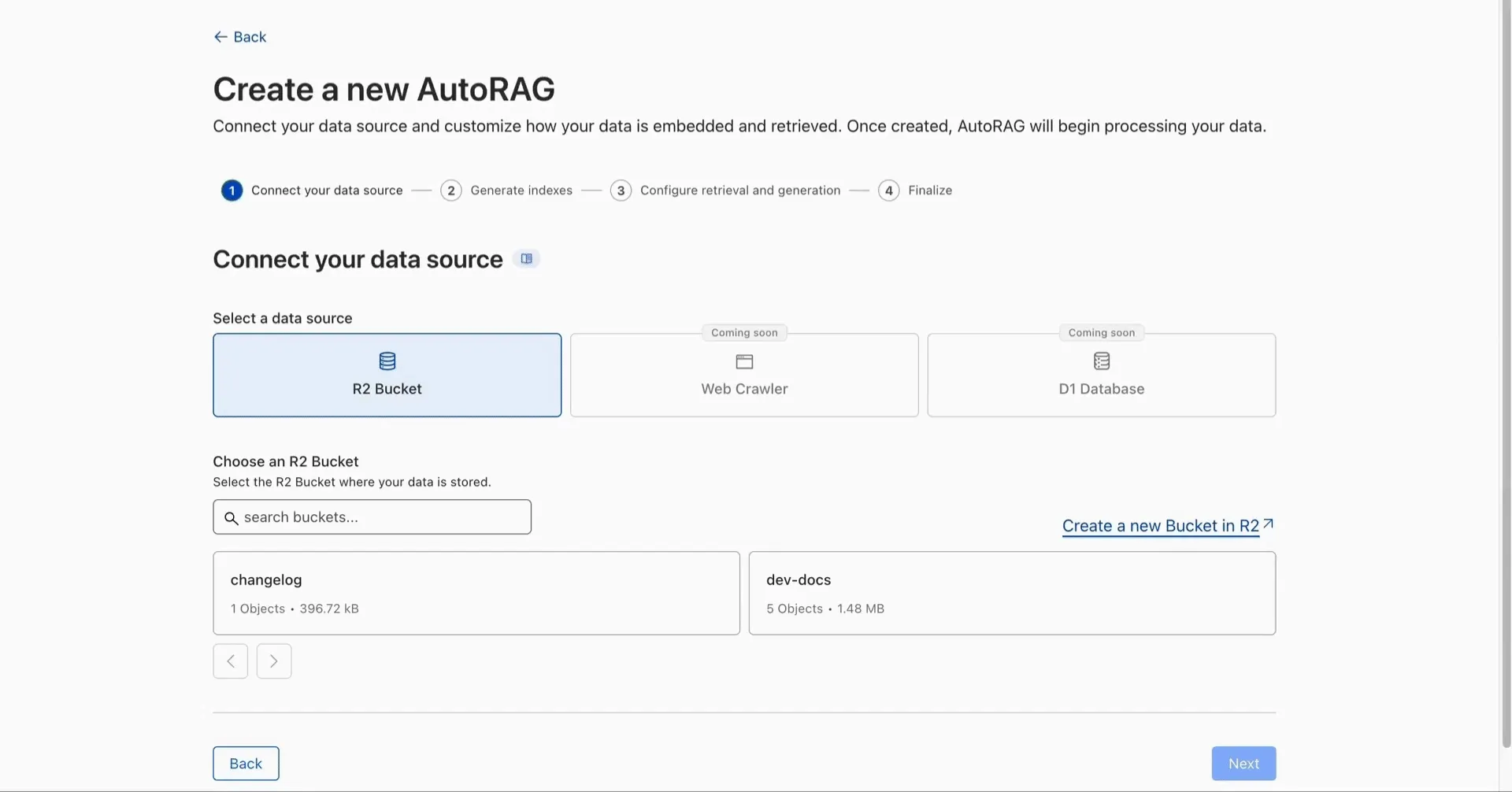

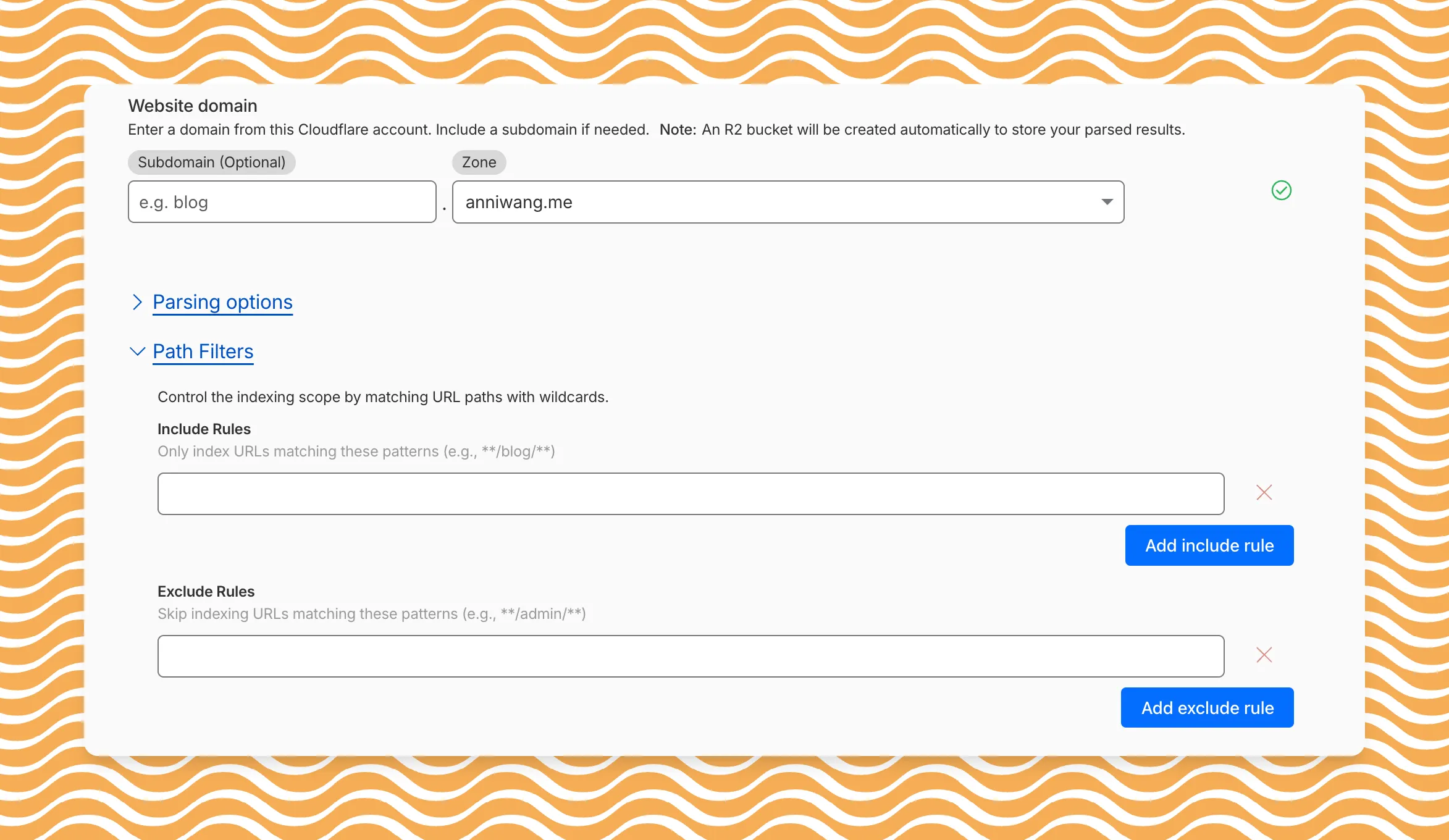

AI Search now includes path filtering for both website and R2 data sources. You can now control which content gets indexed by defining include and exclude rules for paths.

By controlling what gets indexed, you can improve the relevance and quality of your search results. You can also use path filtering to split a single data source across multiple AI Search instances for specialized search experiences.

Path filtering uses micromatch ↗ patterns, so you can use `*` to match within a directory and `**` to match across directories.

| Use case | Include | Exclude |
| --- | --- | --- |
| Index docs but skip drafts | `**/docs/**` | `**/docs/drafts/**` |
| Keep admin pages out of results | (none) | `**/admin/**` |
| Index only English content | `**/en/**` | (none) |

Configure path filters when creating a new instance or update them anytime from Settings. Check out path filtering to learn more.
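To reason about which paths a set of rules would select, the include and exclude logic can be sketched locally. The converter below is a simplified approximation that handles only `*` and `**`; it is not micromatch and does not reproduce all of its edge cases. The function names are hypothetical, and the rule that excludes take precedence over includes is an assumption to confirm in the path filtering documentation.

```typescript
// Simplified glob-to-RegExp conversion covering only `*` and `**`.
// This approximates micromatch for illustration; it is not a replacement.
function globToRegExp(pattern: string): RegExp {
  let source = "";
  let i = 0;
  while (i < pattern.length) {
    if (pattern.slice(i, i + 3) === "**/") {
      source += "(?:.*/)?"; // zero or more whole directories
      i += 3;
    } else if (pattern.slice(i, i + 2) === "**") {
      source += ".*"; // anything, including "/"
      i += 2;
    } else if (pattern[i] === "*") {
      source += "[^/]*"; // anything within a single path segment
      i += 1;
    } else {
      // Escape characters that are special in regular expressions.
      source += pattern[i].replace(/[.+?^${}()|[\]\\]/g, "\\$&");
      i += 1;
    }
  }
  return new RegExp("^" + source + "$");
}

// Assumption: a path is indexed when it matches an include rule (or no
// include rules exist) and matches no exclude rule.
function shouldIndex(path: string, include: string[], exclude: string[]): boolean {
  const matchesAny = (patterns: string[]): boolean =>
    patterns.some((pattern) => globToRegExp(pattern).test(path));
  const included = include.length === 0 || matchesAny(include);
  return included && !matchesAny(exclude);
}

console.log(shouldIndex("docs/guide/intro.md", ["**/docs/**"], ["**/docs/drafts/**"])); // true
console.log(shouldIndex("docs/drafts/wip.md", ["**/docs/**"], ["**/docs/drafts/**"]));  // false
```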

-

You can now create AI Search instances programmatically using the API. For example, use the API to create instances for each customer in a multi-tenant application or manage AI Search alongside your other infrastructure.

If you have created an AI Search instance via the dashboard before, you already have a service API token registered and can start creating instances programmatically right away. If not, follow the API guide to set up your first instance.

For example, you can now create separate search instances for each language on your website:

    for lang in en fr es de; do
      curl -X POST "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-search/instances" \
        -H "Authorization: Bearer $API_TOKEN" \
        -H "Content-Type: application/json" \
        --data '{
          "id": "docs-'"$lang"'",
          "type": "web-crawler",
          "source": "example.com",
          "source_params": {
            "path_include": ["**/'"$lang"'/**"]
          }
        }'
    done

Refer to the REST API reference for additional configuration options.
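The same per-language loop can be written in TypeScript. This sketch reuses only the endpoint and body fields from the shell example above; `buildInstanceRequest` and `createInstances` are hypothetical helper names, and the error handling is an illustrative choice rather than documented API behavior.

```typescript
// Request body shape, limited to the fields used in the shell example.
interface InstanceRequest {
  id: string;
  type: string;
  source: string;
  source_params: { path_include: string[] };
}

// Builds the body for one language-specific instance.
function buildInstanceRequest(lang: string, source: string): InstanceRequest {
  return {
    id: "docs-" + lang,
    type: "web-crawler",
    source: source,
    source_params: { path_include: ["**/" + lang + "/**"] },
  };
}

// Creates one instance per language; throws on the first non-2xx response.
async function createInstances(accountId: string, apiToken: string, langs: string[]): Promise<void> {
  for (const lang of langs) {
    const response = await fetch(
      "https://api.cloudflare.com/client/v4/accounts/" + accountId + "/ai-search/instances",
      {
        method: "POST",
        headers: {
          Authorization: "Bearer " + apiToken,
          "Content-Type": "application/json",
        },
        body: JSON.stringify(buildInstanceRequest(lang, "example.com")),
      },
    );
    if (!response.ok) {
      throw new Error("Failed to create docs-" + lang + ": HTTP " + response.status);
    }
  }
}
```

Calling `createInstances(accountId, apiToken, ["en", "fr", "es", "de"])` would issue the same four requests as the shell loop.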

-

Workers KV has an updated dashboard UI with new styling that makes it easier to navigate and to see analytics and settings for a KV namespace.

The new dashboard features a streamlined homepage for easy access to your namespaces and key operations, designed to be consistent with the rest of the dashboard UI updates. It also provides an improved analytics view.

The updated dashboard is now available for all Workers KV users. Log in to the Cloudflare Dashboard ↗ to start exploring the new interface.

-

Disclaimer: Please note that v6.0.0-beta.1 is in Beta and we are still testing it for stability.

Full Changelog: v5.2.0...v6.0.0-beta.1 ↗

In this release, you'll see a large number of breaking changes. This is primarily due to changes in the OpenAPI definitions that our libraries are based on, and to updates in the codegen we rely on to read those definitions and produce our SDK libraries. As the codegen evolves and improves, so do our code bases.

Some breaking changes were introduced by bug fixes; these are also listed below.

Please read through the list of changes below before moving to this version. This will help you understand any downstream or upstream issues it may cause in your environments.

- `BGPPrefixCreateParams.cidr`: optional → required
- `PrefixCreateParams.asn`: `number | null` → `number`
- `PrefixCreateParams.loa_document_id`: required → optional
- `ServiceBindingCreateParams.cidr`: optional → required
- `ServiceBindingCreateParams.service_id`: optional → required

- `ConfigurationUpdateResponse` removed
- `PublicSchema` → `OldPublicSchema`
- `SchemaUpload` → `UserSchemaCreateResponse`
- `ConfigurationUpdateParams.properties` removed; use `normalize`

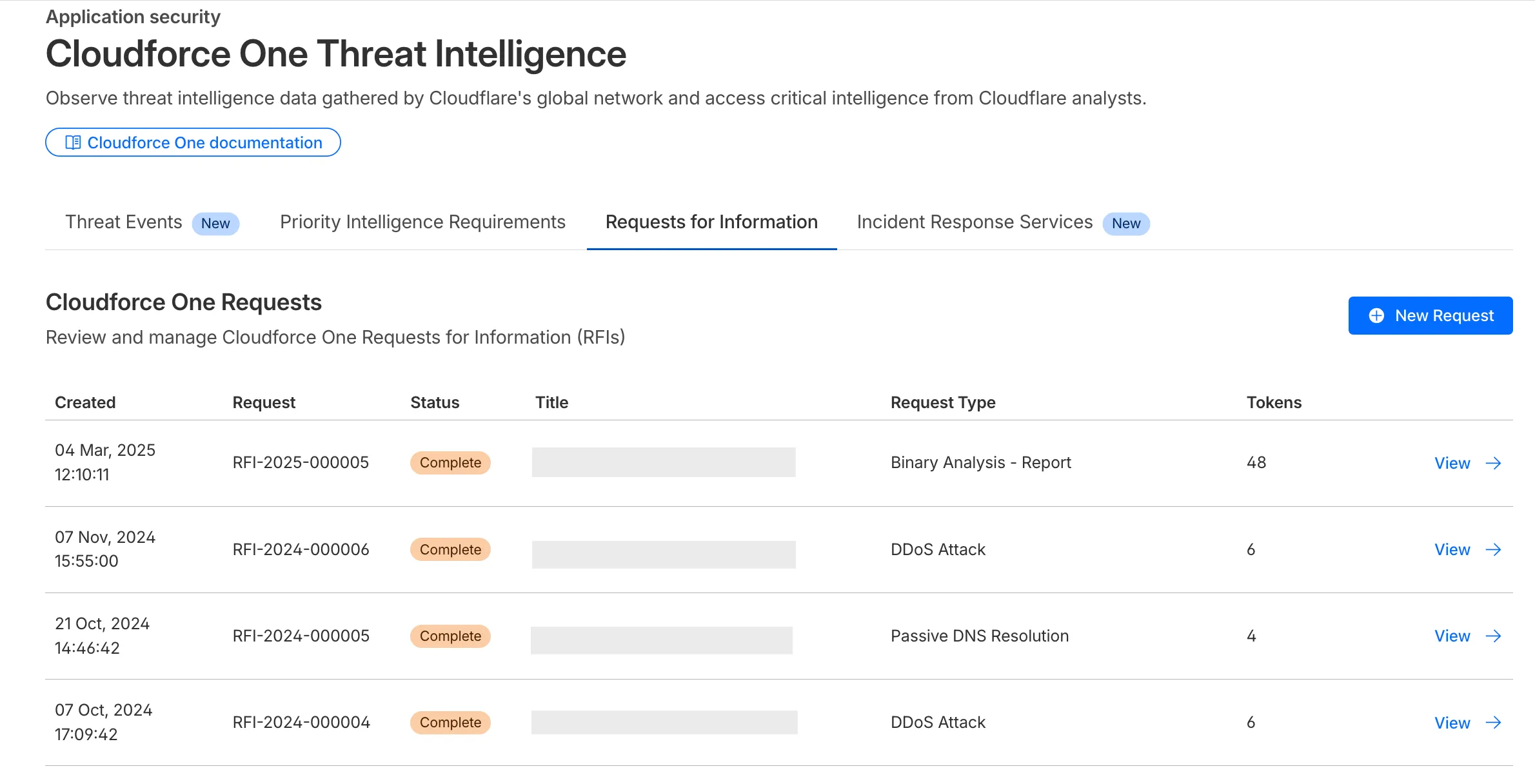

- `ThreatEventBulkCreateResponse`: `number` → complex object with counts and errors

- `DatabaseQueryParams`: simple interface → union type (`D1SingleQuery | MultipleQueries`)
- `DatabaseRawParams`: same change
- Supports batch queries via `batch` array

All record type interfaces renamed from `*Record` to short names:

- `RecordResponse.ARecord` → `RecordResponse.A`
- `RecordResponse.AAAARecord` → `RecordResponse.AAAA`
- `RecordResponse.CNAMERecord` → `RecordResponse.CNAME`
- `RecordResponse.MXRecord` → `RecordResponse.MX`
- `RecordResponse.NSRecord` → `RecordResponse.NS`
- `RecordResponse.PTRRecord` → `RecordResponse.PTR`
- `RecordResponse.TXTRecord` → `RecordResponse.TXT`
- `RecordResponse.CAARecord` → `RecordResponse.CAA`
- `RecordResponse.CERTRecord` → `RecordResponse.CERT`
- `RecordResponse.DNSKEYRecord` → `RecordResponse.DNSKEY`
- `RecordResponse.DSRecord` → `RecordResponse.DS`
- `RecordResponse.HTTPSRecord` → `RecordResponse.HTTPS`
- `RecordResponse.LOCRecord` → `RecordResponse.LOC`
- `RecordResponse.NAPTRRecord` → `RecordResponse.NAPTR`
- `RecordResponse.SMIMEARecord` → `RecordResponse.SMIMEA`
- `RecordResponse.SRVRecord` → `RecordResponse.SRV`
- `RecordResponse.SSHFPRecord` → `RecordResponse.SSHFP`
- `RecordResponse.SVCBRecord` → `RecordResponse.SVCB`
- `RecordResponse.TLSARecord` → `RecordResponse.TLSA`
- `RecordResponse.URIRecord` → `RecordResponse.URI`
- `RecordResponse.OpenpgpkeyRecord` → `RecordResponse.Openpgpkey`
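Because every rename in this list follows the same pattern (drop the `Record` suffix), references in existing code can be located and rewritten mechanically. The helper below is a hypothetical text-level sketch, not an official migration tool; it only rewrites names that appear after `RecordResponse.`, and its output should be reviewed before committing.

```typescript
// Hypothetical codemod helper: rewrites `RecordResponse.<Name>Record`
// references to the short v6 names by dropping the `Record` suffix.
function migrateRecordTypeReferences(source: string): string {
  return source.replace(/\bRecordResponse\.(\w+?)Record\b/g, "RecordResponse.$1");
}

const before = "type Supported = RecordResponse.ARecord | RecordResponse.OpenpgpkeyRecord;";
console.log(migrateRecordTypeReferences(before));
// type Supported = RecordResponse.A | RecordResponse.Openpgpkey;
```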

- `ResourceGroupCreateResponse.scope`: optional single → required array
- `ResourceGroupCreateResponse.id`: optional → required

- `OriginCACertificateCreateParams.csr`: optional → required
- `OriginCACertificateCreateParams.hostnames`: optional → required
- `OriginCACertificateCreateParams.request_type`: optional → required

- Renamed: `DeploymentsSinglePage` → `DeploymentListResponsesV4PagePaginationArray`
- Domain response fields: many optional → required

- Entire v0 API deprecated; use v1 methods (`createV1`, `listV1`, etc.)
- New sub-resources: `Sinks`, `Streams`

- `EventNotificationUpdateParams.rules`: optional → required
- Super Slurper: `bucket`, `secret` now required in source params

- `dataSource`: `string` → typed enum (23 values)
- `eventType`: `string` → typed enum (6 values)
- V2 methods require `dimension` parameter (breaking signature change)

- Removed: `status_message` field from all recipient response types

- Consolidated `SchemaCreateResponse`, `SchemaListResponse`, `SchemaEditResponse`, `SchemaGetResponse` → `PublicSchema`
- Renamed: `SchemaListResponsesV4PagePaginationArray` → `PublicSchemasV4PagePaginationArray`

- Renamed union members: `AppListResponse.UnionMember0` → `SpectrumConfigAppConfig`
- Renamed union members: `AppListResponse.UnionMember1` → `SpectrumConfigPaygoAppConfig`

- Removed: `WorkersBindingKindTailConsumer` type (all occurrences)
- Renamed: `ScriptsSinglePage` → `ScriptListResponsesSinglePage`
- Removed: `DeploymentsSinglePage`

- `datasets.create()`, `update()`, `get()` return types changed
- `PredefinedGetResponse` union members renamed to `UnionMember0-5`

- Removed: `CloudflaredCreateResponse`, `CloudflaredListResponse`, `CloudflaredDeleteResponse`, `CloudflaredEditResponse`, `CloudflaredGetResponse`
- Removed: `CloudflaredListResponsesV4PagePaginationArray`

- Reports: `create`, `list`, `get`
- Mitigations: sub-resource for abuse mitigations

- Instances: `create`, `update`, `list`, `delete`, `read`, `stats`
- Items: `list`, `get`
- Jobs: `create`, `list`, `get`, `logs`
- Tokens: `create`, `update`, `list`, `delete`, `read`

- Directory Services: `create`, `update`, `list`, `delete`, `get`
- Supports IPv4, IPv6, dual-stack, and hostname configurations

- Organizations: `create`, `update`, `list`, `delete`, `get`
- OrganizationProfile: `update`, `get`
- Hierarchical organization support with parent/child relationships

- Catalog: `list`, `enable`, `disable`, `get`
- Credentials: `create`
- MaintenanceConfigs: `update`, `get`
- Namespaces: `list`
- Tables: `list`, maintenance config management
- Apache Iceberg integration

- Apps: `get`, `post`
- Meetings: `create`, `get`, participant management
- Livestreams: 10+ methods for streaming

- Recordings: start, pause, stop, get

- Sessions: transcripts, summaries, chat

- Webhooks: full CRUD

- ActiveSession: polls, kick participants

- Analytics: organization analytics

- Configuration: `create`, `list`, `delete`, `edit`, `get`
- Credentials: `update`
- Rules: `create`, `list`, `delete`, `bulkCreate`, `bulkEdit`, `edit`, `get`
- JWT validation with RS256/384/512, PS256/384/512, ES256, ES384

- `create`, `update`, `list`, `delete`, `get`

- `create`, `update`, `list`, `delete`, `get`, `beginVerification`

- Sinks: `create`, `list`, `delete`, `get`
- Streams: `create`, `update`, `list`, `delete`, `get`

- Portals: `create`, `update`, `list`, `delete`, `read`
- Servers: `create`, `update`, `list`, `delete`, `read`, `sync`

- `managed_by` field with `parent_org_id`, `parent_org_name`

- `auto_generated` field on `LOADocumentCreateResponse`

- `delegate_loa_creation`, `irr_validation_state`, `ownership_validation_state`, `ownership_validation_token`, `rpki_validation_state`

- Added `toMarkdown.supported()` method to get all supported conversion formats

- `zdr` field added to all responses and params

- New alert type: `abuse_report_alert`
- `type` field added to PolicyFilter

- `ContentCreateParams`: refined to discriminated union (`Variant0 | Variant1`)
- Split into URL-based and HTML-based parameter variants for better type safety

- `reactivate` parameter in edit

- `ThreatEventCreateParams.indicatorType`: required → optional
- `hasChildren` field added to all threat event response types
- `datasetIds` query parameter on `AttackerListParams`, `CategoryListParams`, `TargetIndustryListParams`
- `categoryUuid` field on `TagCreateResponse`
- `indicators` array for multi-indicator support per event
- `uuid` and `preserveUuid` fields for UUID preservation in bulk create
- `format` query parameter (`'json' | 'stix2'`) on `ThreatEventListParams`
- `createdAt`, `datasetId` fields on `ThreatEventEditParams`

- Added `create()`, `update()`, `get()` methods

- New page types: `basic_challenge`, `under_attack`, `waf_challenge`

- `served_by_colo` - colo that handled query
- `jurisdiction` - `'eu' | 'fedramp'`
- Time Travel (`client.d1.database.timeTravel`): `getBookmark()`, `restore()` - point-in-time recovery

- New fields on `InvestigateListResponse`/`InvestigateGetResponse`: `envelope_from`, `envelope_to`, `postfix_id_outbound`, `replyto`
- New detection classification: `'outbound_ndr'`
- Enhanced `Finding` interface with `attachment`, `detection`, `field`, `portion`, `reason`, `score`
- Added `cursor` query parameter to `InvestigateListParams`

- New list types: `CATEGORY`, `LOCATION`, `DEVICE`

- New issue type: `'configuration_suggestion'`
- `payload` field: `unknown` → typed `Payload` interface with `detection_method`, `zone_tag`

- Added `detections.get()` method

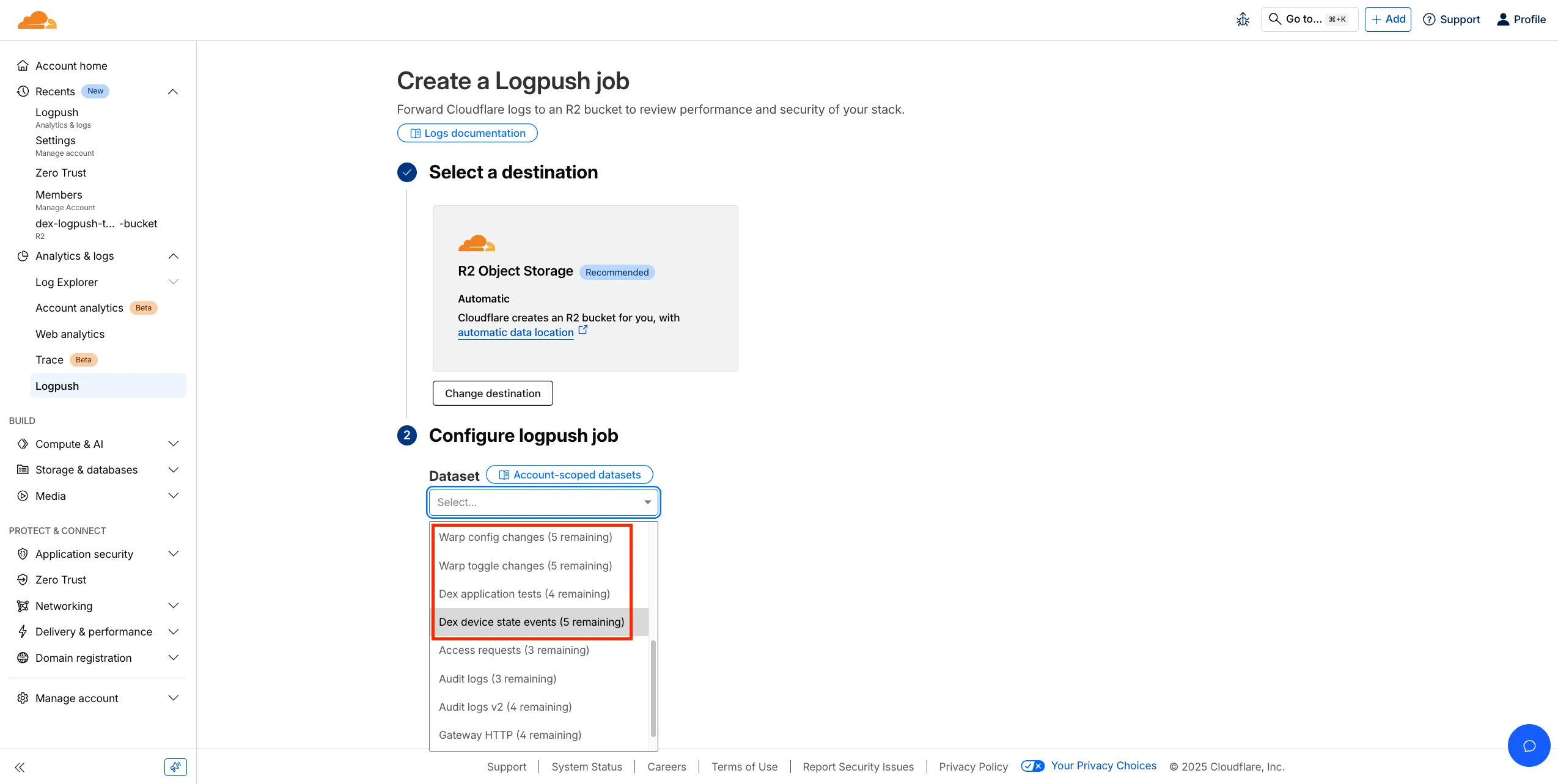

- New datasets: `dex_application_tests`, `dex_device_state_events`, `ipsec_logs`, `warp_config_changes`, `warp_toggle_changes`

- `Monitor.port`: `number` → `number | null`
- `Pool.load_shedding`: `LoadShedding` → `LoadShedding | null`
- `Pool.origin_steering`: `OriginSteering` → `OriginSteering | null`

- `license_key` field on connectors
- `provision_license` parameter for auto-provisioning
- IPSec: `custom_remote_identities` with FQDN support
- Snapshots: Bond interface, `probed_mtu` field

- New response types: `ProjectCreateResponse`, `ProjectListResponse`, `ProjectEditResponse`, `ProjectGetResponse`
- Deployment methods return specific response types instead of generic `Deployment`

- Added `subscriptions.get()` method
- Enhanced `SubscriptionGetResponse` with typed event source interfaces
- New event source types: Images, KV, R2, Vectorize, Workers AI, Workers Builds, Workflows

- Sippy: new provider `s3` (S3-compatible endpoints)
- Sippy: `bucketUrl` field for S3-compatible sources
- Super Slurper: `keys` field on source response schemas (specify specific keys to migrate)
- Super Slurper: `pathPrefix` field on source schemas
- Super Slurper: `region` field on S3 source params

- Added `geolocations.list()`, `geolocations.get()` methods
- Added V2 dimension-based methods (`summaryV2`, `timeseriesGroupsV2`) to radar sub-resources

- Added `terminal` boolean field to Resource Error interfaces

- Added `id` field to `ItemDeleteParams.Item`

- New buffering fields on `SetConfigRule`: `request_body_buffering`, `response_body_buffering`

- New scopes: `'dex'`, `'access'` (in addition to `'workers'`, `'ai_gateway'`)

- Response types now proper interfaces (was `unknown`)
- Fields now required: `id`, `certificates`, `hosts`, `status`, `type`

- `payload` field: `unknown` → typed `Payload` interface with `detection_method`, `zone_tag`

- Added: `CloudflareTunnelsV4PagePaginationArray` pagination class

- Added `subdomains.delete()` method
- `Worker.references` - track external dependencies (domains, Durable Objects, queues)
- `Worker.startup_time_ms` - startup timing
- `Script.observability` - observability settings with logging
- `Script.tag`, `Script.tags` - immutable ID and tags
- Placement: support for region, hostname, host-based placement
- `tags`, `tail_consumers` now accept `| null`
- Telemetry: `traces` field, `$containers` event info, `durableObjectId`, `transactionName`, `abr_level` fields

- `ScriptUpdateResponse`: new fields `entry_point`, `observability`, `tag`, `tags`
- `placement` field now union of 4 variants (smart mode, region, hostname, host)
- `tags`, `tail_consumers` now nullable
- `TagUpdateParams.body` now accepts `null`

- `instance_retention`: `unknown` → typed `InstanceRetention` interface with `error_retention`, `success_retention`
- New status option: `'restart'` added to `StatusEditParams.status`

- External emergency disconnect settings (4 new fields)

- `antivirus` device posture check type
- `os_version_extra` documentation improvements

- New response types: `SubscriptionCreateResponse`, `SubscriptionUpdateResponse`, `SubscriptionGetResponse`

- New `ApplicationType` values: `'mcp'`, `'mcp_portal'`, `'proxy_endpoint'`
- New destination type: `ViaMcpServerPortalDestination` for MCP server access

- Added `rules.listTenant()` method

- `ProxyEndpoint`: interface → discriminated union (`ZeroTrustGatewayProxyEndpointIP | ZeroTrustGatewayProxyEndpointIdentity`)
- `ProxyEndpointCreateParams`: interface → union type
- Added `kind` field: `'ip' | 'identity'`

- `WARPConnector*Response`: union type → interface

- API Gateway: `UserSchemas`, `Settings`, `SchemaValidation` resources
- Audit Logs: `auditLogId.not` (use `id.not`)
- CloudforceOne: `ThreatEvents.get()`, `IndicatorTypes.list()`
- Devices: `public_ip` field (use DEX API)
- Email Security: `item_count` field in Move responses
- Pipelines: v0 methods (use v1)
- Radar: old `summary()` and `timeseriesGroups()` methods (use V2)
- Rulesets: `disable_apps`, `mirage` fields
- WARP Connector: `connections` field
- Workers: `environment` parameter in Domains
- Zones: `ResponseBuffering` page rule

- mcp: correct code tool API endpoint (599703c ↗)

- mcp: return correct lines on typescript errors (5d6f999 ↗)

- organization_profile: fix bad reference (d84ea77 ↗)

- schema_validation: correctly reflect model to openapi mapping (bb86151 ↗)

- workers: fix tests (2ee37f7 ↗)

-

Cloudflare Rulesets now include new functions that enable advanced expression logic for evaluating arrays and maps. These functions allow you to build rules that match against lists of values in request or response headers, enabling use cases like country-based blocking using custom headers.

| Function | Description |
| --- | --- |
| `split(source, delimiter)` | Splits a string into an array of strings using the specified delimiter. |
| `join(array, delimiter)` | Joins an array of strings into a single string using the specified delimiter. |
| `has_key(map, key)` | Returns `true` if the specified key exists in the map. |
| `has_value(map, value)` | Returns `true` if the specified value exists in the map. |

Check if a country code exists in a header list:

    has_value(split(http.response.headers["x-allow-country"][0], ","), ip.src.country)

Check if a specific header key exists:

    has_key(http.request.headers, "x-custom-header")

Join array values for logging or comparison:

    join(http.request.headers.names, ", ")

For more information, refer to the Functions reference.
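To prototype the country allowlist logic before writing a rule, the functions can be mirrored in ordinary code. This TypeScript sketch only imitates the semantics described in the table; the helper names are hypothetical, and details such as whitespace handling around delimiters are assumptions that should be checked against the Functions reference.

```typescript
// Hypothetical local stand-ins for the Rulesets functions described above.
function split(source: string, delimiter: string): string[] {
  return source.split(delimiter);
}

function join(values: string[], delimiter: string): string {
  return values.join(delimiter);
}

function hasValue(values: string[], value: string): boolean {
  return values.indexOf(value) !== -1;
}

function hasKey(map: { [key: string]: unknown }, key: string): boolean {
  return Object.prototype.hasOwnProperty.call(map, key);
}

// Mirrors: has_value(split(<x-allow-country header>, ","), ip.src.country)
function isCountryAllowed(allowHeader: string, country: string): boolean {
  return hasValue(split(allowHeader, ","), country);
}

console.log(isCountryAllowed("US,CA,GB", "CA")); // true
console.log(isCountryAllowed("US,CA,GB", "FR")); // false
```

Note that this sketch does not trim whitespace, so a header value such as `US, CA` would produce the entry ` CA` with a leading space.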

-

This week's release focuses on improvements to existing detections to enhance coverage.

Key Findings

- Existing rule enhancements have been deployed to improve detection resilience against SQL injection.

| Ruleset | Rule ID | Legacy Rule ID | Description | Previous Action | New Action | Comments |
| --- | --- | --- | --- | --- | --- | --- |
| Cloudflare Managed Ruleset | | N/A | SQLi - Comment - Beta | Log | Block | This rule is merged into the original rule "SQLi - Comment" (ID: |
| Cloudflare Managed Ruleset | | N/A | SQLi - Comparison - Beta | Log | Block | This rule is merged into the original rule "SQLi - Comparison" (ID: |

-

The planned release has been postponed to ensure a smooth deployment.

| Announcement Date | Release Date | Release Behavior | Legacy Rule ID | Rule ID | Description | Comments |
| --- | --- | --- | --- | --- | --- | --- |
| 2025-12-01 | 2026-01-26 | Log | N/A | | XWiki - Remote Code Execution - CVE:CVE-2025-24893 2 | This is a new detection. The rule has been renamed to "Wiki - Remote Code Execution - CVE-2025-24893 2", previously known as "XWiki - Remote Code Execution - CVE-2025-24893 – Beta". |
| 2025-12-01 | 2026-01-26 | Log | N/A | | Django SQLI - CVE:CVE-2025-64459 | This is a new detection. |

-

Auxiliary Workers are now fully supported when using full-stack frameworks, such as React Router and TanStack Start, that integrate with the Cloudflare Vite plugin. They are included alongside the framework's build output in the build output directory. Note that this feature requires Vite 7 or above.

Auxiliary Workers are additional Workers that can be called via service bindings from your main (entry) Worker. They are defined in the plugin config, as in the example below:

`vite.config.ts`:

    import { defineConfig } from "vite";
    import { tanstackStart } from "@tanstack/react-start/plugin/vite";
    import { cloudflare } from "@cloudflare/vite-plugin";

    export default defineConfig({
      plugins: [
        tanstackStart(),
        cloudflare({
          viteEnvironment: { name: "ssr" },
          auxiliaryWorkers: [{ configPath: "./wrangler.aux.jsonc" }],
        }),
      ],
    });

See the Vite plugin API docs for more info.
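On the calling side, the entry Worker reaches an auxiliary Worker through a service binding on `env`. The sketch below assumes a binding named `AUX_WORKER`, which is a hypothetical name that would need to match the service binding declared in your Wrangler configuration. In a real Worker the object would be the module's default export.

```typescript
interface Env {
  // Hypothetical binding name; must match the service binding in your config.
  AUX_WORKER: { fetch(request: Request): Promise<Response> };
}

// Entry Worker handler: forwards the request to the auxiliary Worker and
// wraps its response. In a Worker module, this object is the default export.
const entryWorker = {
  async fetch(request: Request, env: Env): Promise<Response> {
    const auxResponse = await env.AUX_WORKER.fetch(request);
    const body = await auxResponse.text();
    return new Response("aux said: " + body, { status: auxResponse.status });
  },
};
```

Keeping the binding behind a small interface like `Env` also makes the handler easy to exercise with a stub in unit tests.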

-

The `.sql` file extension is now automatically configured to be importable in your Worker code when using Wrangler or the Cloudflare Vite plugin. This is particularly useful for importing migrations in Durable Objects and means you no longer need to configure custom rules when using Drizzle ↗.

SQL files are imported as JavaScript strings:

    // `example` will be a JavaScript string
    import example from "./example.sql";

-

We have made it easier to validate connectivity when deploying WARP Connector as part of your software-defined private network.

You can now `ping` the WARP Connector host directly on its LAN IP address immediately after installation. This provides a fast, familiar way to confirm that the Connector is online and reachable within your network before testing access to downstream services.

Starting with version 2025.10.186.0, WARP Connector responds to traffic addressed to its own LAN IP, giving you immediate visibility into Connector reachability.

Learn more about deploying WARP Connector and building private network connectivity with Cloudflare One.

-

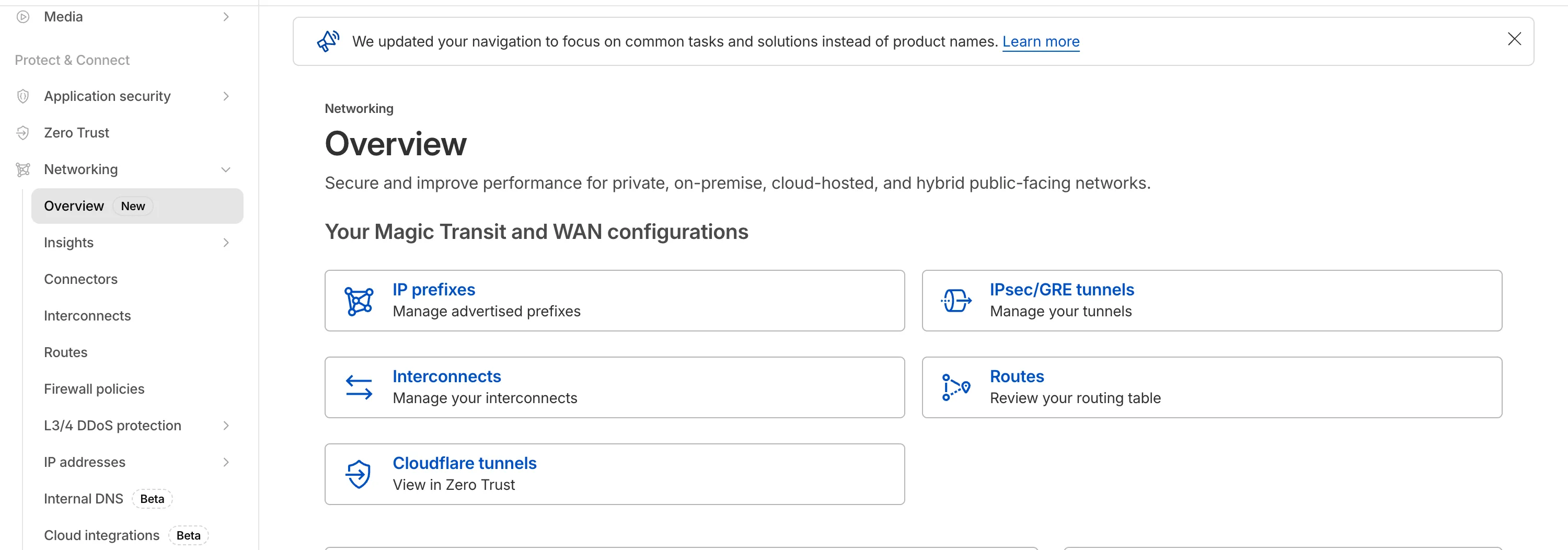

The Network Services menu structure in Cloudflare’s dashboard has been updated to reflect solutions and capabilities instead of product names. This will make it easier for you to find what you need and better reflects how our services work together.

Your existing configurations will remain the same, and you will have access to all of the same features and functionality.

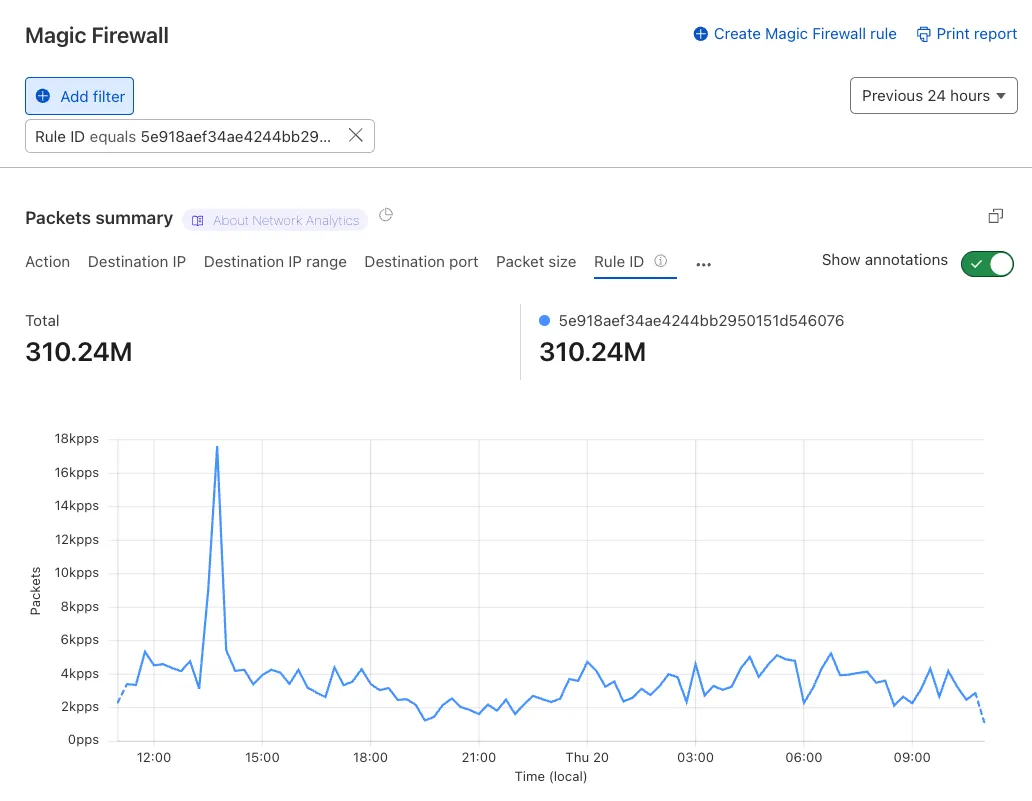

The changes visible in your dashboard may vary based on the products you use. Overall, changes relate to Magic Transit ↗, Magic WAN ↗, and Magic Firewall ↗.

Summary of changes:

- A new 'Overview' page provides access to the most common tasks across Magic Transit and Magic WAN.

- Product names have been removed from top-level navigation.

- Magic Transit and Magic WAN configuration is now organized under 'Routes' and ‘Connectors’. For example, you will find IP Prefixes under ‘Routes’, and your GRE/IPsec Tunnels under ‘Connectors.’

- Magic Firewall policies are now called 'Firewall Policies.'

- Magic WAN Connectors and Connector On-Ramps are now referenced in the dashboard as 'Appliances' and ‘Appliance profiles.’ They can be found under 'Connectors > Appliances.'

- Network analytics, network health, and real-time analytics are now available under 'Insights.'

- Packet Captures are found under 'Insights > Diagnostics.'

- You can manage your Sites from 'Insights > Network health.'

- You can find Magic Network Monitoring under 'Insights > Network flow.'

If you would like to provide feedback, please use the form in the email sent on January 7th, 2026, titled '[FYI] Upcoming Network Services Dashboard Navigation Update.'

-

Cloudflare One has expanded its [User Risk Scoring](/cloudflare-one/insights/risk-score/) capabilities by introducing two new behaviors for organizations using the [CrowdStrike integration](/cloudflare-one/integrations/service-providers/crowdstrike/).

Administrators can now automatically escalate the risk score of a user if their device matches specific CrowdStrike Zero Trust Assessment (ZTA) score ranges. This allows for more granular security policies that respond dynamically to the health of the endpoint.

New risk behaviors

The following risk scoring behaviors are now available:

- CrowdStrike low device score: Automatically increases a user's risk score when the connected device reports a "Low" score from CrowdStrike.

- CrowdStrike medium device score: Automatically increases a user's risk score when the connected device reports a "Medium" score from CrowdStrike.

These scores are derived from [CrowdStrike device posture attributes](/cloudflare-one/integrations/service-providers/crowdstrike/#device-posture-attributes), including OS signals and sensor configurations.

-

We've partnered with Black Forest Labs (BFL) again to bring their optimized FLUX.2 [klein] 4B model to Workers AI! This distilled model offers faster generation and cost-effective pricing, while maintaining great output quality. With a fixed 4-step inference process, Klein 4B is ideal for rapid prototyping and real-time applications where speed matters.

Read the BFL blog ↗ to learn more about the model itself, or try it out yourself on our multi modal playground ↗.

Pricing documentation is available on the model page or pricing page.

The model hosted on Workers AI is optimized for speed with a fixed 4-step inference process and supports up to 4 image inputs. Since this is a distilled model, the `steps` parameter is fixed at 4 and cannot be adjusted. Like FLUX.2 [dev], this image model uses multipart form data inputs, even if you just have a prompt.

With the REST API, the multipart form data input looks like this:

```bash
curl --request POST \
  --url 'https://api.cloudflare.com/client/v4/accounts/{ACCOUNT}/ai/run/@cf/black-forest-labs/flux-2-klein-4b' \
  --header 'Authorization: Bearer {TOKEN}' \
  --header 'Content-Type: multipart/form-data' \
  --form 'prompt=a sunset at the alps' \
  --form width=1024 \
  --form height=1024
```

With the Workers AI binding, you can use it as follows:

```js
const form = new FormData();
form.append("prompt", "a sunset with a dog");
form.append("width", "1024");
form.append("height", "1024");

// Assumption: `formRequest` was not defined in the original snippet. Wrapping the
// form in a Request serializes it and produces a Content-Type header that includes
// the multipart boundary. The URL is a placeholder and is never fetched.
const formRequest = new Request("http://placeholder.invalid", {
  method: "POST",
  body: form,
});
const formStream = formRequest.body;
const formContentType =
  formRequest.headers.get("content-type") || "multipart/form-data";

const resp = await env.AI.run("@cf/black-forest-labs/flux-2-klein-4b", {
  multipart: {
    body: formStream,
    contentType: formContentType,
  },
});
```

The parameters you can send to the model are detailed here:

JSON Schema for Model

Required Parameters

- `prompt` (string) - Text description of the image to generate

Optional Parameters

- `input_image_0` (string) - Binary image
- `input_image_1` (string) - Binary image
- `input_image_2` (string) - Binary image
- `input_image_3` (string) - Binary image
- `guidance` (float) - Guidance scale for generation. Higher values follow the prompt more closely
- `width` (integer) - Width of the image, default `1024`. Range: 256-1920
- `height` (integer) - Height of the image, default `768`. Range: 256-1920
- `seed` (integer) - Seed for reproducibility

Note: Since this is a distilled model, the `steps` parameter is fixed at 4 and cannot be adjusted.

## Multi-Reference Images

The FLUX.2 klein-4b model supports generating images based on reference images, just like FLUX.2 [dev]. You can use this feature to apply the style of one image to another, add a new character to an image, or iterate on past generated images. You would use it with the same multipart form data structure, with the input images in binary. The model supports up to 4 input images.

For the prompt, you can reference the images based on the index, like `take the subject of image 1 and style it like image 0` or even use natural language like `place the dog beside the woman`.

Note: you have to name the input parameter as `input_image_0`, `input_image_1`, `input_image_2`, `input_image_3` for it to work correctly. All input images must be smaller than 512x512.

```bash
curl --request POST \
  --url 'https://api.cloudflare.com/client/v4/accounts/{ACCOUNT}/ai/run/@cf/black-forest-labs/flux-2-klein-4b' \
  --header 'Authorization: Bearer {TOKEN}' \
  --header 'Content-Type: multipart/form-data' \
  --form 'prompt=take the subject of image 1 and style it like image 0' \
  --form input_image_0=@/Users/johndoe/Desktop/icedoutkeanu.png \
  --form input_image_1=@/Users/johndoe/Desktop/me.png \
  --form width=1024 \
  --form height=1024
```

Through Workers AI Binding:

```ts
// Helper function to convert a ReadableStream to a Blob
async function streamToBlob(stream: ReadableStream, contentType: string): Promise<Blob> {
  const reader = stream.getReader();
  const chunks = [];
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    chunks.push(value);
  }
  return new Blob(chunks, { type: contentType });
}

const image0 = await fetch("http://image-url");
const image1 = await fetch("http://image-url");

const form = new FormData();
const image_blob0 = await streamToBlob(image0.body, "image/png");
const image_blob1 = await streamToBlob(image1.body, "image/png");
form.append("input_image_0", image_blob0);
form.append("input_image_1", image_blob1);
form.append("prompt", "take the subject of image 1 and style it like image 0");

const resp = await env.AI.run("@cf/black-forest-labs/flux-2-klein-4b", {
  multipart: {
    body: form,
    contentType: "multipart/form-data",
  },
});
```

-

This week's release focuses on improvements to existing detections to enhance coverage.

Key Findings

- Existing rule enhancements have been deployed to improve detection resilience against SQL Injection.

| Ruleset | Rule ID | Legacy Rule ID | Description | Previous Action | New Action | Comments |
| --- | --- | --- | --- | --- | --- | --- |
| Cloudflare Managed Ruleset | | N/A | SQLi - String Function - Beta | Log | Block | This rule is merged into the original rule "SQLi - String Function" (ID: |
| Cloudflare Managed Ruleset | | N/A | SQLi - Sub Query - Beta | Log | Block | This rule is merged into the original rule "SQLi - Sub Query" (ID: |

-

We have expanded the reporting capabilities of the Cloudflare URL Scanner. In addition to existing JSON and HAR exports, users can now generate and download a PDF report directly from the Cloudflare dashboard. This update streamlines how security analysts can share findings with stakeholders who may not have access to the Cloudflare dashboard or specialized tools to parse JSON and HAR files.

Key Benefits:

- Consolidate scan results, including screenshots, security signatures, and metadata, into a single, portable document

- Easily share professional-grade summaries with non-technical stakeholders or legal teams for faster incident response

What’s new:

- PDF Export Button: A new download option is available in the URL Scanner results page within the Cloudflare dashboard

- Unified Documentation: Access all scan details—from high-level summaries to specific security flags—in one offline-friendly file

To get started with the URL Scanner and explore our reporting capabilities, visit the URL Scanner API documentation ↗.

-

A new GA release for the Windows WARP client is now available on the stable releases downloads page.

This release contains minor fixes, improvements, and new features. New features include the ability to manage WARP client connectivity for all devices in your fleet using an external signal, and a new WARP client device posture check for Antivirus.

Changes and improvements

- Added a new feature to manage WARP client connectivity for all devices using an external signal. This feature allows administrators to send a global signal from an on-premises HTTPS endpoint that force disconnects or reconnects all WARP clients in an account based on configuration set on the endpoint.

- Fixed an issue that caused occasional audio degradation and increased CPU usage on Windows by optimizing route configurations for large domain-based split tunnel rules.

- The Local Domain Fallback feature has been fixed for devices running WARP client version 2025.4.929.0 and newer. Previously, these devices could experience failures with Local Domain Fallback unless a fallback server was explicitly configured. This configuration is no longer a requirement for the feature to function correctly.

- Proxy mode now supports transparent HTTP proxying in addition to CONNECT-based proxying.

- Fixed an issue where sending large messages to the daemon by Inter-Process Communication (IPC) could cause the daemon to fail and result in service interruptions.

- Added support for a new WARP client device posture check for Antivirus. The check confirms the presence of an antivirus program on a Windows device with the option to check if the antivirus is up to date.

Known issues

For Windows 11 24H2 users, Microsoft has confirmed a regression that may lead to performance issues such as mouse lag, audio crackling, or other slowdowns. Cloudflare recommends that users experiencing these issues upgrade to Windows 11 24H2 KB5062553 or later to resolve them.

Devices with KB5055523 installed may receive a warning about `Win32/ClickFix.ABA` being present in the installer. To resolve this false positive, update Microsoft Security Intelligence to version 1.429.19.0 or later.

DNS resolution may be broken when the following conditions are all true:

- WARP is in Secure Web Gateway without DNS filtering (tunnel-only) mode.

- A custom DNS server address is configured on the primary network adapter.

- The custom DNS server address on the primary network adapter is changed while WARP is connected.

To work around this issue, reconnect the WARP client by toggling off and back on.

-

A new GA release for the macOS WARP client is now available on the stable releases downloads page.

This release contains minor fixes, improvements, and new features, including the ability to manage WARP client connectivity for all devices in your fleet using an external signal.

Changes and improvements

- The Local Domain Fallback feature has been fixed for devices running WARP client version 2025.4.929.0 and newer. Previously, these devices could experience failures with Local Domain Fallback unless a fallback server was explicitly configured. This configuration is no longer a requirement for the feature to function correctly.

- Proxy mode now supports transparent HTTP proxying in addition to CONNECT-based proxying.

- Added a new feature to manage WARP client connectivity for all devices using an external signal. This feature allows administrators to send a global signal from an on-premises HTTPS endpoint that force disconnects or reconnects all WARP clients in an account based on configuration set on the endpoint.

-

A new GA release for the Linux WARP client is now available on the stable releases downloads page.

This release contains minor fixes, improvements, and new features, including the ability to manage WARP client connectivity for all devices in your fleet using an external signal.

WARP client version 2025.8.779.0 introduced an updated public key for Linux packages. The public key must be updated if it was installed before September 12, 2025 to ensure the repository remains functional after December 4, 2025. Instructions to make this update are available at pkg.cloudflareclient.com.

Changes and improvements

- The Local Domain Fallback feature has been fixed for devices running WARP client version 2025.4.929.0 and newer. Previously, these devices could experience failures with Local Domain Fallback unless a fallback server was explicitly configured. This configuration is no longer a requirement for the feature to function correctly.

- Linux disk encryption posture check now supports non-filesystem encryption types like `dm-crypt`.

- Proxy mode now supports transparent HTTP proxying in addition to CONNECT-based proxying.

- Fixed an issue where the GUI becomes unresponsive when the Re-Authenticate in browser button is clicked.

- Added a new feature to manage WARP client connectivity for all devices using an external signal. This feature allows administrators to send a global signal from an on-premises HTTPS endpoint that force disconnects or reconnects all WARP clients in an account based on configuration set on the endpoint.

-

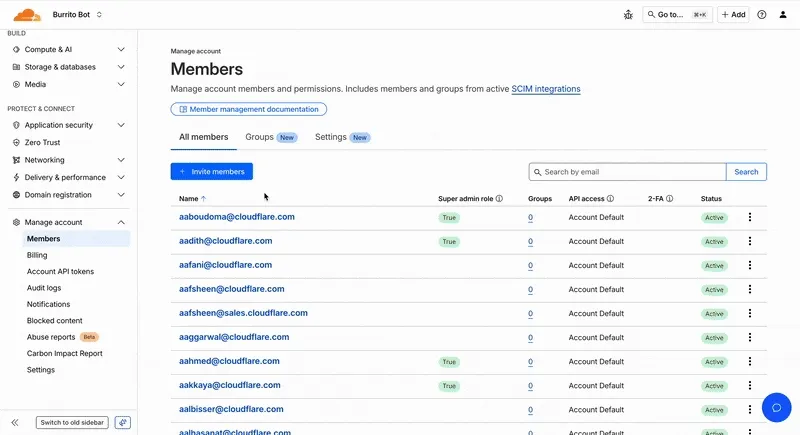

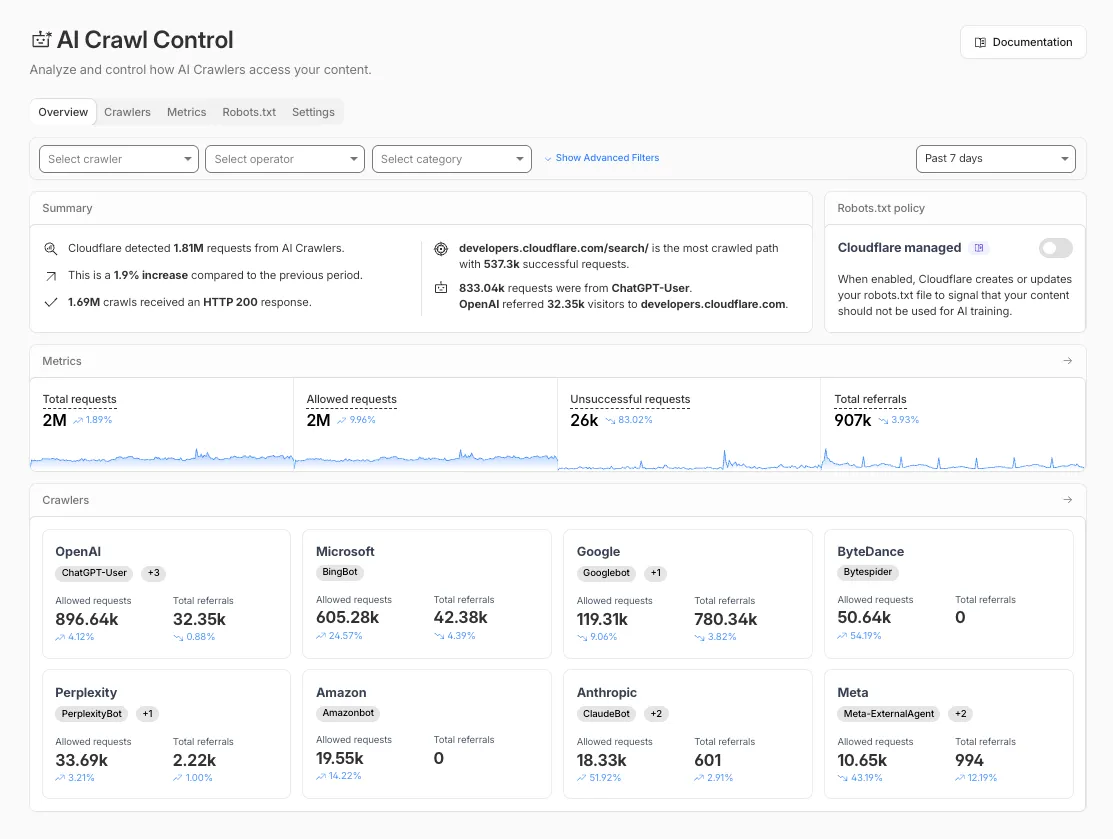

Account administrators can now assign the AI Crawl Control Read Only role to provide read-only access to AI Crawl Control at the domain level.

Users with this role can view the Overview, Crawlers, Metrics, Robots.txt, and Settings tabs but cannot modify crawler actions or settings.

This role is specific to AI Crawl Control. You still need the appropriate permissions to access other areas and features of the dashboard.

To assign, go to Manage Account > Members and add a policy with the AI Crawl Control Read Only role scoped to the desired domain.

-

The `wrangler types` command now generates TypeScript types for bindings from all environments defined in your Wrangler configuration file by default.

Previously, `wrangler types` only generated types for bindings in the top-level configuration (or a single environment when using the `--env` flag). This meant that if you had environment-specific bindings (for example, a KV namespace only in production or an R2 bucket only in staging), those bindings would be missing from your generated types, causing TypeScript errors when accessing them.

Now, running `wrangler types` collects bindings from all environments and includes them in the generated `Env` type. This ensures your types are complete regardless of which environment you deploy to.

If you want the previous behavior of generating types for only a specific environment, you can use the `--env` flag:

```sh
wrangler types --env production
```

Learn more about generating types for your Worker in the Wrangler documentation.

-

The `ip.src.metro_code` field in the Ruleset Engine is now populated with DMA (Designated Market Area) data.

You can use this field to build rules that target traffic based on geographic market areas, enabling more granular location-based policies for your applications.

| Field | Type | Description |
| --- | --- | --- |
| `ip.src.metro_code` | String \| null | The metro code (DMA) of the incoming request's IP address. Returns the designated market area code for the client's location. |

Example filter expression:

```txt
ip.src.metro_code eq "501"
```

For more information, refer to the Fields reference.
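When a rule needs to match several market areas, the expression can be generated rather than written by hand. The following is a minimal sketch, not part of any Cloudflare SDK: `metroCodeExpression` is a hypothetical helper, and it assumes metro codes are numeric strings such as `"501"` and that the Rules language `in {…}` set syntax is used for more than one value.

```typescript
// Hypothetical helper: builds a Rules language expression that matches any of
// the given DMA metro codes. Only the field name comes from the changelog.
function metroCodeExpression(codes: string[]): string {
  if (codes.length === 0) {
    throw new Error("at least one metro code is required");
  }
  // Assumption: metro codes are numeric strings. Rejecting anything else keeps
  // a value from breaking out of the quoted string in the expression.
  for (const code of codes) {
    if (!/^\d+$/.test(code)) {
      throw new Error(`invalid metro code: ${code}`);
    }
  }
  if (codes.length === 1) {
    return `ip.src.metro_code eq "${codes[0]}"`;
  }
  // Set members are separated by spaces inside the braces.
  const quoted = codes.map((code) => `"${code}"`).join(" ");
  return `ip.src.metro_code in {${quoted}}`;
}

console.log(metroCodeExpression(["501"]));
console.log(metroCodeExpression(["501", "803"]));
```

The second code, `"803"`, is only a sample value for illustration.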

-

We are excited to announce that Cloudflare Threat Events now supports the STIX2 (Structured Threat Information Expression) format. This was a highly requested feature designed to streamline how security teams consume and act upon our threat intelligence.

By adopting this industry-standard format, you can now integrate Cloudflare's threat events data more effectively into your existing security ecosystem.

- Eliminate the need for custom parsers, as STIX2 allows for "out of the box" ingestion into major Threat Intel Platforms (TIPs), SIEMs, and SOAR tools.

- STIX2 provides a standardized way to represent relationships between indicators, sightings, and threat actors, giving your analysts a clearer picture of the threat landscape.

For technical details on how to query events using this format, please refer to our Threat Events API Documentation ↗.
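To illustrate what consuming STIX2 data can look like, here is a small sketch that pulls indicator patterns out of a STIX 2.1 bundle. The interfaces cover only a few properties defined by the STIX 2.1 specification, the sample bundle is hand-written, and whether the Threat Events API returns a bundle in exactly this shape is an assumption; check the API documentation for the actual response.

```typescript
// Minimal subset of STIX 2.1 object shapes; real objects carry more properties.
interface StixObject {
  type: string;
  id: string;
  [key: string]: unknown;
}

interface StixIndicator extends StixObject {
  type: "indicator";
  pattern: string;
  pattern_type: string;
  valid_from: string;
}

interface StixBundle {
  type: "bundle";
  id: string;
  objects: StixObject[];
}

// Type guard: keeps only objects that look like indicators with a pattern.
function isIndicator(obj: StixObject): obj is StixIndicator {
  return obj.type === "indicator" && typeof obj["pattern"] === "string";
}

// Collects the detection patterns from every indicator in the bundle.
function extractIndicatorPatterns(bundle: StixBundle): string[] {
  return bundle.objects.filter(isIndicator).map((indicator) => indicator.pattern);
}

// Hand-written sample data for illustration, not real Cloudflare output.
const sampleBundle: StixBundle = {
  type: "bundle",
  id: "bundle--11111111-1111-4111-8111-111111111111",
  objects: [
    {
      type: "identity",
      id: "identity--22222222-2222-4222-8222-222222222222",
      name: "Example Source",
    },
    {
      type: "indicator",
      id: "indicator--33333333-3333-4333-8333-333333333333",
      pattern: "[ipv4-addr:value = '198.51.100.7']",
      pattern_type: "stix",
      valid_from: "2026-01-01T00:00:00Z",
    },
  ],
};

console.log(extractIndicatorPatterns(sampleBundle));
```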

-

-

This week's release focuses on improvements to existing detections to enhance coverage.

Key Findings

- Existing rule enhancements have been deployed to improve detection resilience against SQL Injection.

| Ruleset | Rule ID | Legacy Rule ID | Description | Previous Action | New Action | Comments |
| --- | --- | --- | --- | --- | --- | --- |
| Cloudflare Managed Ruleset | | N/A | SQLi - AND/OR MAKE_SET/ELT - Beta | Log | Block | This rule is merged into the original rule "SQLi - AND/OR MAKE_SET/ELT" (ID: |
| Cloudflare Managed Ruleset | | N/A | SQLi - Benchmark Function - Beta | Log | Block | This rule is merged into the original rule "SQLi - Benchmark Function" (ID: |

-

Wrangler now supports a `--check` flag for the `wrangler types` command. This flag validates that your generated types are up to date without writing any changes to disk.

This is useful in CI/CD pipelines where you want to ensure that developers have regenerated their types after making changes to their Wrangler configuration. If the types are out of date, the command will exit with a non-zero status code.

```sh
npx wrangler types --check
```

If your types are up to date, the command will succeed silently. If they are out of date, you'll see an error message indicating which files need to be regenerated.

For more information, see the Wrangler types documentation.

-

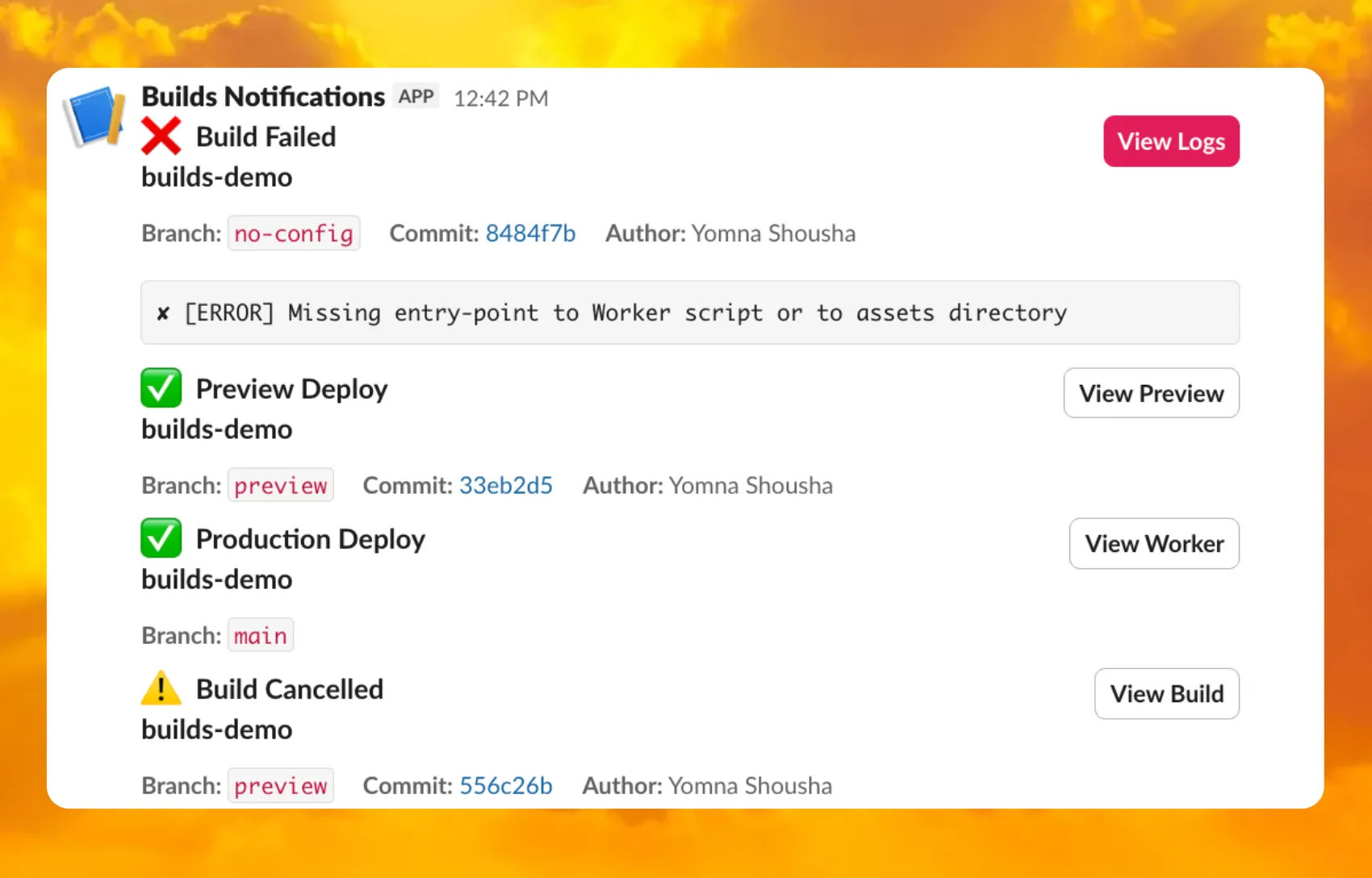

You can now receive notifications when your Workers' builds start, succeed, fail, or get cancelled using Event Subscriptions.

Workers Builds publishes events to a Queue that your Worker can read messages from, and then send notifications wherever you need — Slack, Discord, email, or any webhook endpoint.

You can deploy this Worker ↗ to your own Cloudflare account to send build notifications to Slack.

The template includes:

- Build status with Preview/Live URLs for successful deployments

- Inline error messages for failed builds

- Branch, commit hash, and author name

For setup instructions, refer to the template README ↗ or the Event Subscriptions documentation.
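As a rough illustration of the notification step, the sketch below formats a build event into a message and posts it to a webhook. The `BuildEvent` shape, its field names, and the `formatBuildMessage` and `notify` helpers are all hypothetical; the real event payload is defined in the Event Subscriptions documentation and may differ. The `{ text }` body matches what Slack incoming webhooks accept.

```typescript
// Hypothetical, simplified event shape for illustration only.
type BuildStatus = "started" | "succeeded" | "failed" | "cancelled";

interface BuildEvent {
  status: BuildStatus;
  workerName: string;
  branch: string;
  commitHash: string;
  author: string;
  errorMessage?: string;
}

// Builds a short plain-text summary: status line, source details, and the
// error message when the build failed.
function formatBuildMessage(event: BuildEvent): string {
  const shortHash = event.commitHash.slice(0, 7);
  const lines = [
    `Build ${event.status} for ${event.workerName}`,
    `${event.branch} @ ${shortHash} by ${event.author}`,
  ];
  if (event.status === "failed" && event.errorMessage) {
    lines.push(`Error: ${event.errorMessage}`);
  }
  return lines.join("\n");
}

// Posts the summary to a webhook URL, such as a Slack incoming webhook.
async function notify(webhookUrl: string, event: BuildEvent): Promise<void> {
  const response = await fetch(webhookUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ text: formatBuildMessage(event) }),
  });
  if (!response.ok) {
    throw new Error(`webhook responded with ${response.status}`);
  }
}
```

In a queue consumer Worker, `notify` would be called once per message in the batch. The template linked above is the maintained implementation.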

-

Cloudflare admin activity logs now capture each time a DNS over HTTPS (DoH) user is created.

These logs can be viewed from the Cloudflare One dashboard ↗, pulled via the Cloudflare API, and exported through Logpush.

-

You can now use the `HAVING` clause and `LIKE` pattern matching operators in Workers Analytics Engine ↗.

Workers Analytics Engine allows you to ingest and store high-cardinality data at scale and query your data through a simple SQL API.

The `HAVING` clause complements the `WHERE` clause by enabling you to filter groups based on aggregate values. While `WHERE` filters rows before aggregation, `HAVING` filters groups after aggregation is complete.

You can use `HAVING` to filter groups where the average exceeds a threshold:

```sql
SELECT
  blob1 AS probe_name,
  avg(double1) AS average_temp
FROM temperature_readings
GROUP BY probe_name
HAVING average_temp > 10
```

You can also filter groups based on aggregates such as the number of items in the group:

```sql
SELECT
  blob1 AS probe_name,
  count() AS num_readings
FROM temperature_readings
GROUP BY probe_name
HAVING num_readings > 100
```

The new pattern matching operators enable you to search for strings that match specific patterns using wildcard characters:

- `LIKE` - case-sensitive pattern matching

- `NOT LIKE` - case-sensitive pattern exclusion

- `ILIKE` - case-insensitive pattern matching

- `NOT ILIKE` - case-insensitive pattern exclusion

Pattern matching supports two wildcard characters: `%` (matches zero or more characters) and `_` (matches exactly one character).

You can match strings starting with a prefix:

```sql
SELECT *
FROM logs
WHERE blob1 LIKE 'error%'
```

You can also match file extensions (case-insensitive):

```sql
SELECT *
FROM requests
WHERE blob2 ILIKE '%.jpg'
```

Another example is excluding strings containing specific text:

```sql
SELECT *
FROM events
WHERE blob3 NOT ILIKE '%debug%'
```

Learn more about the `HAVING` clause or pattern matching operators in the Workers Analytics Engine SQL reference documentation.
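To make the wildcard semantics concrete, the sketch below translates a `LIKE` pattern into a JavaScript regular expression. It only illustrates how `%` and `_` behave and is not how Workers Analytics Engine evaluates queries; `likeToRegExp`, `like`, and `ilike` are hypothetical helper names, and case-insensitive matching here follows JavaScript's rules, which may differ from the engine's for non-ASCII text.

```typescript
// Translate a SQL LIKE pattern into an anchored regular expression.
// `%` matches zero or more characters; `_` matches exactly one character.
function likeToRegExp(pattern: string, caseInsensitive = false): RegExp {
  let source = "";
  for (const ch of pattern) {
    if (ch === "%") {
      source += "[\\s\\S]*";
    } else if (ch === "_") {
      source += "[\\s\\S]";
    } else {
      // Escape characters that have special meaning in a regular expression.
      source += ch.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
    }
  }
  // Anchoring with ^ and $ makes the pattern match the whole string, as LIKE does.
  return new RegExp(`^${source}$`, caseInsensitive ? "iu" : "u");
}

const like = (value: string, pattern: string): boolean =>
  likeToRegExp(pattern).test(value);
const ilike = (value: string, pattern: string): boolean =>
  likeToRegExp(pattern, true).test(value);

console.log(like("error: disk full", "error%")); // prefix match
console.log(ilike("photo.JPG", "%.jpg")); // case-insensitive suffix match
console.log(!ilike("Debug trace", "%debug%")); // NOT ILIKE exclusion
```

Because the generated expression is anchored at both ends, a pattern without wildcards behaves like an equality check.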

-

Custom instance types are now enabled for all Cloudflare Containers users. You can now specify exact vCPU, memory, and disk amounts, rather than being limited to pre-defined instance types. Previously, only select Enterprise customers were able to customize their instance type.

To use a custom instance type, specify the `instance_type` property as an object with `vcpu`, `memory_mib`, and `disk_mb` fields in your Wrangler configuration:

```toml
[[containers]]
image = "./Dockerfile"
instance_type = { vcpu = 2, memory_mib = 6144, disk_mb = 12000 }
```

Individual limits for custom instance types are based on the `standard-4` instance type (4 vCPU, 12 GiB memory, 20 GB disk). You must allocate at least 1 vCPU for custom instance types. For workloads requiring less than 1 vCPU, use the predefined instance types like `lite` or `basic`.

See the limits documentation for the full list of constraints on custom instance types. See the getting started guide to deploy your first Container.
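The limits quoted above can be checked before deploying. This is a minimal sketch under stated assumptions: `validateInstanceType` is a hypothetical helper, it covers only the bounds mentioned in this entry (at least 1 vCPU, and no more than the `standard-4` figures), and the conversions of 12 GiB to 12,288 MiB and 20 GB to 20,000 MB are assumptions. The limits documentation may list additional constraints.

```typescript
interface CustomInstanceType {
  vcpu: number;
  memory_mib: number;
  disk_mb: number;
}

// Bounds taken from the standard-4 figures quoted in this entry.
const MIN_VCPU = 1;
const MAX_VCPU = 4;
const MAX_MEMORY_MIB = 12 * 1024; // 12 GiB expressed in MiB (assumed conversion)
const MAX_DISK_MB = 20 * 1000; // 20 GB expressed in MB (assumed conversion)

// Returns a list of human-readable problems; an empty list means the
// configuration passes the checks implemented here.
function validateInstanceType(t: CustomInstanceType): string[] {
  const problems: string[] = [];
  if (t.vcpu < MIN_VCPU) {
    problems.push(`vcpu must be at least ${MIN_VCPU}`);
  }
  if (t.vcpu > MAX_VCPU) {
    problems.push(`vcpu must not exceed ${MAX_VCPU}`);
  }
  if (t.memory_mib > MAX_MEMORY_MIB) {
    problems.push(`memory_mib must not exceed ${MAX_MEMORY_MIB}`);
  }
  if (t.disk_mb > MAX_DISK_MB) {
    problems.push(`disk_mb must not exceed ${MAX_DISK_MB}`);
  }
  return problems;
}

// The configuration from the Wrangler example passes.
console.log(validateInstanceType({ vcpu: 2, memory_mib: 6144, disk_mb: 12000 }));
// A fractional vCPU and oversized memory are both reported.
console.log(validateInstanceType({ vcpu: 0.5, memory_mib: 20000, disk_mb: 12000 }));
```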

-

Agents SDK v0.3.0, workers-ai-provider v3.0.0, and ai-gateway-provider v3.0.0 with AI SDK v6 support

We've shipped a new release for the Agents SDK ↗ v0.3.0 bringing full compatibility with AI SDK v6 ↗ and introducing the unified tool pattern, dynamic tool approval, and enhanced React hooks with improved tool handling.

This release includes improved streaming and tool support, dynamic tool approval (for "human in the loop" systems), enhanced React hooks with `onToolCall` callback, improved error handling for streaming responses, and seamless migration from v5 patterns.

This makes it ideal for building production AI chat interfaces with Cloudflare Workers AI models, agent workflows, human-in-the-loop systems, or any application requiring reliable tool execution and approval workflows.

Additionally, we've updated workers-ai-provider v3.0.0, the official provider for Cloudflare Workers AI models, and ai-gateway-provider v3.0.0, the provider for Cloudflare AI Gateway, to be compatible with AI SDK v6.

AI SDK v6 introduces a unified tool pattern where all tools are defined on the server using the `tool()` function. This replaces the previous client-side `AITool` pattern.

```ts
import { tool } from "ai";
import { z } from "zod";

// Server: Define ALL tools on the server
const tools = {
  // Server-executed tool
  getWeather: tool({
    description: "Get weather for a city",
    inputSchema: z.object({ city: z.string() }),
    execute: async ({ city }) => fetchWeather(city)
  }),

  // Client-executed tool (no execute = client handles via onToolCall)
  getLocation: tool({
    description: "Get user location from browser",
    inputSchema: z.object({})
    // No execute function
  }),

  // Tool requiring approval (dynamic based on input)
  processPayment: tool({
    description: "Process a payment",
    inputSchema: z.object({ amount: z.number() }),
    needsApproval: async ({ amount }) => amount > 100,
    execute: async ({ amount }) => charge(amount)
  })
};
```

```ts
// Client: Handle client-side tools via onToolCall callback
import { useAgentChat } from "agents/ai-react";

const { messages, sendMessage, addToolOutput } = useAgentChat({
  agent,
  onToolCall: async ({ toolCall, addToolOutput }) => {
    if (toolCall.toolName === "getLocation") {
      const position = await new Promise((resolve, reject) => {
        navigator.geolocation.getCurrentPosition(resolve, reject);
      });
      addToolOutput({
        toolCallId: toolCall.toolCallId,
        output: {
          lat: position.coords.latitude,
          lng: position.coords.longitude
        }
      });
    }
  }
});
```

Key benefits of the unified tool pattern:

- Server-defined tools: All tools are defined in one place on the server

- Dynamic approval: Use `needsApproval` to conditionally require user confirmation

- Cleaner client code: Use `onToolCall` callback instead of managing tool configs

- Type safety: Full TypeScript support with proper tool typing

Creates a new chat interface with enhanced v6 capabilities.

```ts
// Basic chat setup with onToolCall
const { messages, sendMessage, addToolOutput } = useAgentChat({
  agent,
  onToolCall: async ({ toolCall, addToolOutput }) => {
    // Handle client-side tool execution
    await addToolOutput({
      toolCallId: toolCall.toolCallId,
      output: { result: "success" }
    });
  }
});
```

Use `needsApproval` on server tools to conditionally require user confirmation:

```ts
const paymentTool = tool({
  description: "Process a payment",
  inputSchema: z.object({
    amount: z.number(),
    recipient: z.string()
  }),
  needsApproval: async ({ amount }) => amount > 1000,
  execute: async ({ amount, recipient }) => {
    return await processPayment(amount, recipient);
  }
});
```

The `isToolUIPart` and `getToolName` functions now check both static and dynamic tool parts:

```ts
import { isToolUIPart, getToolName } from "ai";

// Find the first tool part that is still waiting for confirmation
const pendingToolPart = messages
  .flatMap((m) => m.parts ?? [])
  .find((part) => isToolUIPart(part) && part.state === "input-available");

// Handle tool confirmation
if (pendingToolPart && isToolUIPart(pendingToolPart)) {
  await addToolOutput({
    toolCallId: pendingToolPart.toolCallId,
    output: "User approved the action"
  });
}
```

If you need the v5 behavior (static-only checks), use the new functions:

```ts
import { isStaticToolUIPart, getStaticToolName } from "ai";
```

The `convertToModelMessages()` function is now asynchronous. Update all calls to await the result:

```ts
import { convertToModelMessages } from "ai";

const result = streamText({
  messages: await convertToModelMessages(this.messages),
  model: openai("gpt-4o")
});
```

The `CoreMessage` type has been removed. Use `ModelMessage` instead:

```ts
import { convertToModelMessages, type ModelMessage } from "ai";

const modelMessages: ModelMessage[] = await convertToModelMessages(messages);
```

The `mode` option for `generateObject` has been removed:

```ts
// Before (v5)
const result = await generateObject({
  mode: "json",
  model,
  schema,
  prompt
});

// After (v6)
const result = await generateObject({
  model,
  schema,
  prompt
});
```

While `generateObject` and `streamObject` are still functional, the recommended approach is to use `generateText`/`streamText` with the `Output.object()` helper:

```ts
import { generateText, Output, stepCountIs } from "ai";

const { output } = await generateText({
  model: openai("gpt-4"),
  output: Output.object({
    schema: z.object({ name: z.string() })
  }),
  stopWhen: stepCountIs(2),
  prompt: "Generate a name"
});
```

Note: When using structured output with `generateText`, you must configure multiple steps with `stopWhen` because generating the structured output is itself a step.

Seamless integration with Cloudflare Workers AI models through the updated workers-ai-provider v3.0.0 with AI SDK v6 support.

Use Cloudflare Workers AI models directly in your agent workflows:

TypeScript import { createWorkersAI } from "workers-ai-provider";import { useAgentChat } from "agents/ai-react";// Create Workers AI model (v3.0.0 - enhanced v6 internals)const model = createWorkersAI({binding: env.AI,})("@cf/meta/llama-3.2-3b-instruct");Workers AI models now support v6 file handling with automatic conversion:

TypeScript // Send images and files to Workers AI modelssendMessage({role: "user",parts: [{ type: "text", text: "Analyze this image:" },{type: "file",data: imageBuffer,mediaType: "image/jpeg",},],});// Workers AI provider automatically converts to proper formatEnhanced streaming support with automatic warning detection:

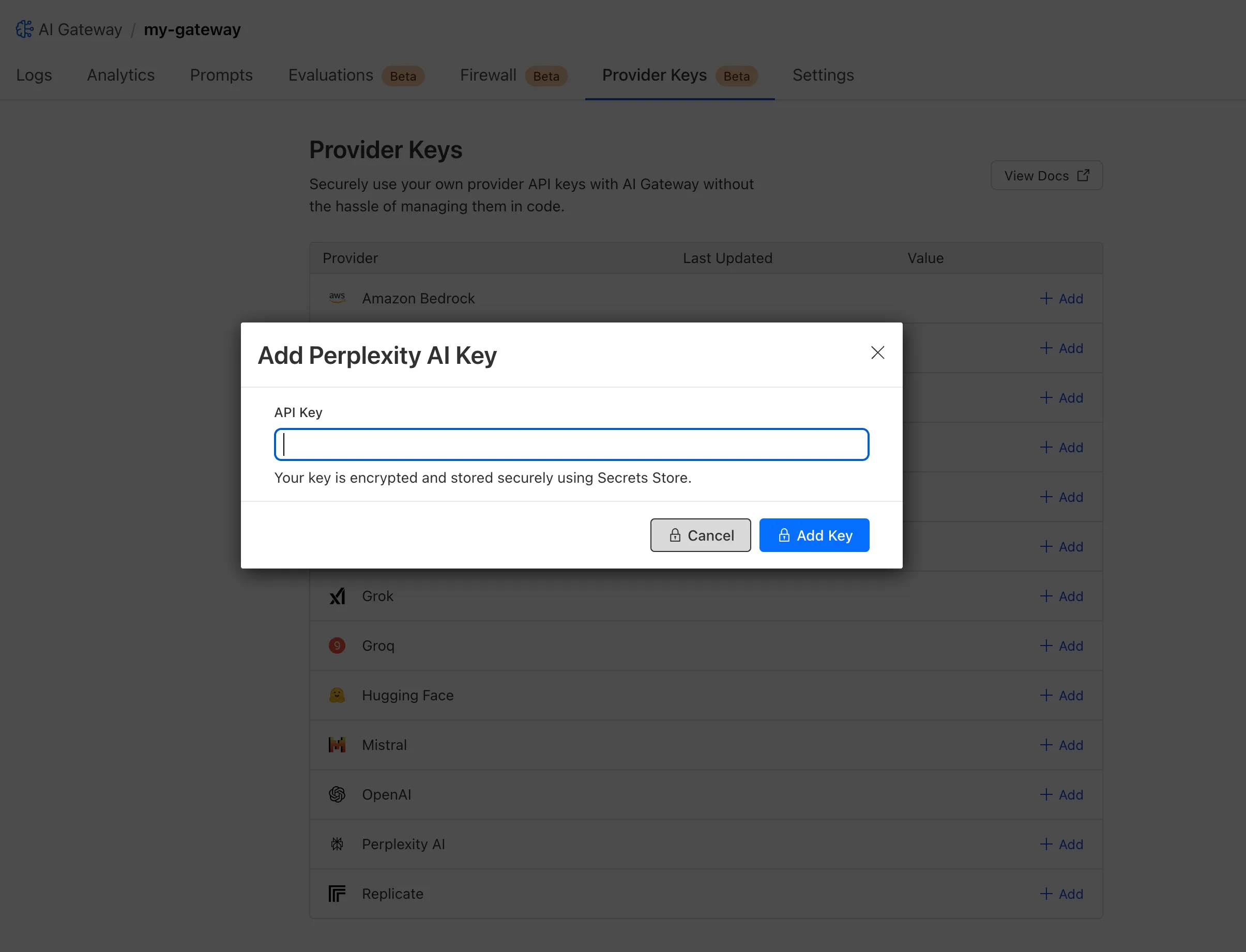

TypeScript // Streaming with Workers AI modelsconst result = await streamText({model: createWorkersAI({ binding: env.AI })("@cf/meta/llama-3.2-3b-instruct"),messages: await convertToModelMessages(messages),onChunk: (chunk) => {// Enhanced streaming with warning handlingconsole.log(chunk);},});The ai-gateway-provider v3.0.0 now supports AI SDK v6, enabling you to use Cloudflare AI Gateway with multiple AI providers including Anthropic, Azure, AWS Bedrock, Google Vertex, and Perplexity.

Use Cloudflare AI Gateway to add analytics, caching, and rate limiting to your AI applications:

```ts
import { createAIGateway } from "ai-gateway-provider";

// Create AI Gateway provider (v3.0.0 - enhanced v6 internals)
const model = createAIGateway({
  gatewayUrl: "https://gateway.ai.cloudflare.com/v1/your-account-id/gateway",
  headers: {
    "Authorization": `Bearer ${env.AI_GATEWAY_TOKEN}`
  }
})({
  provider: "openai",
  model: "gpt-4o"
});
```

The following APIs are deprecated in favor of the unified tool pattern:

| Deprecated | Replacement |
| --- | --- |
| `AITool` type | Use AI SDK's `tool()` function on server |
| `extractClientToolSchemas()` | Define tools on server, no client schemas needed |
| `createToolsFromClientSchemas()` | Define tools on server with `tool()` |
| `toolsRequiringConfirmation` option | Use `needsApproval` on server tools |
| `experimental_automaticToolResolution` | Use `onToolCall` callback |
| `tools` option in `useAgentChat` | Use `onToolCall` for client-side execution |
| `addToolResult()` | Use `addToolOutput()` |

- Unified Tool Pattern: All tools must be defined on the server using `tool()`

- `convertToModelMessages()` is async: Add `await` to all calls

- `CoreMessage` removed: Use `ModelMessage` instead

- `generateObject` mode removed: Remove `mode` option

- `isToolUIPart` behavior changed: Now checks both static and dynamic tool parts

Update your dependencies to use the latest versions:

```sh
npm install agents@^0.3.0 workers-ai-provider@^3.0.0 ai-gateway-provider@^3.0.0 ai@^6.0.0 @ai-sdk/react@^3.0.0 @ai-sdk/openai@^3.0.0
```

- Migration Guide ↗ - Comprehensive migration documentation from v5 to v6

- AI SDK v6 Documentation ↗ - Official AI SDK migration guide

- AI SDK v6 Announcement ↗ - Learn about new features in v6

- AI SDK Documentation ↗ - Complete AI SDK reference

- GitHub Issues ↗ - Report bugs or request features

We'd love your feedback! We're particularly interested in feedback on:

- Migration experience - How smooth was the upgrade from v5 to v6?

- Unified tool pattern - How does the new server-defined tool pattern work for you?

- Dynamic tool approval - Does the `needsApproval` feature meet your needs?

- AI Gateway integration - How well does the new provider work with your setup?

-

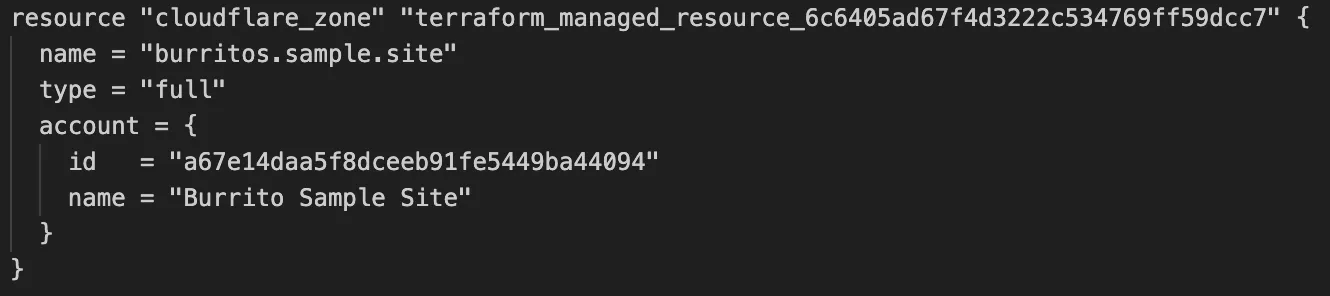

Earlier this year, we announced the launch of the new Terraform v5 Provider. We are aware of the high number of issues reported by the Cloudflare community related to the v5 release. We have committed to releasing improvements on a 2-3 week cadence ↗ to ensure its stability and reliability, including the v5.15 release. We have also pivoted from an issue-to-issue approach to a resource-per-resource approach ↗ - we will be focusing on specific resources to not only stabilize the resource but also ensure it is migration-friendly for those migrating from v4 to v5.

Thank you for continuing to raise issues. They make our provider stronger and help us build products that reflect your needs.

This release includes bug fixes, the stabilization of even more popular resources, and more.

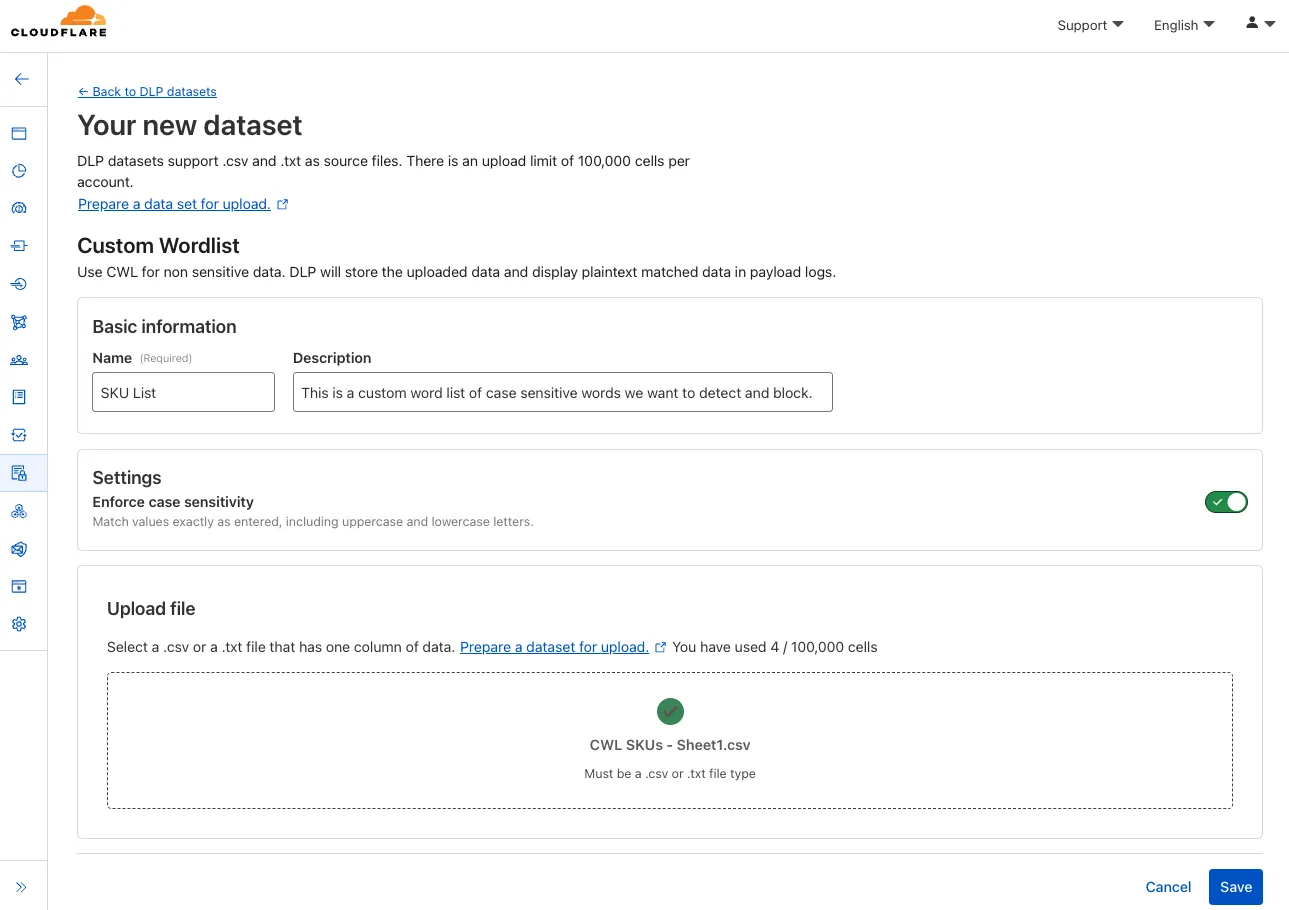

- ai_search: Add AI Search endpoints (6f02adb ↗)

- certificate_pack: Ensure proper Terraform resource ID handling for path parameters in API calls (081f32a ↗)

- worker_version: Support

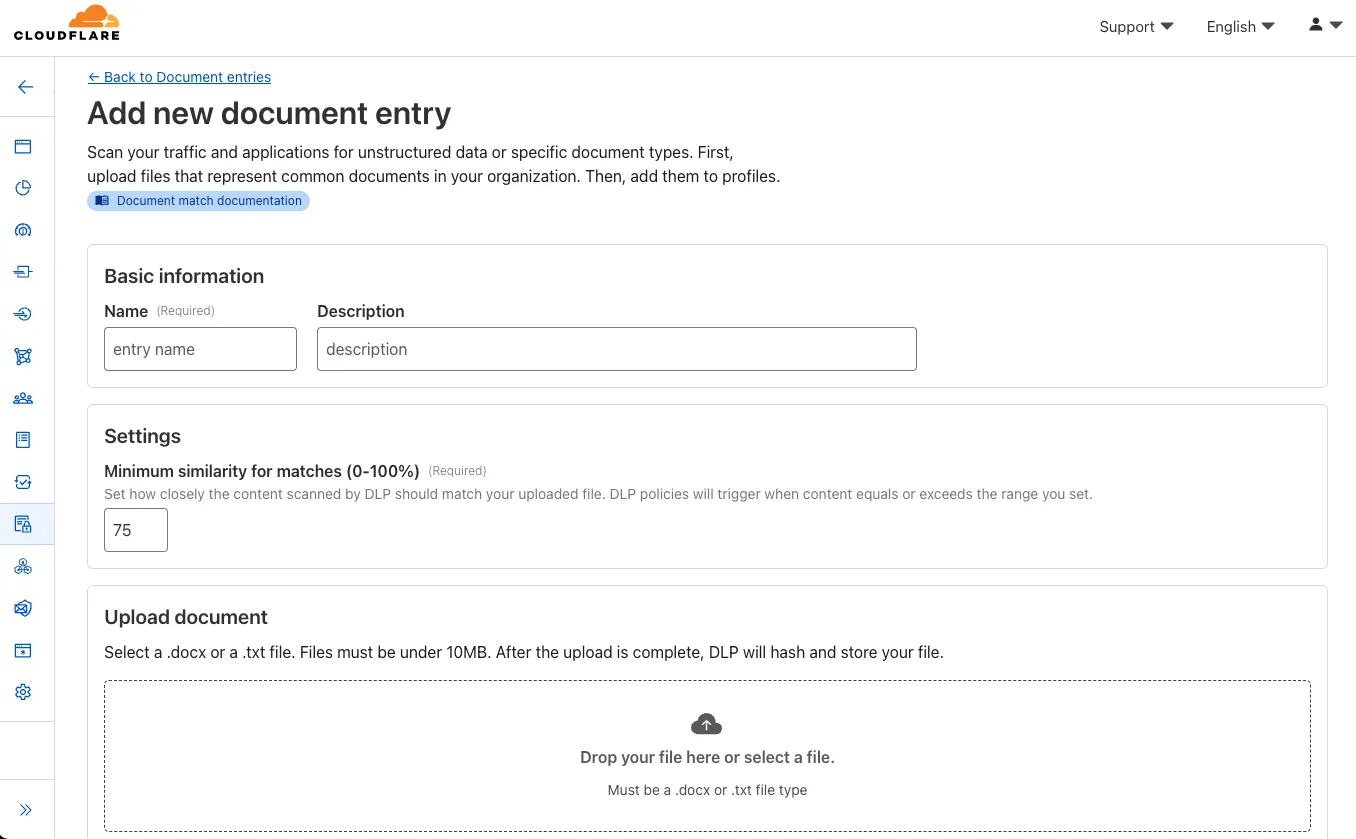

startup_time_ms(286ab55 ↗) - zero_trust_dlp_custom_entry: Support

upload_status(7dc0fe3 ↗) - zero_trust_dlp_entry: Support

upload_status(7dc0fe3 ↗) - zero_trust_dlp_integration_entry: Support

upload_status(7dc0fe3 ↗) - zero_trust_dlp_predefined_entry: Support

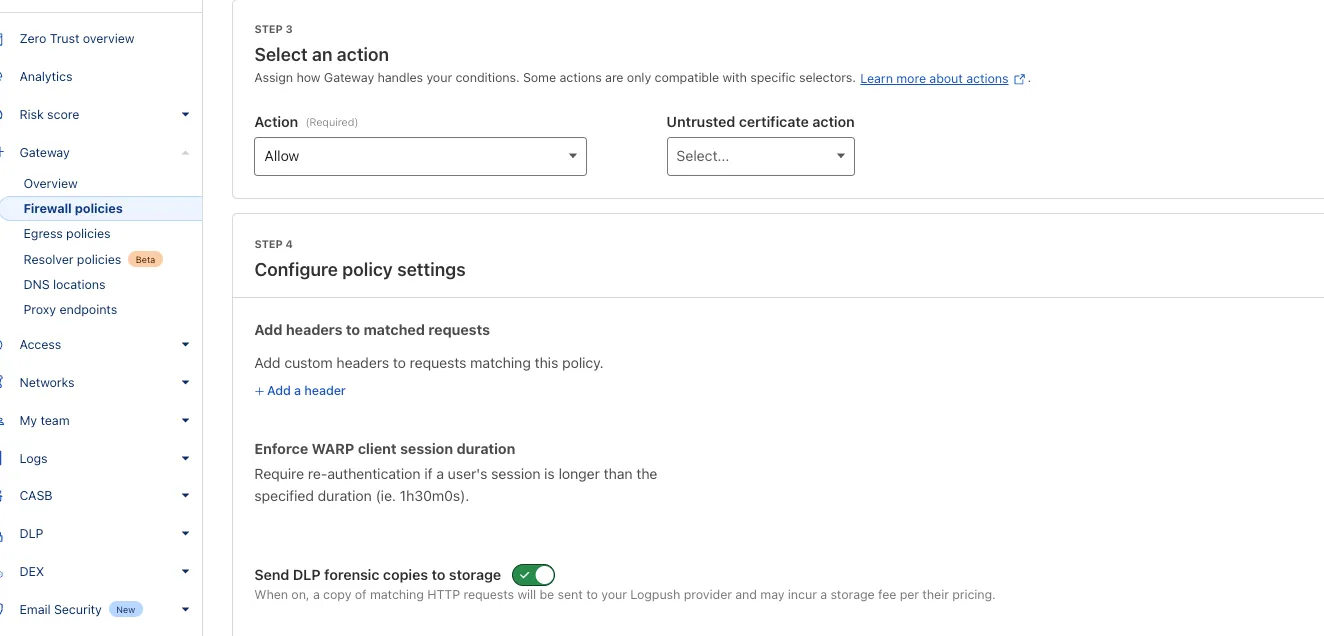

upload_status(7dc0fe3 ↗) - zero_trust_gateway_policy: Support

forensic_copy(5741fd0 ↗) - zero_trust_list: Support additional types (category, location, device) (5741fd0 ↗)

- access_rules: Add validation to prevent state drift. Ideally, we'd use Semantic Equality but since that isn't an option, this will remove a foot-gun. (4457791 ↗)

- cloudflare_pages_project: Addressing drift issues (6edffcf ↗) (3db318e ↗)

- cloudflare_worker: Can be cleanly imported (4859b52 ↗)

- cloudflare_worker: Ensure clean imports (5b525bc ↗)

- list_items: Add validation for IP List items to avoid inconsistent state (b6733dc ↗)

- zero_trust_access_application: Remove all conditions from sweeper (3197f1a ↗)

- spectrum_application: Map missing fields during spectrum resource import (#6495 ↗) (ddb4e72 ↗)

We suggest waiting to migrate to v5 while we work on stabilization. This helps with avoiding any blocking issues while the Terraform resources are actively being stabilized ↗. We will be releasing a new migration tool in March 2026 to help support v4 to v5 transitions for our most popular resources.

-

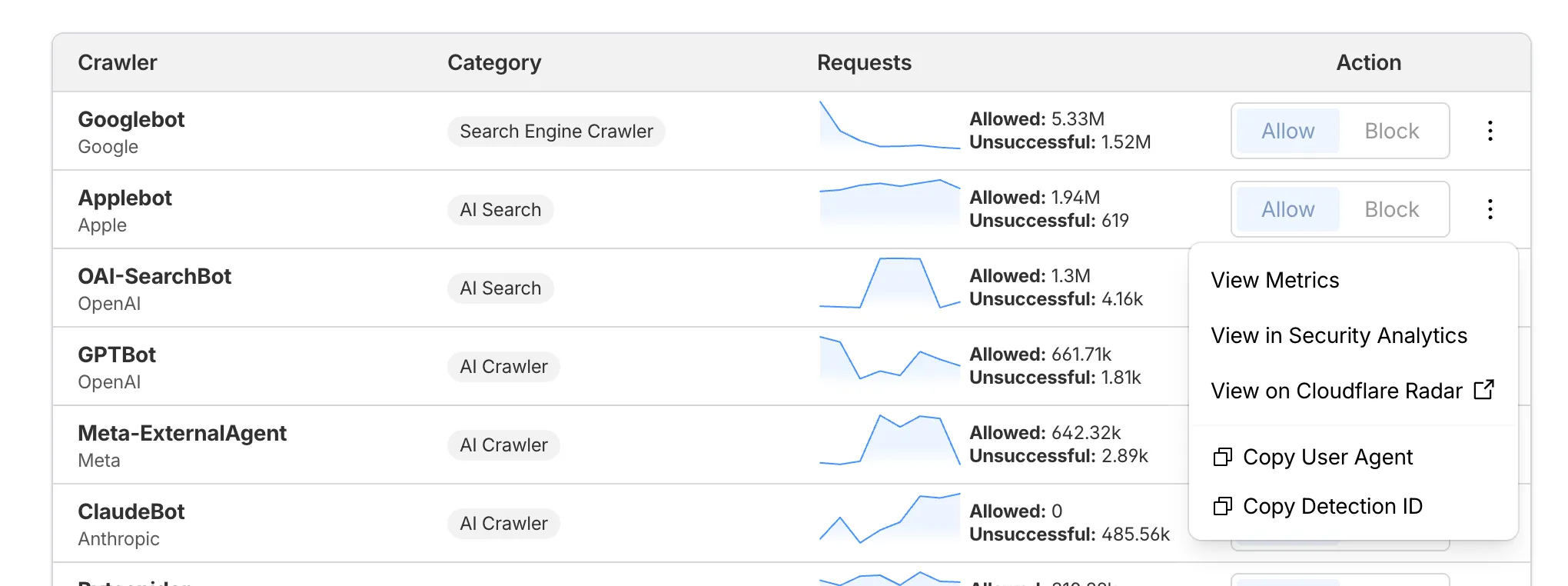

The Overview tab is now the default view in AI Crawl Control. The previous default view with controls for individual AI crawlers is available in the Crawlers tab.

- Executive summary — Monitor total requests, volume change, most common status code, most popular path, and high-volume activity

- Operator grouping — Track crawlers by their operating companies (OpenAI, Microsoft, Google, ByteDance, Anthropic, Meta)

- Customizable filters — Filter your snapshot by date range, crawler, operator, hostname, or path

- Log in to the Cloudflare dashboard and select your account and domain.

- Go to AI Crawl Control, where the Overview tab opens by default with your activity snapshot.

- Use filters to customize your view by date range, crawler, operator, hostname, or path.

- Navigate to the Crawlers tab to manage controls for individual crawlers.

Learn more about analyzing AI traffic and managing AI crawlers.

-

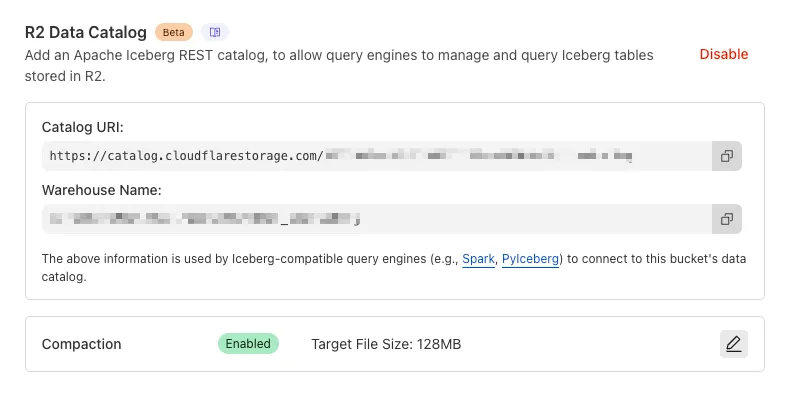

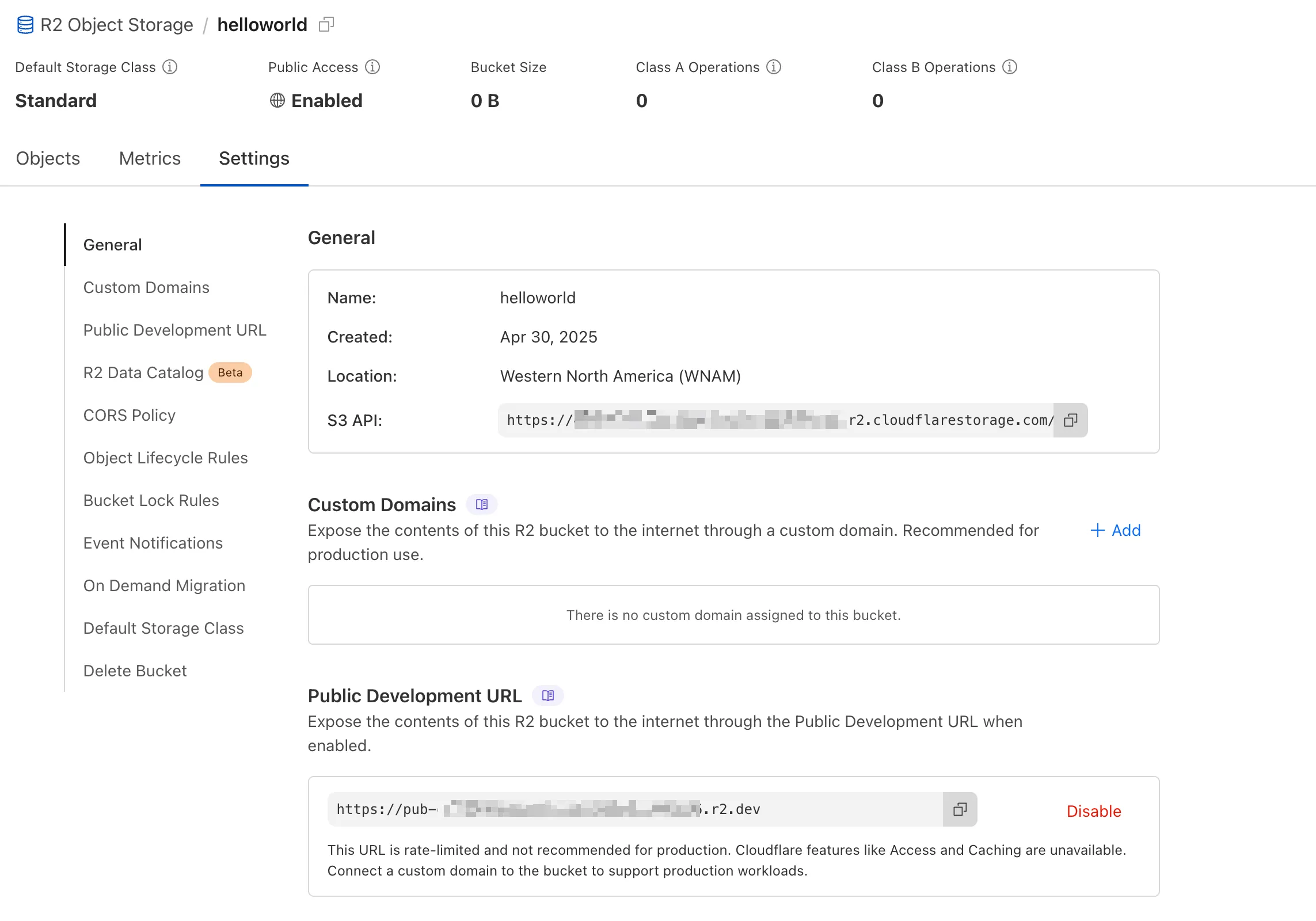

R2 Data Catalog now supports automatic snapshot expiration for Apache Iceberg tables.

In Apache Iceberg, a snapshot is metadata that represents the state of a table at a given point in time. Every mutation creates a new snapshot. Snapshots enable powerful features like time travel queries and rollback capabilities, but they accumulate over time.

Without regular cleanup, these accumulated snapshots can lead to:

- Metadata overhead

- Slower table operations

- Increased storage costs.

Snapshot expiration in R2 Data Catalog automatically removes old table snapshots based on your configured retention policy, improving performance and reducing storage costs.

```sh
# Enable catalog-level snapshot expiration
# Expire snapshots older than 7 days, always retain at least 10 recent snapshots
npx wrangler r2 bucket catalog snapshot-expiration enable my-bucket \
  --older-than-days 7 \
  --retain-last 10
```

Snapshot expiration uses two parameters to determine which snapshots to remove:

- `--older-than-days`: age threshold in days

- `--retain-last`: minimum snapshot count to retain

Both conditions must be met before a snapshot is expired, ensuring you always retain recent snapshots even if they exceed the age threshold.
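The interaction between the two parameters can be sketched in a few lines. This is an illustration of the rule as described here, not the R2 Data Catalog implementation; the `Snapshot` shape and the `snapshotsToExpire` function are hypothetical.

```typescript
interface Snapshot {
  id: number;
  timestampMs: number; // commit time in milliseconds since the Unix epoch
}

const DAY_MS = 24 * 60 * 60 * 1000;

// Returns the snapshots that satisfy BOTH conditions: older than the age
// threshold and not among the `retainLast` most recent snapshots.
function snapshotsToExpire(
  snapshots: Snapshot[],
  olderThanDays: number,
  retainLast: number,
  nowMs: number,
): Snapshot[] {
  const newestFirst = [...snapshots].sort((a, b) => b.timestampMs - a.timestampMs);
  const cutoffMs = nowMs - olderThanDays * DAY_MS;
  return newestFirst.filter(
    (snapshot, index) => index >= retainLast && snapshot.timestampMs < cutoffMs,
  );
}

// Five snapshots committed on days 1, 5, 20, 28, and 29; "now" is day 30.
const nowMs = 30 * DAY_MS;
const history: Snapshot[] = [
  { id: 1, timestampMs: 1 * DAY_MS },
  { id: 2, timestampMs: 5 * DAY_MS },
  { id: 3, timestampMs: 20 * DAY_MS },
  { id: 4, timestampMs: 28 * DAY_MS },
  { id: 5, timestampMs: 29 * DAY_MS },
];

// With a 7-day threshold and 3 retained snapshots, only ids 1 and 2 qualify.
// Snapshot 3 is older than 7 days but survives as one of the 3 most recent.
console.log(snapshotsToExpire(history, 7, 3, nowMs).map((s) => s.id));
```

With `retainLast` set to 10, as in the command above, none of these five snapshots would expire regardless of age.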

This feature complements automatic compaction, which optimizes query performance by combining small data files into larger ones. Together, these automatic maintenance operations keep your Iceberg tables performant and cost-efficient without manual intervention.

To learn more about snapshot expiration and how to configure it, visit our table maintenance documentation or see how to manage catalogs.

-

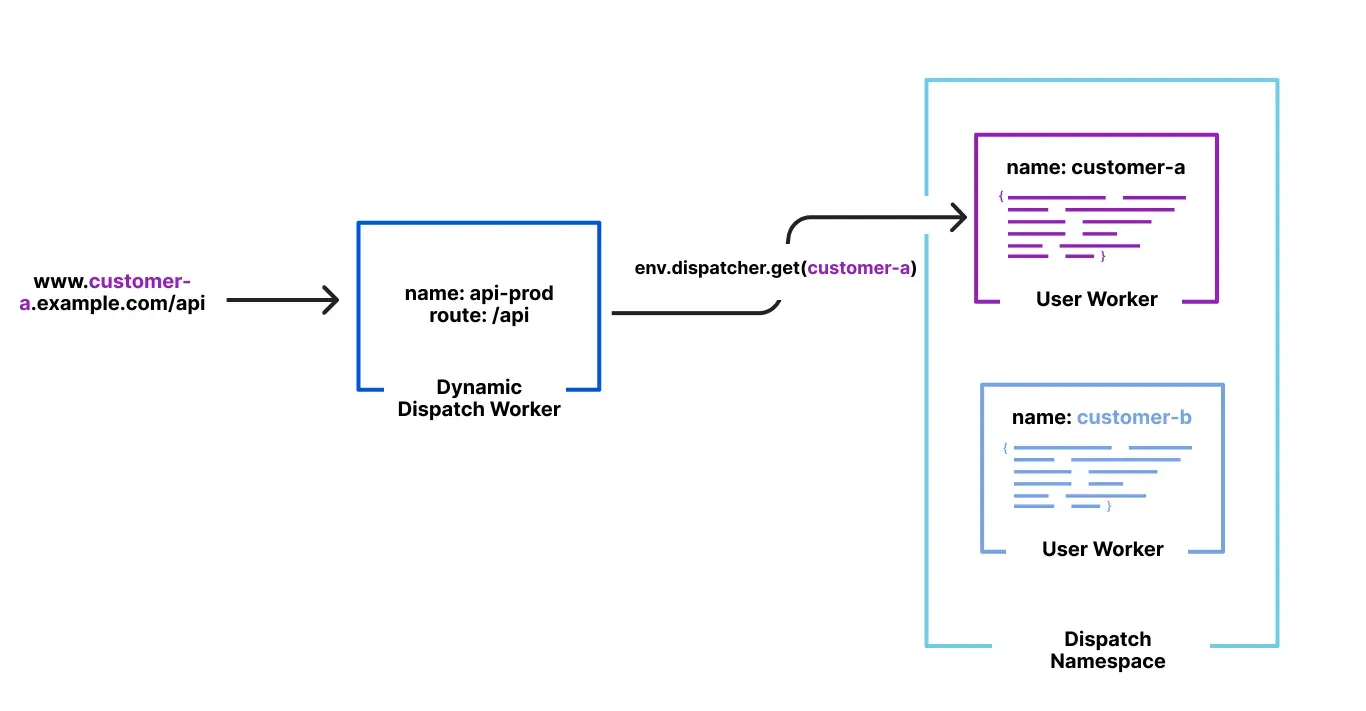

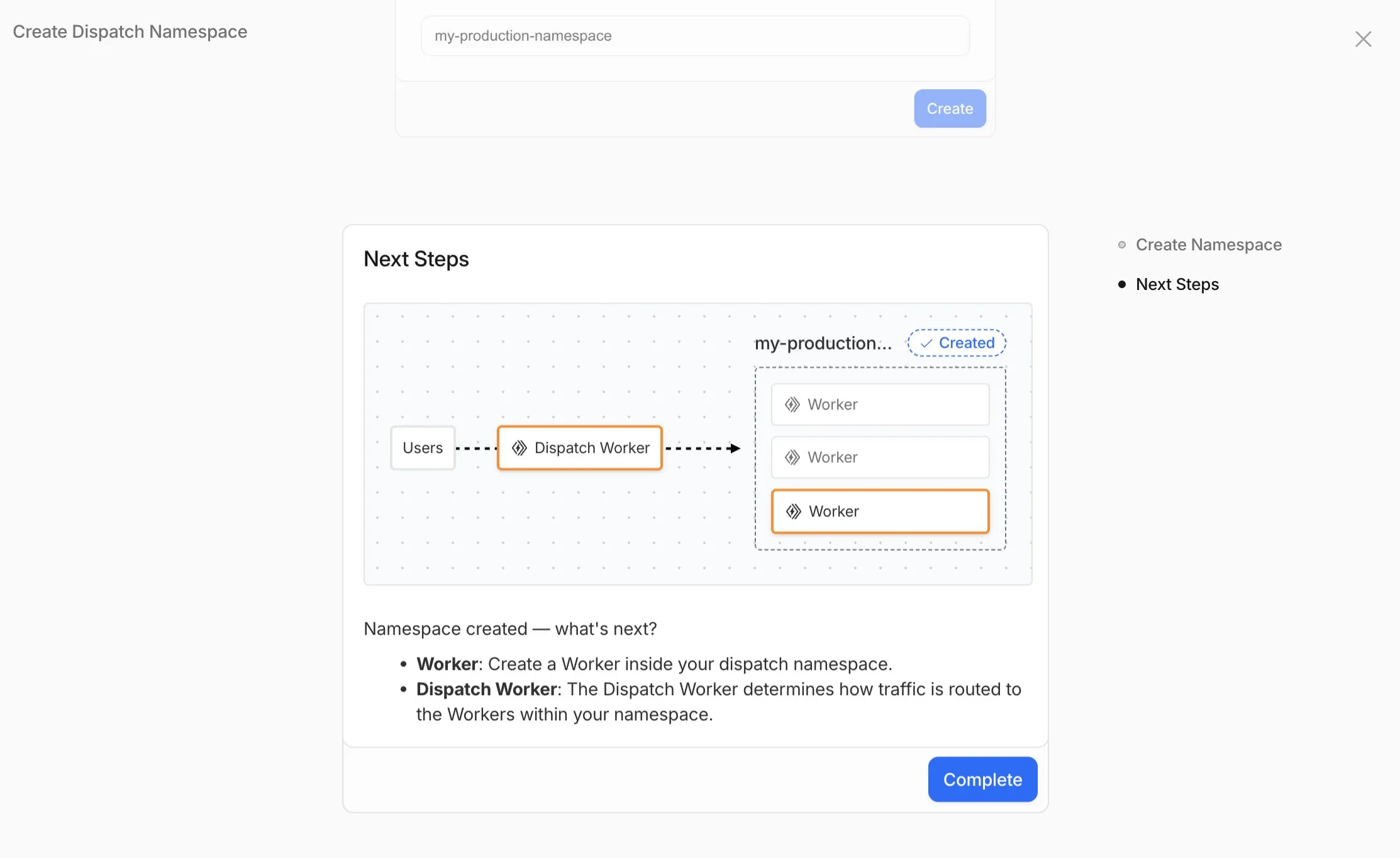

Workers for Platforms lets you build multi-tenant platforms on Cloudflare Workers, allowing your end users to deploy and run their own code on your platform. It's designed for anyone building an AI vibe coding platform, e-commerce platform, website builder, or any product that needs to securely execute user-generated code at scale.

Previously, setting up Workers for Platforms required using the API. Now, the Workers for Platforms UI supports namespace creation, dispatch worker templates, and tag management, making it easier for Workers for Platforms customers to build and manage multi-tenant platforms directly from the Cloudflare dashboard.

- Namespace Management: You can now create and configure dispatch namespaces directly within the dashboard to start a new platform setup.

- Dispatch Worker Templates: New Dispatch Worker templates allow you to quickly define how traffic is routed to individual Workers within your namespace. Refer to the Dynamic Dispatch documentation for more examples.

- Tag Management: You can now set and update tags on User Workers, making it easier to group and manage your Workers.

- Binding Visibility: Bindings attached to User Workers are now visible directly within the User Worker view.

- Deploy Vibe Coding Platform in one click: Deploy a reference implementation of an AI vibe coding platform directly from the dashboard. Powered by Cloudflare's VibeSDK ↗, this starter kit integrates with Workers for Platforms to handle the deployment of AI-generated projects at scale.

To get started, go to Workers for Platforms under Compute & AI in the Cloudflare dashboard ↗.

-

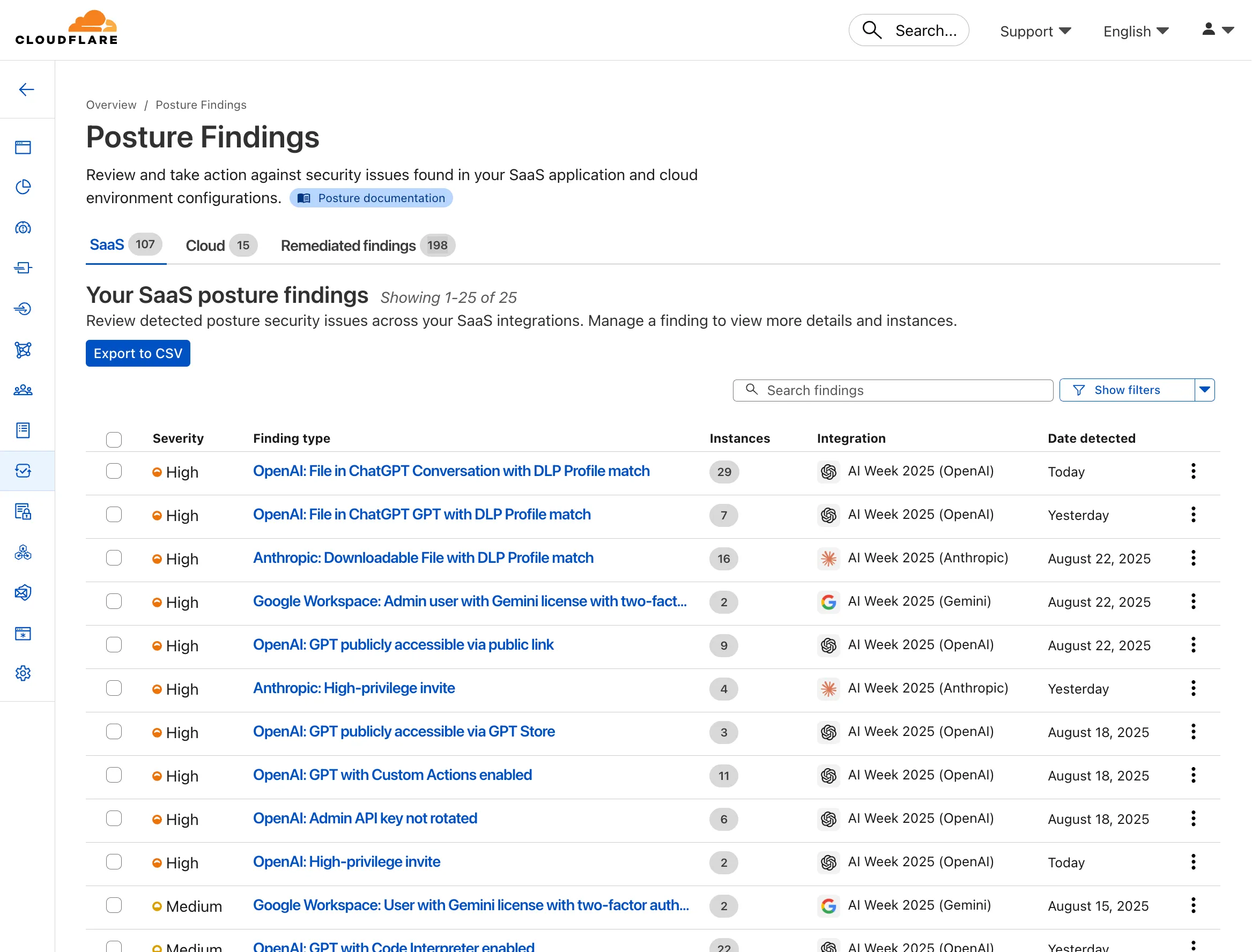

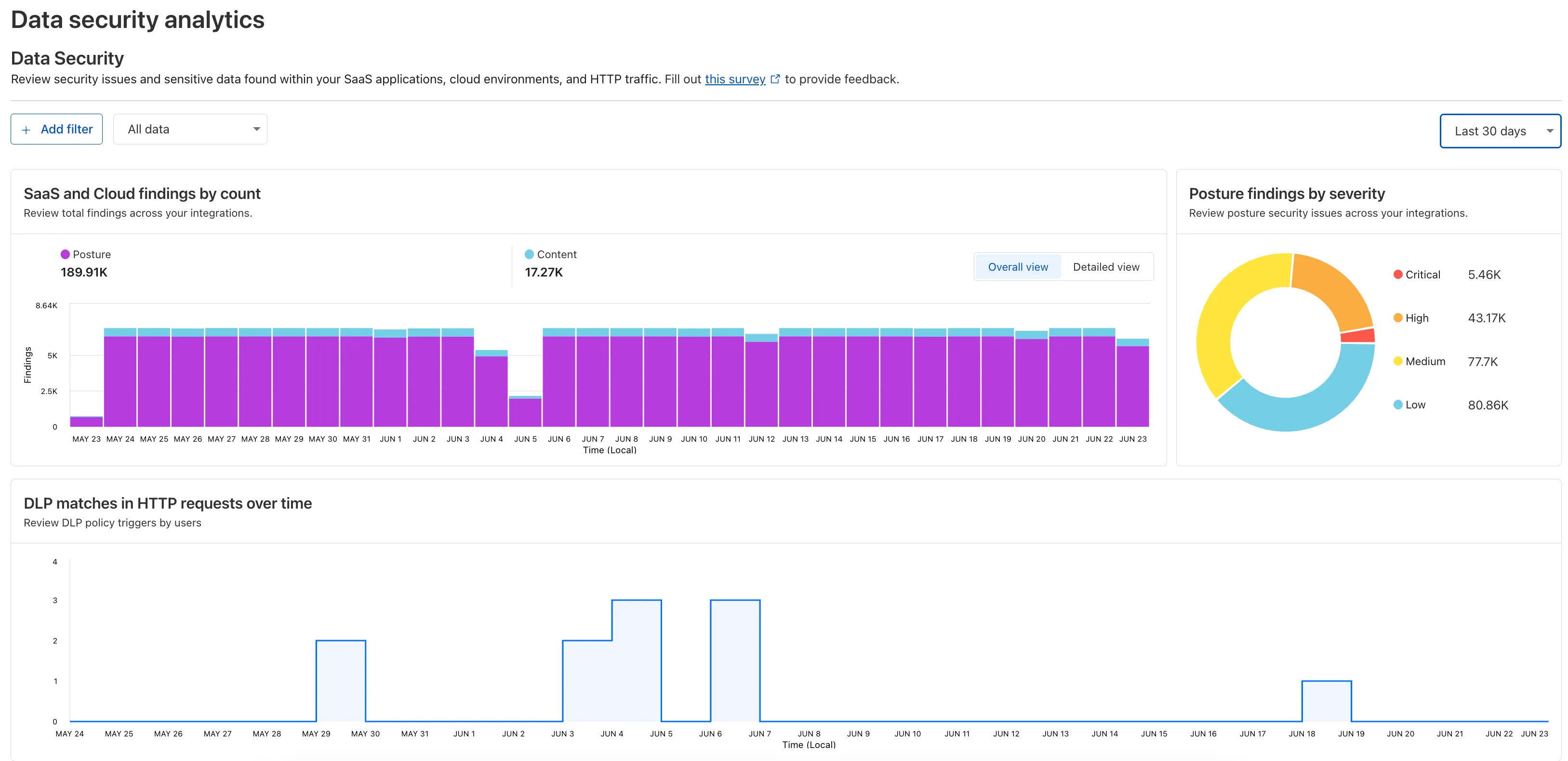

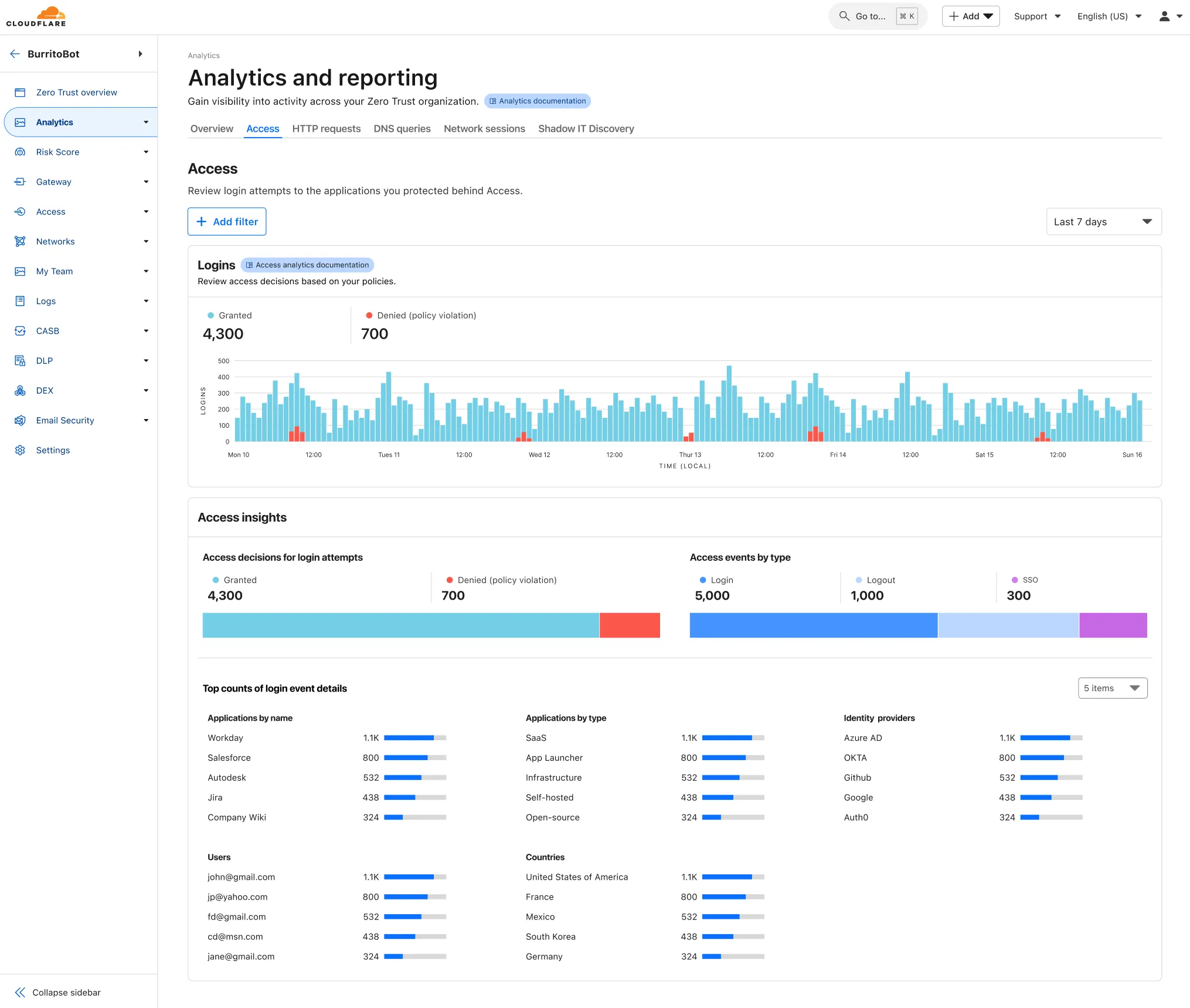

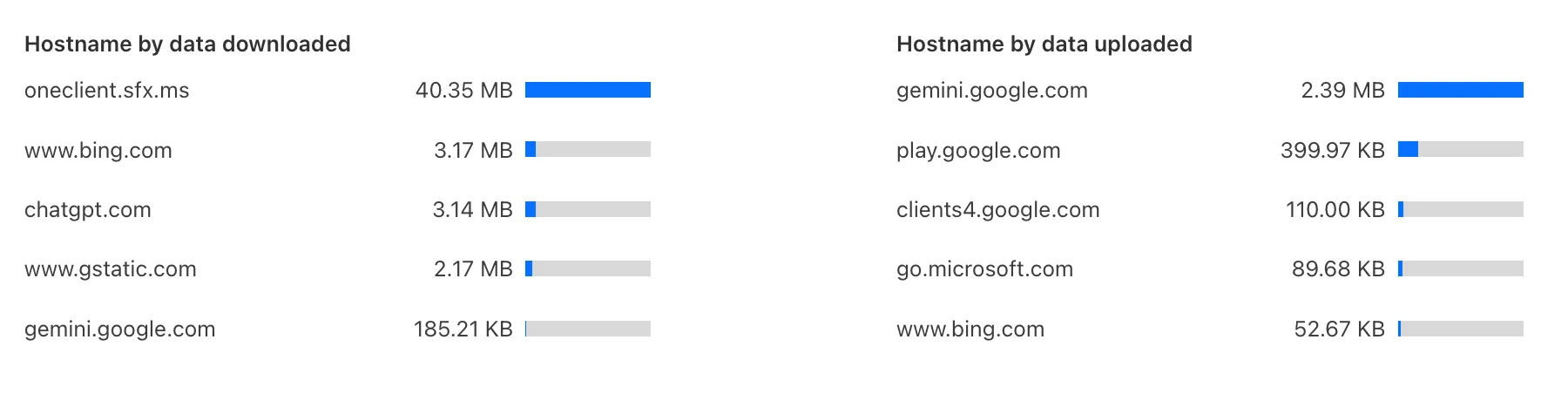

Zero Trust has again upgraded its Shadow IT analytics, giving you greater visibility into your organization's use of SaaS tools. With this dashboard, you can review who is using an application and the volume of data transferred to the application.

With this update, you can review data transfer metrics at the domain level, rather than just the application level, providing more granular insight into your data transfer patterns.

These metrics can be filtered by all available filters on the dashboard, including user, application, or content category.

Both the analytics and policies are accessible in the Cloudflare Zero Trust dashboard ↗, empowering organizations with better visibility and control.

-

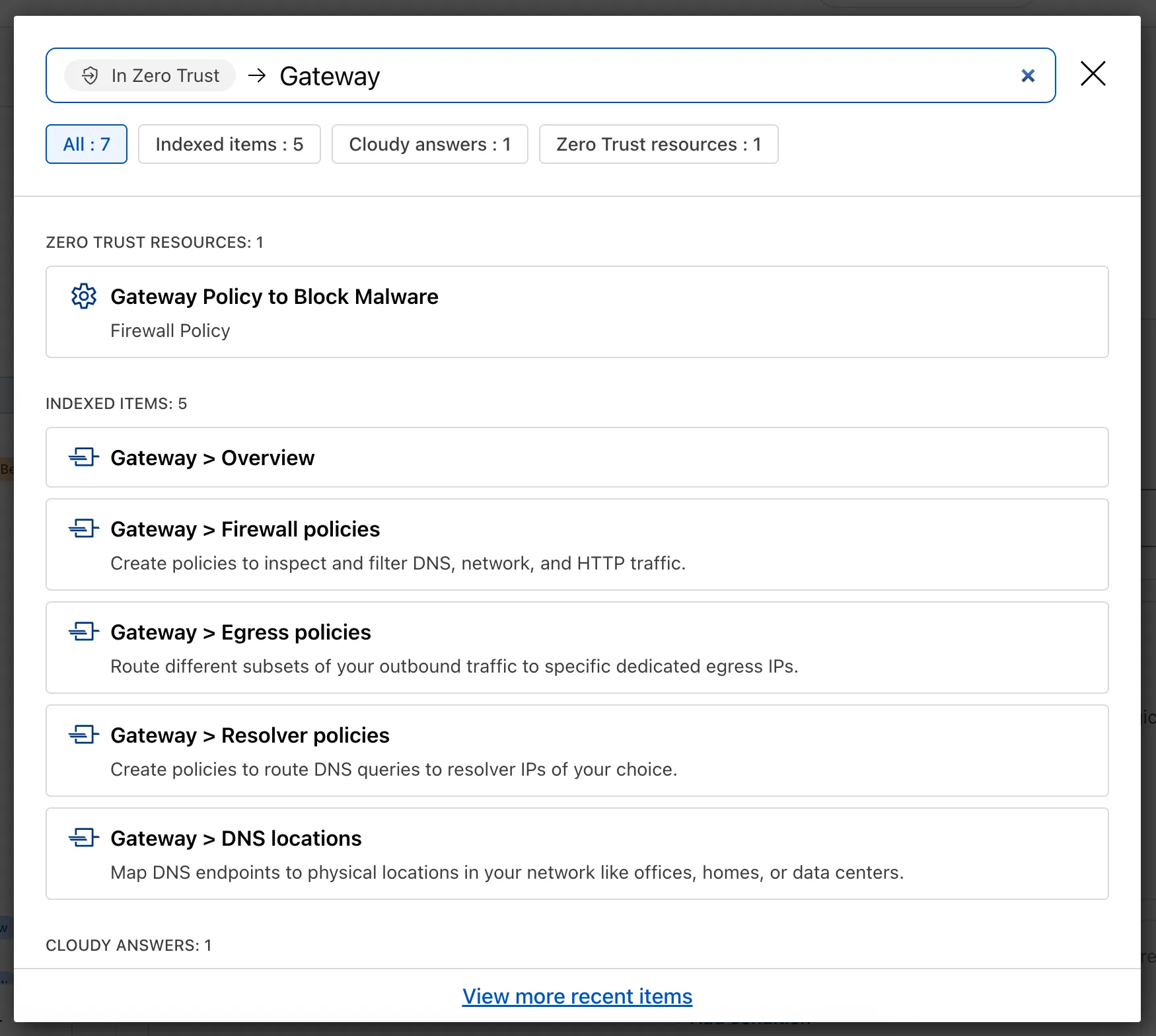

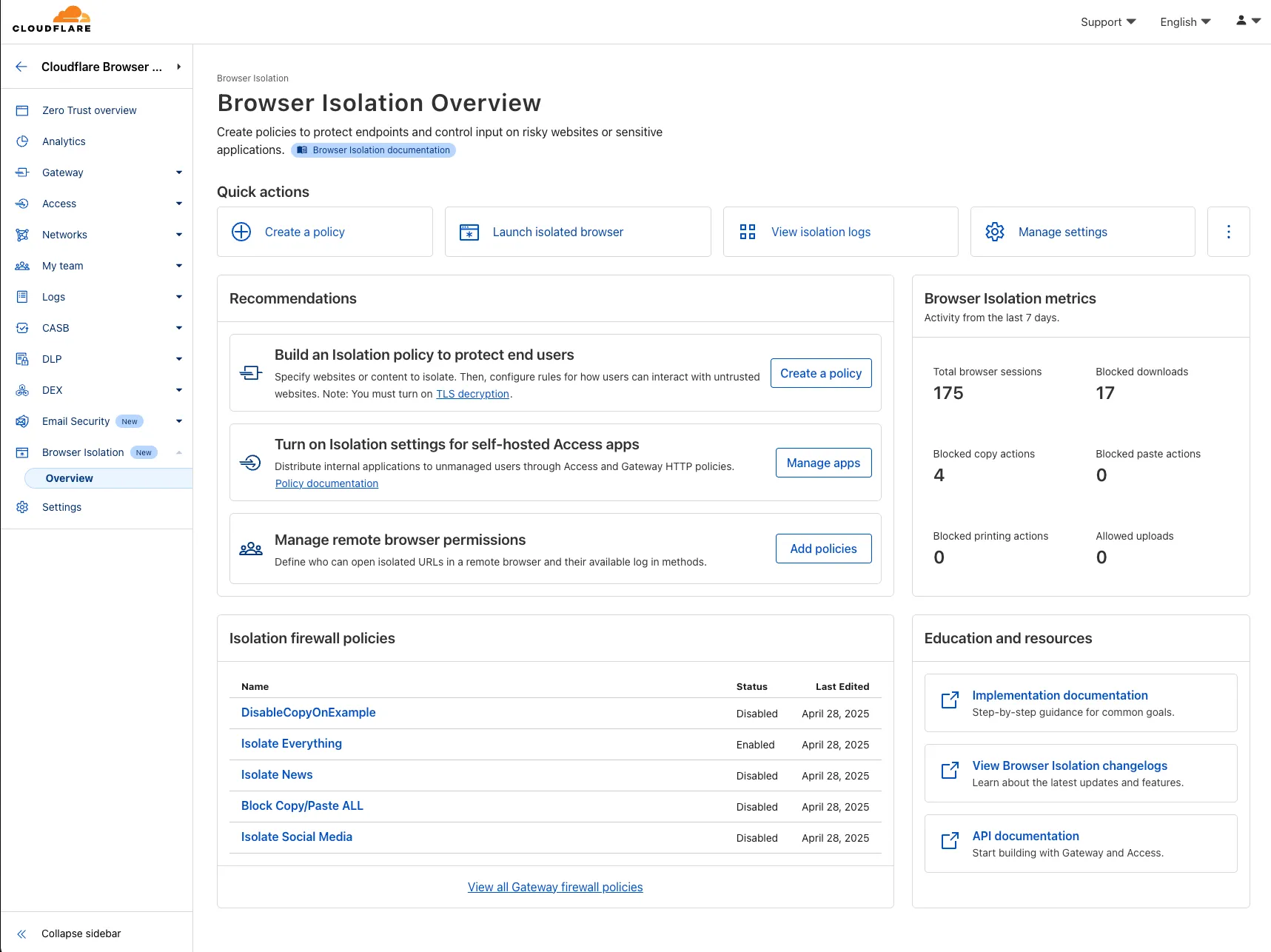

You can now duplicate specific Cloudflare One resources with a single click from the dashboard.

Initially supported resources:

- Access Applications

- Access Policies

- Gateway Policies

To try this out, simply click on the overflow menu (⋮) from the resource table and click Duplicate. We will continue to add the Duplicate action for resources throughout 2026.

-

A new Rules of Durable Objects guide is now available, providing opinionated best practices for building effective Durable Objects applications. This guide covers design patterns, storage strategies, concurrency, and common anti-patterns to avoid.

Key guidance includes:

- Design around your "atom" of coordination: Create one Durable Object per logical unit (chat room, game session, user) instead of a global singleton that becomes a bottleneck.

- Use SQLite storage with RPC methods: SQLite-backed Durable Objects with typed RPC methods provide the best developer experience and performance.

- Understand input and output gates: Learn how Cloudflare's runtime prevents data races by default, how write coalescing works, and when to use `blockConcurrencyWhile()`.

- Leverage Hibernatable WebSockets: Reduce costs for real-time applications by allowing Durable Objects to sleep while maintaining WebSocket connections.

The testing documentation has also been updated with modern patterns using `@cloudflare/vitest-pool-workers`, including examples for testing SQLite storage, alarms, and direct instance access:

```ts
// test/counter.test.ts (test/counter.test.js is identical)
import { env, runDurableObjectAlarm } from "cloudflare:test";
import { it, expect } from "vitest";

it("can test Durable Objects with isolated storage", async () => {
  const stub = env.COUNTER.getByName("test");

  // Call RPC methods directly on the stub
  await stub.increment();
  expect(await stub.getCount()).toBe(1);

  // Trigger alarms immediately without waiting
  await runDurableObjectAlarm(stub);
});
```

-

Storage billing for SQLite-backed Durable Objects will be enabled in January 2026, with a target date of January 7, 2026 (no earlier).

To view your SQLite storage usage, go to the Durable Objects page.

If you do not want to incur costs, take action, such as optimizing queries or deleting unnecessary stored data, to reduce your SQLite storage usage ahead of the January 7 target date. Only usage on and after the billing target date will incur charges.

Developers on the Workers Paid plan whose Durable Objects SQLite storage usage exceeds the included limits will incur charges according to the SQLite storage pricing announced in September 2024 with the public beta ↗. Developers on the Workers Free plan will not be charged.

Compute billing for SQLite-backed Durable Objects has been enabled since the initial public beta. SQLite-backed Durable Objects currently incur charges for requests and duration, and no changes are being made to compute billing.

For more information about SQLite storage pricing and limits, refer to the Durable Objects pricing documentation.

-

R2 SQL now supports aggregation functions, GROUP BY, and HAVING, along with schema discovery commands to make it easy to explore your data catalog.

You can now perform aggregations on Apache Iceberg tables in R2 Data Catalog using standard SQL functions including COUNT(*), SUM(), AVG(), MIN(), and MAX(). Combine these with GROUP BY to analyze data across dimensions, and use HAVING to filter aggregated results.

    -- Calculate average transaction amounts by department
    SELECT department, COUNT(*), AVG(total_amount)
    FROM my_namespace.sales_data
    WHERE region = 'North'
    GROUP BY department
    HAVING COUNT(*) > 50
    ORDER BY AVG(total_amount) DESC

    -- Find high-value departments
    SELECT department, SUM(total_amount)
    FROM my_namespace.sales_data
    GROUP BY department
    HAVING SUM(total_amount) > 50000

New metadata commands make it easy to explore your data catalog and understand table structures:

- SHOW DATABASES or SHOW NAMESPACES - List all available namespaces

- SHOW TABLES IN namespace_name - List tables within a namespace

- DESCRIBE namespace_name.table_name - View table schema and column types

Terminal window

    ❯ npx wrangler r2 sql query "{ACCOUNT_ID}_{BUCKET_NAME}" "DESCRIBE default.sales_data;"

    ⛅️ wrangler 4.54.0
    ─────────────────────────────────────────────
    | column_name | type | required | initial_default | write_default | doc |
    |---|---|---|---|---|---|
    | sale_id | BIGINT | false | | | Unique identifier for each sales transaction |
    | sale_timestamp | TIMESTAMPTZ | false | | | Exact date and time when the sale occurred (used for partitioning) |
    | department | TEXT | false | | | Product department (8 categories: Electronics, Beauty, Home, Toys, Sports, Food, Clothing, Books) |
    | category | TEXT | false | | | Product category grouping (4 categories: Premium, Standard, Budget, Clearance) |
    | region | TEXT | false | | | Geographic sales region (5 regions: North, South, East, West, Central) |
    | product_id | INT | false | | | Unique identifier for the product sold |
    | quantity | INT | false | | | Number of units sold in this transaction (range: 1-50) |
    | unit_price | DECIMAL(10, 2) | false | | | Price per unit in dollars (range: $5.00-$500.00) |
    | total_amount | DECIMAL(10, 2) | false | | | Total sale amount before tax (quantity × unit_price with discounts applied) |
    | discount_percent | INT | false | | | Discount percentage applied to this sale (0-50%) |
    | tax_amount | DECIMAL(10, 2) | false | | | Tax amount collected on this sale |
    | profit_margin | DECIMAL(10, 2) | false | | | Profit margin on this sale as a decimal percentage |
    | customer_id | INT | false | | | Unique identifier for the customer who made the purchase |
    | is_online_sale | BOOLEAN | false | | | Boolean flag indicating if sale was made online (true) or in-store (false) |
    | sale_date | DATE | false | | | Calendar date of the sale (extracted from sale_timestamp) |

    Read 0 B across 0 files from R2
    On average, 0 B / s

To learn more about the new aggregation capabilities and schema discovery commands, check out the SQL reference. If you're new to R2 SQL, visit our getting started guide to begin querying your data.

-

Cloudflare Logpush now supports SentinelOne as a native destination.

Logs from Cloudflare can be sent to SentinelOne AI SIEM ↗ via Logpush. The destination can be configured through the Logpush UI in the Cloudflare dashboard or by using the Logpush API.

For more information, refer to the Destination Configuration documentation.

-

Pay Per Crawl is introducing enhancements for both AI crawler operators and site owners, focusing on programmatic discovery, flexible pricing models, and granular configuration control.

A new authenticated API endpoint allows verified crawlers to programmatically discover domains participating in Pay Per Crawl. Crawlers can use this to build optimized crawl queues, cache domain lists, and identify new participating sites. This eliminates the need to discover payable content through trial requests.

The API endpoint is GET https://crawlers-api.ai-audit.cfdata.org/charged_zones and requires Web Bot Auth authentication. Refer to Discover payable content for authentication steps, request parameters, and response schema.

Payment headers (crawler-exact-price or crawler-max-price) must now be included in the Web Bot Auth signature-input header components. This security enhancement prevents payment header tampering, ensures authenticated payment intent, validates crawler identity with payment commitment, and protects against replay attacks with modified pricing. Crawlers must add their payment header to the list of signed components when constructing the signature-input header.

Pay Per Crawl error responses now include a new crawler-error header with 11 specific error codes for programmatic handling. Error response bodies remain unchanged for compatibility. These codes enable robust error handling, automated retry logic, and accurate spending tracking.

Site owners can now offer free access to specific pages like homepages, navigation, or discovery pages while charging for other content. Create a Configuration Rule in Rules > Configuration Rules, set your URI pattern using wildcard, exact, or prefix matching on the URI Full field, and enable the Disable Pay Per Crawl setting. When disabled for a URI pattern, crawler requests pass through without blocking or charging.
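For crawler operators, the signed-components requirement can be sketched as follows. This is a minimal illustration, not the official client: the helper name `buildSignatureInput`, the signature label, the key ID, and the other covered components (`@authority`, `signature-agent`) and `tag` value are assumptions modeled on typical Web Bot Auth examples. It only assembles the `signature-input` value; computing the signature itself is out of scope. Check the Pay Per Crawl documentation for the exact component list your crawler needs.

```typescript
// Hypothetical helper: assembles a signature-input header value whose
// covered components include the Pay Per Crawl payment header.
type PaymentHeader = "crawler-exact-price" | "crawler-max-price";

interface SignatureInputOptions {
  label: string; // signature label, e.g. "sig1" (assumed)
  keyId: string; // key identifier (placeholder value in the usage below)
  created: number; // Unix time in seconds
  expires: number; // Unix time in seconds, must be after `created`
  paymentHeader: PaymentHeader;
}

function buildSignatureInput(opts: SignatureInputOptions): string {
  if (opts.expires <= opts.created) {
    throw new Error("expires must be later than created");
  }
  // Assumed base components, plus the payment header that must now be signed.
  const components = ["@authority", "signature-agent", opts.paymentHeader];
  const covered = components.map((c) => `"${c}"`).join(" ");
  return (
    `${opts.label}=(${covered})` +
    `;created=${opts.created}` +
    `;expires=${opts.expires}` +
    `;keyid="${opts.keyId}"` +
    `;tag="web-bot-auth"`
  );
}

// Usage with placeholder values.
console.log(
  buildSignatureInput({
    label: "sig1",
    keyId: "example-key-id",
    created: 1700000000,
    expires: 1700000300,
    paymentHeader: "crawler-max-price",
  }),
);
```

Because the payment header is part of the covered components, changing its value after signing would invalidate the signature, which is the tampering protection described above.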

Some paths are always free to crawl: /robots.txt, /sitemap.xml, /security.txt, /.well-known/security.txt, and /crawlers.json.

AI crawler operators: Discover payable content | Crawl pages

Site owners: Advanced configuration
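A crawler could use the always-free path list to skip payment headers for those requests. The sketch below is illustrative only: the helper name `isAlwaysFreePath` is hypothetical, and exact, case-sensitive matching on the URL path is an assumption rather than documented behavior.

```typescript
// The always-free paths listed in this entry.
const ALWAYS_FREE_PATHS = new Set<string>([
  "/robots.txt",
  "/sitemap.xml",
  "/security.txt",
  "/.well-known/security.txt",
  "/crawlers.json",
]);

// Hypothetical helper: returns true when the URL's path is one of the
// always-free paths. Query strings and fragments are ignored because only
// the parsed pathname is compared (assumed matching rule).
function isAlwaysFreePath(url: string): boolean {
  return ALWAYS_FREE_PATHS.has(new URL(url).pathname);
}

// Usage with placeholder URLs.
console.log(isAlwaysFreePath("https://example.com/robots.txt"));
console.log(isAlwaysFreePath("https://example.com/blog/post"));
```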

-

A new Beta release for the Windows WARP client is now available on the beta releases downloads page.

This release contains minor fixes and improvements.

Changes and improvements

- The Local Domain Fallback feature has been fixed for devices running WARP client version 2025.4.929.0 and newer. Previously, these devices could experience failures with Local Domain Fallback unless a fallback server was explicitly configured. This configuration is no longer a requirement for the feature to function correctly.

- Proxy mode now supports transparent HTTP proxying in addition to CONNECT-based proxying.

- Fixed an issue where sending large messages to the WARP daemon over Inter-Process Communication (IPC) could cause WARP to crash and result in service interruptions.

Known issues

For Windows 11 24H2 users, Microsoft has confirmed a regression that may lead to performance issues like mouse lag, audio crackling, or other slowdowns. Cloudflare recommends that users experiencing these issues upgrade to Windows 11 24H2 KB5062553 or later for resolution.

Devices with KB5055523 installed may receive a warning about Win32/ClickFix.ABA being present in the installer. To resolve this false positive, update Microsoft Security Intelligence to version 1.429.19.0 or later.

DNS resolution may be broken when the following conditions are all true:

- WARP is in Secure Web Gateway without DNS filtering (tunnel-only) mode.

- A custom DNS server address is configured on the primary network adapter.

- The custom DNS server address on the primary network adapter is changed while WARP is connected.

To work around this issue, reconnect the WARP client by toggling it off and back on.

-

A new Beta release for the macOS WARP client is now available on the beta releases downloads page.

This release contains minor fixes and improvements.

Changes and improvements

- The Local Domain Fallback feature has been fixed for devices running WARP client version 2025.4.929.0 and newer. Previously, these devices could experience failures with Local Domain Fallback unless a fallback server was explicitly configured. This configuration is no longer a requirement for the feature to function correctly.

- Proxy mode now supports transparent HTTP proxying in addition to CONNECT-based proxying.

-

Earlier this year, we announced the launch of the new Terraform v5 Provider. We are aware of the high number of issues reported by the Cloudflare community related to the v5 release. We have committed to releasing improvements on a 2-3 week cadence ↗ to ensure its stability and reliability, including the v5.14 release. We have also pivoted from an issue-to-issue approach to a resource-per-resource approach ↗ - we will be focusing on specific resources to not only stabilize the resource but also ensure it is migration-friendly for those migrating from v4 to v5.

Thank you for continuing to raise issues. They make our provider stronger and help us build products that reflect your needs.

This release includes bug fixes, the stabilization of even more popular resources, and more.

Resource affected: api_shield_discovery_operation

Cloudflare continuously discovers and updates API endpoints and web assets of your web applications. To improve the maintainability of these dynamic resources, we are working on reducing the need to actively engage with discovered operations.

The corresponding public API endpoint of discovered operations ↗ is not affected and will continue to be supported.

- pages_project: Add v4 -> v5 migration tests (#6506 ↗)

- account_members: Makes member policies a set (#6488 ↗)

- pages_project: Ensures non empty refresh plans (#6515 ↗)

- R2: Improves sweeper (#6512 ↗)

- workers_kv: Ignores value import state for verify (#6521 ↗)

- workers_script: No longer treats the migrations attribute as WriteOnly (#6489 ↗)

- workers_script: Resolves resource drift when worker has unmanaged secret (#6504 ↗)

- zero_trust_device_posture_rule: Preserves input.version and other fields (#6500 ↗) and (#6503 ↗)

- zero_trust_dlp_custom_profile: Adds sweepers for dlp_custom_profile

- zone_subscription|account_subscription: Adds partners_ent as valid enum for rate_plan.id (#6505 ↗)

- zone: Ensures datasource model schema parity (#6487 ↗)

- subscription: Updates import signature to accept account_id/subscription_id to import account subscription (#6510 ↗)

We suggest waiting to migrate to v5 while we work on stabilization. This helps with avoiding any blocking issues while the Terraform resources are actively being stabilized ↗. We will be releasing a new migration tool in March 2026 to help support v4 to v5 transitions for our most popular resources.

-

You can now connect directly to remote databases and databases requiring TLS with