How AI Search works

AI Search (formerly AutoRAG) is Cloudflare’s managed search service. You connect your data, such as websites or unstructured content, and AI Search automatically creates a continuously updating index that you can query with natural language from your applications or AI agents.

AI Search consists of two core processes:

- Indexing: An asynchronous background process that monitors your data source for changes and converts your data into vectors for search.

- Querying: A synchronous process triggered by user queries. It retrieves the most relevant content and generates context-aware responses.

Indexing begins automatically when you create an AI Search instance and connect a data source.

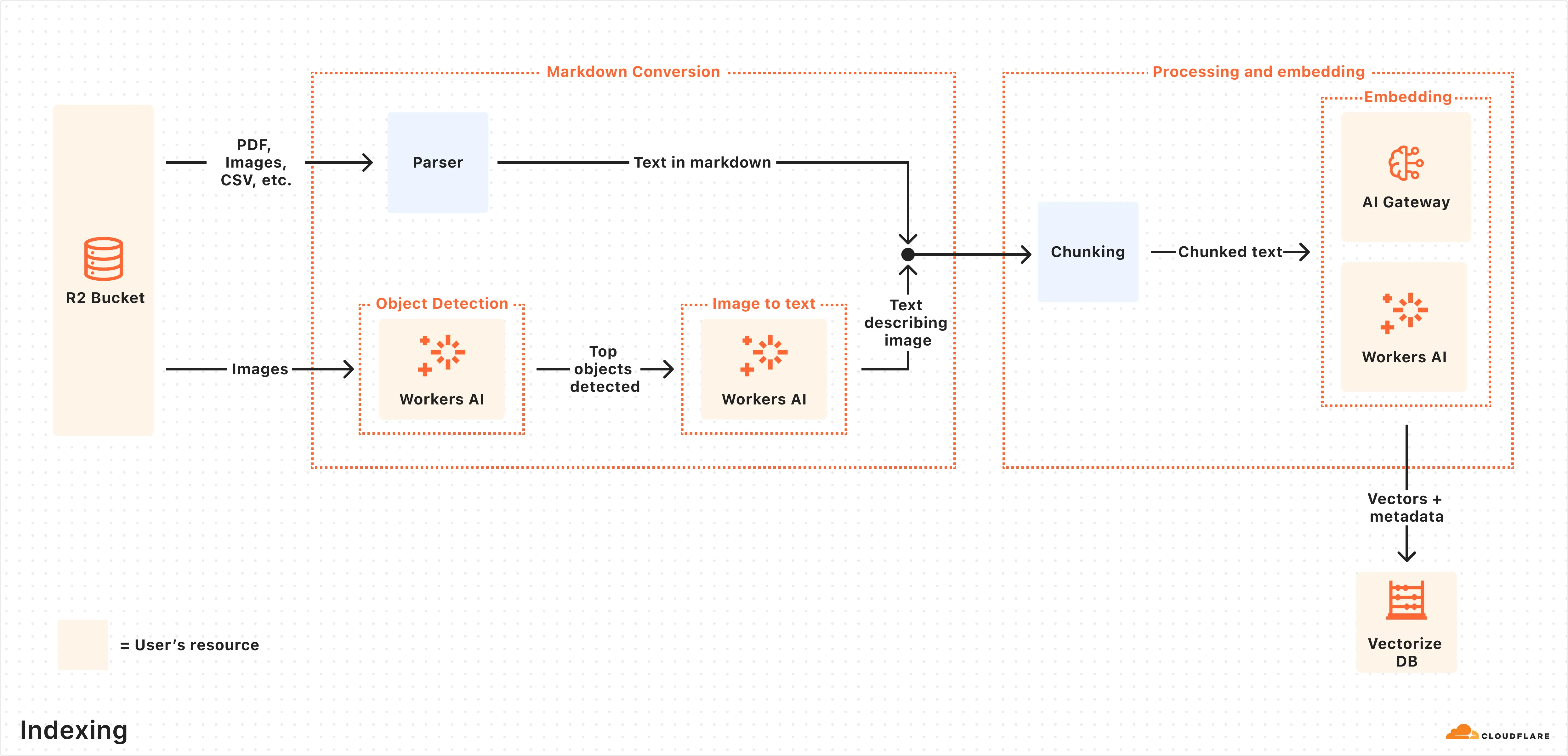

Here is what happens during indexing:

- Data ingestion: AI Search reads from your connected data source.

- Markdown conversion: AI Search uses Workers AI’s Markdown Conversion to convert supported data types into structured Markdown. This ensures consistency across diverse file types. For images, Workers AI is used to perform object detection followed by vision-to-language transformation to convert images into Markdown text.

- Chunking: The extracted text is chunked into smaller pieces to improve retrieval granularity.

- Embedding: Each chunk is embedded using Workers AI’s embedding model to transform the content into vectors.

- Vector storage: The resulting vectors, along with metadata such as the file name, are stored in the Vectorize database created on your Cloudflare account.
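The chunking, embedding, and storage steps above can be sketched in a few lines. This is a minimal illustration, not how AI Search is implemented: `chunk_text`, `toy_embed`, `VectorRecord`, and `index_document` are hypothetical names, the chunk size and overlap are arbitrary, the hashing-based embedding stands in for a Workers AI embedding model, and a plain dictionary stands in for the Vectorize database.

```python
import hashlib
import math
from dataclasses import dataclass


@dataclass
class VectorRecord:
    """One stored vector plus the metadata needed to find its source."""
    id: str
    values: list[float]
    metadata: dict


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into fixed-size character windows that overlap slightly."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
        start += step
    return chunks


def toy_embed(text: str, dims: int = 16) -> list[float]:
    """Toy stand-in for an embedding model: hash tokens into buckets, then normalize."""
    vec = [0.0] * dims
    for token in text.lower().split():
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dims
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def index_document(file_name: str, markdown: str, store: dict[str, VectorRecord]) -> int:
    """Chunk a converted document, embed each chunk, and store vector plus metadata."""
    chunks = chunk_text(markdown)
    for position, chunk in enumerate(chunks):
        record_id = f"{file_name}#{position}"
        store[record_id] = VectorRecord(
            id=record_id,
            values=toy_embed(chunk),
            metadata={"file_name": file_name, "chunk_index": position},
        )
    return len(chunks)
```

Calling `index_document("guide.md", text, store)` on an empty dictionary fills it with one record per chunk. Overlapping windows are one common way to avoid cutting a sentence's context at a chunk boundary; the source does not state which chunking strategy AI Search applies by default.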

After the initial data set is indexed, AI Search regularly checks your data source for updates (for example, additions, updates, or deletions) and indexes the changes to keep your vector database up to date.
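One way to picture this update check is as a diff between what was indexed last time and what the data source contains now. The sketch below assumes content hashes are used to detect changes; the source does not describe the actual mechanism, and `fingerprint` and `diff_source` are hypothetical helpers.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return a stable hash of a file's content."""
    return hashlib.sha256(content).hexdigest()


def diff_source(indexed: dict[str, str], current_files: dict[str, bytes]) -> dict[str, list[str]]:
    """Compare previously indexed fingerprints with the current source contents.

    `indexed` maps file name -> fingerprint recorded at the last sync.
    `current_files` maps file name -> raw content found in the source now.
    """
    current = {name: fingerprint(body) for name, body in current_files.items()}
    added = sorted(name for name in current if name not in indexed)
    deleted = sorted(name for name in indexed if name not in current)
    updated = sorted(
        name for name in current
        if name in indexed and current[name] != indexed[name]
    )
    return {"added": added, "updated": updated, "deleted": deleted}
```

In this model, files listed under `added` and `updated` would be converted, chunked, and embedded again, while vectors belonging to `deleted` files would be removed from the index.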

Once indexing is complete, AI Search is ready to respond to end-user queries in real time.

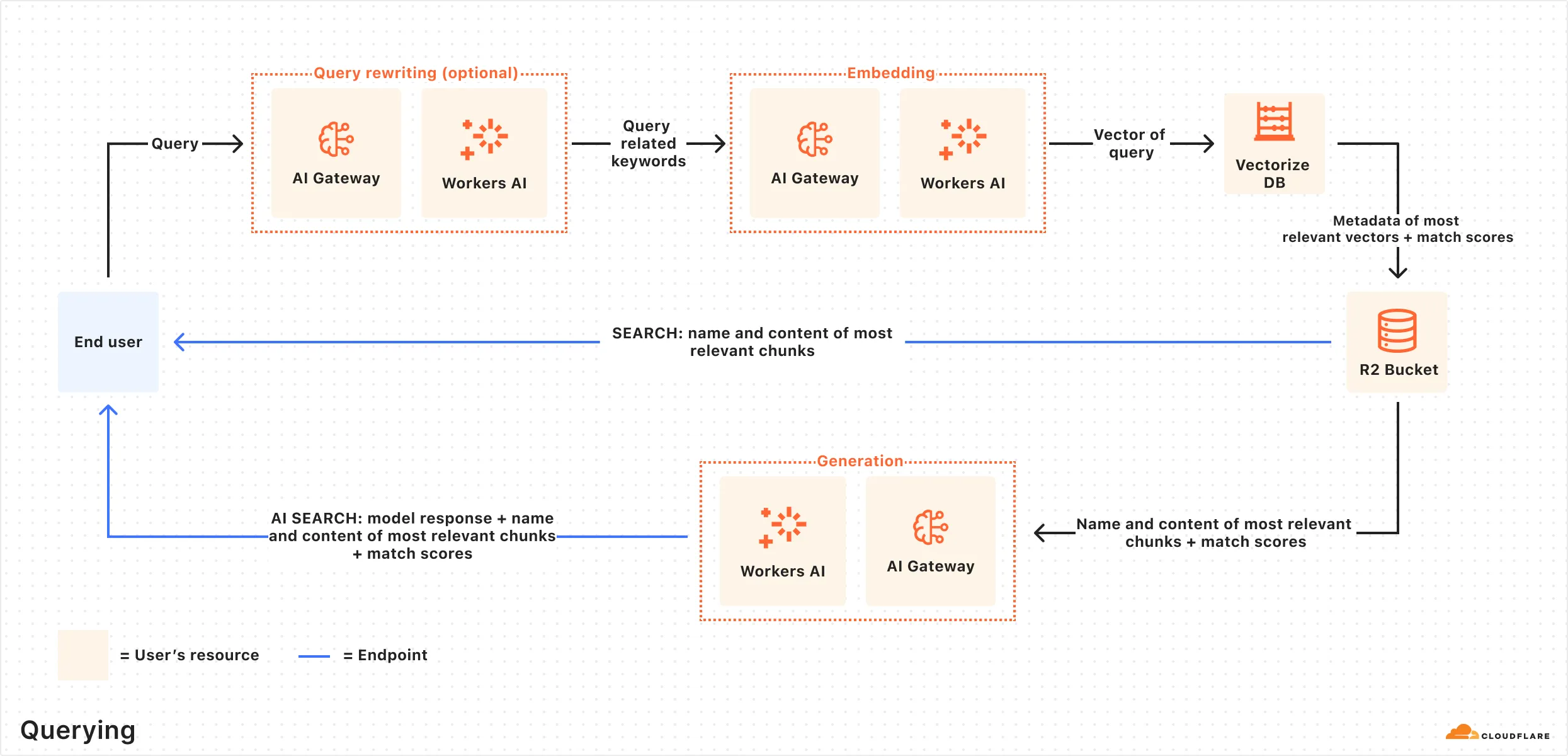

Here is how the querying pipeline works:

- Receive query from AI Search API: The query workflow begins when you send a request to either the AI Search endpoint or the Search endpoint of your AI Search instance.

- Query rewriting (optional): AI Search provides the option to rewrite the input query using one of Workers AI’s LLMs to improve retrieval quality by transforming the original query into a more effective search query.

- Embedding the query: The rewritten (or original) query is transformed into a vector via the same embedding model used to embed your data so that it can be compared against your vectorized data to find the most relevant matches.

- Querying Vectorize index: The query vector is compared against the vectors stored in the Vectorize database associated with your AI Search instance.

- Content retrieval: Vectorize returns the metadata of the most relevant chunks, and the original content is retrieved from the R2 bucket. If you are using the Search endpoint, the content is returned at this point.

- Response generation: If you are using the AI Search endpoint, a text-generation model from Workers AI generates a response from the retrieved content and the user’s original query, combined via a system prompt. The model’s context-aware response is then returned.
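The querying steps above can be sketched end to end with toy components. Everything here is an illustrative assumption rather than the AI Search API: `embed` is a term-frequency stand-in for a real embedding model, `rewrite_query` is a placeholder for LLM-based rewriting, the in-memory list replaces Vectorize and R2, and `build_prompt` only assembles the text that a generation model would receive.

```python
import math
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: a unit-length term-frequency vector."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {term: c / norm for term, c in counts.items()} if norm else {}


def similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity; both vectors are unit length, so a dot product suffices."""
    return sum(weight * b.get(term, 0.0) for term, weight in a.items())


def rewrite_query(query: str) -> str:
    """Placeholder for optional query rewriting; here it only trims punctuation."""
    return query.strip().rstrip("?")


def build_index(chunks: dict[str, str]) -> list[dict]:
    """Embed each chunk with the same function that will embed queries."""
    return [
        {"file_name": name, "text": text, "values": embed(text)}
        for name, text in chunks.items()
    ]


def search(query: str, index: list[dict], top_k: int = 2) -> list[dict]:
    """Return the top_k most similar chunks (the 'Search endpoint' stage)."""
    query_vec = embed(rewrite_query(query))
    scored = [
        {
            "file_name": item["file_name"],
            "text": item["text"],
            "score": similarity(query_vec, item["values"]),
        }
        for item in index
    ]
    scored.sort(key=lambda result: result["score"], reverse=True)
    return scored[:top_k]


def build_prompt(query: str, results: list[dict],
                 system_prompt: str = "Answer using only the context below.") -> str:
    """Combine system prompt, retrieved content, and the original query."""
    context = "\n\n".join(f"[{r['file_name']}]\n{r['text']}" for r in results)
    return f"{system_prompt}\n\nContext:\n{context}\n\nQuestion: {query}"


index = build_index({
    "billing.md": "invoices are issued on the first day of each month",
    "setup.md": "install the command line tool and log in",
})
results = search("When are invoices issued?", index)
prompt = build_prompt("When are invoices issued?", results)
```

Stopping after `search` corresponds to returning retrieved content directly, while passing `prompt` to a text-generation model corresponds to the response generation step. Note that the rewritten query is used only for retrieval; the prompt carries the user's original wording.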