Serverless global APIs

Serverless APIs are a way to build and deploy scalable, reliable application programming interfaces (APIs) without managing traditional server infrastructure. They handle incoming requests from users or other systems, execute the necessary logic, and return a response, while the platform provisions and operates the underlying servers.

At the heart of serverless APIs is the concept of serverless computing, where developers focus solely on writing code to implement business logic, without concerning themselves with server provisioning, scaling, or maintenance. This allows for greater agility and faster time-to-market for API-based applications.

Developers define the API endpoints and the corresponding logic or functionality using functions or microservices, which are then deployed to the serverless platform. The platform handles the execution of these functions in response to incoming requests.
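As a minimal sketch of this idea, the snippet below maps endpoints to plain functions using the standard `Request` and `Response` types. The route names (`/health`, `/echo`) and the `handleRequest` helper are hypothetical and chosen only for illustration. On a platform such as Workers, a function like this would typically be called from the `fetch` handler that the platform invokes for each incoming request.

```typescript
// Each endpoint is a plain function from a Request to a Response.
type Handler = (request: Request) => Response | Promise<Response>;

// Hypothetical routes, keyed by "<METHOD> <path>".
const routes: Record<string, Handler> = {
  "GET /health": () => new Response("ok"),
  "POST /echo": async (request) =>
    new Response(await request.text(), {
      headers: { "Content-Type": "text/plain" },
    }),
};

// Look up the handler for the incoming request, or answer with 404.
async function handleRequest(request: Request): Promise<Response> {
  const { pathname } = new URL(request.url);
  const handler = routes[`${request.method} ${pathname}`];
  if (!handler) {
    return new Response("Not found", { status: 404 });
  }
  return handler(request);
}
```

Adding an endpoint means adding one entry to `routes`. Nothing in the code refers to servers, ports, or scaling.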

Additionally, serverless APIs often integrate seamlessly with other cloud services, such as authentication and authorization services, databases, and event-driven architectures, enabling developers to build complex, scalable, and resilient applications with minimal operational overhead.

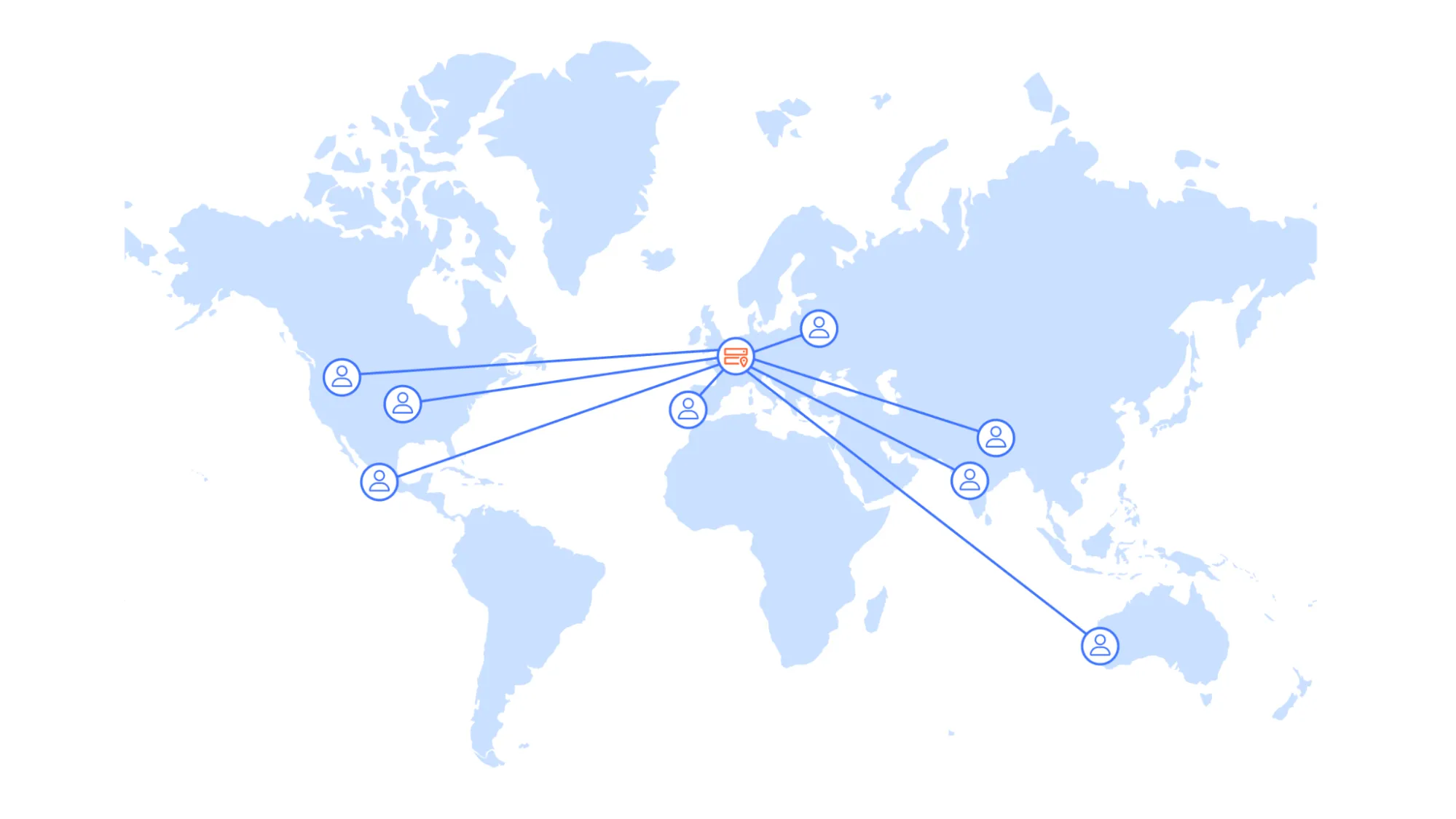

Most cloud serverless platforms execute your code in a single region. This means any request, from anywhere in the world, must traverse the Internet to reach that one location, and every response must travel back across the Internet to the user.

Cloudflare follows a different, global-first approach. Globally deployed architectures enable lower latency and high availability for users accessing the API from different parts of the world. To realize these performance gains, the compute needs to be distributed and, ideally, the data as well. Solutions such as caching and global replication can enable this.
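To make the caching idea concrete, here is a small read-through cache sketch. It is not a Cloudflare API: the in-memory `Map` only stands in for whatever cache sits close to the compute, and `createReadThroughCache`, `load`, and `ttlMs` are hypothetical names. The point it illustrates is that only a cache miss pays for the trip to the distant data source.

```typescript
// A function that fetches a value from the (possibly distant) source of truth.
type Loader<T> = (key: string) => Promise<T>;

// Returns a getter that serves fresh entries locally and only calls
// `load` on a miss or after the entry's time-to-live has expired.
function createReadThroughCache<T>(
  load: Loader<T>,
  ttlMs: number,
  now: () => number = () => Date.now(), // injectable clock for testing
): (key: string) => Promise<T> {
  const entries = new Map<string, { value: T; expiresAt: number }>();

  return async (key: string): Promise<T> => {
    const hit = entries.get(key);
    if (hit && hit.expiresAt > now()) {
      return hit.value; // served locally, no round trip
    }
    const value = await load(key); // miss: go to the origin
    entries.set(key, { value, expiresAt: now() + ttlMs });
    return value;
  };
}
```

The trade-off is staleness: a longer `ttlMs` saves more round trips but lets readers see older data for longer.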

Overall, serverless globally-deployed APIs offer a cost-effective, scalable, and agile approach to building modern applications and services, allowing organizations to focus on delivering value to their users without being encumbered by the complexities of managing infrastructure.

This example architecture of a serverless API on Cloudflare illustrates how different compute and data products can interact with each other.

- Client request: Send request to API endpoint.

- API Gateway/Router: Process incoming request using Workers, check for validity, and perform authentication logic, if needed. Then, forward the (potentially transformed and/or enriched) API call to individual Workers using Service Bindings. This allows for a separation of concerns.

- Read-heavy data: Read from KV to serve read-heavy, non-dynamic data. This could include configuration data or product information. Perform writes as needed, keeping limits in mind.

- Relational data: Query D1 to handle relational data. This could include user data, product data, or other data.

- External data: Query external databases using Hyperdrive. Leverage caching to improve performance where applicable. This can be especially helpful when a data migration is out of scope of the implementation.
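The flow above can be sketched as two Workers: a gateway that authenticates and forwards over a Service Binding, and a downstream Worker that reads from KV and D1. This is an illustrative sketch, not a reference implementation. The interfaces are simplified stand-ins for the binding types that `@cloudflare/workers-types` provides, and the binding names (`CATALOG`, `CONFIG_KV`, `DB`, `API_TOKEN`), routes, KV key, and `products` table are all hypothetical. The bearer-token check is deliberately simplistic.

```typescript
// Simplified stand-ins for the real binding types (assumption).
interface ServiceBinding { fetch(request: Request): Promise<Response>; }
interface KVLike { get(key: string): Promise<string | null>; }
interface D1StatementLike {
  bind(...values: unknown[]): D1StatementLike;
  first(): Promise<Record<string, unknown> | null>;
}
interface D1Like { prepare(sql: string): D1StatementLike; }

interface GatewayEnv { API_TOKEN: string; CATALOG: ServiceBinding; }
interface CatalogEnv { CONFIG_KV: KVLike; DB: D1Like; }

const JSON_HEADERS = { "Content-Type": "application/json" };
const json = (body: unknown, status = 200): Response =>
  new Response(JSON.stringify(body), { status, headers: JSON_HEADERS });

// Gateway Worker: authenticate, enrich, then forward via a Service Binding.
// In a real Worker this object would be the module's default export.
const gateway = {
  async fetch(request: Request, env: GatewayEnv): Promise<Response> {
    if (request.headers.get("Authorization") !== `Bearer ${env.API_TOKEN}`) {
      return json({ error: "unauthorized" }, 401);
    }
    const { pathname } = new URL(request.url);
    if (pathname.startsWith("/catalog/")) {
      const forwarded = new Request(request); // copy so headers can be changed
      forwarded.headers.set("X-Authenticated", "true");
      return env.CATALOG.fetch(forwarded);
    }
    return json({ error: "not found" }, 404);
  },
};

// Downstream Worker: KV for read-heavy data, D1 for relational data.
const catalog = {
  async fetch(request: Request, env: CatalogEnv): Promise<Response> {
    const { pathname } = new URL(request.url);
    if (pathname === "/catalog/config") {
      const config = await env.CONFIG_KV.get("site-config");
      return config === null
        ? json({ error: "not found" }, 404)
        : new Response(config, { headers: JSON_HEADERS });
    }
    const match = pathname.match(/^\/catalog\/products\/(\d+)$/);
    if (match) {
      const row = await env.DB
        .prepare("SELECT id, name FROM products WHERE id = ?")
        .bind(Number(match[1]))
        .first();
      return row ? json(row) : json({ error: "not found" }, 404);
    }
    return json({ error: "not found" }, 404);
  },
};
```

Keeping authentication in the gateway means the downstream Worker contains only data access logic, which is the separation of concerns the list describes. Hyperdrive is left out of the sketch because it needs a database driver; in that case the Worker would typically hand the binding's connection string to the driver rather than call the binding directly.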
