Enable Cloudflare R2

Cloudflare Logpush supports pushing logs directly to R2. You can use the automatic setup, in which Cloudflare creates an R2 bucket for you, or the custom setup, in which you create your own R2 bucket. The automatic setup is ideal for quickly setting up a bucket or for testing purposes. Use the custom setup if you need full control over the configuration.

For more information about R2, refer to the Cloudflare R2 documentation.

If you want to use the automatic setup for your Logpush job:

- Log in to the Cloudflare dashboard.
- Select the Enterprise account or domain (also known as zone) you want to use with Logpush. Depending on your choice, you have access to account-scoped datasets and zone-scoped datasets, respectively.
- Go to Analytics & Logs > Logpush.
- Select Create a Logpush job.
- Select R2 Object Storage - automatic as destination.
- Next, select the dataset and the storage region you want to use.
- To finalize, select Create Logpush job.

Your setup should now be complete. If you require full control over the configuration, consider using the custom setup instead.

Cloudflare Logpush supports pushing logs directly to R2 via the Cloudflare dashboard or via API.

Before getting started:

- Create an R2 bucket and set up R2 API tokens.
  - Go to the R2 UI > Create bucket.
  - Select Manage R2 API Tokens.
  - Select Create API token.
  - Under Permission, select Edit permissions for your token.
  - Copy the Secret Access Key and Access Key ID. You will need these when setting up your Logpush job.
- Ensure that you have the following permissions:
  - R2 write, Logshare Edit.

To create the Logpush job from the Cloudflare dashboard:

- Log in to the Cloudflare dashboard.
- Select the Enterprise account or domain (also known as zone) you want to use with Logpush. Depending on your choice, you have access to account-scoped datasets and zone-scoped datasets, respectively.
- Go to Analytics & Logs > Logpush.
- Select Create a Logpush job.
- In Select a destination, choose R2 Object Storage.

- Enter or select the following destination details:
  - Bucket - R2 bucket name
  - Path - bucket location, for example, cloudflare-logs/http_requests/example.com
  - Organize logs into daily subfolders (recommended)
  - Under Authentication, add your R2 Access Key ID and R2 Secret Access Key. Refer to Manage R2 API tokens for more information.

  When you are done entering the destination details, select Continue.

- Select the dataset to push to the storage service.
- In the next step, you need to configure your Logpush job:
  - Enter the Job name.
  - Under If logs match, you can select the events to include and/or remove from your logs. Refer to Filters for more information. Not all datasets have this option available.
  - In Send the following fields, you can choose to either push all logs to your storage destination or selectively choose which logs you want to push.

- In Advanced Options, you can:
  - Choose the format of timestamp fields in your logs (RFC3339 (default), Unix, or UnixNano).
  - Select a sampling rate for your logs or push a randomly-sampled percentage of logs.
  - Enable redaction for CVE-2021-44228. This option will replace every occurrence of ${ with x{.
- Select Submit once you are done configuring your Logpush job.

To create a job, make a POST request to the Logpush jobs endpoint with the following fields:

- name (optional) - Use your domain name as the job name.
- destination_conf - A log destination consisting of bucket path, account ID, R2 access key ID and R2 secret access key.

    r2://<BUCKET_PATH>/{DATE}?account-id=<ACCOUNT_ID>&access-key-id=<R2_ACCESS_KEY_ID>&secret-access-key=<R2_SECRET_ACCESS_KEY>

- dataset - The category of logs you want to receive. Refer to Log fields for the full list of supported datasets.
- output_options (optional) - To configure fields, sample rate, and timestamp format, refer to API configuration options.
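As a rough illustration of how the destination_conf string described above is put together, the following shell sketch fills in the template from four arguments. The function name build_destination_conf and the sample values are hypothetical, chosen only for this example. The {DATE} token is kept literally, as in the template, and the sketch does not URL-encode its inputs.

```shell
# Hypothetical helper: assemble a Logpush destination_conf string for R2.
# $1 bucket path, $2 account ID, $3 R2 access key ID, $4 R2 secret access key
build_destination_conf() {
  # {DATE} is passed through unchanged, as in the template in this guide.
  printf 'r2://%s/{DATE}?account-id=%s&access-key-id=%s&secret-access-key=%s\n' \
    "$1" "$2" "$3" "$4"
}

# Example call with placeholder values (not real credentials).
build_destination_conf "cloudflare-logs/http_requests/example.com" \
  "<ACCOUNT_ID>" "<R2_ACCESS_KEY_ID>" "<R2_SECRET_ACCESS_KEY>"
```

Keeping the credentials in variables rather than pasting them into the request body makes it easier to avoid leaving them in shell history.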

Example request using cURL:

    curl https://api.cloudflare.com/client/v4/zones/{zone_id}/logpush/jobs \
      --header "X-Auth-Email: <EMAIL>" \
      --header "X-Auth-Key: <API_KEY>" \
      --header "Content-Type: application/json" \
      --data '{
        "name": "<DOMAIN_NAME>",
        "output_options": {
          "field_names": ["ClientIP", "ClientRequestHost", "ClientRequestMethod", "ClientRequestURI", "EdgeEndTimestamp", "EdgeResponseBytes", "EdgeResponseStatus", "EdgeStartTimestamp", "RayID"],
          "timestamp_format": "rfc3339"
        },
        "destination_conf": "r2://<BUCKET_PATH>/{DATE}?account-id=<ACCOUNT_ID>&access-key-id=<R2_ACCESS_KEY_ID>&secret-access-key=<R2_SECRET_ACCESS_KEY>",
        "dataset": "http_requests",
        "enabled": true
      }'

Once your logs are stored in R2, you can download them using various methods:

-

Log in to the Cloudflare dashboard ↗.

-

Navigate to R2 and select your bucket.

-

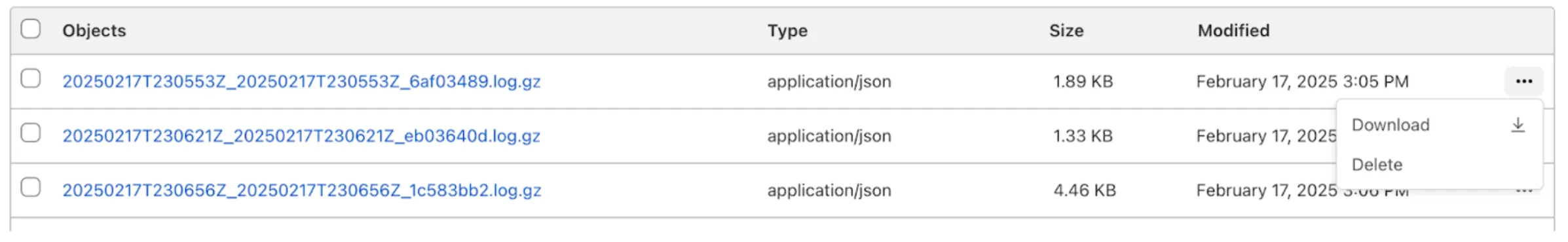

From your bucket's page, locate the desired log file.

-

Select on the ... icon next to the file to download it.

Cloudflare R2 is S3-compatible, so you can use the AWS CLI to interact with it.

- Configure the AWS CLI with your R2 credentials.
- Use the aws s3 cp command to download the log file:

    aws s3 cp s3://<BUCKET-NAME>/<PATH-TO-LOG-FILE> <LOCAL-DESTINATION>

  Replace <BUCKET-NAME>, <PATH-TO-LOG-FILE>, and <LOCAL-DESTINATION> with your specific details.
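The configuration step above is not spelled out, so here is a minimal sketch of one way to do it. It assumes the AWS CLI is installed, that the R2 S3-compatible endpoint has the form https://<ACCOUNT_ID>.r2.cloudflarestorage.com, and that the region is set to auto, as described in the R2 documentation. The helper names r2_endpoint and download_log are hypothetical. Because the AWS CLI targets AWS endpoints by default, the sketch passes the R2 endpoint explicitly with --endpoint-url.

```shell
# Hypothetical helper: print the S3-compatible R2 endpoint for an account ID.
r2_endpoint() {
  printf 'https://%s.r2.cloudflarestorage.com\n' "$1"
}

# Hypothetical helper: copy one object from R2 to a local path.
# Expects AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY to hold the
# R2 API token credentials copied earlier.
# $1 account ID, $2 bucket name, $3 path to log file, $4 local destination
download_log() {
  AWS_DEFAULT_REGION=auto aws s3 cp "s3://$2/$3" "$4" \
    --endpoint-url "$(r2_endpoint "$1")"
}

# Usage with placeholders (nothing runs until the function is called):
# export AWS_ACCESS_KEY_ID="<R2_ACCESS_KEY_ID>"
# export AWS_SECRET_ACCESS_KEY="<R2_SECRET_ACCESS_KEY>"
# download_log "<ACCOUNT_ID>" "<BUCKET-NAME>" "<PATH-TO-LOG-FILE>" "<LOCAL-DESTINATION>"
```

Supplying the credentials through environment variables is only one option; running aws configure and storing them in a profile should work as well.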

Downloaded files are gzipped so they must be decompressed before you can open them in a text editor.
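For instance, a downloaded file can be inspected without unpacking it to disk by streaming it through gzip. This sketch assumes the standard gzip and head tools are available; the function name peek_log and the file names are hypothetical.

```shell
# Hypothetical helper: print the first N lines (default 5) of a gzipped log file.
# $1 path to the .gz file, $2 optional number of lines
peek_log() {
  gzip -dc "$1" | head -n "${2:-5}"
}

# Usage (hypothetical file name):
# peek_log ./example.log.gz 1
#
# To write a decompressed copy and keep the original archive:
# gzip -dc ./example.log.gz > ./example.log
```

Streaming with gzip -dc leaves the compressed file untouched, which is convenient when the same archive needs to be processed more than once.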
