Securely deliver applications with Cloudflare

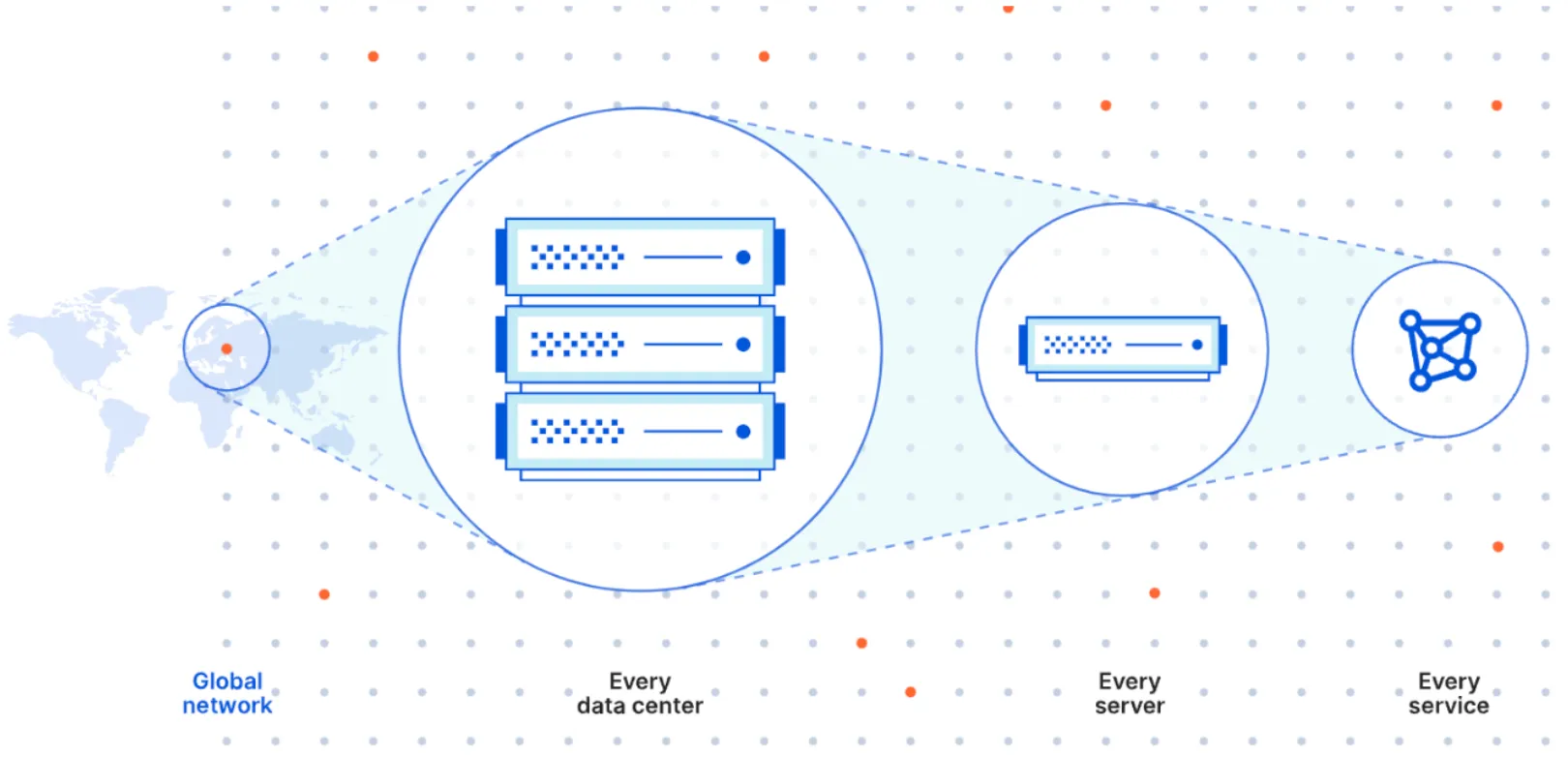

Cloudflare provides a complete suite of services around application performance, security, reliability, development, and zero trust. Cloudflare’s global network is approximately 50 ms away from about 95% of the Internet-connected population and consists of services that run on every server in every data center. The global scale of Cloudflare also allows for a robust threat intelligence source which is constantly fed back into Cloudflare security products to enhance the machine learning models and services even further.

Another differentiator is that Cloudflare is not a point product, unlike vendors that offer only API security, only zero trust services, or a narrow set of performance or security services. Customers have started moving away from the point-product approach because of operational and management complexity, the inability to benefit from cross-product innovation and integrations, and the inability to use the scale of the network and its resources across all services.

Additionally, customers do not want to be locked in to a specific cloud provider, but many performance and security vendors lock customers into their platform by focusing on and optimizing services to their own cloud and making it operationally difficult to adopt a multi-cloud strategy.

Cloudflare is agnostic to where the workloads run or what cloud provider is being used. Customers get the same consistent unified dashboard and operational simplicity whether workloads run in a specific cloud or on-premises. Unlike many vendors, taking advantage of cross-product innovations and integration does not depend on customers using a specific cloud for workloads.

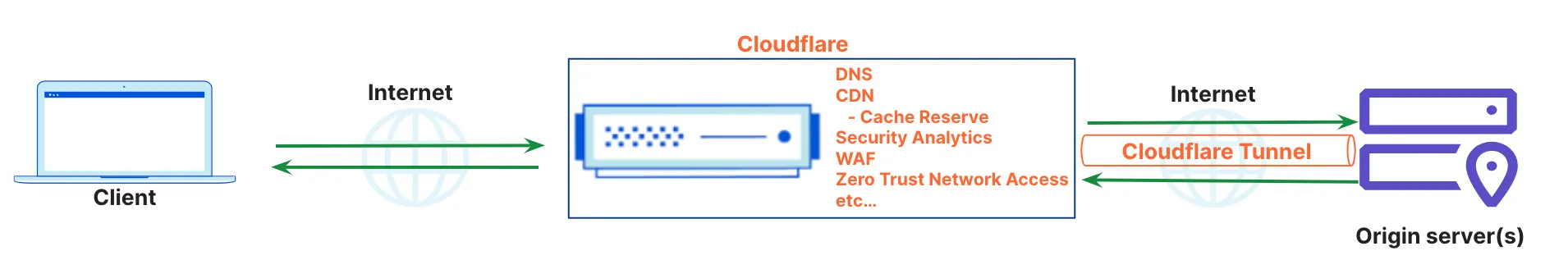

This document demonstrates how easy it is to use Cloudflare’s collective services regardless of where workloads run. For the example in this document, an application workload will use Cloudflare DNS, CDN, WAF, and Access while also using Cloudflare Tunnel to connect securely to the Cloudflare network. It’s rare for a vendor to provide this comprehensive level of security capability in an operationally simple and consistent fashion.

For additional details and reference architectures on specific services, see our reference architecture documents.

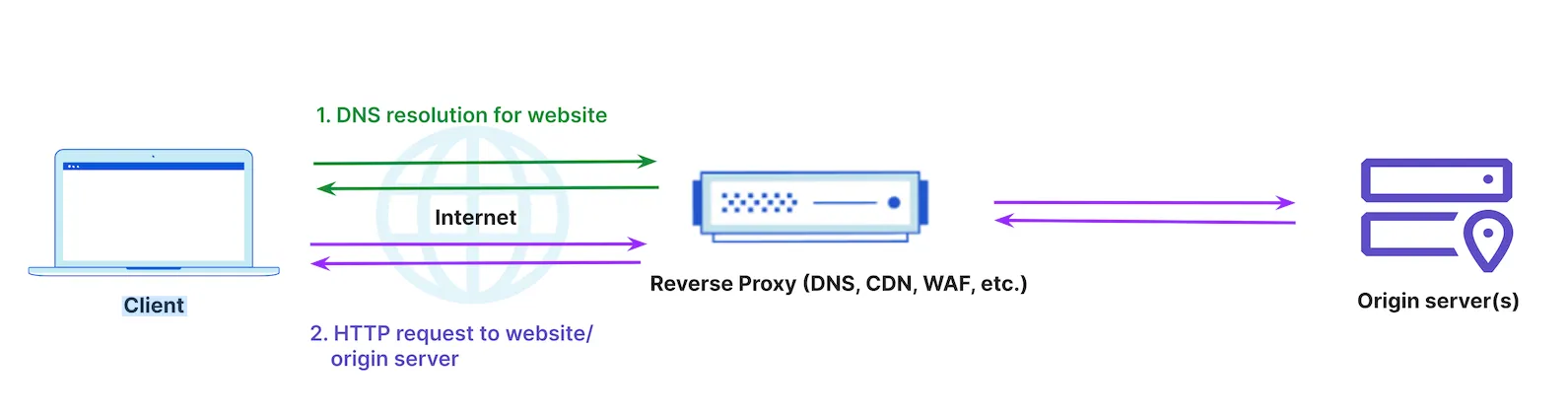

Cloud-based security and performance providers like Cloudflare work as a reverse proxy. A reverse proxy is a server that sits in front of web servers and forwards client requests to those web servers. Reverse proxies are typically implemented to help increase security, performance, and reliability.

Normal traffic flow without a reverse proxy would involve a client sending a DNS lookup request, receiving the origin IP address, and communicating directly to the origin server(s).

When a reverse proxy is introduced, the client still sends a DNS lookup request to its resolver, which is the first stop in the DNS lookup. In some cases, the vendor providing the reverse proxy also provides DNS services; this is visualized in Figure 3 below. However, the client now communicates to the reverse proxy and the reverse proxy communicates to the origin server(s). This traffic flow, where all traffic passes through the reverse proxy, allows for additional application security, performance, and reliability services to be implemented easily for applications.
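The difference between the two traffic flows can be sketched as a toy model. This is purely illustrative: the hostname, the IP addresses (taken from documentation ranges), and the function names are hypothetical, and the code does not reflect how Cloudflare is implemented.

```python
# Toy model of the two traffic flows; all names and addresses are made up.
ORIGIN_IP = "192.0.2.10"    # hypothetical origin server
PROXY_IP = "198.51.100.1"   # hypothetical reverse proxy address


def resolve(hostname, dns_records):
    """Return the IP address that DNS hands back for a hostname."""
    return dns_records[hostname]


def path_taken(hostname, dns_records, proxy_upstreams):
    """List the hops a request makes from the client to the origin."""
    first_hop = resolve(hostname, dns_records)
    if first_hop in proxy_upstreams:
        # The client reached the proxy; the proxy forwards to the origin.
        return ["client", first_hop, proxy_upstreams[first_hop]]
    # No proxy in the path: the client talks to the origin directly.
    return ["client", first_hop]


# Without a reverse proxy, DNS returns the origin address.
direct = path_taken("example.com", {"example.com": ORIGIN_IP}, {})

# With a reverse proxy, DNS returns the proxy address instead.
proxied = path_taken(
    "example.com", {"example.com": PROXY_IP}, {PROXY_IP: ORIGIN_IP}
)
```

In the proxied case every request passes through the intermediate hop, which is where security, performance, and reliability services can be applied.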

In this example, we have a website running on one of the major cloud providers and we want to use Cloudflare DNS, CDN, WAF, and Access. We want to start with these services for demonstration purposes; customers can expand these to include other Cloudflare services as desired. Cloudflare provides the benefit of decoupling all services from the cloud provider and if we want to change cloud providers later or protect other applications running in other clouds, the dashboard and operations all stay consistent.

Customers can easily and securely connect their web application to the Cloudflare network and leverage application performance and security services. There are several connectivity options that fit different use cases.

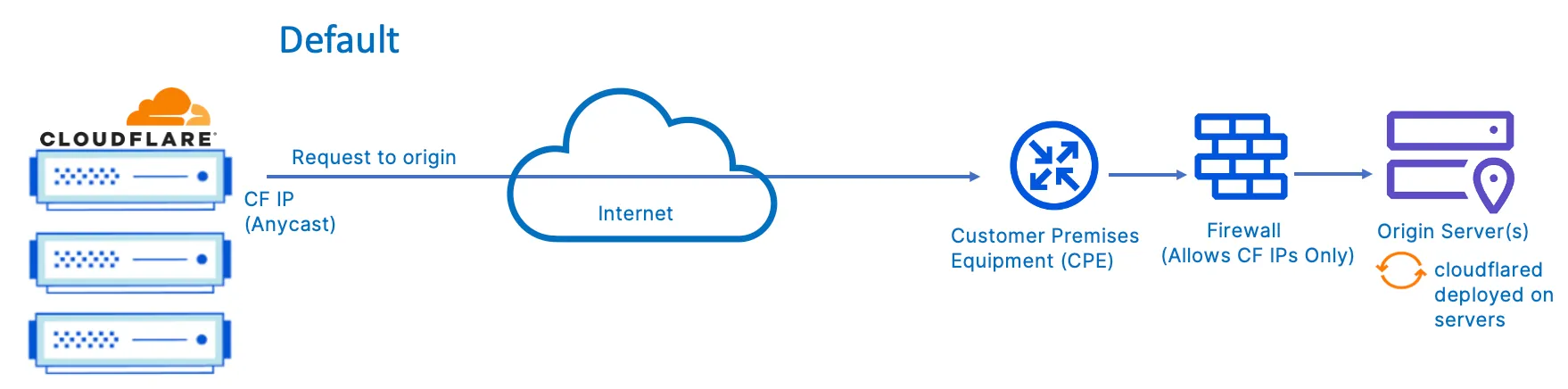

In the most basic scenario, the Cloudflare proxy will route the request traffic over the Internet to the origin. In this setup the client and origin are both endpoints directly connected to the Internet via their respective ISPs. The request is routed over the Internet from the client to Cloudflare proxy (via DNS configuration) before the proxy routes the request over the Internet to the customer's origin.

The below diagram describes the default connectivity to origins as requests flow through the Cloudflare network. When a request for the origin resolves to an IP hosted by Cloudflare, that request is then handled by the Cloudflare network and forwarded onto the origin server over the public Internet.

The origin is connected directly to the Internet and traffic is routed to the origin based on the IP address resolved by Cloudflare DNS. The DNS A record associates the domain name with the IP address of the origin server(s) or, more typically, of a load balancer that the origin(s) sit behind.

In this model, when Cloudflare DNS receives a query for the A record, a Cloudflare anycast IP address is returned, so all traffic is routed through Cloudflare. However, unless additional precautions are taken, it’s possible for the origin to be reached directly bypassing Cloudflare if someone knows the IP address of the origin(s).

Additionally, in this model, the customer has to open firewall rules for the origin(s) or web server(s) so they can be accessible on the respective http/https ports. However, customers can choose to leverage Cloudflare Aegis, which allocates customer-specific IPs that Cloudflare will use to connect back to your origins. We recommend allowlisting traffic from only these networks to avoid direct access.
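As a minimal sketch of the allowlisting idea, the check below accepts a connection only when its source address falls inside a configured set of networks. The ranges shown are placeholders from documentation address space, not real Cloudflare or Aegis addresses, and in practice this logic would live in the origin-side firewall rather than in application code.

```python
import ipaddress

# Hypothetical dedicated egress ranges; real values come from your own account.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/28"),
    ipaddress.ip_network("2001:db8::/48"),
]


def is_allowed(source_ip: str) -> bool:
    """Return True when the source address is inside an allowlisted network."""
    address = ipaddress.ip_address(source_ip)
    # Membership tests across IP versions simply evaluate to False.
    return any(address in network for network in ALLOWED_NETWORKS)
```

For example, `is_allowed("203.0.113.5")` is accepted, while `is_allowed("198.51.100.7")` is rejected because it is outside both ranges.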

In addition to IP blocking at the origin-side firewall, we also strongly recommend additional verification of traffic via either the "Full (Strict)" SSL setting or mTLS auth to ensure all traffic is sourced from requests passing through the customer configured zones.
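For the mTLS option, an origin written in Python could require a client certificate during the TLS handshake. The sketch below uses only the standard library `ssl` module; the function name and the `client_ca_file` parameter are hypothetical, and a real deployment would also load the origin's own certificate with `load_cert_chain` and is more likely to be configured in the web server or load balancer.

```python
import ssl


def build_origin_tls_context(client_ca_file=None):
    """Create a server-side TLS context that requires a client certificate."""
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # Without a valid client certificate the handshake is rejected.
    context.verify_mode = ssl.CERT_REQUIRED
    if client_ca_file is not None:
        # Trust only the CA that signs the proxy's client certificate.
        context.load_verify_locations(cafile=client_ca_file)
    return context
```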

Cloudflare also supports Bring Your Own IP (BYOIP). When BYOIP is configured, the Cloudflare global network will announce a customer’s own IP prefixes and the prefixes can be used with the respective Cloudflare Layer 7 services. This allows customers to proxy traffic through Cloudflare and still have the customer IP address returned in the DNS resolution. This can be beneficial for cases where the customer IP prefixes are already allowlisted and updating firewall rules is not desirable or presents an administrative hurdle.

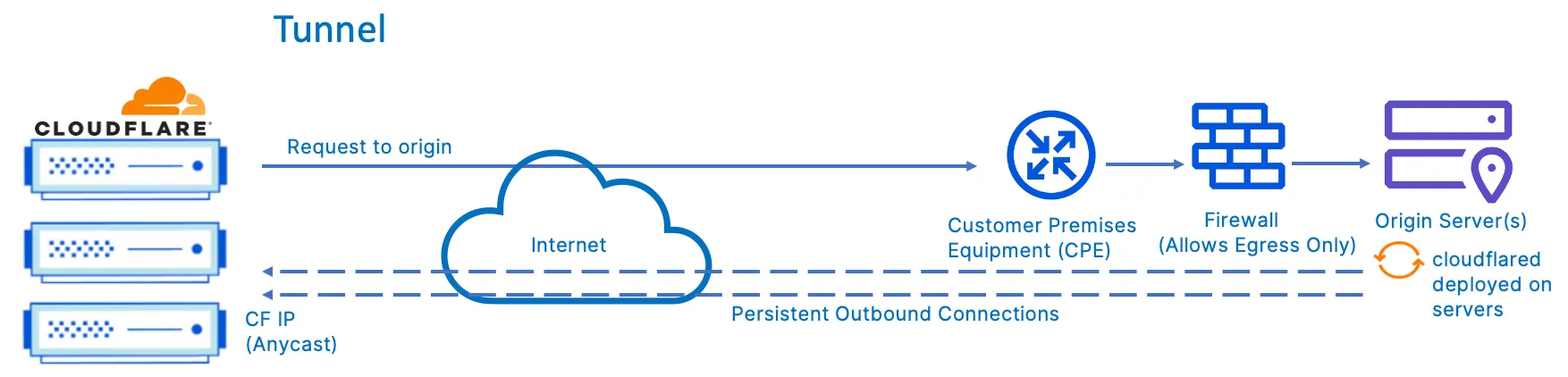

The recommended option when connecting origin(s) over the Internet is to have a private tunnel/connection over the Internet for additional security.

A traditional VPN setup is not optimal due to backhauling traffic to a centralized VPN gateway location which then connects back to the origin; this negatively impacts end-to-end throughput and latency. Cloudflare offers Cloudflare Tunnel software that provides an encrypted tunnel between your origin(s) and Cloudflare’s network. Also, since Cloudflare leverages anycast on its global network, the origin(s) will, like clients, connect to the closest Cloudflare data center(s) and therefore optimize the end-to-end latency and throughput.

When you run a tunnel, a lightweight daemon in your infrastructure, cloudflared, establishes four outbound-only connections between the origin server and the Cloudflare network. These four connections are made to four different servers spread across at least two distinct data centers providing robust resiliency. It is possible to install many cloudflared instances to increase resilience between your origin servers and the Cloudflare network.

Cloudflared creates an encrypted tunnel between your origin web server(s) and Cloudflare’s nearest data center(s), without the need for opening any public inbound ports. This provides for simplicity and speed of implementation as there are no security changes needed on the firewall. This solution also lowers the risk of firewall misconfigurations which could leave your company vulnerable to attacks.

The firewall and security posture is hardened by locking down all origin server ports and protocols via your firewall. Once Cloudflare Tunnel is in place and respective security applied, all requests on HTTP/S ports are dropped, including volumetric DDoS attacks. Data breach attempts, such as snooping of data in transit or brute force login attacks, are blocked entirely.

The above diagram describes the connectivity model through Cloudflare Tunnel. This option provides you with a secure way to connect your resources to Cloudflare without a publicly routable IP address. Cloudflare Tunnel can connect HTTP web servers, SSH servers, remote desktops, and other protocols safely to Cloudflare.

Most vendors also provide an option of directly connecting to their network. Direct connections provide security, reliability, and performance benefits over using the public Internet. These direct connections are done at peering facilities, Internet exchanges (IXs) where Internet service providers (ISPs) and Internet networks can interconnect with each other, or through vendor partners.

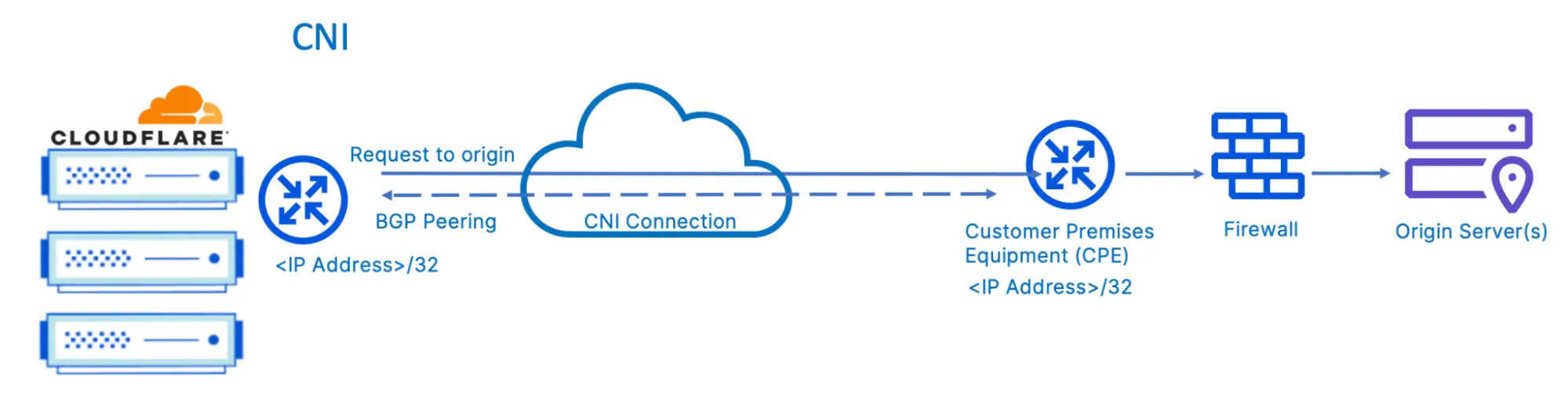

The above diagram describes origin connectivity through Cloudflare Network Interconnect (CNI), which allows you to connect your network infrastructure directly with Cloudflare and communicate only over those direct links. CNI allows customers to interconnect branch and headquarters locations directly with Cloudflare. Customers can interconnect with Cloudflare in one of three ways: over a private network interconnect (PNI) available at Cloudflare peering facilities, via an IX at any of the many global exchanges Cloudflare participates in, or through one of Cloudflare’s interconnection platform partners.

Cloudflare’s global network allows for ease of connecting to the network regardless of where your infrastructure and employees are.

Regardless of which connectivity model is used, DNS resolution is done first and provides Cloudflare the information of where to route to. Cloudflare can support configurations as an authoritative DNS provider, secondary DNS provider, or non-Cloudflare DNS (CNAME) setups for a zone. For Cloudflare performance and security services to be applied, the traffic must be routed to the Cloudflare network.

Although there are multiple ways to onboard an application to use Cloudflare services, a common approach is to use Cloudflare DNS as the primary authoritative DNS. The additional benefit for customers here is that Cloudflare is consistently ranked the fastest available authoritative DNS provider globally.

In this example, we’ll connect our origin server to Cloudflare securely with Cloudflare Tunnel. You can configure DNS in the dashboard and enter the site you want to onboard. You’ll receive a pair of Cloudflare nameservers to configure at your domain registrar’s site. Once that’s completed, Cloudflare becomes the primary authoritative DNS provider.

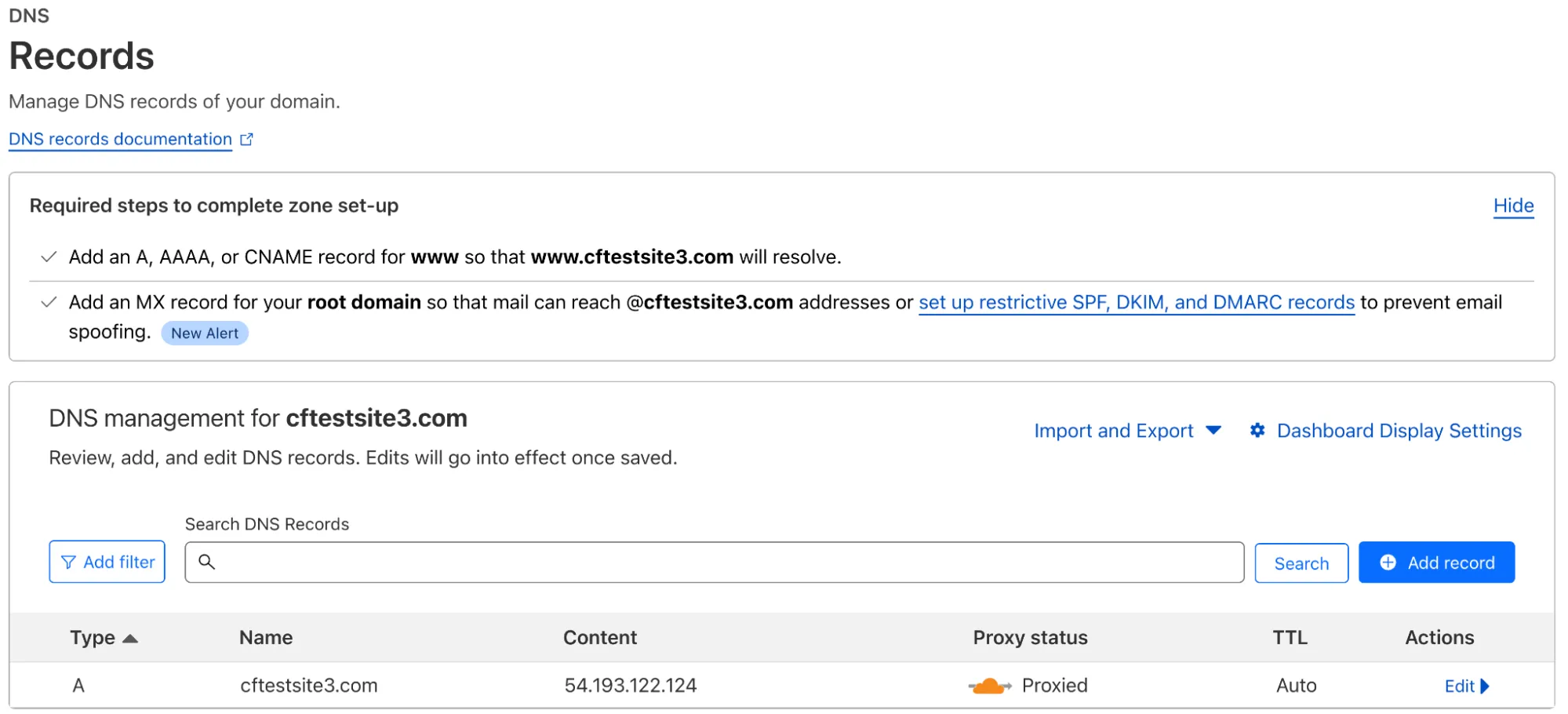

If Cloudflare is configured for just routing over the Internet, the DNS configuration would look something like below, where the A record points to the IP address of the origin server or respective load balancer. Because Cloudflare is acting as a reverse proxy, the status shows as "Proxied." Even with this basic configuration, Cloudflare sits in the request path, so all the Cloudflare services such as CDN, WAF, and Access can be used.

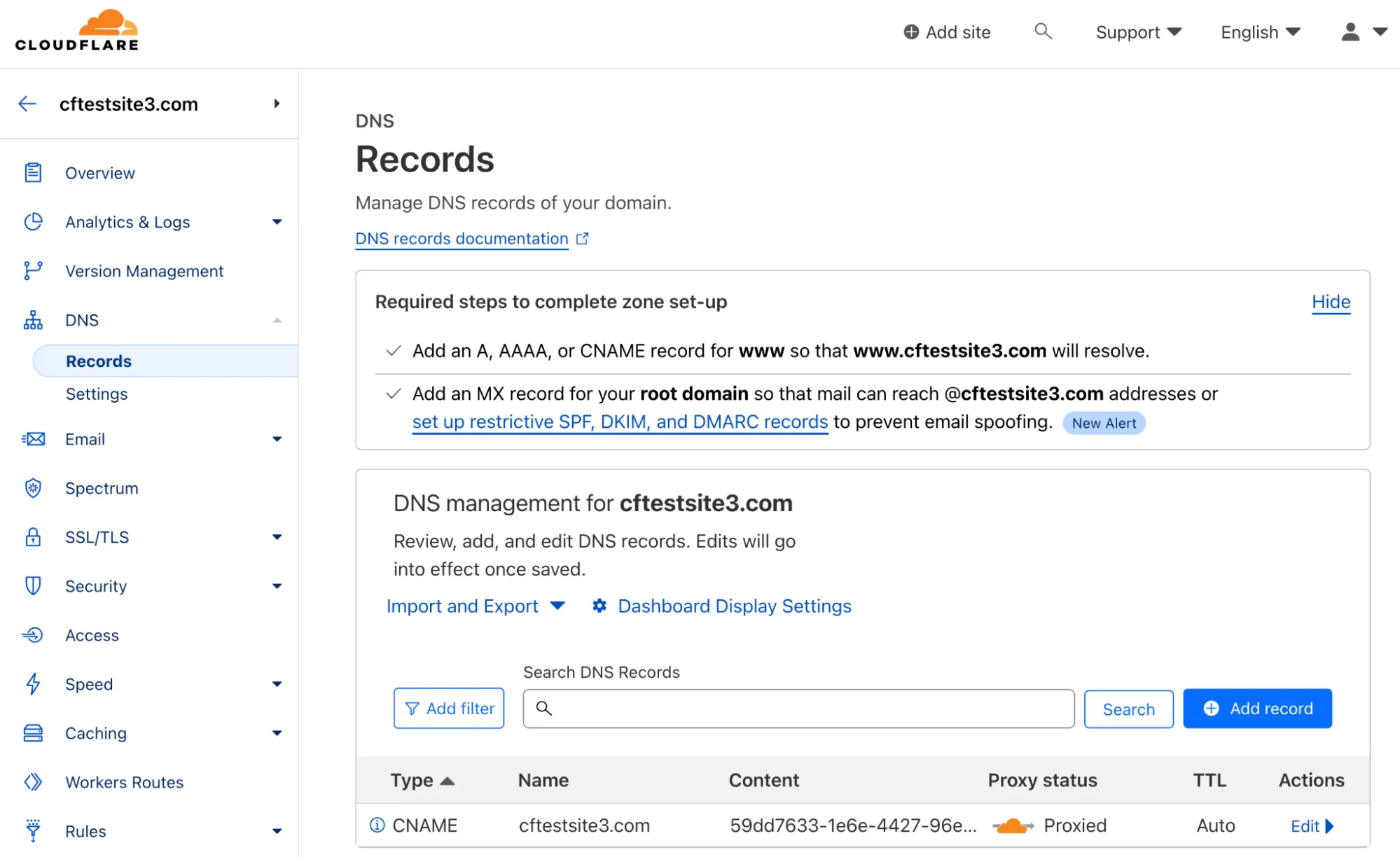

We can also use Cloudflare Tunnel over the Internet to provide for more security and to prevent the need for opening any inbound firewall rules to the origin(s). In this way, instead of an A record in the DNS configuration, we will have a CNAME record pointing to the tunnel we deploy. Here we deploy a tunnel from the origin to the Cloudflare network, and the DNS will automatically be configured. A CNAME record that points to the tunnel will be created; this enforces all traffic going to the origin(s) be routed over the Cloudflare Tunnel.

To create and manage tunnels, you need to install and authenticate cloudflared on your origin server. cloudflared is what connects your server to Cloudflare’s global network.

There are two options for creating a tunnel: via the dashboard or via the command line. We recommend getting started with the dashboard, since it allows you to manage the tunnel from any machine.

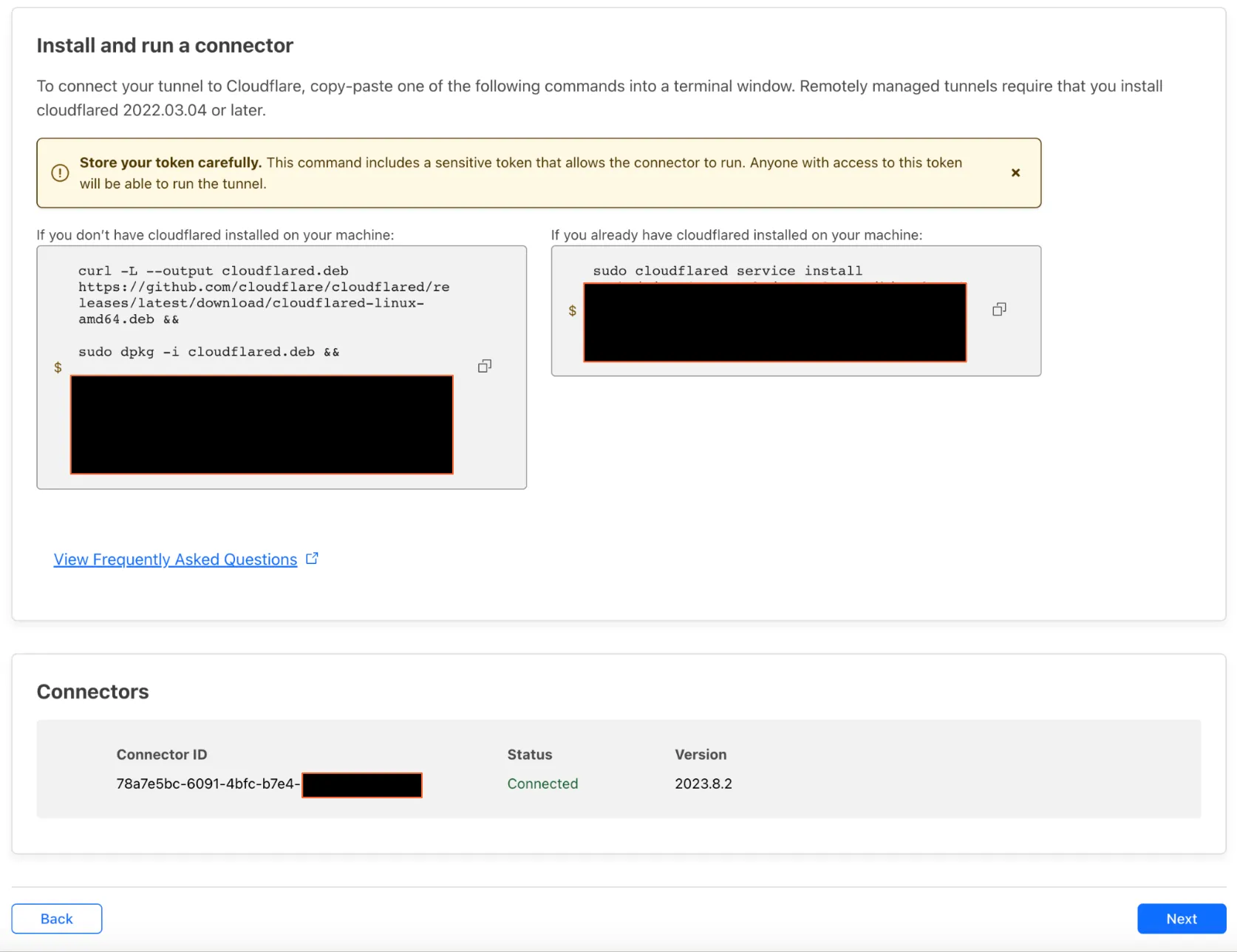

A remotely-managed tunnel only requires the tunnel token to run. Anyone with access to the token will be able to run the tunnel. You can get a tunnel’s token from the dashboard or via the API as shown below. The command provided in the dashboard will install and configure cloudflared to run as a service using an auth token.

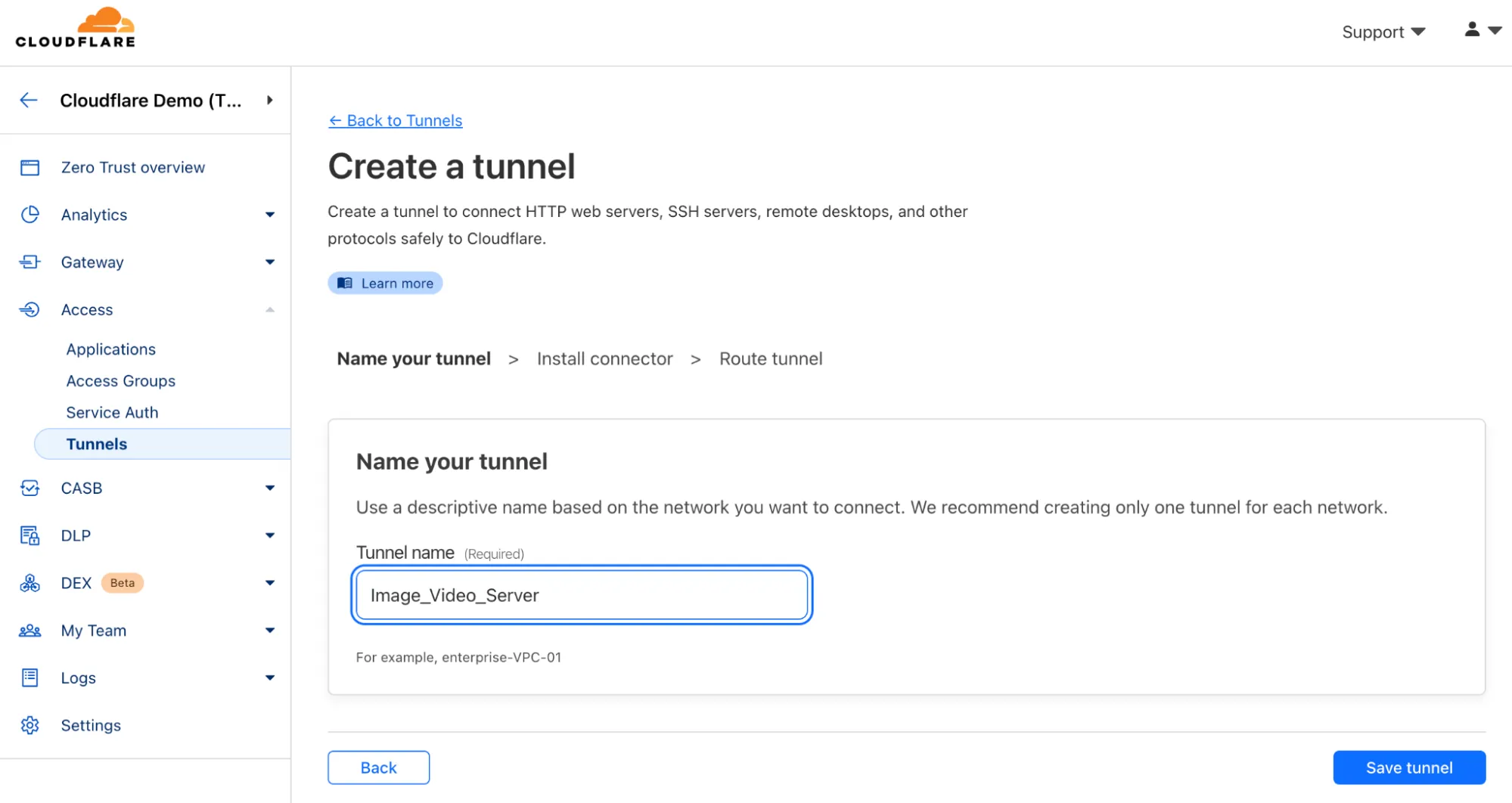
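As a sketch of fetching a tunnel token programmatically, the function below only builds the HTTP request and does not send it. The endpoint path reflects our understanding of the Cloudflare API and should be checked against the current API reference; the function name and the account ID, tunnel ID, and API token values are placeholders.

```python
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"


def build_token_request(account_id, tunnel_id, api_token):
    """Build (but do not send) a request for a tunnel's token."""
    # Assumed endpoint path; verify against the Cloudflare API reference.
    url = f"{API_BASE}/accounts/{account_id}/cfd_tunnel/{tunnel_id}/token"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {api_token}"},
        method="GET",
    )


# Placeholder identifiers for illustration only.
request = build_token_request("ACCOUNT_ID", "TUNNEL_ID", "API_TOKEN")
```

Because anyone holding the token can run the tunnel, the response should be handled like any other secret, for example by passing it to the host through a secrets manager rather than writing it to logs.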

In the Cloudflare dashboard, navigate to Zero Trust > Access > Tunnels. Select the "Create a tunnel" button, name the tunnel, and save.

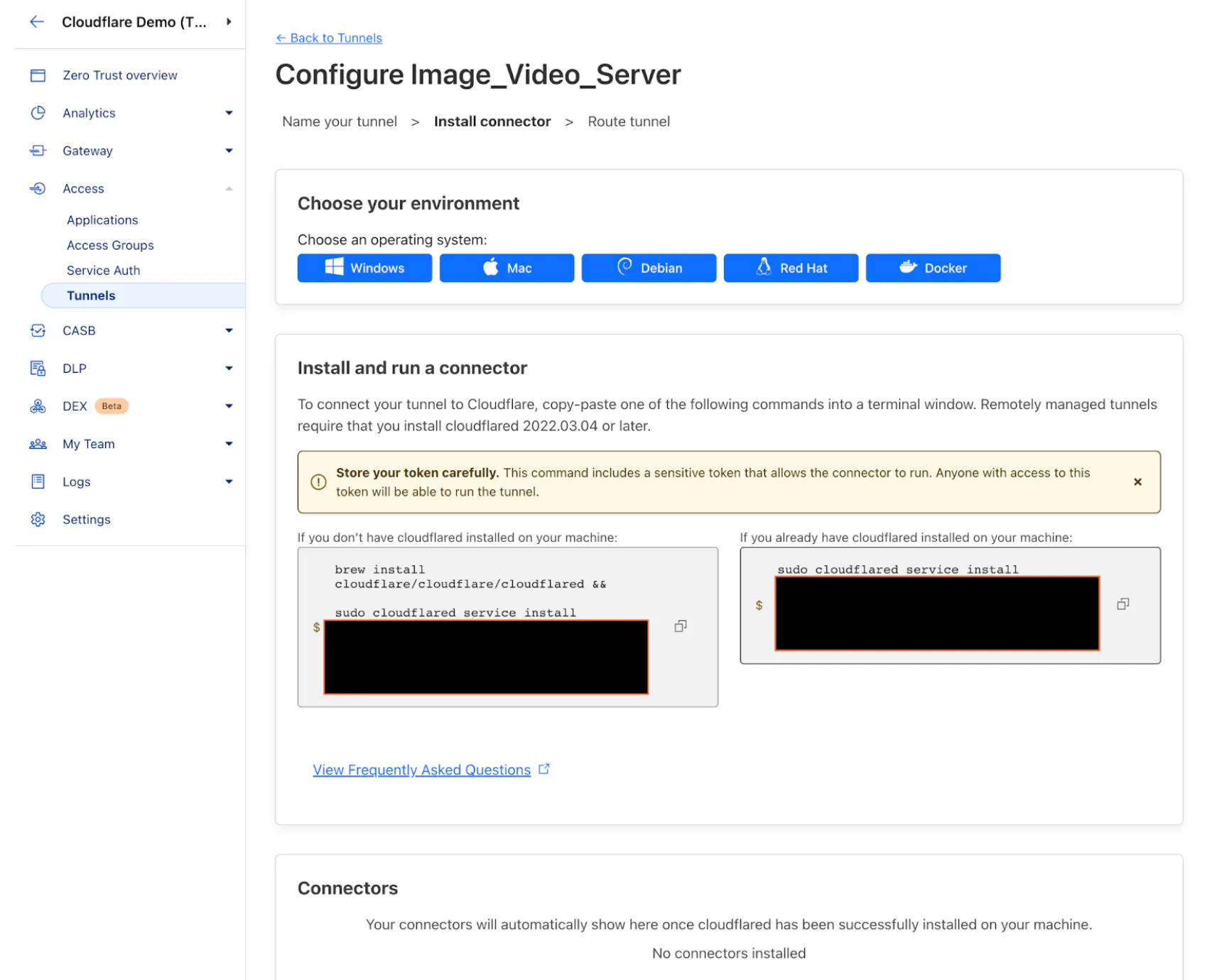

Next, you’ll be presented with a screen where you select the operating system (OS) of your origin server. You will then be provided a CLI command that you can run on your origin that will automatically download and install the Cloudflare Tunnel software.

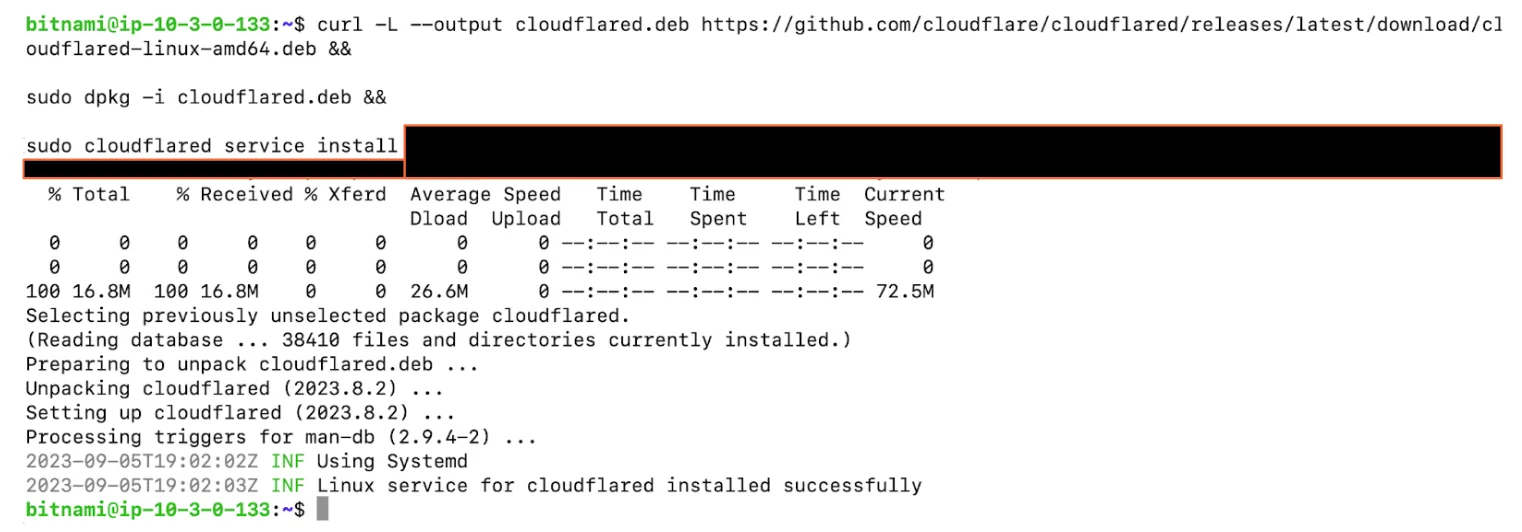

Below, the CLI command has been run to download and install the Cloudflare Tunnel software.

The connector will now automatically be displayed as connected.

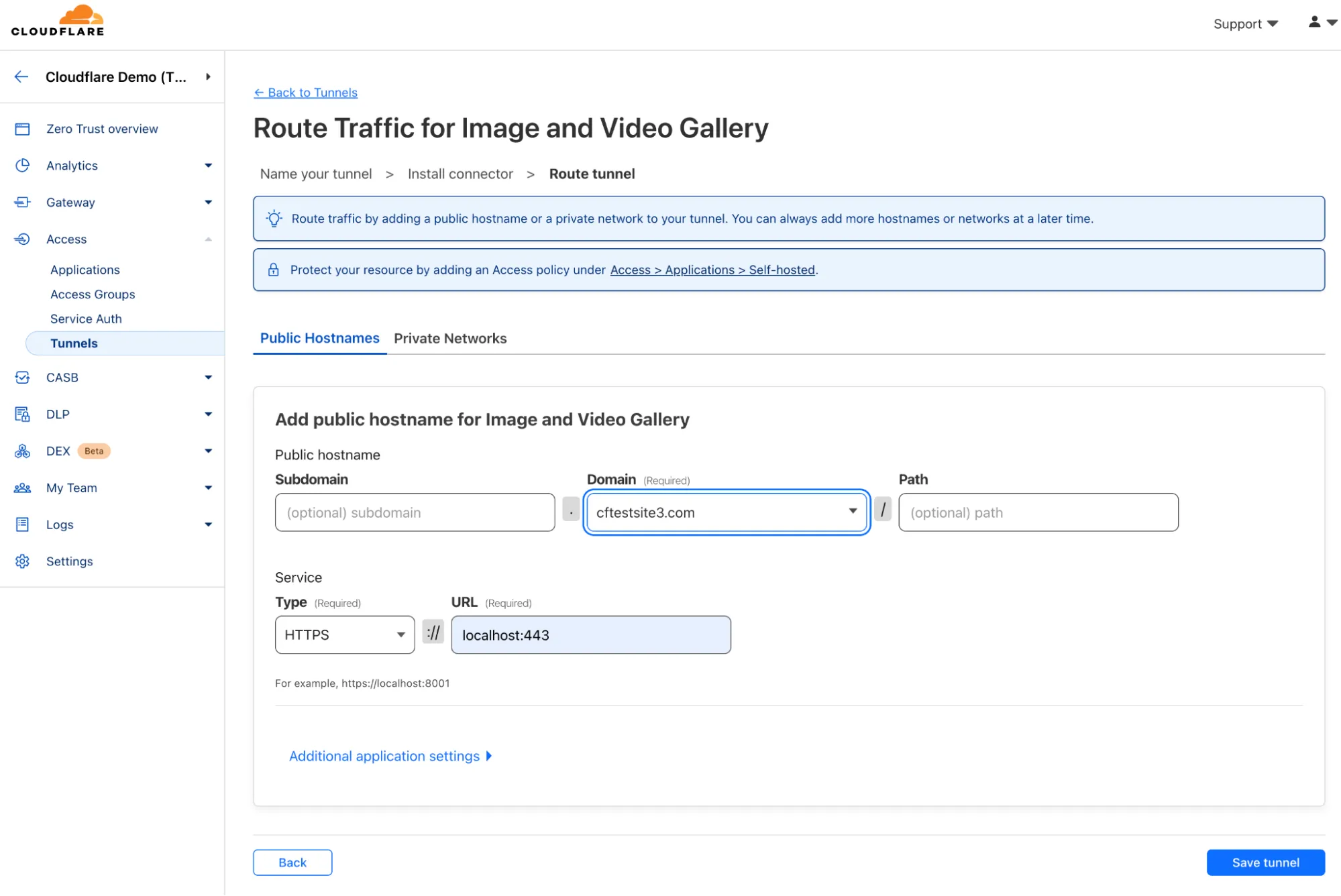

In the dashboard, you can now continue with the next step which is to create the tunnel and map it to a service on the origin as shown below. In this case, all HTTPS traffic will be sent over the tunnel to the origin server.

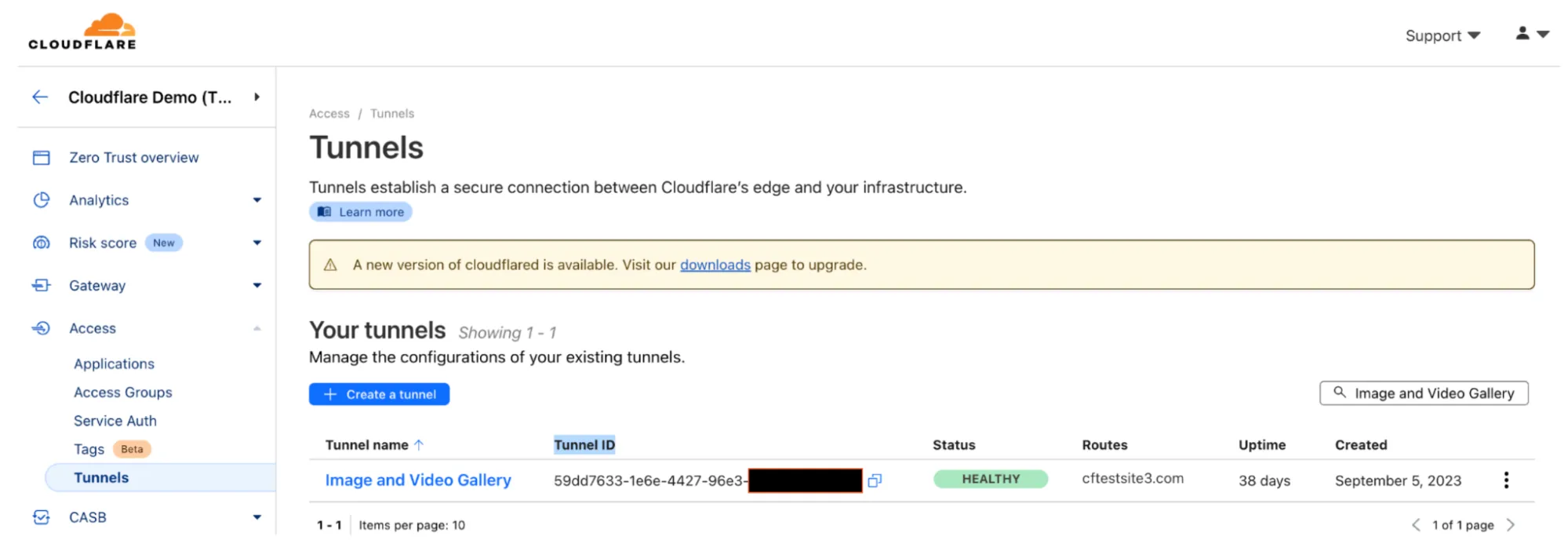
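The hostname-to-service mapping can be thought of as an ordered rule list in which the first matching rule wins and a final catch-all handles everything else. The sketch below models that idea in plain Python; the rule structure loosely mirrors cloudflared ingress rules, but the local service URL and the function name are assumptions made for illustration.

```python
# Hypothetical ingress rules: public hostname -> local service on the origin.
INGRESS_RULES = [
    {"hostname": "cftestsite3.com", "service": "https://localhost:443"},
    {"service": "http_status:404"},  # catch-all rule with no hostname
]


def select_service(hostname, rules):
    """Return the service for the first rule that matches the hostname."""
    for rule in rules:
        # A rule without a hostname matches every request.
        if "hostname" not in rule or rule["hostname"] == hostname:
            return rule["service"]
    raise ValueError("ingress rules must end with a catch-all rule")
```

Requests for `cftestsite3.com` are sent to the HTTPS service on the origin, and any other hostname falls through to the catch-all.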

You can now see in the dashboard that the tunnel has been created and is healthy.

Further, if we look at the DNS configuration, we can see a DNS record was automatically created pointing to the tunnel ID. When you create a tunnel, Cloudflare generates a subdomain of cfargotunnel.com with the UUID of the created tunnel. Unlike publicly routable IP addresses, the subdomain will only proxy traffic for a DNS record in the same Cloudflare account. It’s not possible for another user to create a DNS record in another account or system to proxy traffic over this tunnel.
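To make the record shape concrete, the sketch below derives the CNAME target from a tunnel UUID and assembles the fields of a proxied CNAME record. The tunnel ID used in the example is made up, the function names are hypothetical, and the dictionary keys follow our understanding of Cloudflare's DNS record format rather than a guaranteed schema.

```python
import uuid


def tunnel_cname_target(tunnel_id: str) -> str:
    """Build the cfargotunnel.com hostname for a tunnel UUID."""
    parsed = uuid.UUID(tunnel_id)  # raises ValueError if this is not a UUID
    return f"{parsed}.cfargotunnel.com"


def tunnel_dns_record(hostname: str, tunnel_id: str) -> dict:
    """Describe a proxied CNAME record that points a hostname at a tunnel."""
    return {
        "type": "CNAME",
        "name": hostname,
        "content": tunnel_cname_target(tunnel_id),
        "proxied": True,
    }


# Made-up tunnel ID for illustration.
record = tunnel_dns_record("cftestsite3.com", "6ff42ae2-765d-4adf-8112-31c55c1551ef")
```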

We now have secure application access. Users can only access the application through the tunnel connected to the Cloudflare network. Further, since Tunnel uses outbound connections to Cloudflare and any return traffic from an outbound connection will be allowed, no inbound firewall rule is required, which means less overhead and more operational simplicity.

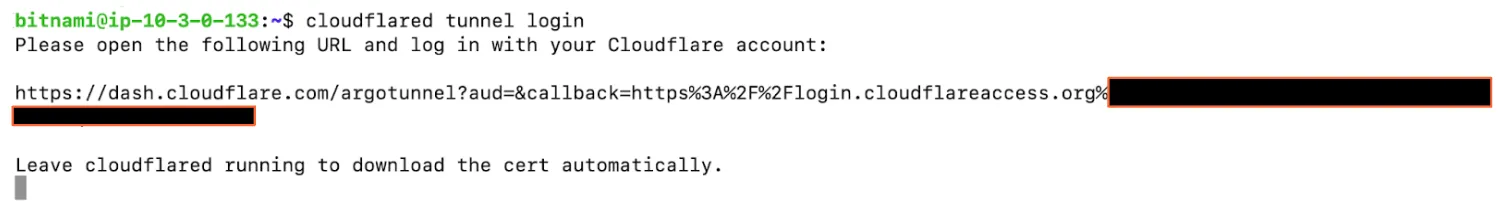

If you were to deploy the tunnel via CLI, after the tunnel install, you would also need to authenticate cloudflared on the origin server. cloudflared is what connects the server to Cloudflare’s global network. This authentication can be done with the cloudflared tunnel login command as shown below.

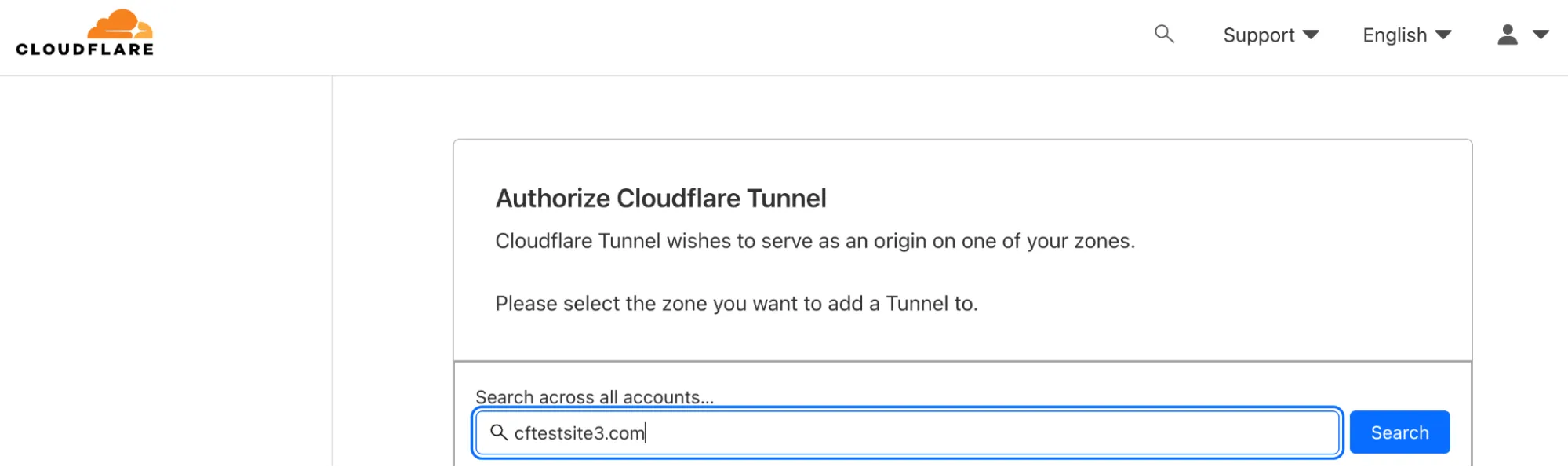

You’ll be asked to select the zone you want to add the tunnel to as shown below.

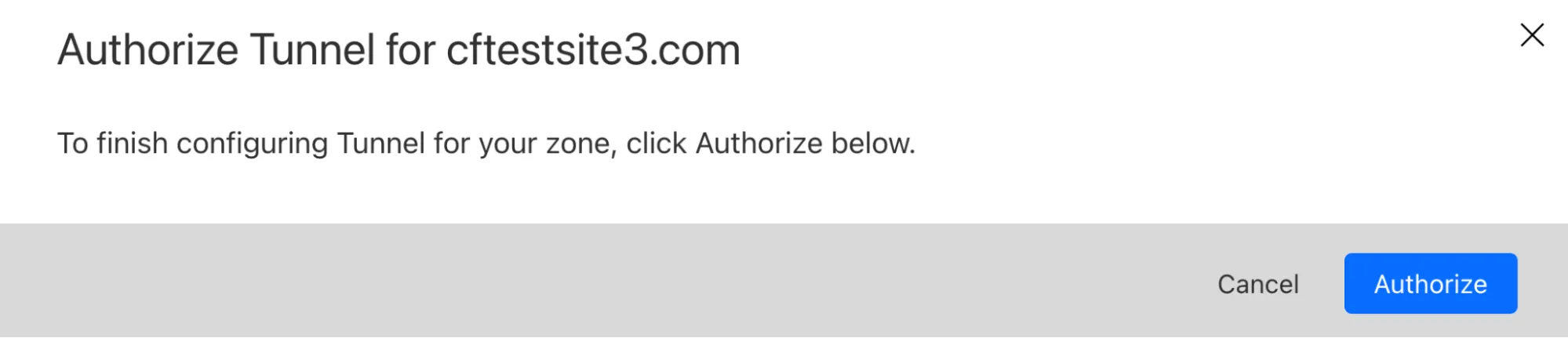

Next, you’ll authorize the tunnel for the zone.

Finally, you should receive confirmation that a certificate has been installed allowing your origin to create a tunnel on the respective zone.

The current setup as described prior in this document is shown below, where the origin server(s) are connected to the Cloudflare network via Tunnel. Now, we can start to consume Cloudflare services.

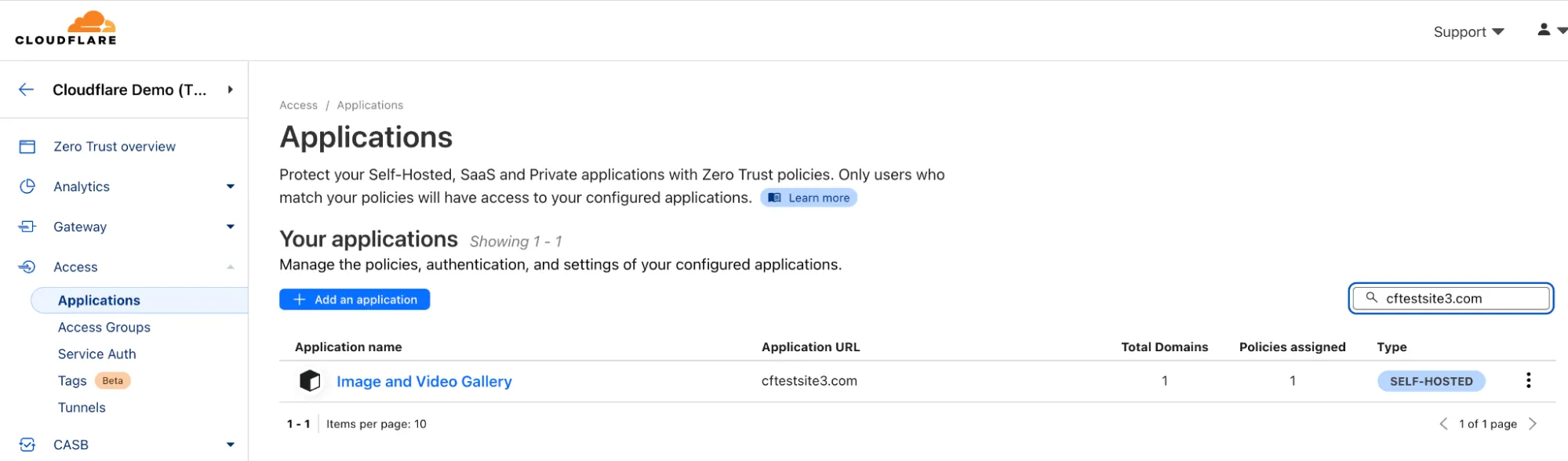

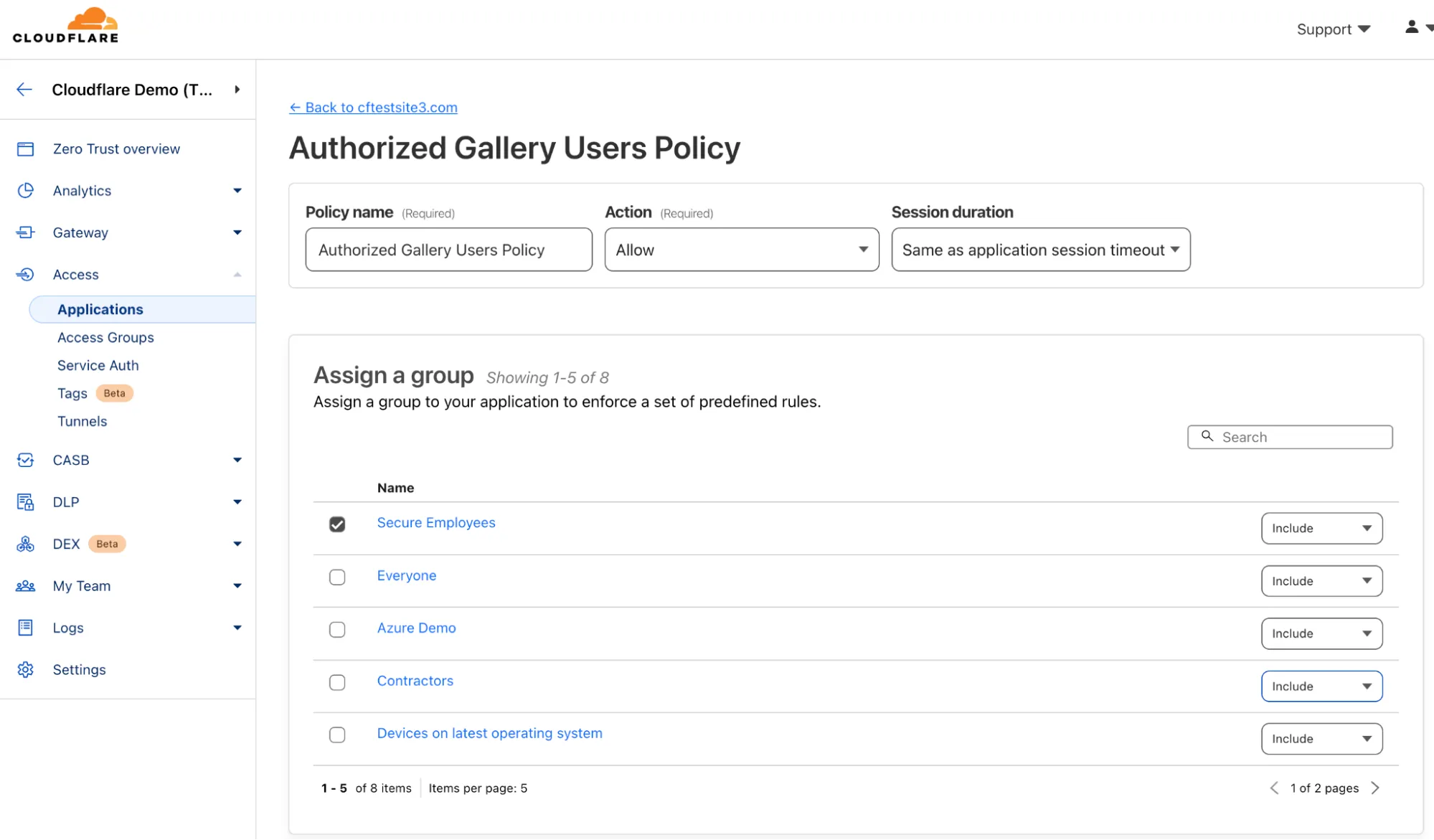

Currently the origin is only accessible via Cloudflare Tunnel. Because a public hostname is used, access to the origin is public. The application is secured behind Cloudflare and protected from DDoS and other types of attacks. For additional security, Cloudflare Access can be used to place a layer of authentication and access controls in front of the tunneled application. Access enforces an authentication step before requests to the origin can be served. Many other identity, device and network attributes can be used in the policy, allowing customers to define access beyond just authentication. For example, customers can define the network the request originates from, as well as ensuring the user device is running the latest operating system.

Below, you can see an application has been created for cftestsite3.com.

Looking at policy configuration below you can see it requires users to be part of the "Secure Employees" Access group.

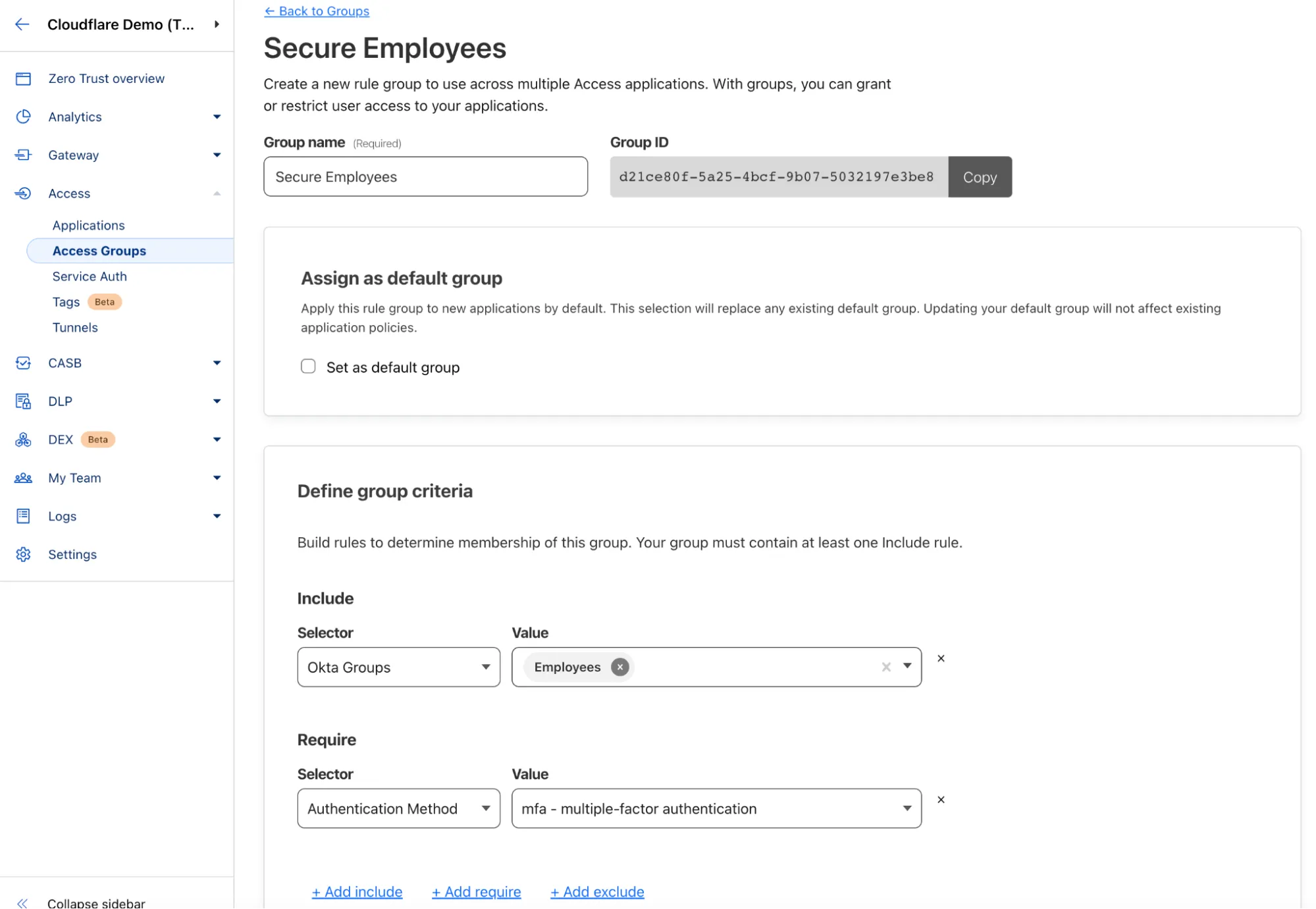

If we take a deeper look at the "Secure Employees" Access group, it can be seen below that members are from the company’s Okta identity provider (IdP) group called "Employees." Further, the Access group is enforcing multi-factor authentication (MFA).

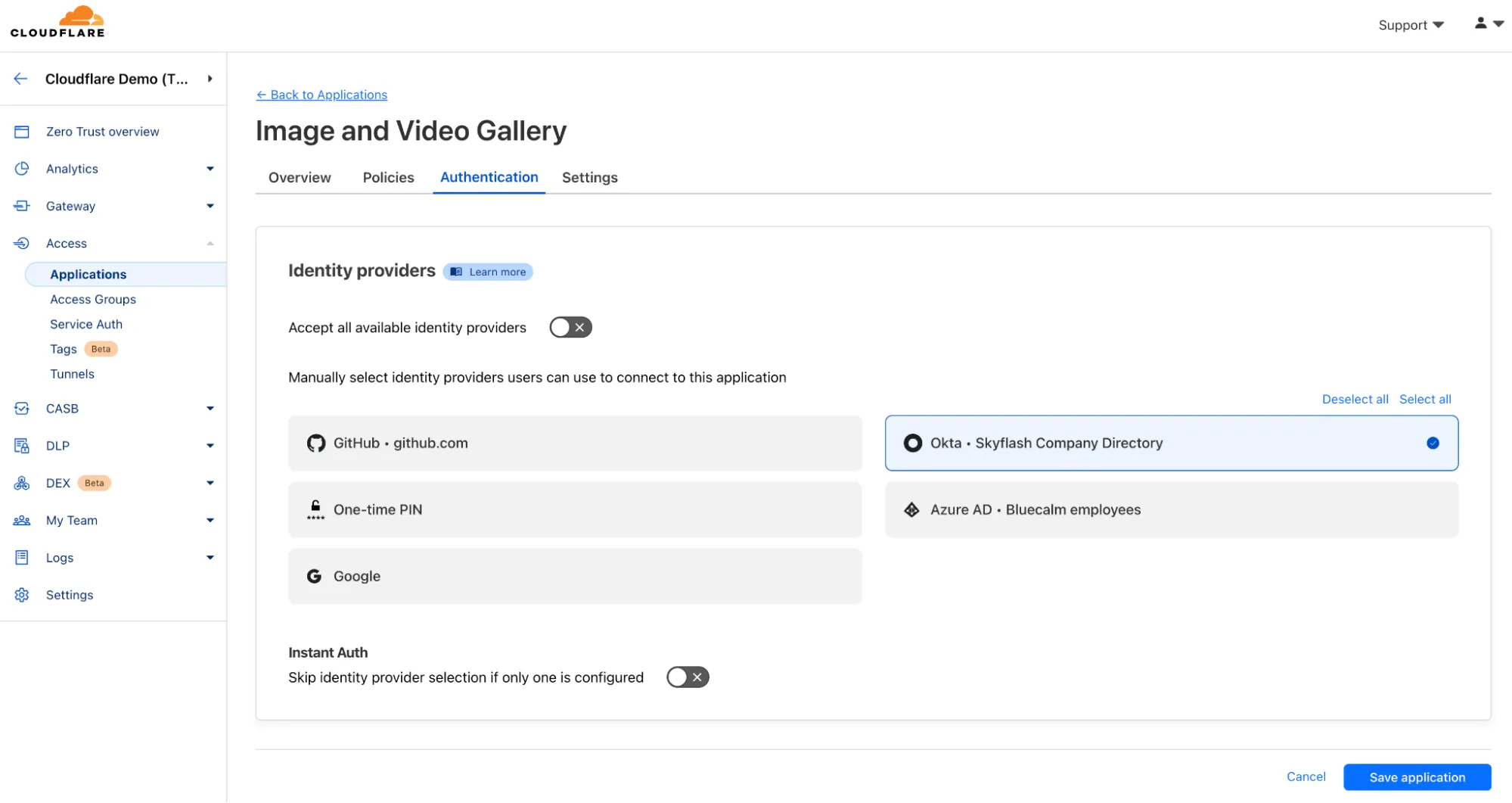
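The logic of the "Secure Employees" group can be summarized as three conditions that must all hold. The sketch below is a simplified model, not how Access evaluates policies internally: the `Identity` class, its fields, and the `"okta"` identifier are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Identity:
    """Hypothetical view of a user after authenticating with an IdP."""
    email: str
    idp: str
    groups: set = field(default_factory=set)
    mfa_passed: bool = False


def secure_employees_allows(identity: Identity) -> bool:
    """Allow only Okta users in the "Employees" group who completed MFA."""
    return (
        identity.idp == "okta"
        and "Employees" in identity.groups
        and identity.mfa_passed
    )
```

A user in the right group who skipped MFA is denied, as is a user who completed MFA but is not in the "Employees" group.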

Looking at the "Image and Video Gallery" application, under "Authentication," customers can also manually select identity providers users can use to connect to this application.

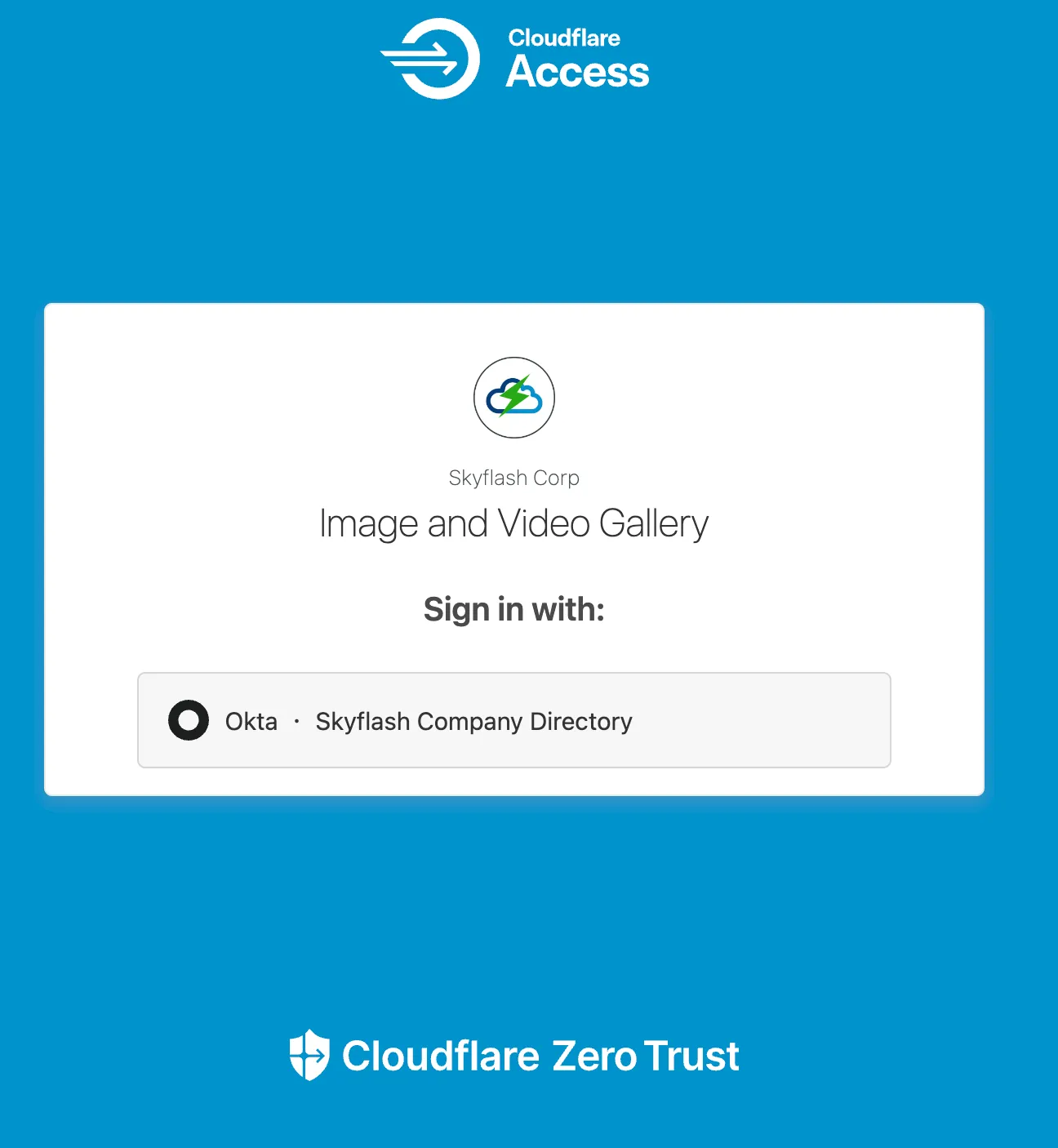

We now have secure application access to the origin(s) via Tunnel and also authentication and access policies to the application via Access. When users try to access the site, they are greeted with a Cloudflare Access page asking them to authenticate with the configured IdP; the page can be customized to the customer’s liking as shown below.

In the current setup, the origin server(s) are securely connected to the Cloudflare network via Cloudflare Tunnel and are protected by Cloudflare Access policies that enforce authentication and other security requirements.

Since Cloudflare is already set up and acting as a reverse proxy for the site, traffic is being directed through Cloudflare, so all Cloudflare services can easily be leveraged including CDN, Security Analytics, WAF, API Gateway, Bot Management, Page Shield for client-side security, etc.

When a DNS lookup request is made by a client for the respective website, in this case "cftestsite3.com," Cloudflare returns an anycast IP address, so all traffic is directed to the closest data center where all services will be applied before the request is forwarded over Cloudflare Tunnel to the origin server(s).

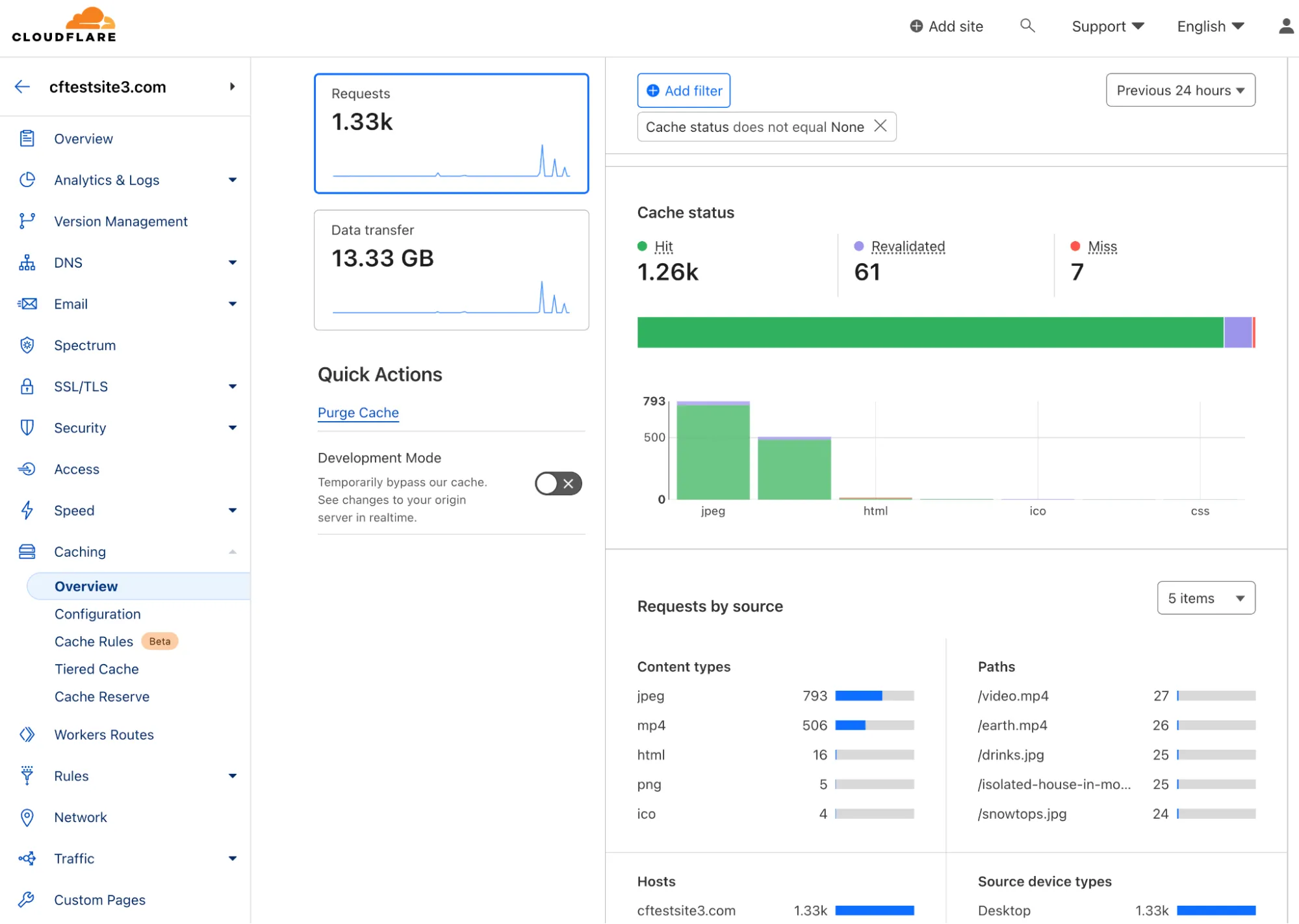

Cloudflare CDN leverages Cloudflare’s global anycast edge network. In addition to using anycast for network performance and resiliency, the Cloudflare CDN leverages Argo Tiered Cache to deliver optimized results while saving costs for customers. Customers can also enable Argo Smart Routing to find the fastest network path to route requests to the origin server. As shown below, the Cloudflare CDN is now caching content globally and granular CDN policies to affect default behavior can be applied.

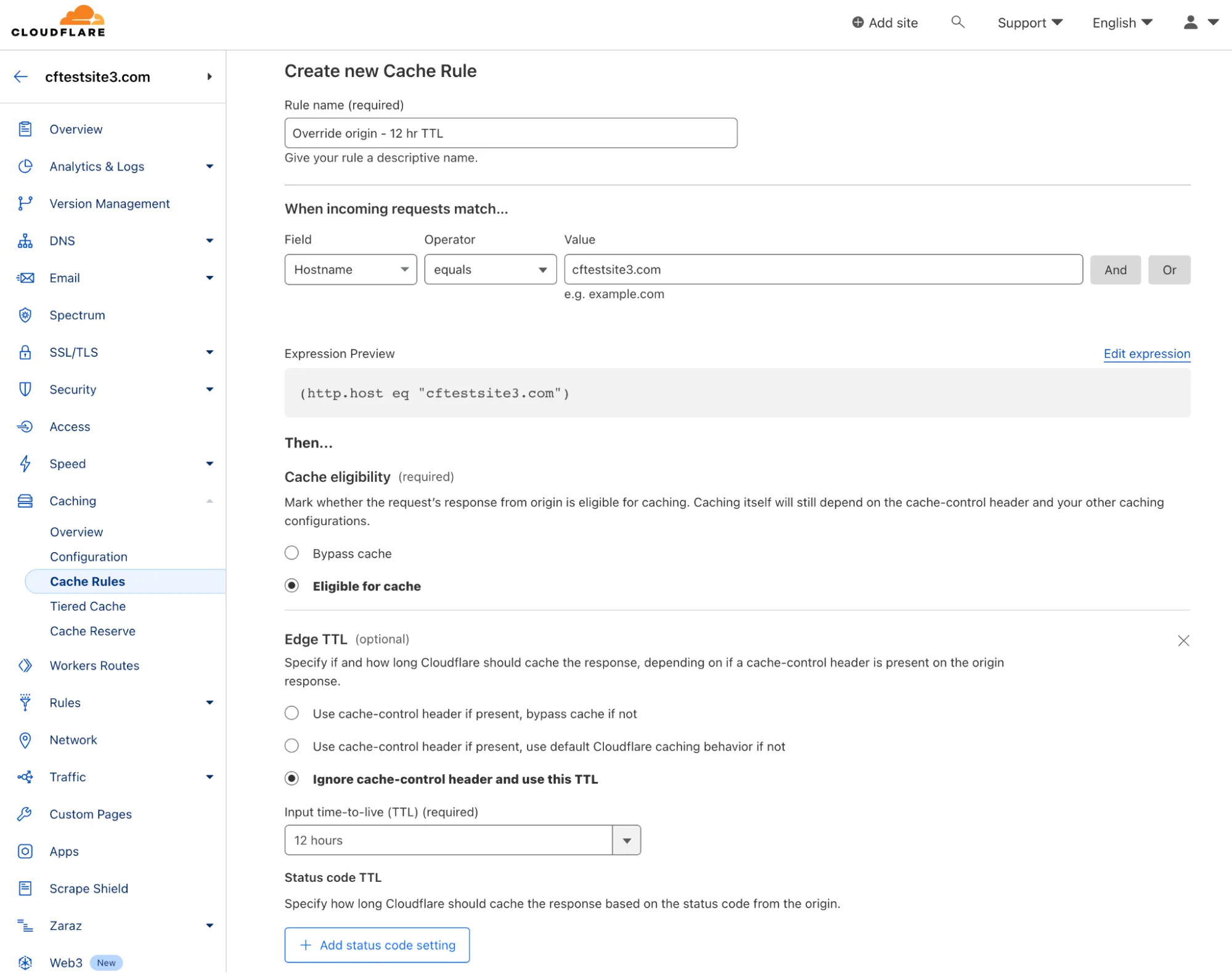

There are different caching topologies and configurations available. Below, you can see a Cache Rule has been configured to cache requests to the domain and override the origin TTL.

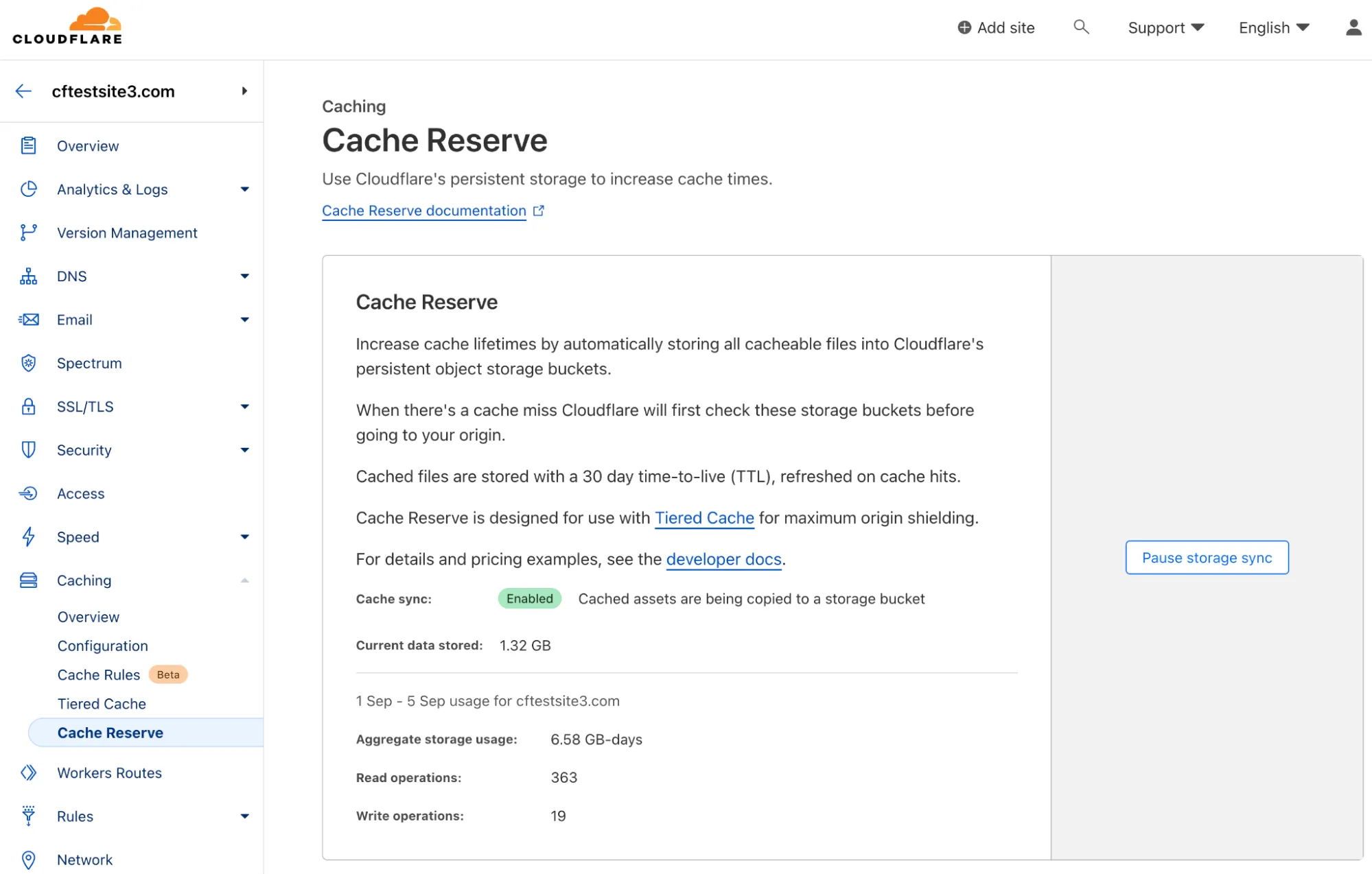
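Overriding the origin TTL amounts to preferring a configured value over whatever the origin's response headers request. The sketch below is a deliberately simplified model that only looks at the `max-age` directive of `Cache-Control`; the function names are hypothetical, and real cache behavior takes more directives and settings into account.

```python
import re


def origin_max_age(cache_control):
    """Extract max-age (in seconds) from a Cache-Control header, if present."""
    match = re.search(r"max-age=(\d+)", cache_control or "")
    return int(match.group(1)) if match else None


def effective_ttl(cache_control, override_ttl=None):
    """Return the TTL to use, preferring an explicit override."""
    if override_ttl is not None:
        # The cache rule wins over whatever the origin asked for.
        return override_ttl
    return origin_max_age(cache_control)
```

With this model, a response carrying `max-age=60` is cached for 60 seconds by default and for 3600 seconds when `override_ttl=3600` is supplied.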

Cloudflare Cache Reserve has also been enabled by clicking the "Enable storage sync" button under "Caching > Cache Reserve" in the dashboard. Cache Reserve leverages Cloudflare’s persistent object storage, R2, to eliminate egress costs from other public cloud providers. It improves cache hit ratios by enabling customers to persistently cache data with the push of a single button.

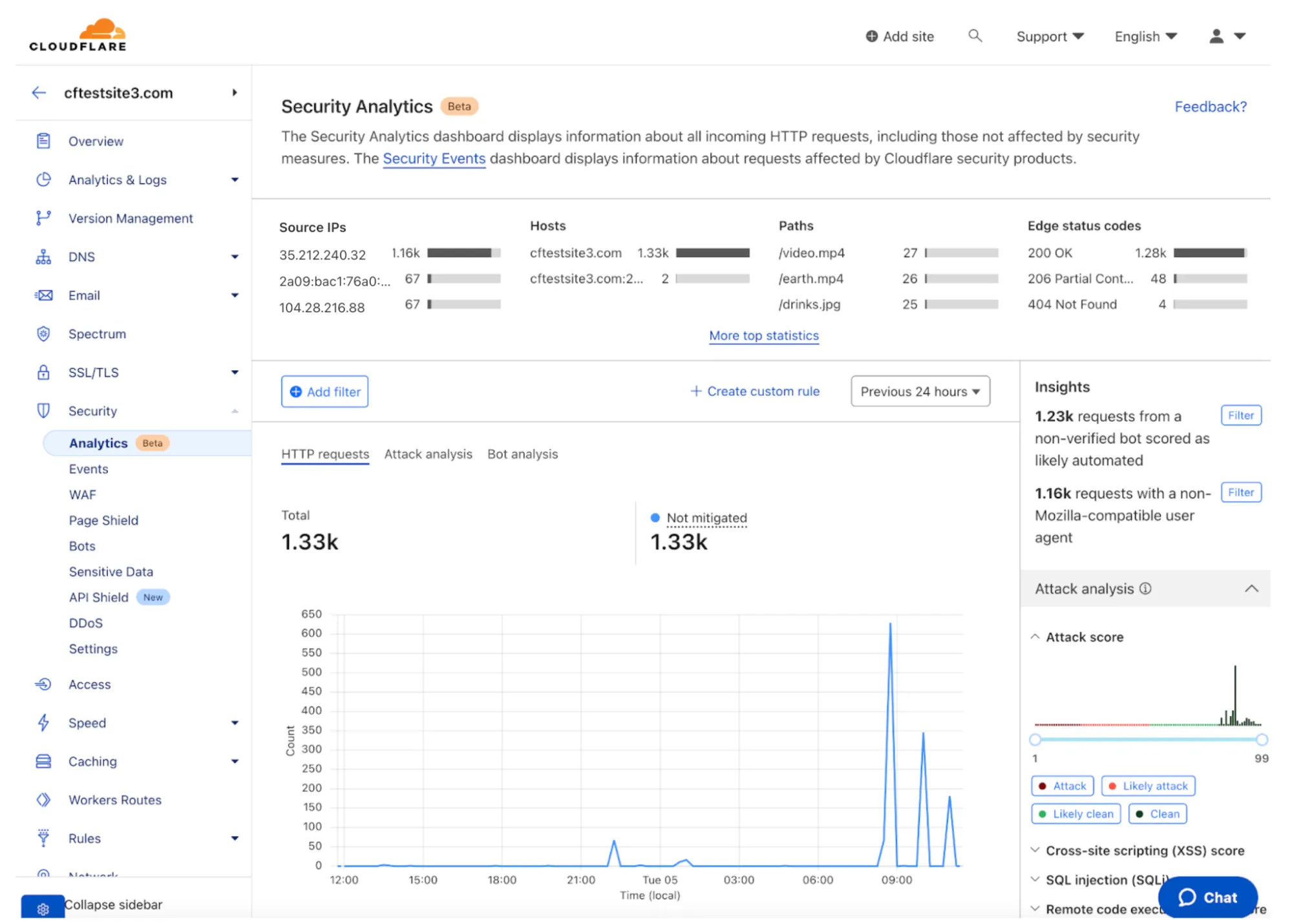

Additionally, as shown below, Cloudflare Security Analytics brings together all of Cloudflare’s detection capabilities and provides a global view and important insights for all traffic going to the respective site. As traffic is being routed through the Cloudflare network, Cloudflare has visibility into threats and insights which are exposed to customers in the dashboard, logs, and reporting.

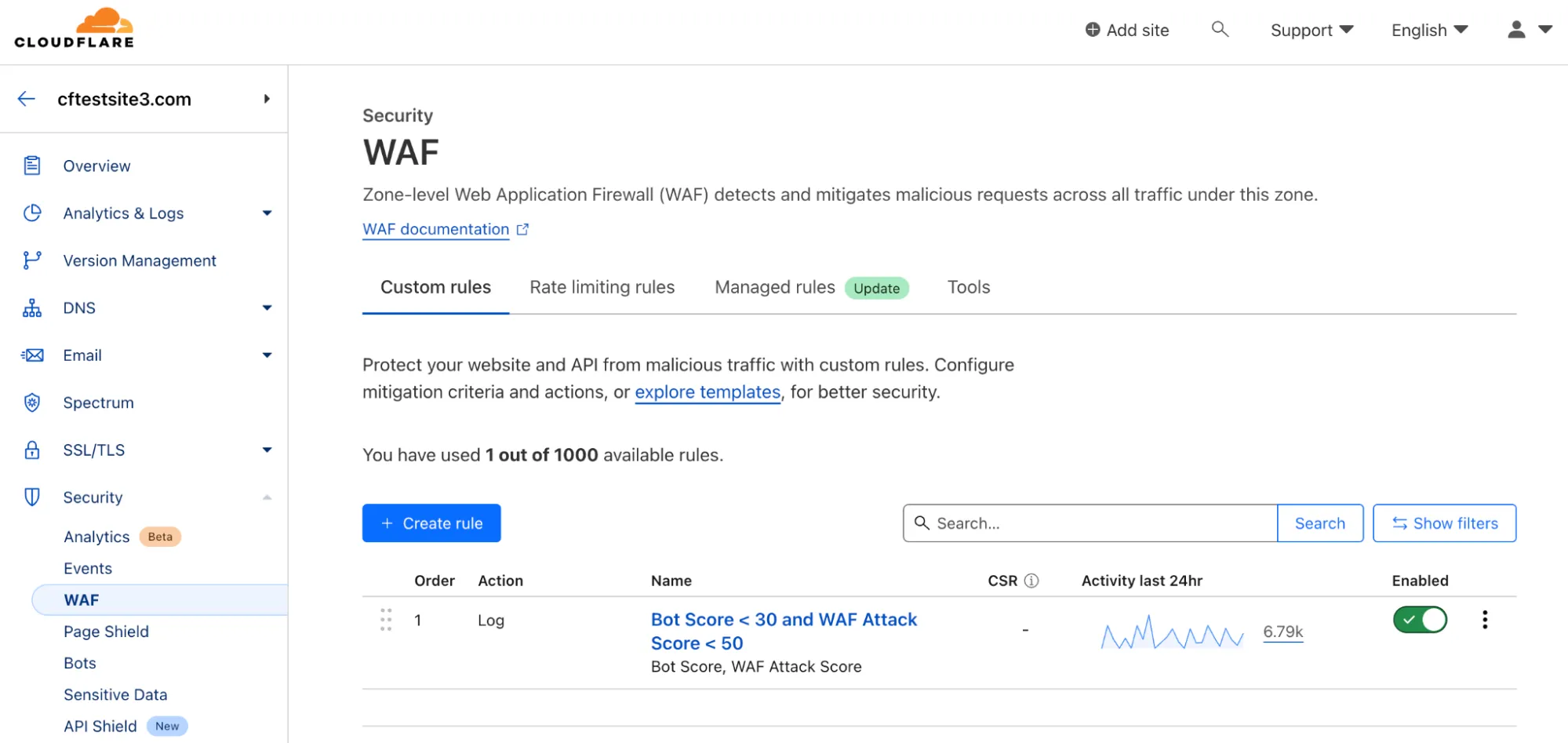

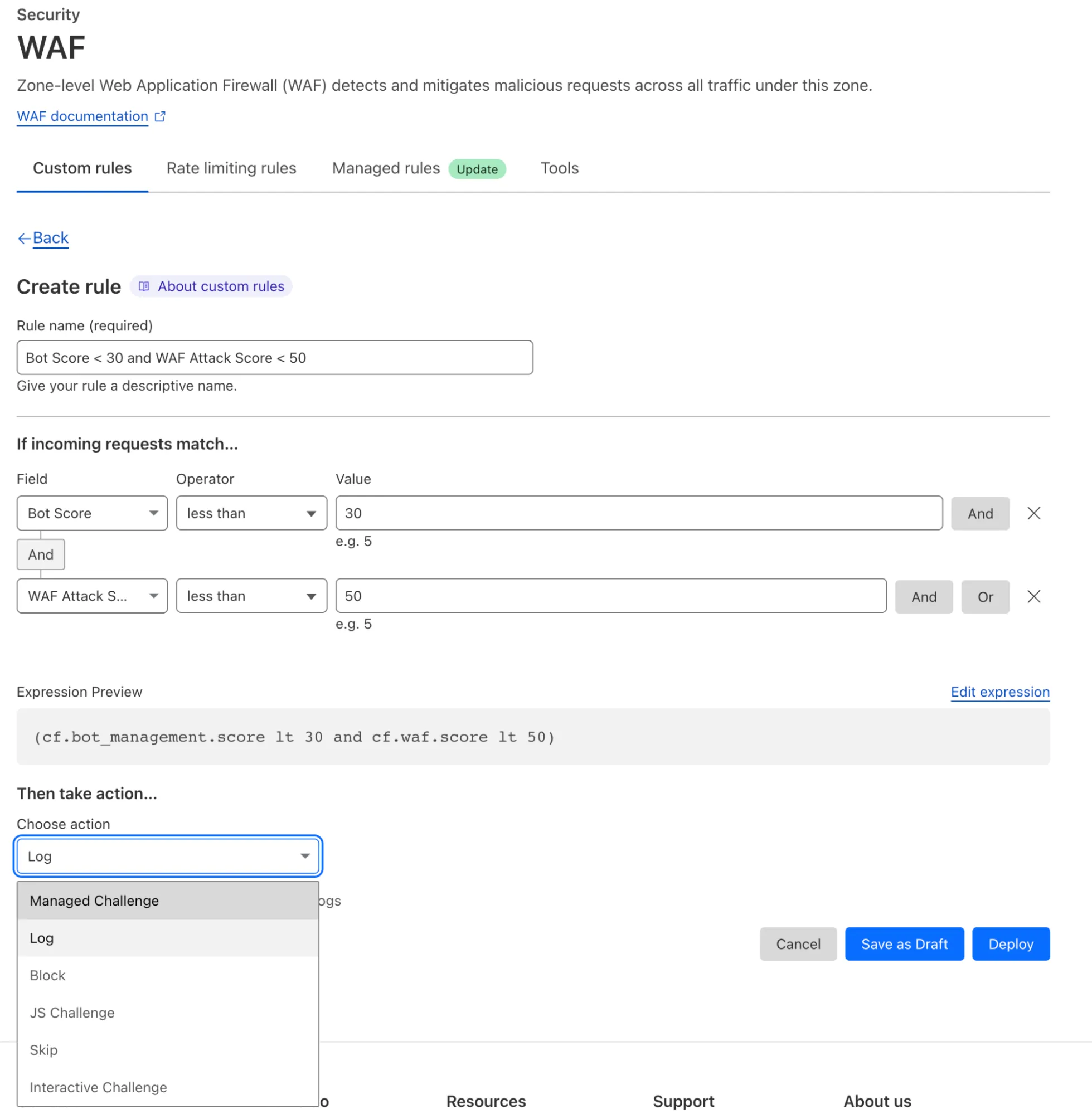

Cloudflare WAF rules can be applied to enforce policies on traffic inline. Below, a firewall policy is in place to log all traffic with a bot score below 30 and a WAF attack score below 50. A bot score below 30 identifies traffic classified as automated or likely automated, and a WAF attack score below 50 identifies traffic classified as malicious or likely malicious.
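The matching condition of such a rule can be expressed as a simple predicate. The sketch below assumes that both thresholds must be met for a request to be logged, which is one reading of the policy described above; the function name is hypothetical, and the expression string uses field names as we understand them from the Cloudflare Rules language, so verify them against the fields reference before use.

```python
BOT_SCORE_THRESHOLD = 30      # below this: automated or likely automated
ATTACK_SCORE_THRESHOLD = 50   # below this: malicious or likely malicious

# Assumed equivalent rule expression; check field names in the documentation.
RULE_EXPRESSION = (
    f"(cf.bot_management.score lt {BOT_SCORE_THRESHOLD} "
    f"and cf.waf.score lt {ATTACK_SCORE_THRESHOLD})"
)


def should_log(bot_score: int, waf_attack_score: int) -> bool:
    """Return True when a request matches both score thresholds."""
    return (
        bot_score < BOT_SCORE_THRESHOLD
        and waf_attack_score < ATTACK_SCORE_THRESHOLD
    )
```

Lower scores indicate more suspicious traffic in both cases, which is why the comparison is "less than" rather than "greater than".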

Cloudflare WAF allows for granular policies that can leverage many different request criteria including header information. Customers can take a variety of actions including logging, blocking, and challenge.

Customers can use WAF to implement and use custom rules, rate limiting rules, and managed rules. A brief description of each is provided below.

- WAF Custom Rules: provide the ability to create custom rules based on different request attributes and header information to block threats.

- WAF Rate Limiting Rules: prevent abuse, DDoS, and brute force attempts, and provide API-centric controls.

- WAF Managed Rules:

  - Cloudflare Managed Ruleset: provides advanced zero-day vulnerability protection.

  - Cloudflare OWASP Core Ruleset: blocks common web application vulnerabilities, some of which are in the OWASP Top 10.

  - Cloudflare Leaked Credential Check: checks exposed credential databases for popular content management system (CMS) applications.
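To illustrate the idea behind the rate limiting rule type listed above, the sketch below implements a generic sliding-window limiter keyed by client. It is a textbook technique shown for intuition only, not a description of how Cloudflare implements rate limiting, and the class and parameter names are hypothetical.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client key -> request timestamps

    def allow(self, client_key, now):
        """Record a request at time `now` and report whether it is allowed."""
        hits = self._hits[client_key]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

Passing the timestamp in explicitly keeps the limiter deterministic and easy to test; a production caller would typically supply `time.monotonic()`.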

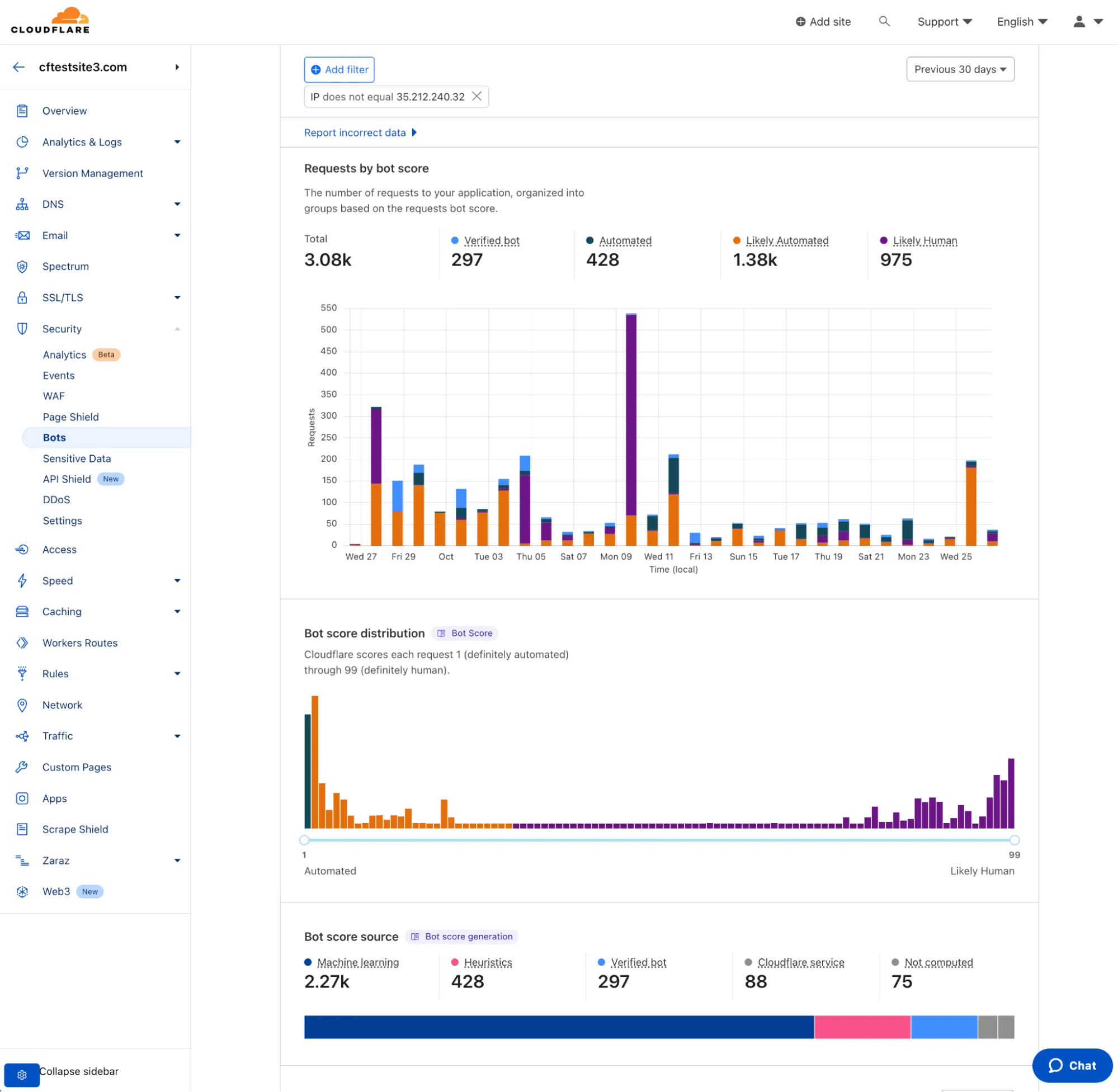

The same methodology applies for all other Cloudflare Application Performance and Security products (API Gateway, Bot Management, etc.): once configured to route traffic through the Cloudflare network, customers can start leveraging the Cloudflare services. Figure 31 displays Cloudflare’s Bot Analytics, which categorizes traffic based on bot score and shows the bot score distribution along with other bot analytics. All of the request data is captured inline and all enforcement based on defined policies is also done inline.

Cloudflare offers comprehensive application performance and security services. Customers can easily onboard and start using all performance and security services by routing traffic to their origin server(s) through Cloudflare’s network. Additionally, Cloudflare offers multiple connectivity options including Cloudflare Tunnel for securely connecting origin server(s) to Cloudflare’s network.