---

title: Overview · Cloudflare Hyperdrive docs

description: Hyperdrive is a service that accelerates queries you make to

existing databases, making it faster to access your data from across the globe

from Cloudflare Workers, irrespective of your users' location.

lastUpdated: 2026-02-06T18:26:52.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/

md: https://developers.cloudflare.com/hyperdrive/index.md

---

Turn your existing regional database into a globally distributed database.

Available on Free and Paid plans

Hyperdrive is a service that accelerates queries you make to existing databases, making it faster to access your data from across the globe from [Cloudflare Workers](https://developers.cloudflare.com/workers/), irrespective of your users' location.

Hyperdrive supports any Postgres or MySQL database, including those hosted on AWS, Google Cloud, Azure, Neon and PlanetScale. Hyperdrive also supports Postgres-compatible databases like CockroachDB and Timescale. You do not need to write new code or replace your favorite tools: Hyperdrive works with your existing code and tools.

Use Hyperdrive's connection string from your Cloudflare Workers application with your existing Postgres or MySQL drivers and object-relational mapping (ORM) libraries:

* PostgreSQL

* index.ts

```ts

import { Client } from "pg";

export default {

async fetch(request, env, ctx): Promise<Response> {

// Create a new client instance for each request. Hyperdrive maintains the

// underlying database connection pool, so creating a new client is fast.

const client = new Client({

connectionString: env.HYPERDRIVE.connectionString,

});

try {

// Connect to the database

await client.connect();

// Sample SQL query

const result = await client.query("SELECT * FROM pg_tables");

return Response.json(result.rows);

} catch (e) {

return Response.json({ error: e instanceof Error ? e.message : e }, { status: 500 });

}

},

} satisfies ExportedHandler<{ HYPERDRIVE: Hyperdrive }>;

```

* wrangler.jsonc

```json

{

"$schema": "node_modules/wrangler/config-schema.json",

"name": "WORKER-NAME",

"main": "src/index.ts",

"compatibility_date": "2025-02-04",

"compatibility_flags": [

"nodejs_compat"

],

"observability": {

"enabled": true

},

"hyperdrive": [

{

"binding": "HYPERDRIVE",

"id": "",

"localConnectionString": ""

}

]

}

```

* MySQL

* index.ts

```ts

import { createConnection } from 'mysql2/promise';

export default {

async fetch(request, env, ctx): Promise<Response> {

// Create a new connection on each request. Hyperdrive maintains the

// underlying database connection pool, so creating a new client is fast.

const connection = await createConnection({

host: env.HYPERDRIVE.host,

user: env.HYPERDRIVE.user,

password: env.HYPERDRIVE.password,

database: env.HYPERDRIVE.database,

port: env.HYPERDRIVE.port,

// This is needed to use mysql2 with Workers

// This configures mysql2 to use static parsing instead of eval() parsing (not available on Workers)

disableEval: true

});

const [results, fields] = await connection.query('SHOW tables;');

return new Response(JSON.stringify({ results, fields }), {

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*',

},

});

},

} satisfies ExportedHandler<{ HYPERDRIVE: Hyperdrive }>;

```

* wrangler.jsonc

```json

{

"$schema": "node_modules/wrangler/config-schema.json",

"name": "WORKER-NAME",

"main": "src/index.ts",

"compatibility_date": "2025-02-04",

"compatibility_flags": [

"nodejs_compat"

],

"observability": {

"enabled": true

},

"hyperdrive": [

{

"binding": "HYPERDRIVE",

"id": "",

"localConnectionString": ""

}

]

}

```

[Get started](https://developers.cloudflare.com/hyperdrive/get-started/)

***

## Features

### Connect your database

Connect Hyperdrive to your existing database and deploy a [Worker](https://developers.cloudflare.com/workers/) that queries it.

[Connect Hyperdrive to your database](https://developers.cloudflare.com/hyperdrive/get-started/)

### PostgreSQL support

Hyperdrive allows you to connect to any PostgreSQL or PostgreSQL-compatible database.

[Connect Hyperdrive to your PostgreSQL database](https://developers.cloudflare.com/hyperdrive/examples/connect-to-postgres/)

### MySQL support

Hyperdrive allows you to connect to any MySQL database.

[Connect Hyperdrive to your MySQL database](https://developers.cloudflare.com/hyperdrive/examples/connect-to-mysql/)

### Query Caching

Default-on caching for your most popular queries executed against your database.

[Learn about Query Caching](https://developers.cloudflare.com/hyperdrive/concepts/query-caching/)

***

## Related products

**[Workers](https://developers.cloudflare.com/workers/)**

Build serverless applications and deploy instantly across the globe for exceptional performance, reliability, and scale.

**[Pages](https://developers.cloudflare.com/pages/)**

Deploy dynamic front-end applications in record time.

***

## More resources

[Pricing](https://developers.cloudflare.com/hyperdrive/platform/pricing/)

Learn about Hyperdrive's pricing.

[Limits](https://developers.cloudflare.com/hyperdrive/platform/limits/)

Learn about Hyperdrive limits.

[Storage options](https://developers.cloudflare.com/workers/platform/storage-options/)

Learn more about the storage and database options you can build on with Workers.

[Developer Discord](https://discord.cloudflare.com)

Connect with the Workers community on Discord to ask questions, show what you are building, and discuss the platform with other developers.

[@CloudflareDev](https://x.com/cloudflaredev)

Follow @CloudflareDev on Twitter to learn about product announcements and what is new on the Cloudflare Developer Platform.

---

title: Concepts · Cloudflare Hyperdrive docs

description: Learn about the core concepts and architecture behind Hyperdrive.

lastUpdated: 2025-11-12T15:17:36.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/hyperdrive/concepts/

md: https://developers.cloudflare.com/hyperdrive/concepts/index.md

---

Learn about the core concepts and architecture behind Hyperdrive.

---

title: Configuration · Cloudflare Hyperdrive docs

lastUpdated: 2024-09-06T08:27:36.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/hyperdrive/configuration/

md: https://developers.cloudflare.com/hyperdrive/configuration/index.md

---

* [Connect to a private database using Tunnel](https://developers.cloudflare.com/hyperdrive/configuration/connect-to-private-database/)

* [Local development](https://developers.cloudflare.com/hyperdrive/configuration/local-development/)

* [SSL/TLS certificates](https://developers.cloudflare.com/hyperdrive/configuration/tls-ssl-certificates-for-hyperdrive/)

* [Firewall and networking configuration](https://developers.cloudflare.com/hyperdrive/configuration/firewall-and-networking-configuration/)

* [Tune connection pooling](https://developers.cloudflare.com/hyperdrive/configuration/tune-connection-pool/)

* [Rotating database credentials](https://developers.cloudflare.com/hyperdrive/configuration/rotate-credentials/)

---

title: Demos and architectures · Cloudflare Hyperdrive docs

description: Learn how you can use Hyperdrive within your existing application

and architecture.

lastUpdated: 2025-10-13T13:40:40.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/demos/

md: https://developers.cloudflare.com/hyperdrive/demos/index.md

---

Learn how you can use Hyperdrive within your existing application and architecture.

## Demos

Explore the following demo applications for Hyperdrive.

* [Hyperdrive demo:](https://github.com/cloudflare/hyperdrive-demo) A Remix app that connects to a database behind Cloudflare's Hyperdrive, making regional databases feel like they're globally distributed.

## Reference architectures

Explore the following reference architectures that use Hyperdrive:

[Serverless global APIs](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/serverless-global-apis/)

[An example architecture of a serverless API on Cloudflare that illustrates how different compute and data products can interact with each other.](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/serverless-global-apis/)

---

title: Examples · Cloudflare Hyperdrive docs

lastUpdated: 2025-08-18T14:27:42.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/hyperdrive/examples/

md: https://developers.cloudflare.com/hyperdrive/examples/index.md

---

* [Connect to PostgreSQL](https://developers.cloudflare.com/hyperdrive/examples/connect-to-postgres/)

* [Connect to MySQL](https://developers.cloudflare.com/hyperdrive/examples/connect-to-mysql/)

---

title: Getting started · Cloudflare Hyperdrive docs

description: Hyperdrive accelerates access to your existing databases from

Cloudflare Workers, making even single-region databases feel globally

distributed.

lastUpdated: 2026-02-06T18:26:52.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/get-started/

md: https://developers.cloudflare.com/hyperdrive/get-started/index.md

---

Hyperdrive accelerates access to your existing databases from Cloudflare Workers, making even single-region databases feel globally distributed.

By maintaining a connection pool to your database within Cloudflare's network, Hyperdrive removes up to seven round-trips to your database that would otherwise be needed before you can even send a query: the TCP handshake (1x), TLS negotiation (3x), and database authentication (3x).
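As a rough illustration of what those round-trips cost, the sketch below multiplies them by an assumed network round-trip time. The helper name and the 50 ms figure are hypothetical; only the round-trip counts come from the breakdown above.

```typescript
// Round-trips needed to open a fresh database connection, per the breakdown above.
const TCP_HANDSHAKE_ROUND_TRIPS = 1;
const TLS_NEGOTIATION_ROUND_TRIPS = 3;
const DB_AUTH_ROUND_TRIPS = 3;

// Hypothetical helper: estimated time spent on connection setup before the
// first query can be sent, for a given round-trip time in milliseconds.
function connectionSetupOverheadMs(roundTripMs: number): number {
  const roundTrips =
    TCP_HANDSHAKE_ROUND_TRIPS + TLS_NEGOTIATION_ROUND_TRIPS + DB_AUTH_ROUND_TRIPS;
  return roundTrips * roundTripMs;
}

// With an assumed 50 ms round-trip, setup alone costs 7 * 50 = 350 ms.
console.log(connectionSetupOverheadMs(50));
```

Actual savings depend on where the Worker and the database are located, so treat the result as an order-of-magnitude estimate.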

Hyperdrive understands the difference between read and write queries to your database, and caches the most common read queries, improving performance and reducing load on your origin database.

This guide walks you through:

* Creating your first Hyperdrive configuration.

* Creating a [Cloudflare Worker](https://developers.cloudflare.com/workers/) and binding it to your Hyperdrive configuration.

* Establishing a database connection from your Worker to a public database.

Note

Hyperdrive currently works with PostgreSQL, MySQL and many compatible databases. This includes CockroachDB and Materialize (which are PostgreSQL-compatible), and PlanetScale.

Learn more about the [databases that Hyperdrive supports](https://developers.cloudflare.com/hyperdrive/reference/supported-databases-and-features).

## Prerequisites

Before you begin, ensure you have completed the following:

1. Sign up for a [Cloudflare account](https://dash.cloudflare.com/sign-up/workers-and-pages) if you have not already.

2. Install [`Node.js`](https://docs.npmjs.com/downloading-and-installing-node-js-and-npm). Use a Node version manager like [nvm](https://github.com/nvm-sh/nvm) or [Volta](https://volta.sh/) to avoid permission issues and change Node.js versions. [Wrangler](https://developers.cloudflare.com/workers/wrangler/install-and-update/) requires a Node version of `16.17.0` or later.

3. Have a publicly accessible PostgreSQL or MySQL (or compatible) database. *If your database is in a private network (like a VPC)*, refer to [Connect to a private database](https://developers.cloudflare.com/hyperdrive/configuration/connect-to-private-database/) for instructions on using Cloudflare Tunnel with Hyperdrive.

## 1. Log in

Before creating your Hyperdrive binding, log in with your Cloudflare account by running:

```sh

npx wrangler login

```

You will be directed to a web page asking you to log in to the Cloudflare dashboard. After you have logged in, you will be asked if Wrangler can make changes to your Cloudflare account. Scroll down and select **Allow** to continue.

## 2. Create a Worker

New to Workers?

Refer to [How Workers works](https://developers.cloudflare.com/workers/reference/how-workers-works/) to learn how the Workers serverless execution model works. Go to the [Workers Get started guide](https://developers.cloudflare.com/workers/get-started/guide/) to set up your first Worker.

Create a new project named `hyperdrive-tutorial` by running:

* npm

```sh

npm create cloudflare@latest -- hyperdrive-tutorial

```

* yarn

```sh

yarn create cloudflare hyperdrive-tutorial

```

* pnpm

```sh

pnpm create cloudflare@latest hyperdrive-tutorial

```

For setup, select the following options:

* For *What would you like to start with?*, choose `Hello World example`.

* For *Which template would you like to use?*, choose `Worker only`.

* For *Which language do you want to use?*, choose `TypeScript`.

* For *Do you want to use git for version control?*, choose `Yes`.

* For *Do you want to deploy your application?*, choose `No` (we will be making some changes before deploying).

This will create a new `hyperdrive-tutorial` directory. Your new `hyperdrive-tutorial` directory will include:

* A `"Hello World"` [Worker](https://developers.cloudflare.com/workers/get-started/guide/#3-write-code) at `src/index.ts`.

* A [`wrangler.jsonc`](https://developers.cloudflare.com/workers/wrangler/configuration/) configuration file. `wrangler.jsonc` is how your `hyperdrive-tutorial` Worker will connect to Hyperdrive.

### Enable Node.js compatibility

[Node.js compatibility](https://developers.cloudflare.com/workers/runtime-apis/nodejs/) is required for database drivers, and needs to be configured for your Workers project.

To enable both built-in runtime APIs and polyfills for your Worker or Pages project, add the [`nodejs_compat`](https://developers.cloudflare.com/workers/configuration/compatibility-flags/#nodejs-compatibility-flag) [compatibility flag](https://developers.cloudflare.com/workers/configuration/compatibility-flags/#nodejs-compatibility-flag) to your [Wrangler configuration file](https://developers.cloudflare.com/workers/wrangler/configuration/), and set your compatibility date to September 23rd, 2024 or later. This will enable [Node.js compatibility](https://developers.cloudflare.com/workers/runtime-apis/nodejs/) for your Workers project.

* wrangler.jsonc

```jsonc

{

"compatibility_flags": [

"nodejs_compat"

],

// Set this to today's date

"compatibility_date": "2026-02-26"

}

```

* wrangler.toml

```toml

compatibility_flags = [ "nodejs_compat" ]

# Set this to today's date

compatibility_date = "2026-02-26"

```
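If you want to guard against an outdated compatibility date, a check along these lines is one option. It is a minimal sketch: the helper name is hypothetical, and the only fact taken from this guide is the September 23rd, 2024 threshold.

```typescript
// Earliest compatibility date named above for nodejs_compat.
const MIN_NODEJS_COMPAT_DATE = "2024-09-23";

// ISO dates (YYYY-MM-DD) sort lexicographically in chronological order,
// so plain string comparison is enough here.
function meetsNodejsCompatDate(compatibilityDate: string): boolean {
  if (!/^\d{4}-\d{2}-\d{2}$/.test(compatibilityDate)) {
    throw new Error(`Expected YYYY-MM-DD, got "${compatibilityDate}"`);
  }
  return compatibilityDate >= MIN_NODEJS_COMPAT_DATE;
}

console.log(meetsNodejsCompatDate("2026-02-26")); // true
console.log(meetsNodejsCompatDate("2024-09-22")); // false
```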

## 3. Connect Hyperdrive to a database

Hyperdrive works by connecting to your database, pooling database connections globally, and speeding up your database access through Cloudflare's network.

Hyperdrive provides a secure connection string, accessible only from your Worker, that you use to connect to your database through Hyperdrive. This means that you can use the Hyperdrive connection string with your existing drivers or ORM libraries without needing significant changes to your code.

To create your first Hyperdrive database configuration, change into the directory you just created for your Workers project:

```sh

cd hyperdrive-tutorial

```

To create your first Hyperdrive, you will need:

* The IP address (or hostname) and port of your database.

* The database username (for example, `hyperdrive-demo`).

* The password associated with that username.

* The name of the database you want Hyperdrive to connect to. For example, `postgres` or `mysql`.

Hyperdrive accepts the combination of these parameters in the common connection string format used by database drivers:

* PostgreSQL

```txt

postgres://USERNAME:PASSWORD@HOSTNAME_OR_IP_ADDRESS:PORT/database_name

```

Most database providers will provide a connection string you can copy-and-paste directly into Hyperdrive.

To create a Hyperdrive connection, run the `wrangler` command, replacing the placeholder values passed to the `--connection-string` flag with the values of your existing database:

```sh

npx wrangler hyperdrive create --connection-string="postgres://user:password@HOSTNAME_OR_IP_ADDRESS:PORT/database_name"

```

* MySQL

```txt

mysql://USERNAME:PASSWORD@HOSTNAME_OR_IP_ADDRESS:PORT/database_name

```

Most database providers will provide a connection string you can copy-and-paste directly into Hyperdrive.

To create a Hyperdrive connection, run the `wrangler` command, replacing the placeholder values passed to the `--connection-string` flag with the values of your existing database:

```sh

npx wrangler hyperdrive create --connection-string="mysql://user:password@HOSTNAME_OR_IP_ADDRESS:PORT/database_name"

```
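If your provider gives you the individual parameters rather than a ready-made connection string, you can assemble one yourself. The sketch below is one way to do that; the `DatabaseTarget` type and `buildConnectionString` helper are hypothetical names, and it assumes the usual URL convention that special characters in credentials are percent-encoded.

```typescript
// Hypothetical shape for the pieces of information listed above.
interface DatabaseTarget {
  scheme: "postgres" | "mysql";
  user: string;
  password: string;
  host: string; // IP address or hostname
  port: number;
  database: string;
}

// Assemble scheme://USERNAME:PASSWORD@HOST:PORT/database_name.
// Credentials are percent-encoded so characters such as "@" or "/" in a
// password do not break the URL structure.
function buildConnectionString(target: DatabaseTarget): string {
  const user = encodeURIComponent(target.user);
  const password = encodeURIComponent(target.password);
  const database = encodeURIComponent(target.database);
  return `${target.scheme}://${user}:${password}@${target.host}:${target.port}/${database}`;
}

const example = buildConnectionString({
  scheme: "postgres",
  user: "hyperdrive-demo",
  password: "p@ss/word", // made-up password with characters that need encoding
  host: "db.example.com", // placeholder hostname
  port: 5432,
  database: "postgres",
});
console.log(example);
// postgres://hyperdrive-demo:p%40ss%2Fword@db.example.com:5432/postgres
```

The resulting string is what you would pass to the `--connection-string` flag.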

Manage caching

By default, Hyperdrive will cache query results. If you wish to disable caching, pass the flag `--caching-disabled`.

Alternatively, you can use the `--max-age` flag to specify the maximum duration (in seconds) for which items should persist in the cache, before they are evicted. Default value is 60 seconds.

Refer to [Hyperdrive Wrangler commands](https://developers.cloudflare.com/hyperdrive/reference/wrangler-commands/) for more information.
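To make the effect of `--max-age` concrete, the following is a conceptual model of time-based expiry. It is not Hyperdrive's implementation; the function name is hypothetical and the only value taken from this guide is the 60-second default.

```typescript
const DEFAULT_MAX_AGE_SECONDS = 60; // default stated above

// Conceptual model only: an entry is served from cache while its age is
// below max-age, and is treated as evicted afterwards.
function isCacheEntryFresh(
  cachedAtMs: number,
  nowMs: number,
  maxAgeSeconds: number = DEFAULT_MAX_AGE_SECONDS,
): boolean {
  return nowMs - cachedAtMs < maxAgeSeconds * 1000;
}

console.log(isCacheEntryFresh(0, 59_000)); // true: 59 s old, default max-age
console.log(isCacheEntryFresh(0, 60_000)); // false: reached the 60 s limit
console.log(isCacheEntryFresh(0, 90_000, 120)); // true: max-age raised to 120 s
```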

If successful, the command will output your new Hyperdrive configuration:

```json

{

"hyperdrive": [

{

"binding": "HYPERDRIVE",

"id": ""

}

]

}

```

Copy the `id` field: you will use this in the next step to make Hyperdrive accessible from your Worker script.

Note

Hyperdrive will attempt to connect to your database with the provided credentials to verify they are correct before creating a configuration. If you encounter an error when attempting to connect, refer to Hyperdrive's [troubleshooting documentation](https://developers.cloudflare.com/hyperdrive/observability/troubleshooting/) to debug possible causes.

## 4. Bind your Worker to Hyperdrive

You must create a binding in your [Wrangler configuration file](https://developers.cloudflare.com/workers/wrangler/configuration/) for your Worker to connect to your Hyperdrive configuration. [Bindings](https://developers.cloudflare.com/workers/runtime-apis/bindings/) allow your Workers to access resources, like Hyperdrive, on the Cloudflare developer platform.

To bind your Hyperdrive configuration to your Worker, add the following to the end of your Wrangler file:

* wrangler.jsonc

```jsonc

{

"hyperdrive": [

{

"binding": "HYPERDRIVE",

"id": "" // the ID associated with the Hyperdrive you just created

}

]

}

```

* wrangler.toml

```toml

[[hyperdrive]]

binding = "HYPERDRIVE"

id = ""

```

Specifically:

* The value (string) you set for the `binding` (binding name) will be used to reference this database in your Worker. In this tutorial, name your binding `HYPERDRIVE`.

* The binding must be [a valid JavaScript variable name](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Guide/Grammar_and_types#variables). For example, `binding = "hyperdrive"` or `binding = "productionDB"` would both be valid names for the binding.

* Your binding is available in your Worker at `env.<BINDING_NAME>`. In this tutorial, that is `env.HYPERDRIVE`.
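The naming rule above can be approximated with a small check. This is a simplified sketch with a hypothetical helper name: it only accepts ASCII identifiers, whereas real JavaScript identifiers also allow many Unicode characters and exclude reserved words.

```typescript
// Simplified check: ASCII letters, digits, "_" and "$", not starting with a
// digit. This does not cover Unicode identifiers or reserved words.
function isLikelyValidBindingName(name: string): boolean {
  return /^[A-Za-z_$][A-Za-z0-9_$]*$/.test(name);
}

console.log(isLikelyValidBindingName("HYPERDRIVE")); // true
console.log(isLikelyValidBindingName("productionDB")); // true
console.log(isLikelyValidBindingName("my-database")); // false: contains a hyphen
```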

If you wish to use a local database during development, you can add a `localConnectionString` to your Hyperdrive configuration with the connection string of your database:

* wrangler.jsonc

```jsonc

{

"hyperdrive": [

{

"binding": "HYPERDRIVE",

"id": "", // the ID associated with the Hyperdrive you just created

"localConnectionString": ""

}

]

}

```

* wrangler.toml

```toml

[[hyperdrive]]

binding = "HYPERDRIVE"

id = ""

localConnectionString = ""

```

Note

Learn more about setting up [Hyperdrive for local development](https://developers.cloudflare.com/hyperdrive/configuration/local-development/).

## 5. Run a query against your database

Once you have created a Hyperdrive configuration and bound it to your Worker, you can run a query against your database.

### Install a database driver

* PostgreSQL

To connect to your database, you will need a database driver which allows you to authenticate and query your database. For this tutorial, you will use [node-postgres (pg)](https://node-postgres.com/), one of the most widely used PostgreSQL drivers.

To install `pg`, ensure you are in the `hyperdrive-tutorial` directory. Open your terminal and run the following command:

* npm

```sh

# This should install v8.13.0 or later

npm i pg

```

* yarn

```sh

# This should install v8.13.0 or later

yarn add pg

```

* pnpm

```sh

# This should install v8.13.0 or later

pnpm add pg

```

If you are using TypeScript, you should also install the type definitions for `pg`:

* npm

```sh

# Installs the TypeScript type definitions for pg as a dev dependency

npm i -D @types/pg

```

* yarn

```sh

# Installs the TypeScript type definitions for pg as a dev dependency

yarn add -D @types/pg

```

* pnpm

```sh

# Installs the TypeScript type definitions for pg as a dev dependency

pnpm add -D @types/pg

```

With the driver installed, you can now create a Worker script that queries your database.

* MySQL

To connect to your database, you will need a database driver which allows you to authenticate and query your database. For this tutorial, you will use [mysql2](https://github.com/sidorares/node-mysql2), one of the most widely used MySQL drivers.

To install `mysql2`, ensure you are in the `hyperdrive-tutorial` directory. Open your terminal and run the following command:

* npm

```sh

# This should install v3.13.0 or later

npm i mysql2

```

* yarn

```sh

# This should install v3.13.0 or later

yarn add mysql2

```

* pnpm

```sh

# This should install v3.13.0 or later

pnpm add mysql2

```

With the driver installed, you can now create a Worker script that queries your database.

### Write a Worker

* PostgreSQL

After you have set up your database, you will run a SQL query from within your Worker.

Go to your `hyperdrive-tutorial` Worker and open the `index.ts` file.

The `index.ts` file is where you configure your Worker's interactions with Hyperdrive.

Populate your `index.ts` file with the following code:

```typescript

// pg 8.13.0 or later is recommended

import { Client } from "pg";

export interface Env {

// If you set another name in the Wrangler config file as the value for 'binding',

// replace "HYPERDRIVE" with the variable name you defined.

HYPERDRIVE: Hyperdrive;

}

export default {

async fetch(request, env, ctx): Promise<Response> {

// Create a new client on each request. Hyperdrive maintains the underlying

// database connection pool, so creating a new client is fast.

const sql = new Client({

connectionString: env.HYPERDRIVE.connectionString,

});

try {

// Connect to the database

await sql.connect();

// Sample query

const results = await sql.query(`SELECT * FROM pg_tables`);

// Return result rows as JSON

return Response.json(results.rows);

} catch (e) {

console.error(e);

return Response.json(

{ error: e instanceof Error ? e.message : e },

{ status: 500 },

);

}

},

} satisfies ExportedHandler<Env>;

```

Upon receiving a request, the code above does the following:

1. Creates a new database client configured to connect to your database via Hyperdrive, using the Hyperdrive connection string.

2. Connects with `await sql.connect()`, then runs a query via `await sql.query()` that lists all tables (user and system created) in the database (as an example query).

3. Returns the response as JSON to the client. Hyperdrive automatically cleans up the client connection when the request ends, and keeps the underlying database connection open in its pool for reuse.

* MySQL

After you have set up your database, you will run a SQL query from within your Worker.

Go to your `hyperdrive-tutorial` Worker and open the `index.ts` file.

The `index.ts` file is where you configure your Worker's interactions with Hyperdrive.

Populate your `index.ts` file with the following code:

```typescript

// mysql2 v3.13.0 or later is required

import { createConnection } from 'mysql2/promise';

export interface Env {

// If you set another name in the Wrangler config file as the value for 'binding',

// replace "HYPERDRIVE" with the variable name you defined.

HYPERDRIVE: Hyperdrive;

}

export default {

async fetch(request, env, ctx): Promise<Response> {

// Create a new connection on each request. Hyperdrive maintains the underlying

// database connection pool, so creating a new connection is fast.

const connection = await createConnection({

host: env.HYPERDRIVE.host,

user: env.HYPERDRIVE.user,

password: env.HYPERDRIVE.password,

database: env.HYPERDRIVE.database,

port: env.HYPERDRIVE.port,

// The following line is needed for mysql2 compatibility with Workers

// mysql2 uses eval() to optimize result parsing for rows with > 100 columns

// Configure mysql2 to use static parsing instead of eval() parsing with disableEval

disableEval: true

});

try {

// Sample query

const [results, fields] = await connection.query(

'SHOW tables;'

);

// Return result rows as JSON

return new Response(JSON.stringify({ results, fields }), {

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*',

},

});

} catch (e) {

console.error(e);

return Response.json(

{ error: e instanceof Error ? e.message : e },

{ status: 500 },

);

}

},

} satisfies ExportedHandler<Env>;

```

Upon receiving a request, the code above does the following:

1. Creates a new database connection to your database via Hyperdrive, using the host, user, password, database, and port exposed by the Hyperdrive binding.

2. Initiates a query via `await connection.query` that outputs all tables (user and system created) in the database (as an example query).

3. Returns the response as JSON to the client. Hyperdrive automatically cleans up the client connection when the request ends, and keeps the underlying database connection open in its pool for reuse.
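The sample queries above take no input. When a query depends on request data, pass the values separately from the SQL text rather than concatenating them. The sketch below shows this for `pg`, whose `query(text, values)` call accepts `$1`-style placeholders. The `Queryable` interface and `listTablesInSchema` helper are hypothetical: the interface is a minimal stand-in modelled on the one `Client` method used, so the example compiles without the driver installed.

```typescript
// Minimal stand-in for the single pg Client method used here (an assumption
// for illustration, not the full pg typings).
interface Queryable {
  query(text: string, values: unknown[]): Promise<{ rows: unknown[] }>;
}

// Hypothetical helper: list tables in one schema, passing the schema name as
// a bound parameter ($1) instead of concatenating it into the SQL string.
async function listTablesInSchema(db: Queryable, schema: string): Promise<unknown[]> {
  const result = await db.query(
    "SELECT tablename FROM pg_tables WHERE schemaname = $1",
    [schema],
  );
  return result.rows;
}
```

Inside the Worker's `fetch` handler you would call it after `await sql.connect()`, for example `await listTablesInSchema(sql, "public")`. With `mysql2`, the equivalent placeholder is `?`.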

### Run in development mode (optional)

You can test your Worker locally before deploying by running `wrangler dev`. This runs your Worker code on your machine while connecting to your database.

The `localConnectionString` field works with both local and remote databases and allows you to connect directly to your database from your Worker project running locally. You must specify the SSL/TLS mode if required (`sslmode=require` for Postgres, `sslMode=REQUIRED` for MySQL).
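If your local connection string does not yet specify an SSL/TLS mode, a helper like the following can add one for Postgres. It is a sketch under assumptions: the function name is hypothetical, and it relies on the standard `URL` API to parse the connection string.

```typescript
// Hypothetical helper: add sslmode=require to a Postgres connection string
// unless an sslmode is already present.
function withSslModeRequire(connectionString: string): string {
  const url = new URL(connectionString);
  if (!url.searchParams.has("sslmode")) {
    url.searchParams.set("sslmode", "require");
  }
  return url.toString();
}

console.log(
  withSslModeRequire("postgres://user:password@your-database-host:5432/database"),
);
// postgres://user:password@your-database-host:5432/database?sslmode=require
```

For MySQL, the same approach would set `sslMode=REQUIRED` instead.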

To connect to a database during local development, configure `localConnectionString` in your `wrangler.jsonc`:

```jsonc

{

"hyperdrive": [

{

"binding": "HYPERDRIVE",

"id": "your-hyperdrive-id",

"localConnectionString": "postgres://user:password@your-database-host:5432/database",

},

],

}

```

Or set an environment variable:

```sh

export CLOUDFLARE_HYPERDRIVE_LOCAL_CONNECTION_STRING_HYPERDRIVE="postgres://user:password@your-database-host:5432/database"

```

Then start local development:

```sh

npx wrangler dev

```

Note

When using `wrangler dev` with `localConnectionString` or `CLOUDFLARE_HYPERDRIVE_LOCAL_CONNECTION_STRING_HYPERDRIVE`, Hyperdrive caching does not take effect locally.

Alternatively, you can run `wrangler dev --remote` to test against your deployed Hyperdrive configuration with caching enabled, but this runs your entire Worker in Cloudflare's network instead of locally.

Learn more about [local development with Hyperdrive](https://developers.cloudflare.com/hyperdrive/configuration/local-development/).

## 6. Deploy your Worker

You can now deploy your Worker to make your project accessible on the Internet. To deploy your Worker, run:

```sh

npx wrangler deploy

# Outputs: https://hyperdrive-tutorial.<YOUR_SUBDOMAIN>.workers.dev

```

You can now visit the URL for your newly created project to query your live database.

For example, if the URL of your new Worker is `hyperdrive-tutorial.<YOUR_SUBDOMAIN>.workers.dev`, accessing `https://hyperdrive-tutorial.<YOUR_SUBDOMAIN>.workers.dev/` will send a request to your Worker that queries your database directly.

By finishing this tutorial, you have created a Hyperdrive configuration, written a Worker that accesses your database through it, and deployed your project globally.

Reduce latency with Placement

If your Worker makes **multiple sequential queries** per request, use [Placement](https://developers.cloudflare.com/workers/configuration/placement/) to run your Worker close to your database. Each query adds round-trip latency: 20-30ms from a distant region, or 1-3ms when placed nearby. Multiple queries compound this difference.

If your Worker makes only one query per request, placement does not improve end-to-end latency. The total round-trip time is the same whether it happens near the user or near the database.

```jsonc

{

"placement": {

"region": "aws:us-east-1", // Match your database region, for example "gcp:us-east4" or "azure:eastus"

},

}

```
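To see how sequential queries compound the difference, consider this back-of-the-envelope sketch. The helper name and the query count of five are assumptions; the per-query latencies are picked from the ranges quoted above.

```typescript
// Sequential queries each pay one Worker-to-database round-trip.
function sequentialQueryLatencyMs(queryCount: number, roundTripMs: number): number {
  return queryCount * roundTripMs;
}

const queriesPerRequest = 5; // assumed number of sequential queries per request
console.log(sequentialQueryLatencyMs(queriesPerRequest, 25)); // 125 ms from a distant region
console.log(sequentialQueryLatencyMs(queriesPerRequest, 2)); // 10 ms when placed nearby
```

With a single query per request the two cases differ by only one round-trip, which is offset by the extra distance between the user and the Worker.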

## Next steps

* Learn more about [how Hyperdrive works](https://developers.cloudflare.com/hyperdrive/concepts/how-hyperdrive-works/).

* Learn how to [configure query caching](https://developers.cloudflare.com/hyperdrive/concepts/query-caching/).

* [Troubleshooting common issues](https://developers.cloudflare.com/hyperdrive/observability/troubleshooting/) when connecting a database to Hyperdrive.

If you have any feature requests or notice any bugs, share your feedback directly with the Cloudflare team by joining the [Cloudflare Developers community on Discord](https://discord.cloudflare.com).

---

title: Hyperdrive REST API · Cloudflare Hyperdrive docs

lastUpdated: 2024-12-16T22:33:26.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/hyperdrive-rest-api/

md: https://developers.cloudflare.com/hyperdrive/hyperdrive-rest-api/index.md

---

---

title: Observability · Cloudflare Hyperdrive docs

lastUpdated: 2024-09-06T08:27:36.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/hyperdrive/observability/

md: https://developers.cloudflare.com/hyperdrive/observability/index.md

---

* [Troubleshoot and debug](https://developers.cloudflare.com/hyperdrive/observability/troubleshooting/)

* [Metrics and analytics](https://developers.cloudflare.com/hyperdrive/observability/metrics/)

---

title: Platform · Cloudflare Hyperdrive docs

lastUpdated: 2024-09-06T08:27:36.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/hyperdrive/platform/

md: https://developers.cloudflare.com/hyperdrive/platform/index.md

---

* [Pricing](https://developers.cloudflare.com/hyperdrive/platform/pricing/)

* [Limits](https://developers.cloudflare.com/hyperdrive/platform/limits/)

* [Choose a data or storage product](https://developers.cloudflare.com/workers/platform/storage-options/)

* [Release notes](https://developers.cloudflare.com/hyperdrive/platform/release-notes/)

---

title: Reference · Cloudflare Hyperdrive docs

lastUpdated: 2024-09-06T08:27:36.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/hyperdrive/reference/

md: https://developers.cloudflare.com/hyperdrive/reference/index.md

---

* [Supported databases and features](https://developers.cloudflare.com/hyperdrive/reference/supported-databases-and-features/)

* [FAQ](https://developers.cloudflare.com/hyperdrive/reference/faq/)

* [Wrangler commands](https://developers.cloudflare.com/hyperdrive/reference/wrangler-commands/)

---

title: Tutorials · Cloudflare Hyperdrive docs

description: View tutorials to help you get started with Hyperdrive.

lastUpdated: 2025-08-18T14:27:42.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/tutorials/

md: https://developers.cloudflare.com/hyperdrive/tutorials/index.md

---

View tutorials to help you get started with Hyperdrive.

| Name | Last Updated | Difficulty |
| - | - | - |
| [Connect to a PostgreSQL database with Cloudflare Workers](https://developers.cloudflare.com/workers/tutorials/postgres/) | 8 months ago | Beginner |
| [Connect to a MySQL database with Cloudflare Workers](https://developers.cloudflare.com/workers/tutorials/mysql/) | 11 months ago | Beginner |
| [Create a serverless, globally distributed time-series API with Timescale](https://developers.cloudflare.com/hyperdrive/tutorials/serverless-timeseries-api-with-timescale/) | over 2 years ago | Beginner |

---

title: Connection pooling · Cloudflare Hyperdrive docs

description: >-

Hyperdrive maintains a pool of connections to your database. These are

optimally placed to minimize the latency for your applications. You can

configure

the amount of connections your Hyperdrive configuration uses to connect to

your origin database. This enables you to right-size your connection pool

based on your database capacity and application requirements.

lastUpdated: 2025-11-12T15:17:36.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/concepts/connection-pooling/

md: https://developers.cloudflare.com/hyperdrive/concepts/connection-pooling/index.md

---

Hyperdrive maintains a pool of connections to your database. These are optimally placed to minimize the latency for your applications. You can configure the number of connections your Hyperdrive configuration uses to connect to your origin database. This enables you to right-size your connection pool based on your database capacity and application requirements.

For instance, if your Worker makes many queries to your database (which cannot be resolved by Hyperdrive's caching), you may want to allow Hyperdrive to make more connections to your database. Conversely, if your Worker makes few queries that actually need to reach your database, or if your database allows only a small number of connections, you can reduce the number of connections Hyperdrive will make to your database.

All configurations have a minimum of 5 connections and a maximum that depends on your Workers plan. Refer to the [limits](https://developers.cloudflare.com/hyperdrive/platform/limits/) for details.

## How Hyperdrive pools database connections

Hyperdrive automatically scales the number of database connections it holds open depending on your traffic and the load on your database.

The `max_size` parameter acts as a soft limit: Hyperdrive may temporarily create additional connections during network issues or high traffic periods to ensure high availability and resiliency.

## Pooling mode

The Hyperdrive connection pooler operates in transaction mode, where the client that executes the query communicates through a single connection for the duration of a transaction. When that transaction has completed, the connection is returned to the pool.

Hyperdrive supports [`SET` statements](https://www.postgresql.org/docs/current/sql-set.html) for the duration of a transaction or a query. For instance, if you manually create a transaction with `BEGIN`/`COMMIT`, `SET` statements within the transaction will take effect. Moreover, a query that includes a `SET` command (`SET X; SELECT foo FROM bar;`) will also apply the `SET` command. When a connection is returned to the pool, the connection is `RESET` such that the `SET` commands will not take effect on subsequent queries.

This implies that a single Worker invocation may obtain multiple connections to perform its database operations and may need to `SET` any configurations for every query or transaction. It is not recommended to wrap multiple database operations with a single transaction to maintain the `SET` state. Doing so will affect the performance and scaling of Hyperdrive, as the connection cannot be reused by other Worker isolates for the duration of the transaction.
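As a minimal sketch of applying a setting for exactly one short transaction, the helper below issues `BEGIN`, a `SET`, the query, and `COMMIT` in sequence. The `Queryable` interface and the `queryWithTimeout` helper are hypothetical names introduced for illustration; the interface is assumed to be loosely compatible with clients such as `node-postgres`, and `statement_timeout` is used only as an example setting.

```ts
// Minimal client shape assumed for this sketch (hypothetical interface).
interface Queryable {
  query(text: string): Promise<unknown>;
}

// Runs a single query inside a short transaction with a setting applied.
// The setting has to be re-applied on every call, because the pooled
// connection is reset when it is returned to the pool.
async function queryWithTimeout(
  client: Queryable,
  sql: string,
  timeoutMs: number,
): Promise<unknown> {
  if (!Number.isInteger(timeoutMs) || timeoutMs < 0) {
    throw new RangeError("timeoutMs must be a non-negative integer");
  }
  await client.query("BEGIN");
  try {
    // timeoutMs is validated above, so it is safe to interpolate here.
    await client.query(`SET statement_timeout = ${timeoutMs}`);
    const result = await client.query(sql);
    await client.query("COMMIT");
    return result;
  } catch (err) {
    // Release the connection promptly if anything fails.
    await client.query("ROLLBACK");
    throw err;
  }
}
```

Keeping the transaction to a single query means the pooled connection is held only briefly, which is in line with the guidance above.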

Hyperdrive supports named prepared statements as implemented in the `postgres.js` and `node-postgres` drivers. Named prepared statements in other drivers may have worse performance or may not be supported.

## Best practices

You can configure connection counts using the Cloudflare dashboard or the Cloudflare API. Consider the following best practices to determine the right limit for your use case:

* **Start conservatively**: Begin with a lower connection count and increase as needed based on your application's performance.

* **Monitor database metrics**: Watch your database's connection usage and performance metrics to optimize the connection count.

* **Consider database limits**: Ensure your configured connection count doesn't exceed your database's maximum connection limit.

* **Account for multiple configurations**: If you have multiple Hyperdrive configurations connecting to the same database, consider the total connection count across all configurations.
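The last two practices amount to a simple budget check, sketched below. The `HyperdriveConfigPlan` type and `remainingConnectionBudget` function are hypothetical names for illustration, and the numbers in the usage example are made up rather than recommended values.

```ts
// A planned connection limit for one Hyperdrive configuration (hypothetical type).
interface HyperdriveConfigPlan {
  name: string;
  maxConnections: number;
}

// Returns how many database connections remain after accounting for every
// Hyperdrive configuration and any connections reserved for other clients.
// A negative result means the plan exceeds the database's connection limit.
function remainingConnectionBudget(
  databaseMaxConnections: number,
  reservedForOtherClients: number,
  configs: HyperdriveConfigPlan[],
): number {
  const planned = configs.reduce((sum, config) => sum + config.maxConnections, 0);
  return databaseMaxConnections - reservedForOtherClients - planned;
}

const remaining = remainingConnectionBudget(100, 10, [
  { name: "api", maxConnections: 20 },
  { name: "reports", maxConnections: 20 },
]);
console.log(remaining); // 50
```

Because the configured limit is a soft limit, it may be sensible to keep some of the remaining budget as headroom rather than allocating all of it.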

## Next steps

* Learn more about [How Hyperdrive works](https://developers.cloudflare.com/hyperdrive/concepts/how-hyperdrive-works/).

* Review [Hyperdrive limits](https://developers.cloudflare.com/hyperdrive/platform/limits/) for your Workers plan.

* Learn how to [Connect to PostgreSQL](https://developers.cloudflare.com/hyperdrive/examples/connect-to-postgres/) from Hyperdrive.

---

title: Connection lifecycle · Cloudflare Hyperdrive docs

description: Understanding how connections work between Workers, Hyperdrive, and

your origin database is essential for building efficient applications with

Hyperdrive.

lastUpdated: 2026-02-06T18:26:52.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/concepts/connection-lifecycle/

md: https://developers.cloudflare.com/hyperdrive/concepts/connection-lifecycle/index.md

---

Understanding how connections work between Workers, Hyperdrive, and your origin database is essential for building efficient applications with Hyperdrive.

By maintaining a connection pool to your database within Cloudflare's network, Hyperdrive eliminates the seven round trips to your database that are otherwise required before you can even send a query: the TCP handshake (1x), TLS negotiation (3x), and database authentication (3x).

## How connections are managed

When you use a database client in a Cloudflare Worker, the connection lifecycle works differently than in traditional server environments. Here's what happens:

Without Hyperdrive, every Worker invocation would need to establish a new connection directly to your origin database. This connection setup requires multiple round trips across the Internet to complete the TCP handshake, TLS negotiation, and database authentication. That is seven round trips of added latency before your query can even execute.

Hyperdrive solves this by splitting the connection setup into two parts: a fast edge connection and an optimized path to your database.

1. **Connection setup on the edge**: The database driver in your Worker code establishes a connection to the Hyperdrive instance. This happens at the edge, colocated with your Worker, making it extremely fast to create connections. This is why you use Hyperdrive's special connection string.

2. **Single round trip across regions**: Since authentication has already been completed at the edge, Hyperdrive only needs a single round trip across regions to your database, instead of the multiple round trips that would be incurred during connection setup.

3. **Get existing connection from pool**: Hyperdrive uses an existing connection from the pool that is colocated close to your database, minimizing latency.

4. **If no available connections, create new**: When needed, new connections are created from a region close to your database to reduce the latency of establishing new connections.

5. **Run query**: Your query is executed against the database and results are returned to your Worker through Hyperdrive.

6. **Connection teardown**: When your Worker finishes processing the request, the database client connection in your Worker is automatically garbage collected. However, Hyperdrive keeps the connection to your origin database open in the pool, ready to be reused by the next Worker invocation. This means subsequent requests will still perform the fast edge connection setup, but will reuse one of the existing connections from Hyperdrive's pool near your database.

Note

In a Cloudflare Worker, database client connections within the Worker are only kept alive for the duration of a single invocation. With Hyperdrive, creating a new client on each invocation is fast and recommended because Hyperdrive maintains the underlying database connections for you, pooled in an optimal location and shared across Workers to maximize scale.
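The effect of moving connection setup to the edge can be illustrated with some back-of-the-envelope arithmetic. The sketch below assumes a warm pooled connection already exists near the database; the function names and the example round-trip times (100 ms across regions, 1 ms at the edge) are assumptions chosen for illustration only.

```ts
// Round trips needed for connection setup, per the breakdown above:
// TCP handshake (1) + TLS negotiation (3) + database authentication (3).
const SETUP_ROUND_TRIPS = 1 + 3 + 3;

// Direct connection: setup and the query itself all cross regions.
function directLatencyMs(crossRegionRttMs: number): number {
  return (SETUP_ROUND_TRIPS + 1) * crossRegionRttMs;
}

// With Hyperdrive: setup happens at the edge, and only the query
// crosses regions over an existing pooled connection.
function hyperdriveLatencyMs(edgeRttMs: number, crossRegionRttMs: number): number {
  return SETUP_ROUND_TRIPS * edgeRttMs + crossRegionRttMs;
}

console.log(directLatencyMs(100)); // 800
console.log(hyperdriveLatencyMs(1, 100)); // 107
```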

## Cleaning up client connections

When your Worker finishes processing a request, the database client is automatically garbage collected and the edge connection to Hyperdrive is cleaned up. Hyperdrive keeps the underlying connection to your origin database open in its pool for reuse.

You do **not** need to call `client.end()`, `sql.end()`, `connection.end()` (or similar) to clean up database clients. Workers-to-Hyperdrive connections are automatically cleaned up when the request or invocation ends, including when a [Workflow](https://developers.cloudflare.com/workflows/) or [Queue consumer](https://developers.cloudflare.com/queues/) completes, or when a [Durable Object](https://developers.cloudflare.com/durable-objects/) hibernates or is evicted when idle.

```ts

import { Client } from "pg";

export default {

async fetch(request, env, ctx): Promise<Response> {

const client = new Client({

connectionString: env.HYPERDRIVE.connectionString,

});

await client.connect();

const result = await client.query("SELECT * FROM pg_tables");

// No need to call client.end() — Hyperdrive automatically cleans

// up the client connection when the request ends. The underlying

// pooled connection to your origin database remains open for reuse.

return Response.json(result.rows);

},

} satisfies ExportedHandler<Env>;

```

Create database clients inside your handlers

You should always create database clients inside your request handlers (`fetch`, `queue`, and similar), not in the global scope. Workers do not allow [I/O across requests](https://developers.cloudflare.com/workers/runtime-apis/bindings/#making-changes-to-bindings), and Hyperdrive's distributed connection pooling already solves for connection startup latency. Using a driver-level pool (such as `new Pool()` or `createPool()`) in the global script scope will leave you with stale connections that result in failed queries and hard errors.

Do not create database clients or connection pools in the global scope. Instead, create a new client inside each handler invocation — Hyperdrive's connection pool ensures this is fast:

* JavaScript

```js

import { Client } from "pg";

// 🔴 Bad: Client created in the global scope persists across requests.

// Workers do not allow I/O across request contexts, so this client

// becomes stale and subsequent queries will throw hard errors.

const globalClient = new Client({

connectionString: env.HYPERDRIVE.connectionString,

});

await globalClient.connect();

export default {

async fetch(request, env, ctx) {

// ✅ Good: Client created inside the handler, scoped to this request.

// Hyperdrive pools the underlying connection to your origin database,

// so creating a new client per request is fast and reliable.

const client = new Client({

connectionString: env.HYPERDRIVE.connectionString,

});

await client.connect();

const result = await client.query("SELECT * FROM pg_tables");

return Response.json(result.rows);

},

};

```

* TypeScript

```ts

import { Client } from "pg";

// 🔴 Bad: Client created in the global scope persists across requests.

// Workers do not allow I/O across request contexts, so this client

// becomes stale and subsequent queries will throw hard errors.

const globalClient = new Client({

connectionString: env.HYPERDRIVE.connectionString,

});

await globalClient.connect();

export default {

async fetch(request, env, ctx): Promise<Response> {

// ✅ Good: Client created inside the handler, scoped to this request.

// Hyperdrive pools the underlying connection to your origin database,

// so creating a new client per request is fast and reliable.

const client = new Client({

connectionString: env.HYPERDRIVE.connectionString,

});

await client.connect();

const result = await client.query("SELECT * FROM pg_tables");

return Response.json(result.rows);

},

} satisfies ExportedHandler<Env>;

```

## Connection lifecycle considerations

### Durable Objects and persistent connections

Unlike regular Workers, [Durable Objects](https://developers.cloudflare.com/durable-objects/) can maintain state across multiple requests. If you keep a database client open in a Durable Object, the connection will remain allocated from Hyperdrive's connection pool. Long-lived Durable Objects can exhaust available connections if many objects keep connections open simultaneously.

Warning

Be careful when maintaining persistent database connections in Durable Objects. Each open connection consumes resources from Hyperdrive's connection pool, which could impact other parts of your application. Close connections when not actively in use, use connection timeouts, and limit the number of Durable Objects that maintain database connections.
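One way to avoid holding connections open in a Durable Object is to scope each client to a single unit of work and close it explicitly afterwards. The following is a minimal sketch under that assumption; `ClosableClient` and `withClient` are hypothetical names, and the interface is assumed to match clients that expose `connect()` and `end()`, as `node-postgres` does.

```ts
// Minimal client shape assumed for this sketch (hypothetical interface).
interface ClosableClient {
  connect(): Promise<unknown>;
  end(): Promise<unknown>;
}

// Opens a client, runs the given work, and always closes the client
// afterwards so the connection is not held while the object sits idle.
async function withClient<C extends ClosableClient, T>(
  create: () => C,
  work: (client: C) => Promise<T>,
): Promise<T> {
  const client = create();
  await client.connect();
  try {
    return await work(client);
  } finally {
    await client.end();
  }
}
```

Inside a Durable Object method, this could be called as `withClient(() => new Client({ connectionString }), (client) => client.query("SELECT 1"))`, where `connectionString` comes from your Hyperdrive binding.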

### Long-running transactions

Hyperdrive operates in [transaction pooling mode](https://developers.cloudflare.com/hyperdrive/concepts/how-hyperdrive-works/#pooling-mode), where a connection is held for the duration of a transaction. Long-running transactions that contain multiple queries can exhaust Hyperdrive's available connections more quickly because each transaction holds a connection from the pool until it completes.

Tip

Keep transactions as short as possible. Perform only the essential queries within a transaction, and avoid including non-database operations (like external API calls or complex computations) inside transaction blocks.

Refer to [Limits](https://developers.cloudflare.com/hyperdrive/platform/limits/) to understand how many connections are available for your Hyperdrive configuration based on your Workers plan.

## Related resources

* [How Hyperdrive works](https://developers.cloudflare.com/hyperdrive/concepts/how-hyperdrive-works/)

* [Connection pooling](https://developers.cloudflare.com/hyperdrive/concepts/connection-pooling/)

* [Limits](https://developers.cloudflare.com/hyperdrive/platform/limits/)

* [Durable Objects](https://developers.cloudflare.com/durable-objects/)

---

title: How Hyperdrive works · Cloudflare Hyperdrive docs

description: Connecting to traditional centralized databases from Cloudflare's

global network which consists of over 300 data center locations presents a few

challenges as queries can originate from any of these locations.

lastUpdated: 2026-01-26T13:23:46.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/concepts/how-hyperdrive-works/

md: https://developers.cloudflare.com/hyperdrive/concepts/how-hyperdrive-works/index.md

---

Connecting to traditional centralized databases from Cloudflare's global network, which consists of over [300 data center locations](https://www.cloudflare.com/network/), presents a few challenges because queries can originate from any of these locations.

If your database is centrally located, queries can take a long time to get to the database and back. Queries can take even longer in situations where you have to establish new connections from stateless environments like Workers, requiring multiple round trips for each Worker invocation.

Traditional databases usually handle a maximum number of connections. With any reasonably large amount of distributed traffic, it becomes easy to exhaust these connections.

Hyperdrive solves these challenges by managing the number of global connections to your origin database and by parsing queries to selectively choose which responses to cache. This reduces load on your database and accelerates your database queries.

## How Hyperdrive makes databases fast globally

Hyperdrive accelerates database queries by:

* Performing the connection setup for new database connections near your Workers

* Pooling existing connections near your database

* Caching query results

This ensures you have optimal performance when connecting to your database from Workers (whether your queries are cached or not).

### 1. Edge connection setup

When a database driver connects to a database from a Cloudflare Worker **directly**, it will first go through the connection setup. This may require multiple round trips to the database in order to verify and establish a secure connection. This can incur additional network latency due to the distance between your Cloudflare Worker and your database.

**With Hyperdrive**, this connection setup occurs between your Cloudflare Worker and Hyperdrive on the edge, as close to your Worker as possible (see diagram, label *1. Connection setup*). This incurs significantly less latency, since the connection setup is completed within the same location.

Learn more about how connections work between Workers and Hyperdrive in [Connection lifecycle](https://developers.cloudflare.com/hyperdrive/concepts/connection-lifecycle/).

### 2. Connection Pooling

Hyperdrive creates a pool of connections to your database that can be reused as your application executes queries against your database.

The pool of database connections is placed in one or more regions closest to your origin database. This minimizes the latency incurred by round trips between your Cloudflare Workers and database to establish new connections. It also ensures that as little network latency as possible is incurred for uncached queries.

If the connection pool has pre-existing connections, it will try to reuse one of them (see diagram, label *2. Existing warm connection*). If the connection pool does not have pre-existing connections, it will establish a new connection to your database and use that to route your query. The aim is to reuse existing connections where possible and to create only as many new connections as your application requires.
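The reuse-or-create behavior described above can be pictured with a toy model. This is an illustration of the general pooling idea only, not Hyperdrive's implementation: `ToyPool` is a hypothetical class, connections are represented by plain numbers, and connection limits are ignored.

```ts
// Toy connection pool: reuses an idle connection when one exists,
// otherwise creates a new one. Connections are modeled as numeric IDs.
class ToyPool {
  private idle: number[] = [];
  private nextId = 1;
  created = 0;

  acquire(): number {
    const warm = this.idle.pop();
    if (warm !== undefined) {
      return warm; // reuse an existing warm connection
    }
    this.created += 1; // no idle connection available: create one
    return this.nextId++;
  }

  release(id: number): void {
    this.idle.push(id); // return the connection to the pool for reuse
  }
}

const pool = new ToyPool();
const first = pool.acquire(); // pool is empty, so a connection is created
pool.release(first);
const second = pool.acquire(); // the released connection is reused
console.log(first === second, pool.created); // true 1
```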

Note

Hyperdrive automatically manages the connection pool properties for you, including limiting the total number of connections to your origin database. Refer to [Limits](https://developers.cloudflare.com/hyperdrive/platform/limits/) to learn more.

Learn more about connection pooling behavior and configuration in [Connection pooling](https://developers.cloudflare.com/hyperdrive/concepts/connection-pooling/).

Reduce latency with Placement

If your Worker makes **multiple sequential queries** per request, use [Placement](https://developers.cloudflare.com/workers/configuration/placement/) to run your Worker close to your database. Each query adds round-trip latency: 20-30ms from a distant region, or 1-3ms when placed nearby. Multiple queries compound this difference.

If your Worker makes only one query per request, placement does not improve end-to-end latency. The total round-trip time is the same whether it happens near the user or near the database.

```jsonc

{

"placement": {

"region": "aws:us-east-1", // Match your database region, for example "gcp:us-east4" or "azure:eastus"

},

}

```

### 3. Query Caching

Hyperdrive supports caching of non-mutating (read) queries to your database.

When queries are sent via Hyperdrive, Hyperdrive parses the query and determines whether the query is a mutating (write) or non-mutating (read) query.

For non-mutating queries, Hyperdrive will cache the response for the configured `max_age`, and whenever subsequent queries are made that match the original, Hyperdrive will return the cached response, bypassing the need to issue the query back to the origin database.

Caching reduces the burden on your origin database and accelerates the response times for your queries.

Learn more about query caching behavior and configuration in [Query caching](https://developers.cloudflare.com/hyperdrive/concepts/query-caching/).

## Pooling mode

The Hyperdrive connection pooler operates in transaction mode, where the client that executes the query communicates through a single connection for the duration of a transaction. When that transaction has completed, the connection is returned to the pool.

Hyperdrive supports [`SET` statements](https://www.postgresql.org/docs/current/sql-set.html) for the duration of a transaction or a query. For instance, if you manually create a transaction with `BEGIN`/`COMMIT`, `SET` statements within the transaction will take effect. Moreover, a query that includes a `SET` command (`SET X; SELECT foo FROM bar;`) will also apply the `SET` command. When a connection is returned to the pool, the connection is `RESET` such that the `SET` commands will not take effect on subsequent queries.

This implies that a single Worker invocation may obtain multiple connections to perform its database operations and may need to `SET` any configurations for every query or transaction. It is not recommended to wrap multiple database operations with a single transaction to maintain the `SET` state. Doing so will affect the performance and scaling of Hyperdrive, as the connection cannot be reused by other Worker isolates for the duration of the transaction.

Hyperdrive supports named prepared statements as implemented in the `postgres.js` and `node-postgres` drivers. Named prepared statements in other drivers may have worse performance or may not be supported.

## Related resources

* [Connection lifecycle](https://developers.cloudflare.com/hyperdrive/concepts/connection-lifecycle/)

* [Query caching](https://developers.cloudflare.com/hyperdrive/concepts/query-caching/)

* [Connection pooling](https://developers.cloudflare.com/hyperdrive/concepts/connection-pooling/)

---

title: Query caching · Cloudflare Hyperdrive docs

description: Hyperdrive automatically caches all cacheable queries executed

against your database when query caching is turned on, reducing the need to go

back to your database (incurring latency and database load) for every query

which can be especially useful for popular queries. Query caching is enabled

by default.

lastUpdated: 2026-02-06T18:26:52.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/concepts/query-caching/

md: https://developers.cloudflare.com/hyperdrive/concepts/query-caching/index.md

---

When query caching is turned on, Hyperdrive automatically caches all cacheable queries executed against your database. This reduces the need to go back to your database (incurring latency and database load) for every query, which can be especially useful for popular queries. Query caching is enabled by default.

## What does Hyperdrive cache?

Because Hyperdrive uses database protocols, it can differentiate between a mutating query (a query that writes to the database) and a non-mutating query (a read-only query), allowing Hyperdrive to safely cache read-only queries.

Beyond distinguishing a `SELECT` from an `INSERT`, Hyperdrive also parses the database wire protocol and uses it to differentiate between mutating and non-mutating queries.

For example, a read query that populates the front page of a news site would be cached:

* PostgreSQL

```sql

-- Cacheable

SELECT * FROM articles WHERE DATE(published_time) =

CURRENT_DATE ORDER BY published_time DESC LIMIT 50

```

* MySQL

```sql

-- Cacheable

SELECT * FROM articles WHERE DATE(published_time) =

CURDATE() ORDER BY published_time DESC LIMIT 50

```

Mutating queries (including `INSERT`, `UPSERT`, or `CREATE TABLE`) and queries that use [functions designated as `volatile` by PostgreSQL](https://www.postgresql.org/docs/current/xfunc-volatility.html) are not cached:

* PostgreSQL

```sql

-- Not cached

INSERT INTO users(id, name, email) VALUES(555, 'Matt', 'hello@example.com');

SELECT LASTVAL(), * FROM articles LIMIT 50;

```

* MySQL

```sql

-- Not cached

INSERT INTO users(id, name, email) VALUES(555, 'Thomas', 'hello@example.com');

SELECT LAST_INSERT_ID(), * FROM articles LIMIT 50;

```

## Default cache settings

The default caching behavior for Hyperdrive is as follows:

* `max_age` = 60 seconds (1 minute)

* `stale_while_revalidate` = 15 seconds

The `max_age` setting determines the maximum length of time that a query response will be served from cache. Cached responses may be evicted from the cache before this time if they are rarely used.

The `stale_while_revalidate` setting allows Hyperdrive to continue serving stale cache results for an additional period of time while it is revalidating the cache. In most cases, revalidation should happen rapidly.

You can set a maximum `max_age` of 1 hour.
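How the two settings interact can be sketched as a small classification function. This is an illustrative model of the semantics described above, not Hyperdrive's implementation: `cacheState` is a hypothetical helper, the exact boundary handling is an assumption, and early eviction of rarely used entries is not modeled.

```ts
type CacheState = "fresh" | "stale-while-revalidate" | "expired";

// Classifies a cached response by its age, using the default settings
// (max_age = 60 seconds, stale_while_revalidate = 15 seconds) as defaults.
function cacheState(
  ageSeconds: number,
  maxAge = 60,
  staleWhileRevalidate = 15,
): CacheState {
  if (ageSeconds < maxAge) {
    return "fresh"; // served directly from cache
  }
  if (ageSeconds < maxAge + staleWhileRevalidate) {
    return "stale-while-revalidate"; // served stale while the cache is refreshed
  }
  return "expired"; // the query goes back to the origin database
}

console.log(cacheState(30), cacheState(70), cacheState(80));
// fresh stale-while-revalidate expired
```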

## Disable caching

Disable caching on a per-Hyperdrive basis by using the [Wrangler](https://developers.cloudflare.com/workers/wrangler/install-and-update/) CLI to set the `--caching-disabled` option to `true`.

For example:

```sh

# wrangler v3.11 and above required

npx wrangler hyperdrive update my-hyperdrive-id --origin-password my-db-password --caching-disabled true

```

You can also configure multiple Hyperdrive connections from a single application: one connection that enables caching for popular queries, and a second connection where you do not want to cache queries, but still benefit from Hyperdrive's latency benefits and connection pooling.

For example, using database drivers:

* PostgreSQL

```ts
import postgres from "postgres";

export default {
  async fetch(request, env, ctx): Promise<Response> {
    // Create clients inside your handler, not in global scope
    const client = postgres(env.HYPERDRIVE.connectionString);
    // ...
    const clientNoCache = postgres(env.HYPERDRIVE_CACHE_DISABLED.connectionString);
    // ...
  },
} satisfies ExportedHandler<Env>;
```

* MySQL

```ts
import { createConnection } from "mysql2/promise";

export default {
  async fetch(request, env, ctx): Promise<Response> {
    // Create connections inside your handler, not in global scope
    const connection = await createConnection({
      host: env.HYPERDRIVE.host,
      user: env.HYPERDRIVE.user,
      password: env.HYPERDRIVE.password,
      database: env.HYPERDRIVE.database,
      port: env.HYPERDRIVE.port,
    });
    // ...
    const connectionNoCache = await createConnection({
      host: env.HYPERDRIVE_CACHE_DISABLED.host,
      user: env.HYPERDRIVE_CACHE_DISABLED.user,
      password: env.HYPERDRIVE_CACHE_DISABLED.password,
      database: env.HYPERDRIVE_CACHE_DISABLED.database,
      port: env.HYPERDRIVE_CACHE_DISABLED.port,
    });
    // ...
  },
} satisfies ExportedHandler<Env>;
```

The Wrangler configuration is the same for both PostgreSQL and MySQL.

```jsonc

{

// Rest of file

"hyperdrive": [

{

"binding": "HYPERDRIVE",

"id": ""

},

{

"binding": "HYPERDRIVE_CACHE_DISABLED",

"id": ""

}

]

}

```
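With both bindings in place, your code decides per query which one to use. A minimal sketch of that choice is shown below; `HyperdriveLike`, `Bindings`, and `connectionStringFor` are hypothetical names for illustration, and the rule for what counts as needing fresh data is up to your application.

```ts
// Minimal shapes assumed for this sketch (hypothetical types).
interface HyperdriveLike {
  connectionString: string;
}

interface Bindings {
  HYPERDRIVE: HyperdriveLike;
  HYPERDRIVE_CACHE_DISABLED: HyperdriveLike;
}

// Picks the uncached binding for reads that must reflect the latest writes,
// and the cached binding for reads that can tolerate slightly stale results.
function connectionStringFor(env: Bindings, needsFreshData: boolean): string {
  return needsFreshData
    ? env.HYPERDRIVE_CACHE_DISABLED.connectionString
    : env.HYPERDRIVE.connectionString;
}
```

For example, a request that renders a popular listing page might pass `false`, while a request that reads a record immediately after updating it might pass `true`.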

## Next steps

* Learn more about [How Hyperdrive works](https://developers.cloudflare.com/hyperdrive/concepts/how-hyperdrive-works/).

* Learn how to [Connect to PostgreSQL](https://developers.cloudflare.com/hyperdrive/examples/connect-to-postgres/) from Hyperdrive.

* Review [Troubleshooting common issues](https://developers.cloudflare.com/hyperdrive/observability/troubleshooting/) when connecting a database to Hyperdrive.

---

title: Connect to a private database using Tunnel · Cloudflare Hyperdrive docs

description: Hyperdrive can securely connect to your private databases using

Cloudflare Tunnel and Cloudflare Access.

lastUpdated: 2026-02-06T11:48:20.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/hyperdrive/configuration/connect-to-private-database/

md: https://developers.cloudflare.com/hyperdrive/configuration/connect-to-private-database/index.md

---

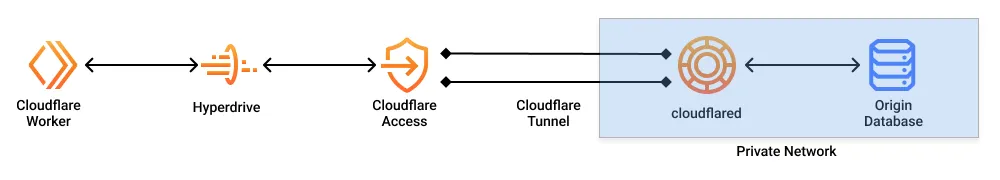

Hyperdrive can securely connect to your private databases using [Cloudflare Tunnel](https://developers.cloudflare.com/cloudflare-one/networks/connectors/cloudflare-tunnel/) and [Cloudflare Access](https://developers.cloudflare.com/cloudflare-one/access-controls/policies/).

## How it works

When your database is isolated within a private network (such as a [virtual private cloud](https://www.cloudflare.com/learning/cloud/what-is-a-virtual-private-cloud) or an on-premise network), you must enable a secure connection from your network to Cloudflare.

* [Cloudflare Tunnel](https://developers.cloudflare.com/cloudflare-one/networks/connectors/cloudflare-tunnel/) is used to establish the secure tunnel connection.

* [Cloudflare Access](https://developers.cloudflare.com/cloudflare-one/access-controls/policies/) is used to restrict access to your tunnel such that only specific Hyperdrive configurations can access it.

A request from the Cloudflare Worker to the origin database goes through Hyperdrive, Cloudflare Access, and the Cloudflare Tunnel established by `cloudflared`. `cloudflared` must be running in the private network in which your database is accessible.

The Cloudflare Tunnel will establish an outbound bidirectional connection from your private network to Cloudflare. Cloudflare Access will secure your Cloudflare Tunnel to be only accessible by your Hyperdrive configuration.

## Before you start

All of the tutorials assume you have already completed the [Get started guide](https://developers.cloudflare.com/workers/get-started/guide/), which gets you set up with a Cloudflare Workers account, [C3](https://github.com/cloudflare/workers-sdk/tree/main/packages/create-cloudflare), and [Wrangler](https://developers.cloudflare.com/workers/wrangler/install-and-update/).

Warning

If your organization also uses [Super Bot Fight Mode](https://developers.cloudflare.com/bots/get-started/super-bot-fight-mode/), keep **Definitely Automated** set to **Allow**. Otherwise, tunnels might fail with a `websocket: bad handshake` error.

## Prerequisites

* A database in your private network, [configured to use TLS/SSL](https://developers.cloudflare.com/hyperdrive/examples/connect-to-postgres/#supported-tls-ssl-modes).

* A hostname on your Cloudflare account, which will be used to route requests to your database.

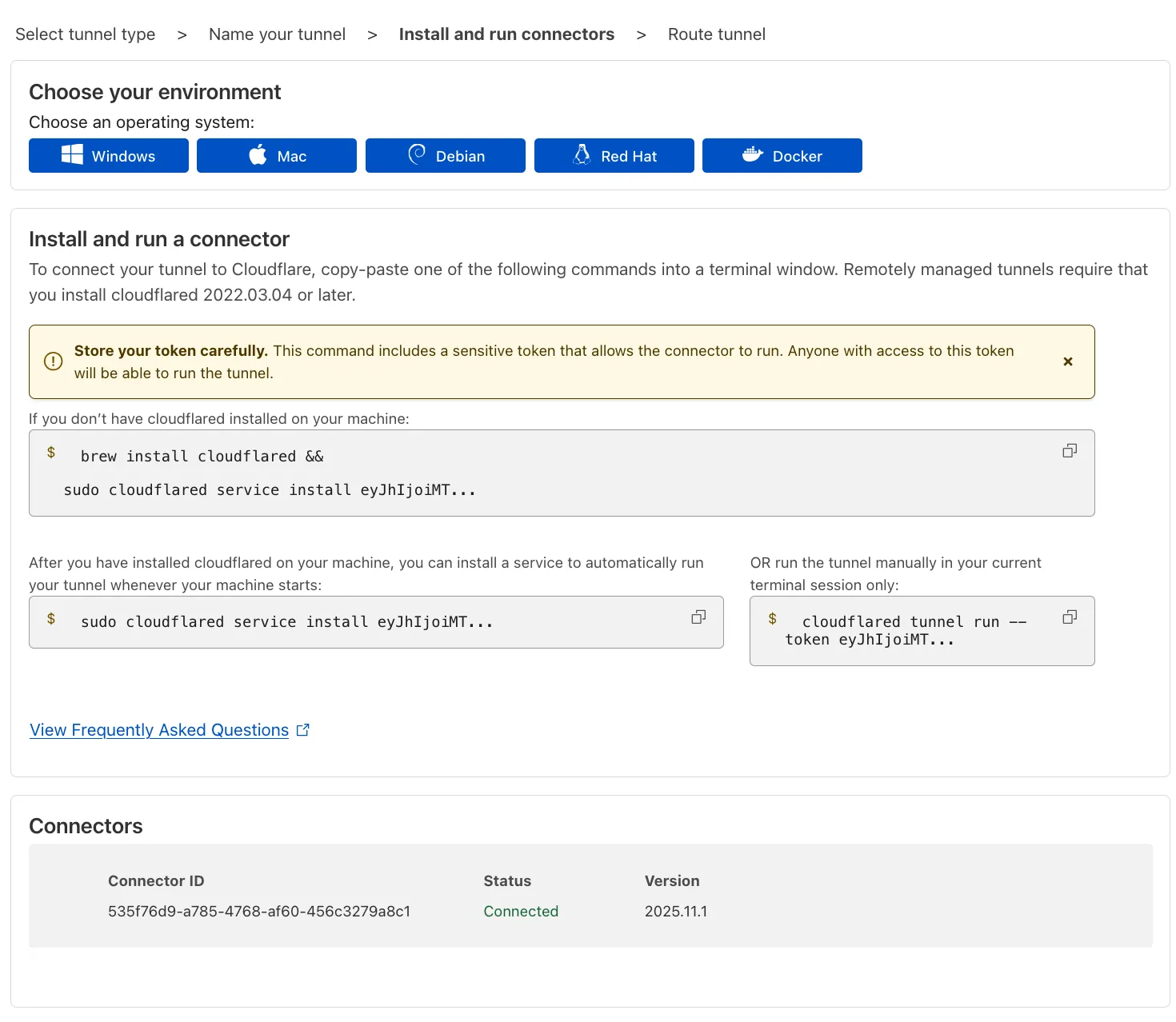

## 1. Create a tunnel in your private network

### 1.1. Create a tunnel

First, create a [Cloudflare Tunnel](https://developers.cloudflare.com/cloudflare-one/networks/connectors/cloudflare-tunnel/) in your private network to establish a secure connection between your network and Cloudflare. Your network must be configured such that the tunnel has permissions to egress to the Cloudflare network and access the database within your network.

1. Log in to [Cloudflare One](https://one.dash.cloudflare.com) and go to **Networks** > **Connectors** > **Cloudflare Tunnels**.

2. Select **Create a tunnel**.

3. Choose **Cloudflared** for the connector type and select **Next**.

4. Enter a name for your tunnel. We suggest choosing a name that reflects the type of resources you want to connect through this tunnel (for example, `enterprise-VPC-01`).

5. Select **Save tunnel**.

6. Next, you will need to install `cloudflared` and run it. To do so, check that the environment under **Choose an environment** reflects the operating system on your machine, then copy the command in the box below and paste it into a terminal window. Run the command.

7. Once the command has finished running, your connector will appear in Cloudflare One.

8. Select **Next**.

### 1.2. Connect your database using a public hostname

Your tunnel must be configured to use a public hostname on Cloudflare so that Hyperdrive can route requests to it. If you don't have a hostname on Cloudflare yet, you will need to [register a new hostname](https://developers.cloudflare.com/registrar/get-started/register-domain/) or [add a zone](https://developers.cloudflare.com/dns/zone-setups/) to Cloudflare to proceed.

1. In the **Published application routes** tab, choose a **Domain** and specify any subdomain or path information. This will be used in your Hyperdrive configuration to route to this tunnel.

2. In the **Service** section, specify **Type** `TCP` and the URL and configured port of your database, such as `localhost:5432` or `my-database-host.database-provider.com:5432`. This address will be used by the tunnel to route requests to your database.

3. Select **Save tunnel**.

Note

If you are setting up the tunnel through the CLI instead ([locally-managed tunnel](https://developers.cloudflare.com/cloudflare-one/networks/connectors/cloudflare-tunnel/do-more-with-tunnels/local-management/)), you will have to complete these steps manually. Follow the Cloudflare Zero Trust documentation to [add a public hostname to your tunnel](https://developers.cloudflare.com/cloudflare-one/networks/connectors/cloudflare-tunnel/routing-to-tunnel/dns/) and [configure the public hostname to route to the address of your database](https://developers.cloudflare.com/cloudflare-one/networks/connectors/cloudflare-tunnel/do-more-with-tunnels/local-management/configuration-file/).
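For a locally-managed tunnel, the hostname-to-database routing described above lives in the `cloudflared` configuration file as an ingress rule. The following is a minimal sketch of that structure in TypeScript, assuming a single public hostname and a TCP database address. The function names `buildTunnelConfig` and `toYaml`, and all example values, are hypothetical; check the linked configuration file documentation for the authoritative format.

```ts
// One ingress rule in a cloudflared configuration file.
interface IngressRule {
  hostname?: string; // omitted on the final catch-all rule
  service: string;
}

interface TunnelConfig {
  tunnel: string;
  "credentials-file": string;
  ingress: IngressRule[];
}

// Build a config that routes one public hostname to a TCP database address.
// All parameter names are illustrative assumptions.
function buildTunnelConfig(
  tunnelId: string,
  credentialsFile: string,
  publicHostname: string,
  dbHost: string,
  dbPort: number,
): TunnelConfig {
  if (Math.floor(dbPort) !== dbPort || dbPort < 1 || dbPort > 65535) {
    throw new RangeError(`invalid port: ${dbPort}`);
  }
  return {
    tunnel: tunnelId,
    "credentials-file": credentialsFile,
    ingress: [
      { hostname: publicHostname, service: `tcp://${dbHost}:${dbPort}` },
      // The last rule has no hostname and catches all unmatched traffic.
      { service: "http_status:404" },
    ],
  };
}

// Render the config as YAML text suitable for a config.yml file.
function toYaml(config: TunnelConfig): string {
  const lines = [
    `tunnel: ${config.tunnel}`,
    `credentials-file: ${config["credentials-file"]}`,
    "ingress:",
  ];
  for (const rule of config.ingress) {
    if (rule.hostname !== undefined) {
      lines.push(`  - hostname: ${rule.hostname}`);
      lines.push(`    service: ${rule.service}`);
    } else {
      lines.push(`  - service: ${rule.service}`);
    }
  }
  return lines.join("\n");
}
```

The hostname in the first rule is the same value you later give Hyperdrive as the database host, while the `tcp://` service address is only ever resolved from inside your private network.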

## 2. Create and configure Hyperdrive to connect to the Cloudflare Tunnel

To restrict access to the Cloudflare Tunnel to Hyperdrive, a [Cloudflare Access application](https://developers.cloudflare.com/cloudflare-one/access-controls/applications/http-apps/) must be configured with a [Policy](https://developers.cloudflare.com/cloudflare-one/traffic-policies/) that requires requests to contain a valid [Service Auth token](https://developers.cloudflare.com/cloudflare-one/access-controls/policies/#service-auth).

The Cloudflare dashboard can automatically create and configure the underlying [Cloudflare Access application](https://developers.cloudflare.com/cloudflare-one/access-controls/applications/http-apps/), [Service Auth token](https://developers.cloudflare.com/cloudflare-one/access-controls/policies/#service-auth), and [Policy](https://developers.cloudflare.com/cloudflare-one/traffic-policies/) on your behalf. Alternatively, you can manually create the Access application and configure the Policies.

Automatic creation

### 2.1. (Automatic) Create a Hyperdrive configuration in the Cloudflare dashboard

Create a Hyperdrive configuration in the Cloudflare dashboard to automatically configure Hyperdrive to connect to your Cloudflare Tunnel.

1. In the [Cloudflare dashboard](https://dash.cloudflare.com/?to=/:account/workers/hyperdrive), navigate to **Storage & Databases > Hyperdrive** and click **Create configuration**.

2. Select **Private database**.

3. In the **Networking details** section, select the tunnel you are connecting to.

4. In the **Networking details** section, select the hostname associated with the tunnel. If there is no hostname for your database, return to step [1.2. Connect your database using a public hostname](https://developers.cloudflare.com/hyperdrive/configuration/connect-to-private-database/#12-connect-your-database-using-a-public-hostname).

5. In the **Access Service Authentication Token** section, select **Create new (automatic)**.

6. In the **Access Application** section, select **Create new (automatic)**.

7. In the **Database connection details** section, enter the database **name**, **user**, and **password**.

Manual creation

### 2.1. (Manual) Create a service token

The service token will be used to restrict requests to the tunnel, and is needed for the next step.

1. In [Cloudflare One](https://one.dash.cloudflare.com), go to **Access controls** > **Service credentials** > **Service Tokens**.

2. Select **Create Service Token**.

3. Name the service token. The name allows you to easily identify events related to the token in the logs and to revoke the token individually.

4. Set a **Service Token Duration** of `Non-expiring`. This prevents the service token from expiring, ensuring it can be used throughout the life of the Hyperdrive configuration.

5. Select **Generate token**. You will see the generated Client ID and Client Secret for the service token, as well as their respective request headers.

6. Copy the Access Client ID and Access Client Secret. These will be used when creating the Hyperdrive configuration.

Warning

This is the only time Cloudflare Access will display the Client Secret. If you lose the Client Secret, you must regenerate the service token.
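The request headers shown alongside the Client ID and Client Secret are how a generic HTTP client presents a service token to Access. The sketch below builds those headers in TypeScript for illustration only. Hyperdrive presents the credentials for you once they are stored in the configuration, so you do not need this code to complete the guide. The function name `serviceTokenHeaders` is hypothetical.

```ts
// Build the two headers Cloudflare Access reads when validating a service token.
// Illustrative helper only; Hyperdrive handles this internally.
function serviceTokenHeaders(
  clientId: string,
  clientSecret: string,
): Record<string, string> {
  if (clientId === "" || clientSecret === "") {
    throw new Error("both the Client ID and the Client Secret are required");
  }
  return {
    "CF-Access-Client-Id": clientId,
    "CF-Access-Client-Secret": clientSecret,
  };
}
```

Treat the returned object like any other secret: do not log it, and load the values from a secret store rather than hard-coding them.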

### 2.2. (Manual) Create an Access application to secure the tunnel

[Cloudflare Access](https://developers.cloudflare.com/cloudflare-one/access-controls/policies/) will be used to verify that requests to the tunnel originate from Hyperdrive using the service token created above.

1. In [Cloudflare One](https://one.dash.cloudflare.com), go to **Access controls** > **Applications**.

2. Select **Add an application**.

3. Select **Self-hosted**.

4. Enter any name for the application.

5. In **Session Duration**, select `No duration, expires immediately`.

6. Select **Add public hostname** and enter the subdomain and domain that were previously set for the tunnel application.

7. Select **Create new policy**.

8. Enter a **Policy name** and set the **Action** to *Service Auth*.

9. Create an **Include** rule. Specify a **Selector** of *Service Token* and the **Value** of the service token you created in step [2.1. (Manual) Create a service token](#21-create-a-service-token).

10. Save the policy.

11. Go back to the application configuration and add the newly created Access policy.

12. In **Login methods**, turn off *Accept all available identity providers* and clear all identity providers.

13. Select **Next**.

14. In **Application Appearance**, turn off **Show application in App Launcher**.

15. Select **Next**.

16. Select **Next**.

17. Save the application.
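The effect of the steps above can be summarized as a conceptual model: a request reaches the tunnel only if it presents a service token that the policy's Include rule names, and the presented secret matches. The TypeScript below is a simplified sketch of that decision. Every type and function name in it is hypothetical and does not mirror the Access API or its internal implementation.

```ts
// Conceptual model only. These types are hypothetical.
interface PresentedCredentials {
  clientId: string;
  clientSecret: string;
}

interface ServiceAuthPolicy {
  action: "service_auth";
  // Client IDs of the service tokens named in the policy's Include rule.
  includeServiceTokenIds: string[];
}

// Decide whether a request would pass the policy.
// `issuedTokens` maps each issued Client ID to its Client Secret.
function isRequestAllowed(
  policy: ServiceAuthPolicy,
  issuedTokens: Record<string, string>,
  presented: PresentedCredentials | undefined,
): boolean {
  // No credentials: with all identity providers turned off there is no login fallback.
  if (presented === undefined) return false;
  // The token must be named by the Include rule.
  if (policy.includeServiceTokenIds.indexOf(presented.clientId) === -1) return false;
  // The token must exist and the secret must match.
  if (!Object.prototype.hasOwnProperty.call(issuedTokens, presented.clientId)) return false;
  return issuedTokens[presented.clientId] === presented.clientSecret;
}
```

In this model, a valid token that the policy does not include is rejected just like a missing one, which is why the Include rule must reference the same service token you give to Hyperdrive.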

### 2.3. (Manual) Create a Hyperdrive configuration

To create a Hyperdrive configuration for your private database, you'll need to specify the Access application and Cloudflare Tunnel information upon creation.

* Wrangler

```sh

# wrangler v3.65 and above required

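npx wrangler hyperdrive create <NAME_OF_HYPERDRIVE_CONFIG> --host=<HOSTNAME_FOR_THE_TUNNEL> --user=<USERNAME> --password=<PASSWORD> --database=<DATABASE> --access-client-id=<ACCESS_CLIENT_ID> --access-client-secret=<ACCESS_CLIENT_SECRET>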

```

* Terraform

```terraform

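resource "cloudflare_hyperdrive_config" "<TERRAFORM_VARIABLE_NAME_FOR_CONFIGURATION>" {
  account_id = "<YOUR_ACCOUNT_ID>"
  name       = "<NAME_OF_HYPERDRIVE_CONFIG>"

  origin = {
    # The public hostname configured for the tunnel in step 1.2
    host     = "<HOSTNAME_FOR_THE_TUNNEL>"
    database = "<DATABASE>"
    user     = "<USERNAME>"
    password = "<PASSWORD>"
    scheme   = "postgres"

    # Service token credentials created in step 2.1
    access_client_id     = "<ACCESS_CLIENT_ID>"
    access_client_secret = "<ACCESS_CLIENT_SECRET>"
  }

  caching = {
    disabled = false
  }
}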

```

This will create a Hyperdrive configuration using the usual database information (database name, database host, database user, and database password).

It also sets the Access Client ID and Access Client Secret of the service token. When Hyperdrive makes requests to the tunnel, Access intercepts them and validates them using the service token's credentials.
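If you script the Wrangler command, it can help to assemble the arguments from a typed object so that no value is left out. The following is a minimal sketch under that assumption. The interface `PrivateDatabaseConfig` and the function `wranglerCreateArgs` are hypothetical names, and only the flags shown in the Wrangler command in this section are used.

```ts
// Values needed to create a Hyperdrive configuration for a private database.
// Hypothetical shape, introduced for illustration.
interface PrivateDatabaseConfig {
  name: string; // name of the Hyperdrive configuration
  host: string; // public hostname configured for the tunnel
  user: string;
  password: string;
  database: string;
  accessClientId: string;
  accessClientSecret: string;
}

// Return the argument list to pass to `npx`.
function wranglerCreateArgs(c: PrivateDatabaseConfig): string[] {
  const fields: Array<[string, string]> = [
    ["name", c.name],
    ["host", c.host],
    ["user", c.user],
    ["password", c.password],
    ["database", c.database],
    ["accessClientId", c.accessClientId],
    ["accessClientSecret", c.accessClientSecret],
  ];
  for (const [label, value] of fields) {
    if (value === "") throw new Error(`missing value for ${label}`);
  }
  return [
    "wrangler",
    "hyperdrive",
    "create",
    c.name,
    `--host=${c.host}`,
    `--user=${c.user}`,
    `--password=${c.password}`,
    `--database=${c.database}`,
    `--access-client-id=${c.accessClientId}`,
    `--access-client-secret=${c.accessClientSecret}`,
  ];
}
```

Passing the result as an argument array to a process-spawning API, rather than joining it into a shell string, avoids the shell interpreting special characters in the password.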

Note