---

title: Overview · Cloudflare AI Gateway docs

description: Cloudflare's AI Gateway allows you to gain visibility and control

over your AI apps. By connecting your apps to AI Gateway, you can gather

insights on how people are using your application with analytics and logging

and then control how your application scales with features such as caching,

rate limiting, as well as request retries, model fallback, and more. Better

yet - it only takes one line of code to get started.

lastUpdated: 2026-02-18T19:10:24.000Z

chatbotDeprioritize: false

tags: AI

source_url:

html: https://developers.cloudflare.com/ai-gateway/

md: https://developers.cloudflare.com/ai-gateway/index.md

---

Observe and control your AI applications.

Available on all plans

Cloudflare's AI Gateway allows you to gain visibility and control over your AI apps. By connecting your apps to AI Gateway, you can gather insights on how people are using your application with analytics and logging, and then control how your application scales with features such as caching, rate limiting, request retries, model fallback, and more. Better yet, it only takes one line of code to get started.

Check out the [Get started guide](https://developers.cloudflare.com/ai-gateway/get-started/) to learn how to configure your applications with AI Gateway.

## Features

### Analytics

View metrics such as the number of requests, tokens, and the cost of running your application.

[View Analytics](https://developers.cloudflare.com/ai-gateway/observability/analytics/)

### Logging

Gain insight into requests and errors.

[View Logging](https://developers.cloudflare.com/ai-gateway/observability/logging/)

### Caching

Serve requests directly from Cloudflare's cache instead of the original model provider for faster requests and cost savings.

[Use Caching](https://developers.cloudflare.com/ai-gateway/features/caching/)

### Rate limiting

Control how your application scales by limiting the number of requests your application receives.

[Use Rate limiting](https://developers.cloudflare.com/ai-gateway/features/rate-limiting/)

### Request retry and fallback

Improve resilience by defining request retries and model fallbacks to use in case of an error.

[Use Request retry and fallback](https://developers.cloudflare.com/ai-gateway/features/dynamic-routing/)

### Your favorite providers

Workers AI, Anthropic, Google Gemini, OpenAI, Replicate, and more work with AI Gateway.

[Use Your favorite providers](https://developers.cloudflare.com/ai-gateway/usage/providers/)

***

## Related products

**[Workers AI](https://developers.cloudflare.com/workers-ai/)**

Run machine learning models, powered by serverless GPUs, on Cloudflare’s global network.

**[Vectorize](https://developers.cloudflare.com/vectorize/)**

Build full-stack AI applications with Vectorize, Cloudflare's vector database. Adding Vectorize enables you to perform tasks such as semantic search, recommendations, and anomaly detection, or to provide context and memory to an LLM.

## More resources

[Developer Discord](https://discord.cloudflare.com)

Connect with the Workers community on Discord to ask questions, show what you are building, and discuss the platform with other developers.

[Use cases](https://developers.cloudflare.com/use-cases/ai/)

Learn how you can build and deploy ambitious AI applications to Cloudflare's global network.

[@CloudflareDev](https://x.com/cloudflaredev)

Follow @CloudflareDev on Twitter to learn about product announcements and what is new in Cloudflare Workers.

---

title: AI Assistant · Cloudflare AI Gateway docs

lastUpdated: 2024-10-30T16:07:34.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/ai/

md: https://developers.cloudflare.com/ai-gateway/ai/index.md

---

---

title: REST API reference · Cloudflare AI Gateway docs

lastUpdated: 2024-12-18T13:12:05.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/api-reference/

md: https://developers.cloudflare.com/ai-gateway/api-reference/index.md

---

---

title: Changelog · Cloudflare AI Gateway docs

description: Subscribe to RSS

lastUpdated: 2025-05-09T15:42:57.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/changelog/

md: https://developers.cloudflare.com/ai-gateway/changelog/index.md

---

[Subscribe to RSS](https://developers.cloudflare.com/ai-gateway/changelog/index.xml)

## 2025-11-21

Unified Billing now supports opt-in Zero Data Retention, which ensures that supported upstream AI providers (for example, [OpenAI ZDR](https://platform.openai.com/docs/guides/your-data#zero-data-retention)) do not retain request and response data.

## 2025-11-14

* Added support for OpenAI-compatible [Custom Providers](https://developers.cloudflare.com/ai-gateway/configuration/custom-providers/), for inference with AI providers that AI Gateway does not natively support.

* Cost and usage tracking for voice models

* You can now use Workers AI via AI Gateway with no additional configuration. Previously, this required generating and passing additional Workers AI tokens.

## 2025-11-06

**Unified Billing**

* [Unified Billing](https://developers.cloudflare.com/ai-gateway/features/unified-billing/) is now in open beta. Connect multiple AI providers (e.g. OpenAI, Anthropic) without any additional setup and pay through a single Cloudflare invoice. To use it, purchase credits in the Cloudflare Dashboard and spend them across providers via AI Gateway.

## 2025-11-03

**New supported providers**

* [Baseten](https://developers.cloudflare.com/ai-gateway/usage/providers/baseten/)

* [Ideogram](https://developers.cloudflare.com/ai-gateway/usage/providers/ideogram/)

* [Deepgram](https://developers.cloudflare.com/ai-gateway/usage/providers/deepgram/)

## 2025-10-29

* Added support for the pipecat model on Workers AI.

* Fixed OpenAI Realtime WebSocket authentication.

## 2025-10-24

* Added cost tracking and observability support for async video generation requests for OpenAI Sora 2 and Google AI Studio Veo 3.

* `cf-aig-eventId` and `cf-aig-log-id` headers are now returned on all requests, including failed requests.

## 2025-10-14

The Model playground is now available in the AI Gateway section of the Cloudflare dashboard, allowing you to send requests to and compare model behavior across all models supported by AI Gateway.

## 2025-10-07

* Added support for [Deepgram on Workers AI](https://developers.cloudflare.com/ai-gateway/usage/websockets-api/realtime-api/#deepgram-workers-ai) using WebSocket transport.

* Added [Parallel](https://developers.cloudflare.com/ai-gateway/usage/providers/parallel/) as a provider.

## 2025-09-24

**OTEL Tracing**

Added OpenTelemetry (OTEL) tracing export for better observability and debugging of AI Gateway requests.

## 2025-09-21

* Added [Fal AI](https://developers.cloudflare.com/ai-gateway/usage/providers/fal/) as a provider.

* You can now set up custom Stripe usage reporting, and report usage and costs for your users directly to Stripe from AI Gateway.

* Fixed incorrectly geoblocked requests for certain regions.

## 2025-09-19

* New API endpoint (`/compat/v1/models`) for listing available models along with their costs.

* Unified API now supports Google Vertex AI providers and all their models.

* BYOK support for requests using WebSocket transport.

## 2025-08-28

**Data Loss Prevention**

[Data loss prevention](https://developers.cloudflare.com/ai-gateway/features/dlp/) capabilities are now available to scan both incoming prompts and outgoing AI responses for sensitive information, ensuring your AI applications maintain security and compliance standards.

## 2025-08-25

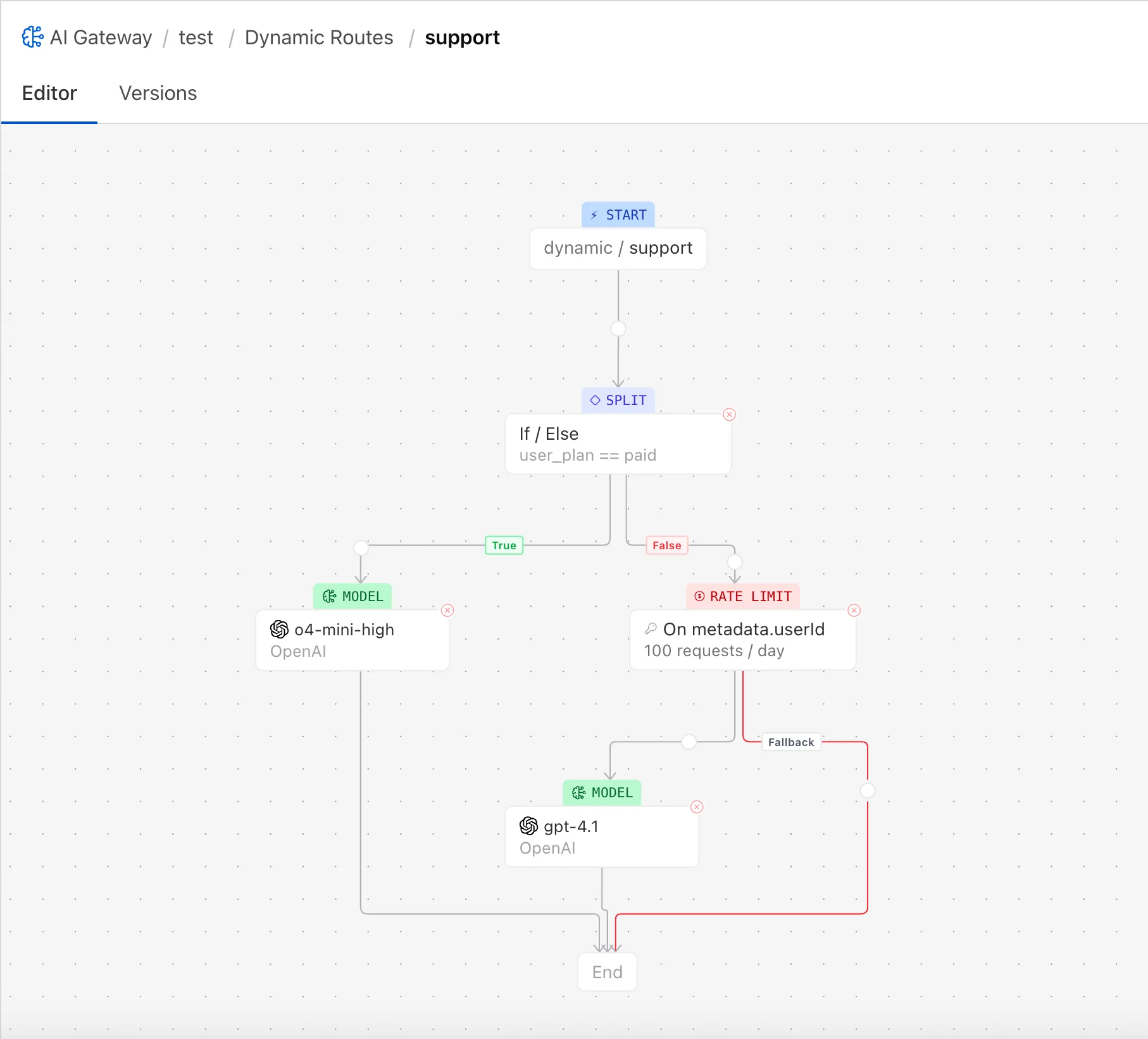

**Dynamic routing**

Introduced [Dynamic routing](https://developers.cloudflare.com/ai-gateway/features/dynamic-routing/), which lets you define flexible request flows, either visually or in JSON, that segment users, enforce quotas, and choose models with fallbacks, all without changing application code.

## 2025-08-21

**Bring your own keys (BYOK)**

Introduced [Bring your own keys (BYOK)](https://developers.cloudflare.com/ai-gateway/configuration/bring-your-own-keys/) allowing you to save your AI provider keys securely with Cloudflare Secret Store and manage them through the Cloudflare dashboard.

## 2025-06-18

**New GA providers**

We have moved the following providers out of beta and into GA:

* [Cartesia](https://developers.cloudflare.com/ai-gateway/usage/providers/cartesia/)

* [Cerebras](https://developers.cloudflare.com/ai-gateway/usage/providers/cerebras/)

* [DeepSeek](https://developers.cloudflare.com/ai-gateway/usage/providers/deepseek/)

* [ElevenLabs](https://developers.cloudflare.com/ai-gateway/usage/providers/elevenlabs/)

* [OpenRouter](https://developers.cloudflare.com/ai-gateway/usage/providers/openrouter/)

## 2025-05-28

**OpenAI Compatibility**

* Introduced a new [OpenAI-compatible chat completions endpoint](https://developers.cloudflare.com/ai-gateway/usage/chat-completion/) to simplify switching between different AI providers without major code modifications.

## 2025-04-22

* Increased Max Number of Gateways per account: Raised the maximum number of gateways per account from 10 to 20 for paid users. This gives you greater flexibility in managing your applications as you build and scale.

* Streaming WebSocket Bug Fix: Resolved an issue affecting streaming responses over [WebSockets](https://developers.cloudflare.com/ai-gateway/configuration/websockets-api/). This fix ensures more reliable and consistent streaming behavior across all supported AI providers.

* Increased Timeout Limits: Extended the default timeout for AI Gateway requests beyond the previous 100-second limit. This enhancement improves support for long-running requests.

## 2025-04-02

**Cache Key Calculation Changes**

* We have updated how [cache](https://developers.cloudflare.com/ai-gateway/features/caching/) keys are calculated. As a result, new cache entries will be created, and you may experience more cache misses than usual during this transition. Please monitor your traffic and performance, and let us know if you encounter any issues.

## 2025-03-18

**WebSockets**

* Added [WebSockets API](https://developers.cloudflare.com/ai-gateway/configuration/websockets-api/) to provide a persistent connection for AI interactions, eliminating repeated handshakes and reducing latency.

## 2025-02-26

**Guardrails**

* Added [Guardrails](https://developers.cloudflare.com/ai-gateway/features/guardrails/), which help you deploy AI applications safely by intercepting and evaluating both user prompts and model responses for harmful content.

## 2025-02-19

**Updated Log Storage Settings**

* Introduced customizable log storage settings, enabling users to:

  * Define the maximum number of logs stored per gateway.

  * Choose how logs are handled when the storage limit is reached:

    * **On** - Automatically delete the oldest logs to ensure new logs are always saved.

    * **Off** - Stop saving new logs when the storage limit is reached.
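The two storage-limit modes behave like a bounded queue that either evicts its oldest entry (**On**) or rejects new entries (**Off**). The TypeScript sketch below models that behavior under stated assumptions: `GatewayLogStore` is a hypothetical class for illustration only and does not describe how AI Gateway stores logs internally.

```typescript
// Conceptual model of the two storage-limit behaviors described above.
// Hypothetical class for illustration; it is not AI Gateway's implementation.
type LogEntry = { id: string; body: string };

class GatewayLogStore {
  private logs: LogEntry[] = [];
  private readonly maxLogs: number;       // maximum number of logs stored per gateway
  private readonly deleteOldest: boolean; // true models "On", false models "Off"

  constructor(maxLogs: number, deleteOldest: boolean) {
    if (!Number.isInteger(maxLogs) || maxLogs < 1) {
      throw new RangeError("maxLogs must be a positive integer");
    }
    this.maxLogs = maxLogs;
    this.deleteOldest = deleteOldest;
  }

  // Returns true if the new log was saved, false if it was dropped.
  save(entry: LogEntry): boolean {
    if (this.logs.length < this.maxLogs) {
      this.logs.push(entry);
      return true;
    }
    if (this.deleteOldest) {
      this.logs.shift();     // "On": evict the oldest log...
      this.logs.push(entry); // ...so the new log is always saved
      return true;
    }
    return false;            // "Off": stop saving new logs at the limit
  }

  ids(): string[] {
    return this.logs.map((log) => log.id);
  }
}
```

With a limit of two logs, a third save evicts the first log in "On" mode and is dropped in "Off" mode.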

## 2025-02-06

**Added request handling**

* Added [request handling options](https://developers.cloudflare.com/ai-gateway/configuration/request-handling/) to help manage AI provider interactions effectively, ensuring your applications remain responsive and reliable.

## 2025-02-05

**New AI Gateway providers**

* **Configuration**: Added [ElevenLabs](https://elevenlabs.io/), [Cartesia](https://docs.cartesia.ai/), and [Cerebras](https://inference-docs.cerebras.ai/) as new providers.

## 2025-01-02

**DeepSeek**

* **Configuration**: Added [DeepSeek](https://developers.cloudflare.com/ai-gateway/usage/providers/deepseek/) as a new provider.

## 2024-12-17

**AI Gateway Dashboard**

* Updated dashboard to view performance, costs, and stats across all gateways.

## 2024-12-13

**Bug Fixes**

* **Bug Fixes**: Fixed Anthropic errors being cached.

* **Bug Fixes**: Fixed `env.AI.run()` requests using authenticated gateways returning authentication error.

## 2024-11-28

**OpenRouter**

* **Configuration**: Added [OpenRouter](https://developers.cloudflare.com/ai-gateway/usage/providers/openrouter/) as a new provider.

## 2024-11-19

**WebSockets API**

* **Configuration**: Added [WebSockets API](https://developers.cloudflare.com/ai-gateway/configuration/websockets-api/) which provides a single persistent connection, enabling continuous communication.

## 2024-11-19

**Authentication**

* **Configuration**: Added [Authentication](https://developers.cloudflare.com/ai-gateway/configuration/authentication/) which adds security by requiring a valid authorization token for each request.

## 2024-10-28

**Grok**

* **Providers**: Added [Grok](https://developers.cloudflare.com/ai-gateway/usage/providers/grok/) as a new provider.

## 2024-10-17

**Vercel SDK**

Added support for the [Vercel AI SDK](https://sdk.vercel.ai/). The SDK supports many different AI providers, tools for streaming completions, and more.

## 2024-09-26

**Persistent logs**

* **Logs**: AI Gateway now has [logs that persist](https://developers.cloudflare.com/ai-gateway/observability/logging/index), giving you the flexibility to store them for your preferred duration.

## 2024-09-26

**Logpush**

* **Logs**: Securely export logs to an external storage location using [Logpush](https://developers.cloudflare.com/ai-gateway/observability/logging/logpush).

## 2024-09-26

**Pricing**

* **Pricing**: Added [pricing](https://developers.cloudflare.com/ai-gateway/reference/pricing/) for storing logs persistently.

## 2024-09-26

**Evaluations**

* **Configurations**: Use AI Gateway’s [Evaluations](https://developers.cloudflare.com/ai-gateway/evaluations) to make informed decisions on how to optimize your AI application.

## 2024-09-10

**Custom costs**

* **Configuration**: AI Gateway now allows you to apply [custom costs](https://developers.cloudflare.com/ai-gateway/configuration/custom-costs/) at the request level, so that cost tracking accurately reflects your unique pricing and overrides the default or public model costs.

## 2024-08-02

**Mistral AI**

* **Providers**: Added [Mistral AI](https://developers.cloudflare.com/ai-gateway/usage/providers/mistral/) as a new provider.

## 2024-07-23

**Google AI Studio**

* **Providers**: Added [Google AI Studio](https://developers.cloudflare.com/ai-gateway/usage/providers/google-ai-studio/) as a new provider.

## 2024-07-10

**Custom metadata**

AI Gateway now supports adding [custom metadata](https://developers.cloudflare.com/ai-gateway/configuration/custom-metadata/) to requests, improving tracking and analysis of incoming requests.

## 2024-07-09

**Logs**

[Logs](https://developers.cloudflare.com/ai-gateway/observability/analytics/#logging) are now available for the last 24 hours.

## 2024-06-24

**Custom cache key headers**

AI Gateway now supports [custom cache key headers](https://developers.cloudflare.com/ai-gateway/features/caching/#custom-cache-key-cf-aig-cache-key).

## 2024-06-18

**Access an AI Gateway through a Worker**

Workers AI now natively supports [AI Gateway](https://developers.cloudflare.com/ai-gateway/usage/providers/workersai/#worker).

## 2024-05-22

**AI Gateway is now GA**

AI Gateway has moved from beta to GA.

## 2024-05-16

* **Providers**: Added [Cohere](https://developers.cloudflare.com/ai-gateway/usage/providers/cohere/) and [Groq](https://developers.cloudflare.com/ai-gateway/usage/providers/groq/) as new providers.

## 2024-05-09

* Added new endpoints to the [REST API](https://developers.cloudflare.com/api/resources/ai_gateway/methods/create/).

## 2024-03-26

* [LLM Side Channel vulnerability fixed](https://blog.cloudflare.com/ai-side-channel-attack-mitigated)

* **Providers**: Added Anthropic, Google Vertex, and Perplexity as providers.

## 2023-10-26

* **Real-time Logs**: Logs are now real-time, showing logs for the last hour. If you need persistent logs, please let the team know on Discord. We are building a persistent logs feature for those who want to store their logs for longer.

* **Providers**: Azure OpenAI is now supported as a provider!

* **Docs**: Added Azure OpenAI example.

* **Bug Fixes**: Fixed errors with costs and tokens.

## 2023-10-09

* **Logs**: Logs will now be limited to the last 24 hours. If you have a use case that requires more logging, please reach out to the team on Discord.

* **Dashboard**: Logs now refresh automatically.

* **Docs**: Fixed the Workers AI example in the docs and dashboard.

* **Caching**: Embedding requests are now cacheable. Rate limits do not apply to cached requests.

* **Bug Fixes**: Identical requests to different providers are no longer incorrectly served from cache. Streaming now works as expected, including for the Universal endpoint.

* **Known Issues**: There's currently a bug with costs that we are investigating.

---

title: Configuration · Cloudflare AI Gateway docs

description: Configure your AI Gateway with multiple options and customizations.

lastUpdated: 2025-05-28T19:49:34.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/ai-gateway/configuration/

md: https://developers.cloudflare.com/ai-gateway/configuration/index.md

---

Configure your AI Gateway with multiple options and customizations.

* [BYOK (Store Keys)](https://developers.cloudflare.com/ai-gateway/configuration/bring-your-own-keys/)

* [Custom costs](https://developers.cloudflare.com/ai-gateway/configuration/custom-costs/)

* [Custom Providers](https://developers.cloudflare.com/ai-gateway/configuration/custom-providers/)

* [Manage gateways](https://developers.cloudflare.com/ai-gateway/configuration/manage-gateway/)

* [Request handling](https://developers.cloudflare.com/ai-gateway/configuration/request-handling/)

* [Fallbacks](https://developers.cloudflare.com/ai-gateway/configuration/fallbacks/)

* [Authenticated Gateway](https://developers.cloudflare.com/ai-gateway/configuration/authentication/)

---

title: Architectures · Cloudflare AI Gateway docs

description: Learn how you can use AI Gateway within your existing architecture.

lastUpdated: 2025-10-13T13:40:40.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/ai-gateway/demos/

md: https://developers.cloudflare.com/ai-gateway/demos/index.md

---

Learn how you can use AI Gateway within your existing architecture.

## Reference architectures

Explore the following reference architectures that use AI Gateway:

[Fullstack applications](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/fullstack-application/)

[A practical example of how these services come together in a real fullstack application architecture.](https://developers.cloudflare.com/reference-architecture/diagrams/serverless/fullstack-application/)

[Multi-vendor AI observability and control](https://developers.cloudflare.com/reference-architecture/diagrams/ai/ai-multivendor-observability-control/)

[By shifting features such as rate limiting, caching, and error handling to the proxy layer, organizations can apply unified configurations across services and inference service providers.](https://developers.cloudflare.com/reference-architecture/diagrams/ai/ai-multivendor-observability-control/)

[AI Vibe Coding Platform](https://developers.cloudflare.com/reference-architecture/diagrams/ai/ai-vibe-coding-platform/)

[Cloudflare's low-latency, fully serverless compute platform, Workers offers powerful capabilities to enable A/B testing using a server-side implementation.](https://developers.cloudflare.com/reference-architecture/diagrams/ai/ai-vibe-coding-platform/)

---

title: Evaluations · Cloudflare AI Gateway docs

description: Understanding your application's performance is essential for

optimization. Developers often have different priorities, and finding the

optimal solution involves balancing key factors such as cost, latency, and

accuracy. Some prioritize low-latency responses, while others focus on

accuracy or cost-efficiency.

lastUpdated: 2025-08-19T11:42:14.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/ai-gateway/evaluations/

md: https://developers.cloudflare.com/ai-gateway/evaluations/index.md

---

Understanding your application's performance is essential for optimization. Developers often have different priorities, and finding the optimal solution involves balancing key factors such as cost, latency, and accuracy. Some prioritize low-latency responses, while others focus on accuracy or cost-efficiency.

AI Gateway's Evaluations provide the data needed to make informed decisions on how to optimize your AI application. Whether it is adjusting the model, provider, or prompt, this feature delivers insights into key metrics around performance, speed, and cost. It empowers developers to better understand their application's behavior, ensuring improved accuracy, reliability, and customer satisfaction.

Evaluations use datasets, which are collections of logs stored for analysis. You can create datasets by applying filters in the Logs tab to narrow down the specific logs you want to evaluate.

Our first step toward comprehensive AI evaluations is human feedback (currently in open beta). We will continue to build and expand AI Gateway with additional evaluators.

[Learn how to set up an evaluation](https://developers.cloudflare.com/ai-gateway/evaluations/set-up-evaluations/) including creating datasets, selecting evaluators, and running the evaluation process.

---

title: Features · Cloudflare AI Gateway docs

description: AI Gateway provides a comprehensive set of features to help you

build, deploy, and manage AI applications with confidence. From performance

optimization to security and observability, these features work together to

create a robust AI infrastructure.

lastUpdated: 2025-09-02T18:45:30.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/features/

md: https://developers.cloudflare.com/ai-gateway/features/index.md

---

AI Gateway provides a comprehensive set of features to help you build, deploy, and manage AI applications with confidence. From performance optimization to security and observability, these features work together to create a robust AI infrastructure.

## Core Features

### Performance & Cost Optimization

### Caching

Serve identical requests directly from Cloudflare's global cache, reducing latency by up to 90% and significantly cutting costs by avoiding repeated API calls to AI providers.

**Key benefits:**

* Reduced response times for repeated queries

* Lower API costs through cache hits

* Configurable TTL and per-request cache control

* Works across all supported AI providers

[Use Caching](https://developers.cloudflare.com/ai-gateway/features/caching/)
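A minimal sketch of per-request cache control, assuming the header names `cf-aig-cache-ttl` (TTL in seconds) and `cf-aig-skip-cache`, plus the `cf-aig-cache-key` header referenced in the changelog. Confirm the exact names and value formats on the Caching page before relying on them; the `cacheHeaders` helper is hypothetical.

```typescript
// Sketch: per-request cache control headers for a request sent through AI Gateway.
// Header names are assumptions to verify against the Caching documentation.
interface CacheOptions {
  ttlSeconds?: number; // how long a response may be served from cache
  skipCache?: boolean; // bypass the cache for this request
  cacheKey?: string;   // custom cache key instead of the computed one
}

function cacheHeaders(opts: CacheOptions): Record<string, string> {
  const headers: Record<string, string> = {};
  if (opts.skipCache) {
    // This sketch sends only the skip header when bypassing the cache.
    headers["cf-aig-skip-cache"] = "true";
    return headers;
  }
  if (opts.ttlSeconds !== undefined) {
    if (!Number.isInteger(opts.ttlSeconds) || opts.ttlSeconds < 0) {
      throw new RangeError("ttlSeconds must be a non-negative integer");
    }
    headers["cf-aig-cache-ttl"] = String(opts.ttlSeconds);
  }
  if (opts.cacheKey) {
    headers["cf-aig-cache-key"] = opts.cacheKey;
  }
  return headers;
}
```

Merge the returned object into the headers of any request you send through the gateway, for example `cacheHeaders({ ttlSeconds: 3600 })` to cache a response for one hour.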

### Rate Limiting

Control application scaling and protect against abuse with flexible rate limiting options. Set limits based on requests per time window with sliding or fixed window techniques.

**Key benefits:**

* Prevent API quota exhaustion

* Control costs and usage patterns

* Configurable per gateway or per request

* Multiple rate limiting techniques available

[Use Rate Limiting](https://developers.cloudflare.com/ai-gateway/features/rate-limiting/)
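Fixed and sliding windows can treat the same traffic differently near a window boundary. The illustrative functions below are hypothetical and do not describe AI Gateway's internal algorithm. They count earlier requests by timestamp in milliseconds: a fixed window resets at clock-aligned boundaries, while a sliding window always looks back one full window from the current time.

```typescript
// Illustration only: how fixed and sliding windows count the same requests.
// `timestamps` are times (ms) of earlier requests; returns whether one more is allowed.
function fixedWindowAllows(timestamps: number[], now: number, limit: number, windowMs: number): boolean {
  // Windows are aligned to multiples of windowMs, so the count resets at each boundary.
  const windowStart = Math.floor(now / windowMs) * windowMs;
  const count = timestamps.filter((t) => t >= windowStart && t <= now).length;
  return count < limit;
}

function slidingWindowAllows(timestamps: number[], now: number, limit: number, windowMs: number): boolean {
  // The window always covers the windowMs immediately before `now`.
  const count = timestamps.filter((t) => t > now - windowMs && t <= now).length;
  return count < limit;
}
```

With a limit of 2 requests per 60 seconds and earlier requests at 50 s and 59 s, a request at 61 s passes the fixed window (a new window began at 60 s) but is rejected by the sliding window, which still sees both earlier requests. Sliding windows avoid bursts at boundaries at the cost of tracking more history.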

### Dynamic Routing

Create sophisticated request routing flows without code changes. Route requests based on user segments, geography, content analysis, or A/B testing requirements through a visual interface.

**Key benefits:**

* Visual flow-based configuration

* User-based and geographic routing

* A/B testing and fractional traffic splitting

* Context-aware routing based on request content

* Dynamic rate limiting with automatic fallbacks

[Use Dynamic Routing](https://developers.cloudflare.com/ai-gateway/features/dynamic-routing/)
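Dynamic Routing configures traffic splits without application code changes. To illustrate what a fractional split does, the sketch below hashes a stable user ID into a bucket from 0 to 99 and maps bucket ranges to variants, so each user consistently lands on the same variant. The FNV-1a hash, `pickVariant`, and the variant names are assumptions for illustration; this is not how Dynamic Routing is implemented.

```typescript
// Illustration of a sticky percentage split (for example, a 90/10 A/B test).
type Variant = { name: string; percent: number };

// 32-bit FNV-1a hash: cheap and deterministic for a given string.
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

function pickVariant(userId: string, variants: Variant[]): string {
  const total = variants.reduce((sum, v) => sum + v.percent, 0);
  if (total !== 100) throw new Error("variant percentages must sum to 100");
  const bucket = fnv1a32(userId) % 100; // 0..99, stable per user
  let cumulative = 0;
  for (const v of variants) {
    cumulative += v.percent;
    if (bucket < cumulative) return v.name;
  }
  return variants[variants.length - 1].name; // not reached when percentages sum to 100
}
```

Hashing a stable identifier, rather than choosing randomly per request, keeps each user's experience consistent for the duration of a test.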

### Security & Safety

### Guardrails

Deploy AI applications safely with real-time content moderation. Automatically detect and block harmful content in both user prompts and model responses across all providers.

**Key benefits:**

* Consistent moderation across all AI providers

* Real-time prompt and response evaluation

* Configurable content categories and actions

* Compliance and audit capabilities

* Enhanced user safety and trust

[Use Guardrails](https://developers.cloudflare.com/ai-gateway/features/guardrails/)

### Data Loss Prevention (DLP)

Protect your organization from inadvertent exposure of sensitive data through AI interactions. Scan prompts and responses for PII, financial data, and other sensitive information.

**Key benefits:**

* Real-time scanning of AI prompts and responses

* Detection of PII, financial, healthcare, and custom data patterns

* Configurable actions: flag or block sensitive content

* Integration with Cloudflare's enterprise DLP solution

* Compliance support for GDPR, HIPAA, and PCI DSS

[Use Data Loss Prevention (DLP)](https://developers.cloudflare.com/ai-gateway/features/dlp/)

### Authentication

Secure your AI Gateway with token-based authentication. Control access to your gateways and protect against unauthorized usage.

**Key benefits:**

* Token-based access control

* Configurable per gateway

* Integration with Cloudflare's security infrastructure

* Audit trail for access attempts

[Use Authentication](https://developers.cloudflare.com/ai-gateway/configuration/authentication/)

### Bring Your Own Keys (BYOK)

Securely store and manage AI provider API keys in Cloudflare's encrypted infrastructure. Remove hardcoded keys from your applications while maintaining full control.

**Key benefits:**

* Encrypted key storage at rest and in transit

* Centralized key management across providers

* Easy key rotation without code changes

* Support for 20+ AI providers

* Enhanced security and compliance

[Use Bring Your Own Keys (BYOK)](https://developers.cloudflare.com/ai-gateway/configuration/bring-your-own-keys/)

### Observability & Analytics

### Analytics

Gain deep insights into your AI application usage with comprehensive analytics. Track requests, tokens, costs, errors, and performance across all providers.

**Key benefits:**

* Real-time usage metrics and trends

* Cost tracking and estimation across providers

* Error monitoring and troubleshooting

* Cache hit rates and performance insights

* GraphQL API for custom dashboards

[Use Analytics](https://developers.cloudflare.com/ai-gateway/observability/analytics/)

### Logging

Capture detailed logs of all AI requests and responses for debugging, compliance, and analysis. Configure log retention and export options.

**Key benefits:**

* Complete request/response logging

* Configurable log retention policies

* Export capabilities via Logpush

* Custom metadata support

* Compliance and audit support

[Use Logging](https://developers.cloudflare.com/ai-gateway/observability/logging/)
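To correlate your own application logs with AI Gateway logs, you can record the `cf-aig-log-id` response header, which the changelog states is returned on all requests. The sketch below also assumes a per-request `cf-aig-collect-log: false` header for opting a single request out of logging; verify that header on the Logging page. The helper names are hypothetical.

```typescript
// Sketch: read the gateway's log ID from a response and optionally opt out of logging.
interface HeaderReader {
  get(name: string): string | null; // satisfied by the Fetch API's Headers object
}

// Returns the AI Gateway log ID for a response, if present.
function gatewayLogId(headers: HeaderReader): string | null {
  return headers.get("cf-aig-log-id");
}

// Assumed header: only sent when opting out; otherwise the gateway's log setting applies.
function loggingHeaders(collectLog: boolean): Record<string, string> {
  return collectLog ? {} : { "cf-aig-collect-log": "false" };
}
```

For example, after `const res = await fetch(url, init)`, store `gatewayLogId(res.headers)` alongside your own request record.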

### Custom Metadata

Enrich your logs and analytics with custom metadata. Tag requests with user IDs, team information, or any custom data for enhanced filtering and analysis.

**Key benefits:**

* Enhanced request tracking and filtering

* User and team-based analytics

* Custom business logic integration

* Improved debugging and troubleshooting

[Use Custom Metadata](https://developers.cloudflare.com/ai-gateway/observability/custom-metadata/)
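A minimal sketch of tagging a request with custom metadata. It assumes a `cf-aig-metadata` header carrying a flat JSON object whose values are strings, numbers, or booleans, and an assumed limit of five entries per request; confirm both on the Custom Metadata page. The `metadataHeader` helper is hypothetical.

```typescript
// Sketch: attach custom metadata to a gateway request.
type MetadataValue = string | number | boolean;

const MAX_METADATA_ENTRIES = 5; // assumed per-request limit

function metadataHeader(metadata: Record<string, MetadataValue>): Record<string, string> {
  const count = Object.keys(metadata).length;
  if (count > MAX_METADATA_ENTRIES) {
    throw new RangeError(`at most ${MAX_METADATA_ENTRIES} metadata entries are allowed`);
  }
  // A flat JSON object; the entries then become filterable in logs and analytics.
  return { "cf-aig-metadata": JSON.stringify(metadata) };
}
```

For example, `metadataHeader({ team: "AI", user: 12345, test: true })` produces the header value `{"team":"AI","user":12345,"test":true}`.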

### Advanced Configuration

### Custom Costs

Override default pricing with your negotiated rates or custom cost models. Apply custom costs at the request level for accurate cost tracking.

**Key benefits:**

* Accurate cost tracking with negotiated rates

* Per-request cost customization

* Better budget planning and forecasting

* Support for enterprise pricing agreements

[Use Custom Costs](https://developers.cloudflare.com/ai-gateway/configuration/custom-costs/)
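A minimal sketch of a request-level cost override and the arithmetic behind it, assuming a `cf-aig-custom-cost` header whose JSON body has `per_token_in` and `per_token_out` fields (price per token); verify these on the Custom costs page. The estimate assumes cost is linear in token counts, and both helpers are hypothetical.

```typescript
// Sketch: override per-token pricing for one request and estimate the resulting cost.
interface CustomCost {
  per_token_in: number;  // price per input (prompt) token
  per_token_out: number; // price per output (completion) token
}

function customCostHeader(cost: CustomCost): Record<string, string> {
  return { "cf-aig-custom-cost": JSON.stringify(cost) };
}

// cost = input tokens * input price + output tokens * output price
function estimateCost(cost: CustomCost, inputTokens: number, outputTokens: number): number {
  return inputTokens * cost.per_token_in + outputTokens * cost.per_token_out;
}
```

With `per_token_in: 0.000001` and `per_token_out: 0.000002`, a request with 1,000 input tokens and 500 output tokens comes to about 0.001 + 0.001 = 0.002.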

## Feature Comparison by Use Case

| Use Case | Recommended Features |
| - | - |
| **Cost Optimization** | Caching, Rate Limiting, Custom Costs |
| **High Availability** | Fallbacks using Dynamic Routing |
| **Security & Compliance** | Guardrails, DLP, Authentication, BYOK, Logging |
| **Performance Monitoring** | Analytics, Logging, Custom Metadata |
| **A/B Testing** | Dynamic Routing, Custom Metadata, Analytics |

## Getting Started with Features

1. **Start with the basics**: Enable [Caching](https://developers.cloudflare.com/ai-gateway/features/caching/) and [Analytics](https://developers.cloudflare.com/ai-gateway/observability/analytics/) for immediate benefits

2. **Add reliability**: Configure Fallbacks and Rate Limiting using [Dynamic routing](https://developers.cloudflare.com/ai-gateway/features/dynamic-routing/)

3. **Enhance security**: Implement [Guardrails](https://developers.cloudflare.com/ai-gateway/features/guardrails/), [DLP](https://developers.cloudflare.com/ai-gateway/features/dlp/), and [Authentication](https://developers.cloudflare.com/ai-gateway/configuration/authentication/)

***

*All features work seamlessly together and across all 20+ supported AI providers. Get started with [AI Gateway](https://developers.cloudflare.com/ai-gateway/get-started/) to begin using these features in your applications.*

---

title: Getting started · Cloudflare AI Gateway docs

description: In this guide, you will learn how to create and use your first AI Gateway.

lastUpdated: 2026-01-21T09:55:14.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/get-started/

md: https://developers.cloudflare.com/ai-gateway/get-started/index.md

---

In this guide, you will learn how to create and use your first AI Gateway.

* Dashboard

  [Go to **AI Gateway**](https://dash.cloudflare.com/?to=/:account/ai/ai-gateway)

  1. Log in to the [Cloudflare dashboard](https://dash.cloudflare.com/) and select your account.

  2. Go to **AI** > **AI Gateway**.

  3. Select **Create Gateway**.

  4. Enter your **Gateway name**. The gateway name has a 64-character limit.

  5. Select **Create**.

* API

  To set up an AI Gateway using the API:

  1. [Create an API token](https://developers.cloudflare.com/fundamentals/api/get-started/create-token/) with the following permissions:

     * `AI Gateway - Read`

     * `AI Gateway - Edit`

  2. Get your [Account ID](https://developers.cloudflare.com/fundamentals/account/find-account-and-zone-ids/).

  3. Using that API token and Account ID, send a [`POST` request](https://developers.cloudflare.com/api/resources/ai_gateway/methods/create/) to the Cloudflare API.
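The `POST` request in step 3 can be sketched as follows. The endpoint path and body field names are assumptions based on the Cloudflare API reference linked in that step; confirm them there before use. The sketch only builds the request; `createGatewayRequest` and `CreateGatewayBody` are hypothetical names.

```typescript
// Sketch only: builds (but does not send) a "create gateway" API request.
// Endpoint path and body fields are assumptions to verify in the API reference.
interface CreateGatewayBody {
  id: string;                         // gateway name
  cache_ttl: number;                  // cache TTL in seconds
  cache_invalidate_on_update: boolean;
  collect_logs: boolean;
  rate_limiting_interval: number;     // window length in seconds
  rate_limiting_limit: number;        // requests allowed per window
  rate_limiting_technique: "fixed" | "sliding";
}

function createGatewayRequest(accountId: string, apiToken: string, body: CreateGatewayBody) {
  // Assumed to share the dashboard's 64-character gateway name limit.
  if (body.id.length === 0 || body.id.length > 64) {
    throw new RangeError("gateway name must be 1-64 characters");
  }
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai-gateway/gateways`,
    method: "POST",
    headers: {
      // API token with the AI Gateway - Read and AI Gateway - Edit permissions
      Authorization: `Bearer ${apiToken}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify(body),
  };
}
```

To send it, pass the result to `fetch(req.url, req)`.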

### Authenticated gateway 🔒

When you enable authentication on a gateway, each request is required to include a valid Cloudflare API token, adding an extra layer of security. We recommend using an authenticated gateway to prevent unauthorized access. [Learn more](https://developers.cloudflare.com/ai-gateway/configuration/authentication/).

## Provider Authentication

Authenticate with your upstream AI provider using one of the following options:

* **Unified Billing:** Use the AI Gateway billing to pay for and authenticate your inference requests. Refer to [Unified Billing](https://developers.cloudflare.com/ai-gateway/features/unified-billing/).

* **BYOK (Store Keys):** Store your own provider API Keys with Cloudflare, and AI Gateway will include them at runtime. Refer to [BYOK](https://developers.cloudflare.com/ai-gateway/configuration/bring-your-own-keys/).

* **Request headers:** Include your provider API Key in the request headers as you normally would (for example, `Authorization: Bearer `).
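The sketch below shows how the three provider authentication options combine with an authenticated gateway. It assumes the gateway token travels in a `cf-aig-authorization: Bearer <token>` header, as described on the Authentication page; verify before use. The `Authorization: Bearer` form applies to OpenAI-style providers only, and `gatewayAuthHeaders` is a hypothetical helper.

```typescript
// Sketch: assemble authentication headers for a request through AI Gateway.
type ProviderAuth =
  | { mode: "unified-billing" }
  | { mode: "byok" }
  | { mode: "request-header"; providerApiKey: string };

function gatewayAuthHeaders(auth: ProviderAuth, cfGatewayToken?: string): Record<string, string> {
  const headers: Record<string, string> = {};
  if (cfGatewayToken) {
    // Required only when the gateway has authentication enabled (assumed header name).
    headers["cf-aig-authorization"] = `Bearer ${cfGatewayToken}`;
  }
  if (auth.mode === "request-header") {
    // OpenAI-style providers; others (for example, Anthropic's x-api-key) use different headers.
    headers["Authorization"] = `Bearer ${auth.providerApiKey}`;
  }
  // unified-billing and byok: the gateway handles provider authentication itself.
  return headers;
}
```

With a stored key (BYOK) on an authenticated gateway, only the `cf-aig-authorization` header is sent and no provider key leaves your application.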

## Integration Options

### Unified API Endpoint

The easiest way to get started with AI Gateway, and the recommended one, is the OpenAI-compatible `/chat/completions` endpoint. It lets you use existing OpenAI SDKs and tools with minimal code changes while gaining access to multiple AI providers.

`https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_name}/compat/chat/completions`

**Key benefits:**

* Drop-in replacement for the OpenAI API that works with existing OpenAI SDKs and other OpenAI-compatible clients

* Switch between providers by changing the `model` parameter

* Dynamic Routing: define complex routing scenarios that require conditional logic, run A/B tests, set rate and budget limits, and more
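A minimal sketch of a Unified API request, built with plain `fetch` arguments rather than an SDK. It assumes the `model` value takes the form `{provider}/{model}` (for example `openai/gpt-5-mini`), which is how switching providers comes down to changing one string; the function name is hypothetical. With a stored key (BYOK), pass only `cf-aig-authorization` in `extraHeaders`.

```javascript
// Sketch: build a chat request for the OpenAI-compatible Unified API endpoint.
// Assumption: `model` uses the "{provider}/{model}" form.
function buildCompatChatRequest(accountId, gatewayId, model, userMessage, extraHeaders = {}) {
  return {
    url: `https://gateway.ai.cloudflare.com/v1/${accountId}/${gatewayId}/compat/chat/completions`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json", ...extraHeaders },
      body: JSON.stringify({
        model, // for example "openai/gpt-5-mini"
        messages: [{ role: "user", content: userMessage }],
      }),
    },
  };
}

// Usage (BYOK on an authenticated gateway):
// const { url, init } = buildCompatChatRequest(ACCOUNT_ID, GATEWAY_ID, "openai/gpt-5-mini",
//   "What is Cloudflare?", { "cf-aig-authorization": `Bearer ${CF_AIG_TOKEN}` });
// const data = await (await fetch(url, init)).json();
```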


Refer to [Unified API](https://developers.cloudflare.com/ai-gateway/usage/chat-completion/) to learn more about OpenAI compatibility.

### Provider-specific endpoints

For direct integration with specific AI providers, use dedicated endpoints that maintain the original provider's API schema while adding AI Gateway features.

```txt
https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/{provider}
```
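The URL pattern above can be composed with a small helper. This is a sketch: the `path` argument stands for whatever the provider's own API expects after its base URL (`chat/completions` is the OpenAI example used elsewhere in these docs), and the exact `{provider}` value for each provider is given on the linked provider pages.

```javascript
// Sketch: compose a provider-specific AI Gateway URL.
function providerEndpoint(accountId, gatewayId, provider, path = "") {
  const base = `https://gateway.ai.cloudflare.com/v1/${accountId}/${gatewayId}/${provider}`;
  const trimmed = path.replace(/^\/+/, ""); // drop leading slashes to avoid "//"
  return trimmed ? `${base}/${trimmed}` : base;
}

// providerEndpoint("ACCOUNT_ID", "my-gateway", "openai", "chat/completions")
// builds .../v1/ACCOUNT_ID/my-gateway/openai/chat/completions
```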

**Available providers:**

* [OpenAI](https://developers.cloudflare.com/ai-gateway/usage/providers/openai/) - GPT models and embeddings

* [Anthropic](https://developers.cloudflare.com/ai-gateway/usage/providers/anthropic/) - Claude models

* [Google AI Studio](https://developers.cloudflare.com/ai-gateway/usage/providers/google-ai-studio/) - Gemini models

* [Workers AI](https://developers.cloudflare.com/ai-gateway/usage/providers/workersai/) - Cloudflare's inference platform

* [AWS Bedrock](https://developers.cloudflare.com/ai-gateway/usage/providers/bedrock/) - Amazon's managed AI service

* [Azure OpenAI](https://developers.cloudflare.com/ai-gateway/usage/providers/azureopenai/) - Microsoft's OpenAI service

* [and more...](https://developers.cloudflare.com/ai-gateway/usage/providers/)

## Next steps

* Learn more about [caching](https://developers.cloudflare.com/ai-gateway/features/caching/) for faster requests and cost savings and [rate limiting](https://developers.cloudflare.com/ai-gateway/features/rate-limiting/) to control how your application scales.

* Explore how to specify model or provider [fallbacks, ratelimits, A/B tests](https://developers.cloudflare.com/ai-gateway/features/dynamic-routing/) for resiliency.

* Learn how to use low-cost, open source models on [Workers AI](https://developers.cloudflare.com/ai-gateway/usage/providers/workersai/) - our AI inference service.

---

title: Header Glossary · Cloudflare AI Gateway docs

description: AI Gateway supports a variety of headers to help you configure,

customize, and manage your API requests. This page provides a complete list of

all supported headers, along with a short description

lastUpdated: 2025-08-19T11:42:14.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/glossary/

md: https://developers.cloudflare.com/ai-gateway/glossary/index.md

---

AI Gateway supports a variety of headers to help you configure, customize, and manage your API requests. This page provides a complete list of all supported headers, along with a short description of each.

| Term | Definition |
| - | - |
| cf-aig-backoff | Header to customize the backoff type for [request retries](https://developers.cloudflare.com/ai-gateway/configuration/request-handling/#request-retries) of a request. |
| cf-aig-cache-key | The [cf-aig-cache-key](https://developers.cloudflare.com/ai-gateway/features/caching/#custom-cache-key-cf-aig-cache-key) header lets you override the default cache key in order to precisely set the cacheability setting for any resource. |
| cf-aig-cache-status | [Status indicator for caching](https://developers.cloudflare.com/ai-gateway/features/caching/#default-configuration), showing if a request was served from cache. |
| cf-aig-cache-ttl | Specifies the [cache time-to-live for responses](https://developers.cloudflare.com/ai-gateway/features/caching/#cache-ttl-cf-aig-cache-ttl). |
| cf-aig-collect-log | The [cf-aig-collect-log](https://developers.cloudflare.com/ai-gateway/observability/logging/#collect-logs-cf-aig-collect-log) header allows you to bypass the default log setting for the gateway. |
| cf-aig-custom-cost | Allows the [customization of request cost](https://developers.cloudflare.com/ai-gateway/configuration/custom-costs/#custom-cost) to reflect user-defined parameters. |
| cf-aig-event-id | [cf-aig-event-id](https://developers.cloudflare.com/ai-gateway/evaluations/add-human-feedback-api/#3-retrieve-the-cf-aig-log-id) is a unique identifier for an event, used to trace specific events through the system. |
| cf-aig-log-id | The [cf-aig-log-id](https://developers.cloudflare.com/ai-gateway/evaluations/add-human-feedback-api/#3-retrieve-the-cf-aig-log-id) is a unique identifier for the specific log entry to which you want to add feedback. |
| cf-aig-max-attempts | Header to customize the maximum number of attempts for [request retries](https://developers.cloudflare.com/ai-gateway/configuration/request-handling/#request-retries) of a request. |
| cf-aig-metadata | [Custom metadata](https://developers.cloudflare.com/ai-gateway/configuration/custom-metadata/) allows you to tag requests with user IDs or other identifiers, enabling better tracking and analysis of your requests. |
| cf-aig-request-timeout | Header to trigger a fallback provider based on a [predetermined response time](https://developers.cloudflare.com/ai-gateway/configuration/fallbacks/#request-timeouts) (measured in milliseconds). |
| cf-aig-retry-delay | Header to customize the retry delay for [request retries](https://developers.cloudflare.com/ai-gateway/configuration/request-handling/#request-retries) of a request. |
| cf-aig-skip-cache | Header to [bypass caching for a specific request](https://developers.cloudflare.com/ai-gateway/features/caching/#skip-cache-cf-aig-skip-cache). |
| cf-aig-step | [cf-aig-step](https://developers.cloudflare.com/ai-gateway/configuration/fallbacks/#response-headercf-aig-step) identifies the processing step in the AI Gateway flow for better tracking and debugging. |
| cf-cache-ttl | Deprecated: This header is replaced by `cf-aig-cache-ttl`. It specifies cache time-to-live. |
| cf-skip-cache | Deprecated: This header is replaced by `cf-aig-skip-cache`. It bypasses caching for a specific request. |
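Several of the request headers above can be combined on one call. The sketch below builds such a header set, using the current `cf-aig-*` names rather than the deprecated ones. Its units and value formats are assumptions to check against each linked page: `cf-aig-cache-ttl` in seconds, `cf-aig-request-timeout` in milliseconds, `cf-aig-skip-cache` as the string `true`, and `cf-aig-metadata` as a JSON object.

```javascript
// Sketch: collect optional per-request AI Gateway headers.
// Only the options that are set produce a header.
function buildAigHeaders({ cacheTtlSeconds, skipCache, metadata, maxAttempts, requestTimeoutMs } = {}) {
  const headers = {};
  if (cacheTtlSeconds !== undefined) headers["cf-aig-cache-ttl"] = String(cacheTtlSeconds);
  if (skipCache) headers["cf-aig-skip-cache"] = "true";
  if (metadata) headers["cf-aig-metadata"] = JSON.stringify(metadata); // for example a user ID
  if (maxAttempts !== undefined) headers["cf-aig-max-attempts"] = String(maxAttempts);
  if (requestTimeoutMs !== undefined) headers["cf-aig-request-timeout"] = String(requestTimeoutMs);
  return headers;
}

// buildAigHeaders({ cacheTtlSeconds: 3600, metadata: { user: "u1" } })
```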

## Configuration hierarchy

Settings in AI Gateway can be configured at three levels: **Provider**, **Request**, and **Gateway**. Since the same settings can be configured in multiple locations, the following hierarchy determines which value is applied:

1. **Provider-level headers**: Relevant only when using the [Universal Endpoint](https://developers.cloudflare.com/ai-gateway/usage/universal/), these headers take precedence over all other configurations.

2. **Request-level headers**: Apply if no provider-level headers are set.

3. **Gateway-level settings**: Act as the default if no headers are set at the provider or request levels.

This hierarchy ensures consistent behavior, prioritizing the most specific configurations. Use provider-level and request-level headers for more fine-tuned control, and gateway settings for general defaults.
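The precedence rule can be stated as a three-way fallback. This is an illustrative model of the hierarchy, not AI Gateway's internal code; `undefined` stands for "not set at this level".

```javascript
// Sketch: provider-level header wins, then request-level header, then gateway setting.
function resolveSetting(providerValue, requestValue, gatewayValue) {
  if (providerValue !== undefined) return providerValue;
  if (requestValue !== undefined) return requestValue;
  return gatewayValue; // gateway-level default
}

// A gateway cache TTL of 300 overridden by a request-level header of 60:
// resolveSetting(undefined, 60, 300) evaluates to 60
```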

---

title: Integrations · Cloudflare AI Gateway docs

lastUpdated: 2025-05-09T15:42:57.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/ai-gateway/integrations/

md: https://developers.cloudflare.com/ai-gateway/integrations/index.md

---

---

title: MCP server · Cloudflare AI Gateway docs

lastUpdated: 2025-10-09T17:32:08.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/mcp-server/

md: https://developers.cloudflare.com/ai-gateway/mcp-server/index.md

---

---

title: Observability · Cloudflare AI Gateway docs

description: Observability is the practice of instrumenting systems to collect

metrics, and logs enabling better monitoring, troubleshooting, and

optimization of applications.

lastUpdated: 2025-05-09T15:42:57.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/ai-gateway/observability/

md: https://developers.cloudflare.com/ai-gateway/observability/index.md

---

Observability is the practice of instrumenting systems to collect metrics and logs, enabling better monitoring, troubleshooting, and optimization of applications.

* [Analytics](https://developers.cloudflare.com/ai-gateway/observability/analytics/)

* [Costs](https://developers.cloudflare.com/ai-gateway/observability/costs/)

* [Custom metadata](https://developers.cloudflare.com/ai-gateway/observability/custom-metadata/)

* [OpenTelemetry](https://developers.cloudflare.com/ai-gateway/observability/otel-integration/)

* [Logging](https://developers.cloudflare.com/ai-gateway/observability/logging/)

---

title: Platform · Cloudflare AI Gateway docs

lastUpdated: 2025-05-09T15:42:57.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/reference/

md: https://developers.cloudflare.com/ai-gateway/reference/index.md

---

* [Audit logs](https://developers.cloudflare.com/ai-gateway/reference/audit-logs/)

* [Limits](https://developers.cloudflare.com/ai-gateway/reference/limits/)

* [Pricing](https://developers.cloudflare.com/ai-gateway/reference/pricing/)

---

title: Tutorials · Cloudflare AI Gateway docs

description: View tutorials to help you get started with AI Gateway.

lastUpdated: 2025-05-09T15:42:57.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/tutorials/

md: https://developers.cloudflare.com/ai-gateway/tutorials/index.md

---

View tutorials to help you get started with AI Gateway.

## Docs

| Name | Last Updated | Difficulty |
| - | - | - |
| [AI Gateway Binding Methods](https://developers.cloudflare.com/ai-gateway/integrations/worker-binding-methods/) | 11 months ago | |
| [Workers AI](https://developers.cloudflare.com/ai-gateway/integrations/aig-workers-ai-binding/) | over 1 year ago | |
| [Create your first AI Gateway using Workers AI](https://developers.cloudflare.com/ai-gateway/tutorials/create-first-aig-workers/) | over 1 year ago | Beginner |
| [Deploy a Worker that connects to OpenAI via AI Gateway](https://developers.cloudflare.com/ai-gateway/tutorials/deploy-aig-worker/) | over 2 years ago | Beginner |

## Videos

Cloudflare Workflows | Introduction (Part 1 of 3)

In this video, we introduce Cloudflare Workflows, the newest developer platform primitive at Cloudflare.

Cloudflare Workflows | Batching and Monitoring Your Durable Execution (Part 2 of 3)

Workflows exposes metrics such as execution, error rates, steps, and total duration!

Welcome to the Cloudflare Developer Channel

Welcome to the Cloudflare Developers YouTube channel. We've got tutorials and working demos and everything you need to level up your projects. Whether you're working on your next big thing or just dorking around with some side projects, we've got you covered! So why don't you come hang out, subscribe to our developer channel and together we'll build something awesome. You're gonna love it.

Optimize your AI App & fine-tune models (AI Gateway, R2)

In this workshop, Kristian Freeman, Cloudflare Developer Advocate, shows how to optimize your existing AI applications with Cloudflare AI Gateway, and how to fine-tune OpenAI models using R2.

How to use Cloudflare AI models and inference in Python with Jupyter Notebooks

Cloudflare Workers AI provides a ton of AI models and inference capabilities. In this video, we will explore how to make use of Cloudflare’s AI model catalog using a Python Jupyter Notebook.

---

title: Using AI Gateway · Cloudflare AI Gateway docs

lastUpdated: 2025-08-19T11:42:14.000Z

chatbotDeprioritize: true

source_url:

html: https://developers.cloudflare.com/ai-gateway/usage/

md: https://developers.cloudflare.com/ai-gateway/usage/index.md

---


---

title: Authenticated Gateway · Cloudflare AI Gateway docs

description: Add security by requiring a valid authorization token for each request.

lastUpdated: 2025-10-07T18:26:33.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/configuration/authentication/

md: https://developers.cloudflare.com/ai-gateway/configuration/authentication/index.md

---

Using an Authenticated Gateway in AI Gateway adds security by requiring a valid authorization token for each request. This feature is especially useful when storing logs, as it prevents unauthorized access and protects against invalid requests that can inflate log storage usage and make it harder to find the data you need. With Authenticated Gateway enabled, only requests with the correct token are processed.

Note

We recommend enabling Authenticated Gateway when opting to store logs with AI Gateway.

If Authenticated Gateway is enabled and a request does not include the required `cf-aig-authorization` header, the request fails. This ensures that only verified requests pass through the gateway. If you do not want to send the `cf-aig-authorization` header, disable Authenticated Gateway.

## Setting up Authenticated Gateway using the Dashboard

1. Go to the Settings for the specific gateway you want to enable authentication for.

2. Select **Create authentication token** to generate a custom token with the required `Run` permissions. Be sure to securely save this token, as it will not be displayed again.

3. Include the `cf-aig-authorization` header with this token in each request to this gateway.

4. Return to the settings page and toggle on Authenticated Gateway.

## Example requests with OpenAI

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai/chat/completions \
  --header 'cf-aig-authorization: Bearer {CF_AIG_TOKEN}' \
  --header 'Authorization: Bearer {OPENAI_TOKEN}' \
  --header 'Content-Type: application/json' \
  --data '{"model": "gpt-5-mini", "messages": [{"role": "user", "content": "What is Cloudflare?"}]}'
```

Using the OpenAI SDK:

```javascript
import OpenAI from "openai";

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY,
  baseURL: "https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai",
  defaultHeaders: {
    // Gateway token; CF_AIG_TOKEN is an assumed environment variable name.
    "cf-aig-authorization": `Bearer ${process.env.CF_AIG_TOKEN}`,
  },
});
```

## Example requests with the Vercel AI SDK

```javascript
import { createOpenAI } from "@ai-sdk/openai";

const openai = createOpenAI({
  baseURL: "https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai",
  headers: {
    // Gateway token; CF_AIG_TOKEN is an assumed environment variable name.
    "cf-aig-authorization": `Bearer ${process.env.CF_AIG_TOKEN}`,
  },
});
```

## Expected behavior

Note

When an AI Gateway is accessed from a Cloudflare Worker using a **binding**, the `cf-aig-authorization` header does not need to be included manually. Requests made through bindings are **pre-authenticated** within the associated Cloudflare account.

The following table outlines gateway behavior based on the authentication settings and header status:

| Authentication Setting | Header Info | Gateway State | Response |
| - | - | - | - |
| On | Header present | Authenticated gateway | Request succeeds |
| On | No header | Error | Request fails due to missing authorization |
| Off | Header present | Unauthenticated gateway | Request succeeds |
| Off | No header | Unauthenticated gateway | Request succeeds |
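The table, together with the binding note above it, reduces to one rejecting case. The sketch below models those outcomes only; it assumes any token present in the header is valid and does not perform real token verification.

```javascript
// Sketch: expected gateway response for each row of the table.
function expectedOutcome({ authenticationEnabled, hasAuthHeader, viaBinding = false }) {
  // Requests made through a Worker binding are pre-authenticated.
  if (viaBinding) return "succeeds";
  // Authentication on and no cf-aig-authorization header: rejected.
  if (authenticationEnabled && !hasAuthHeader) return "fails: missing authorization";
  // Every other row of the table succeeds.
  return "succeeds";
}
```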

---

title: BYOK (Store Keys) · Cloudflare AI Gateway docs

description: Bring your own keys (BYOK) is a feature in Cloudflare AI Gateway

that allows you to securely store your AI provider API keys directly in the

Cloudflare dashboard. Instead of including API keys in every request to your

AI models, you can configure them once in the dashboard, and reference them in

your gateway configuration.

lastUpdated: 2026-01-14T14:49:24.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/configuration/bring-your-own-keys/

md: https://developers.cloudflare.com/ai-gateway/configuration/bring-your-own-keys/index.md

---

## Introduction

Bring your own keys (BYOK) is a feature in Cloudflare AI Gateway that allows you to securely store your AI provider API keys directly in the Cloudflare dashboard. Instead of including API keys in every request to your AI models, you can configure them once in the dashboard, and reference them in your gateway configuration.

Keys are stored securely in [Secrets Store](https://developers.cloudflare.com/secrets-store/), which allows for:

* Secure storage and limited exposure

* Easier key rotation

* Rate limits, budget limits, and other restrictions with [Dynamic Routes](https://developers.cloudflare.com/ai-gateway/features/dynamic-routing/)

## Setting up BYOK

### Prerequisites

* Ensure your gateway is [authenticated](https://developers.cloudflare.com/ai-gateway/configuration/authentication/).

* Ensure you have appropriate [permissions](https://developers.cloudflare.com/secrets-store/access-control/) to create and deploy secrets on Secrets Store.

### Configure API keys

1. Log in to the [Cloudflare dashboard](https://dash.cloudflare.com/) and select your account.

2. Go to **AI** > **AI Gateway**.

3. Select your gateway or create a new one.

4. Go to the **Provider Keys** section.

5. Click **Add API Key**.

6. Select your AI provider from the dropdown.

7. Enter your API key and optionally provide a description.

8. Click **Save**.

### Update your applications

Once you've configured your API keys in the dashboard:

1. **Remove API keys from your code**: Delete any hardcoded API keys or environment variables.

2. **Update request headers**: Remove provider authorization headers from your requests. Note that you still need to pass `cf-aig-authorization`.

3. **Test your integration**: Verify that requests work without including API keys.

## Example

With BYOK enabled, your workflow changes from:

1. **Traditional approach**: Include API key in every request header

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai/chat/completions \
  -H 'cf-aig-authorization: Bearer {CF_AIG_TOKEN}' \
  -H "Authorization: Bearer YOUR_OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{"model": "gpt-4", "messages": [...]}'
```

2. **BYOK approach**: Configure key once in dashboard, make requests without exposing keys

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai/chat/completions \
  -H 'cf-aig-authorization: Bearer {CF_AIG_TOKEN}' \
  -H "Content-Type: application/json" \
  -d '{"model": "gpt-4", "messages": [...]}'
```

## Managing API keys

### Viewing configured keys

In the AI Gateway dashboard, you can:

* View all configured API keys by provider

* See when each key was last used

* Check the status of each key (active, expired, invalid)

### Rotating keys

To rotate an API key:

1. Generate a new API key from your AI provider

2. In the Cloudflare dashboard, edit the existing key entry

3. Replace the old key with the new one

4. Save the changes

Your applications will immediately start using the new key without any code changes or downtime.

### Revoking access

To remove an API key:

1. In the AI Gateway dashboard, find the key you want to remove

2. Click the **Delete** button

3. Confirm the deletion

Impact of key deletion

Deleting an API key will immediately stop all requests that depend on it. Make sure to update your applications or configure alternative keys before deletion.

## Multiple keys per provider

AI Gateway supports storing multiple API keys for the same provider. This allows you to:

* Use different keys for different use cases (for example, development vs production)

* Gradually migrate between keys during rotation

### Key aliases

Each API key can be assigned an alias to identify it. When you add a key, you can specify a custom alias, or the system will use `default` as the alias.

When making requests, AI Gateway uses the key with the `default` alias by default. To use a different key, include the `cf-aig-byok-alias` header with the alias of the key you want to use.

### Example: Using a specific key alias

If you have multiple OpenAI keys configured with different aliases (for example, `default`, `production`, and `testing`), you can specify which one to use:

```bash
# Uses the key with alias "default" (no header needed)
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai/chat/completions \
  -H 'cf-aig-authorization: Bearer {CF_AIG_TOKEN}' \
  -H "Content-Type: application/json" \
  -d '{"model": "gpt-4", "messages": [...]}'
```

```bash
# Uses the key with alias "production"
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai/chat/completions \
  -H 'cf-aig-authorization: Bearer {CF_AIG_TOKEN}' \
  -H 'cf-aig-byok-alias: production' \
  -H "Content-Type: application/json" \
  -d '{"model": "gpt-4", "messages": [...]}'
```

```bash
# Uses the key with alias "testing"
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai/chat/completions \
  -H 'cf-aig-authorization: Bearer {CF_AIG_TOKEN}' \
  -H 'cf-aig-byok-alias: testing' \
  -H "Content-Type: application/json" \
  -d '{"model": "gpt-4", "messages": [...]}'
```
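The same alias selection in JavaScript, as a minimal sketch with a hypothetical helper name. It sends no provider `Authorization` header, because AI Gateway adds the stored key, and it omits `cf-aig-byok-alias` for the `default` alias, since that key is used when the header is absent.

```javascript
// Sketch: headers for a BYOK request that can target a specific key alias.
function byokHeaders(gatewayToken, alias = "default") {
  const headers = {
    "cf-aig-authorization": `Bearer ${gatewayToken}`,
    "Content-Type": "application/json",
  };
  // "default" is used when no alias header is sent, so only add it otherwise.
  if (alias !== "default") headers["cf-aig-byok-alias"] = alias;
  return headers;
}

// fetch(url, { method: "POST", headers: byokHeaders(CF_AIG_TOKEN, "production"), body })
```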

---

title: Custom costs · Cloudflare AI Gateway docs

description: Override default or public model costs on a per-request basis.

lastUpdated: 2025-03-05T12:30:57.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/configuration/custom-costs/

md: https://developers.cloudflare.com/ai-gateway/configuration/custom-costs/index.md

---

AI Gateway allows you to set custom costs at the request level. By using this feature, the cost metrics can accurately reflect your unique pricing, overriding the default or public model costs.

Note

Custom costs will only apply to requests that pass tokens in their response. Requests without token information will not have costs calculated.

## Custom cost

To add custom costs to your API requests, use the `cf-aig-custom-cost` header. This header enables you to specify the cost per token for both input (tokens sent) and output (tokens received).

* **per\_token\_in**: The negotiated input token cost (per token).

* **per\_token\_out**: The negotiated output token cost (per token).

There is no limit to the number of decimal places you can include, ensuring precise cost calculations, regardless of how small the values are.

Custom costs will appear in the logs with an underline, making it easy to identify when custom pricing has been applied.

In this example, if you have a negotiated price of $1 per million input tokens and $2 per million output tokens, include the `cf-aig-custom-cost` header as shown below.

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai/chat/completions \
  --header "Authorization: Bearer $TOKEN" \
  --header 'Content-Type: application/json' \
  --header 'cf-aig-custom-cost: {"per_token_in":0.000001,"per_token_out":0.000002}' \
  --data '{
    "model": "gpt-4o-mini",
    "messages": [
      {
        "role": "user",
        "content": "When is Cloudflare’s Birthday Week?"
      }
    ]
  }'
```

Note

If a response is served from cache (cache hit), the cost is always `0`, even if you specified a custom cost. Custom costs only apply when the request reaches the model provider.
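The arithmetic behind the example works as follows: a price of $1 per million input tokens is $1 / 1,000,000 = $0.000001 per token. The sketch below, with hypothetical function names, converts per-million prices into the `cf-aig-custom-cost` header value and estimates a request's cost, reporting `0` on a cache hit as the note above describes. It is an estimate for illustration, not how AI Gateway computes the logged cost.

```javascript
// Sketch: turn per-million-token prices into the per-token header value.
function customCostHeader(pricePerMillionIn, pricePerMillionOut) {
  return JSON.stringify({
    per_token_in: pricePerMillionIn / 1_000_000,
    per_token_out: pricePerMillionOut / 1_000_000,
  });
}

// Sketch: estimated cost of one request; a cache hit costs 0.
function estimateCost(inputTokens, outputTokens, perTokenIn, perTokenOut, cacheHit = false) {
  if (cacheHit) return 0;
  return inputTokens * perTokenIn + outputTokens * perTokenOut;
}

// customCostHeader(1, 2) produces the header value used in the curl example.
```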

---

title: Custom Providers · Cloudflare AI Gateway docs

description: Create and manage custom AI providers for your account.

lastUpdated: 2026-02-17T16:17:11.000Z

chatbotDeprioritize: false

source_url:

html: https://developers.cloudflare.com/ai-gateway/configuration/custom-providers/

md: https://developers.cloudflare.com/ai-gateway/configuration/custom-providers/index.md

---

## Overview

Custom Providers allow you to integrate AI providers that are not natively supported by AI Gateway. This feature enables you to use AI Gateway's observability, caching, rate limiting, and other features with any AI provider that has an HTTPS API endpoint.

## Use cases

* **Internal AI models**: Connect to your organization's self-hosted AI models

* **Regional providers**: Integrate with AI providers specific to your region

* **Specialized models**: Use domain-specific AI services not available through standard providers

* **Custom endpoints**: Route requests to your own AI infrastructure

## Before you begin

### Prerequisites

* An active Cloudflare account with AI Gateway access

* A valid API key from your custom AI provider

* The HTTPS base URL for your provider's API

### Authentication

The API endpoints for creating, reading, updating, or deleting custom providers require authentication. You need to create a Cloudflare API token with the appropriate permissions.

To create an API token:

1. Go to the [Cloudflare dashboard API tokens page](https://dash.cloudflare.com/?to=:account/api-tokens)

2. Click **Create Token**

3. Select **Custom Token** and add the following permissions:

* `AI Gateway - Edit`

4. Click **Continue to summary** and then **Create Token**

5. Copy the token. You will use it in the `Authorization: Bearer $CLOUDFLARE_API_TOKEN` header.

## Create a custom provider

* API

To create a new custom provider using the API:

1. Get your [Account ID](https://developers.cloudflare.com/fundamentals/account/find-account-and-zone-ids/) and Account Tag.

2. Send a `POST` request to create a new custom provider:

```bash
curl -X POST "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "name": "My Custom Provider",
    "slug": "some-provider",
    "base_url": "https://api.myprovider.com",
    "description": "Custom AI provider for internal models",
    "enable": true
  }'
```

**Required fields:**

* `name` (string): Display name for your provider

* `slug` (string): Unique identifier (alphanumeric with hyphens). Must be unique within your account.

* `base_url` (string): HTTPS URL for your provider's API endpoint. Must start with `https://`.

**Optional fields:**

* `description` (string): Description of the provider

* `link` (string): URL to provider documentation

* `enable` (boolean): Whether the provider is active (default: `false`)

* `beta` (boolean): Mark as beta feature (default: `false`)

* `curl_example` (string): Example cURL command for using the provider

* `js_example` (string): Example JavaScript code for using the provider
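Before sending the `POST`, a client can check the required fields locally. This sketch applies the rules listed above. The slug pattern `^[A-Za-z0-9-]+$` is one interpretation of "alphanumeric with hyphens", and slug uniqueness can only be enforced by the API. The helper name is hypothetical, and it builds the request without sending it.

```javascript
// Sketch: validate a custom provider payload and build the create request.
const SLUG_PATTERN = /^[A-Za-z0-9-]+$/; // assumed reading of "alphanumeric with hyphens"

function buildCreateProviderRequest(accountId, apiToken, provider) {
  const { name, slug, base_url } = provider;
  if (!name) throw new Error("name is required");
  if (!slug || !SLUG_PATTERN.test(slug)) throw new Error("slug must be alphanumeric with hyphens");
  if (!base_url || !base_url.startsWith("https://")) throw new Error("base_url must start with https://");
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai-gateway/custom-providers`,
    init: {
      method: "POST",
      headers: { Authorization: `Bearer ${apiToken}`, "Content-Type": "application/json" },
      body: JSON.stringify(provider), // optional fields are passed through unchanged
    },
  };
}
```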

**Response:**

```json

{

"success": true,

"result": {

"id": "550e8400-e29b-41d4-a716-446655440000",

"account_id": "abc123def456",

"account_tag": "my-account",

"name": "My Custom Provider",

"slug": "some-provider",

"base_url": "https://api.myprovider.com",

"description": "Custom AI provider for internal models",

"enable": true,

"beta": false,

"logo": "Base64 encoded SVG logo",

"link": null,

"curl_example": null,

"js_example": null,

"created_at": 1700000000,

"modified_at": 1700000000

}

}

```

Auto-generated logo

A default SVG logo is automatically generated for each custom provider. The logo is returned as a base64-encoded string.

* Dashboard

To create a new custom provider using the dashboard:

1. Log in to the [Cloudflare dashboard](https://dash.cloudflare.com) and select your account.

2. Go to [**Compute & AI** > **AI Gateway** > **Custom Providers**](https://dash.cloudflare.com/?to=/:account/ai/ai-gateway/custom-providers).

3. Select **Add Custom Provider**.

4. Enter the following information:

* **Provider Name**: Display name for your provider

* **Provider Slug**: Unique identifier (alphanumeric with hyphens)

* **Base URL**: HTTPS URL for your provider's API endpoint (e.g., `https://api.myprovider.com/v1`)

5. Select **Save** to create your custom provider.

## List custom providers

* API

Retrieve all custom providers with optional filtering and pagination:

```bash
curl "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN"
```

**Query parameters:**

* `page` (number): Page number (default: `1`)

* `per_page` (number): Items per page (default: `20`, max: `100`)

* `enable` (boolean): Filter by enabled status

* `beta` (boolean): Filter by beta status

* `search` (string): Search in id, name, or slug fields

* `order_by` (string): Sort field and direction (default: `"name ASC"`)
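The query parameters can be assembled with the standard `URL` and `URLSearchParams` APIs, which handle encoding (a space in `name ASC` becomes `+`). In this sketch, `per_page` is clamped to the documented maximum of 100 rather than rejected; that behavior is a design choice of the example. Parameters that are not set are left for the API defaults.

```javascript
// Sketch: build the list URL from the optional query parameters.
function listProvidersUrl(accountId, { page, perPage, enable, beta, search, orderBy } = {}) {
  const url = new URL(`https://api.cloudflare.com/client/v4/accounts/${accountId}/ai-gateway/custom-providers`);
  if (page !== undefined) url.searchParams.set("page", String(page));
  if (perPage !== undefined) url.searchParams.set("per_page", String(Math.min(perPage, 100)));
  if (enable !== undefined) url.searchParams.set("enable", String(enable));
  if (beta !== undefined) url.searchParams.set("beta", String(beta));
  if (search) url.searchParams.set("search", search);
  if (orderBy) url.searchParams.set("order_by", orderBy); // for example "name ASC"
  return url.toString();
}
```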

**Examples:**

List only enabled providers:

```bash
curl "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers?enable=true" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN"
```

Search for specific providers:

```bash
curl "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers?search=custom" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN"
```

**Response:**

```json
{
  "success": true,
  "result": [
    {
      "id": "550e8400-e29b-41d4-a716-446655440000",
      "name": "My Custom Provider",
      "slug": "some-provider",
      "base_url": "https://api.myprovider.com",
      "enable": true,
      "created_at": 1700000000,
      "modified_at": 1700000000
    }
  ],
  "result_info": {
    "page": 1,
    "per_page": 20,
    "total_count": 1,
    "total_pages": 1
  }
}
```
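To collect every custom provider, a client can read `result_info` from the first response and request the remaining pages. The sketch below derives those page numbers from `page` and `total_pages`. Each returned number would then be sent as the `page` query parameter, keeping `per_page` unchanged between calls.

```javascript
// Sketch: page numbers still to fetch after the page described by resultInfo.
function remainingPages(resultInfo) {
  const pages = [];
  for (let p = resultInfo.page + 1; p <= resultInfo.total_pages; p++) {
    pages.push(p);
  }
  return pages;
}

// With the sample response above (page 1 of 1), there is nothing left to fetch.
```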

* Dashboard

To view all your custom providers:

1. Log in to the [Cloudflare dashboard](https://dash.cloudflare.com) and select your account.

2. Go to [**Compute & AI** > **AI Gateway** > **Custom Providers**](https://dash.cloudflare.com/?to=/:account/ai/ai-gateway/custom-providers).

3. You will see a list of all your custom providers with their names, slugs, base URLs, and status.

## Get a specific custom provider

* API

Retrieve details for a specific custom provider by its ID:

```bash
curl "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers/{provider_id}" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN"
```

**Response:**

```json
{
  "success": true,
  "result": {
    "id": "550e8400-e29b-41d4-a716-446655440000",
    "account_id": "abc123def456",
    "account_tag": "my-account",
    "name": "My Custom Provider",
    "slug": "some-provider",
    "base_url": "https://api.myprovider.com",
    "description": "Custom AI provider for internal models",
    "enable": true,
    "beta": false,
    "logo": "Base64 encoded SVG logo",
    "link": "https://docs.myprovider.com",
    "curl_example": "curl -X POST https://api.myprovider.com/v1/chat ...",
    "js_example": "fetch('https://api.myprovider.com/v1/chat', {...})",
    "created_at": 1700000000,
    "modified_at": 1700000000
  }
}
```

## Update a custom provider

* API

Update an existing custom provider. All fields are optional; include only the fields you want to change:

```bash
curl -X PATCH "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers/{provider_id}" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "name": "Updated Provider Name",
    "enable": true,
    "description": "Updated description"
  }'
```

**Updatable fields:**

* `name` (string): Provider display name

* `slug` (string): Provider identifier

* `base_url` (string): API endpoint URL (must be HTTPS)

* `description` (string): Provider description

* `link` (string): Documentation URL

* `enable` (boolean): Active status

* `beta` (boolean): Beta flag

* `curl_example` (string): Example cURL command

* `js_example` (string): Example JavaScript code
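Because every field is optional, a `PATCH` body should carry only the changes. This sketch keeps the updatable fields listed above, drops anything else (such as `id`), and rejects a non-HTTPS `base_url` before the request is sent. The function name is hypothetical.

```javascript
// Sketch: build a PATCH body limited to the documented updatable fields.
const UPDATABLE_FIELDS = [
  "name", "slug", "base_url", "description", "link",
  "enable", "beta", "curl_example", "js_example",
];

function buildProviderPatch(changes) {
  const body = {};
  for (const field of UPDATABLE_FIELDS) {
    if (changes[field] !== undefined) body[field] = changes[field];
  }
  if (body.base_url !== undefined && !body.base_url.startsWith("https://")) {
    throw new Error("base_url must use HTTPS");
  }
  return body;
}

// JSON.stringify(buildProviderPatch({ enable: true })) is the body of the "Enable a provider" example.
```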

**Examples:**

Enable a provider:

```bash
curl -X PATCH "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers/{provider_id}" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{"enable": true}'
```

Update provider URL:

```bash
curl -X PATCH "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers/{provider_id}" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{"base_url": "https://api.newprovider.com"}'
```

Cache invalidation

Updates to custom providers automatically invalidate any cached entries related to that provider.

* Dashboard

To update an existing custom provider:

1. Log in to the [Cloudflare dashboard](https://dash.cloudflare.com) and select your account.

2. Go to [**Compute & AI** > **AI Gateway** > **Custom Providers**](https://dash.cloudflare.com/?to=/:account/ai/ai-gateway/custom-providers).

3. Find the custom provider you want to update and select **Edit**.

4. Update the fields you want to change (name, slug, base URL, etc.).

5. Select **Save** to apply your changes.

## Delete a custom provider

* API

Delete a custom provider:

```bash
curl -X DELETE "https://api.cloudflare.com/client/v4/accounts/$ACCOUNT_ID/ai-gateway/custom-providers/{provider_id}" \
  -H "Authorization: Bearer $CLOUDFLARE_API_TOKEN"
```

**Response:**

```json
{
  "success": true,
  "result": {
    "id": "550e8400-e29b-41d4-a716-446655440000",
    "name": "My Custom Provider",
    "slug": "some-provider"
  }
}
```
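A cleanup script can confirm that the deletion went through by checking `success` before it continues. This is a minimal sketch that assumes the response shape shown above; `deleted_provider_id` is a hypothetical helper name.

```python
import json


def deleted_provider_id(response_body: str) -> str:
    """Return the ID from a delete response, raising if the API did not report success."""
    payload = json.loads(response_body)
    if payload.get("success") is not True:
        # Include the raw payload so the failure can be inspected.
        raise RuntimeError(f"custom provider deletion failed: {payload}")
    return payload["result"]["id"]


response_body = """
{
  "success": true,
  "result": {
    "id": "550e8400-e29b-41d4-a716-446655440000",
    "name": "My Custom Provider",
    "slug": "some-provider"
  }
}
"""
print(deleted_provider_id(response_body))  # 550e8400-e29b-41d4-a716-446655440000
```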

Impact of deletion

Deleting a custom provider immediately stops all requests routed through it, so update your applications to stop using the provider before you delete it. Cache entries related to the provider are also invalidated.

* Dashboard

To delete a custom provider:

1. Log in to the [Cloudflare dashboard](https://dash.cloudflare.com) and select your account.

2. Go to [**Compute & AI** > **AI Gateway** > **Custom Providers**](https://dash.cloudflare.com/?to=/:account/ai/ai-gateway/custom-providers).

3. Find the custom provider you want to delete and select **Delete**.

4. Confirm the deletion when prompted.

Impact of deletion

Deleting a custom provider immediately stops all requests routed through it, so update your applications to stop using the provider before you delete it.

## Using custom providers with AI Gateway

Once you've created a custom provider, you can route requests through AI Gateway using one of two approaches: the **Unified API** or the **provider-specific endpoint**. When referencing your custom provider with either approach, you must prefix the slug with `custom-`.

Custom provider prefix

All custom provider slugs must be prefixed with `custom-` when making requests through AI Gateway. For example, if your provider slug is `some-provider`, you must use `custom-some-provider` in your requests.
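Because the prefix is always required, it can help to build gateway-facing names in one place. In this sketch (`gateway_slug` and `unified_model` are hypothetical helper names), the prefix is added unconditionally: a slug that already begins with `custom-`, such as `custom-ai` in Example 2 on this page, still becomes `custom-custom-ai`.

```python
def gateway_slug(slug: str) -> str:
    """Return the name AI Gateway expects for a custom provider slug."""
    if not slug:
        raise ValueError("slug must not be empty")
    # Always prefix, even if the slug itself already starts with "custom-".
    return f"custom-{slug}"


def unified_model(slug: str, model_name: str) -> str:
    """Build the `model` value for Unified API requests: custom-{slug}/{model-name}."""
    return f"{gateway_slug(slug)}/{model_name}"


print(gateway_slug("some-provider"))                 # custom-some-provider
print(gateway_slug("custom-ai"))                     # custom-custom-ai
print(unified_model("some-provider", "model-name"))  # custom-some-provider/model-name
```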

### How URL routing works

When AI Gateway receives a request for a custom provider, it constructs the upstream URL by combining the provider's configured `base_url` with the path that comes after `custom-{slug}/` in the gateway URL.

**The `base_url` field should contain only the root domain** (or domain with a fixed prefix) of the provider's API. Any API-specific path segments (like `/v1/chat/completions`) go in the request URL, not in `base_url`.

The formula is:

```plaintext

Gateway URL: https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/custom-{slug}/{provider-path}

Upstream URL: {base_url}/{provider-path}

```

Everything after `custom-{slug}/` in your request URL is appended directly to the `base_url` to form the final upstream URL. This means `{provider-path}` can include multiple path segments, query parameters, or any path structure your provider requires.
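The formula can be written as a short Python function, which is useful for checking a configuration before sending traffic. This is a sketch of the documented rule, not AI Gateway's implementation; `upstream_url` is a hypothetical name, and the sketch assumes the provider path and any query string are copied to the upstream URL unchanged.

```python
from urllib.parse import urlsplit

GATEWAY_HOST = "gateway.ai.cloudflare.com"


def upstream_url(gateway_url: str, slug: str, base_url: str) -> str:
    """Map .../custom-{slug}/{provider-path} on the gateway to {base_url}/{provider-path}."""
    parts = urlsplit(gateway_url)
    if parts.netloc != GATEWAY_HOST:
        raise ValueError(f"not an AI Gateway URL: {gateway_url}")
    # Expected path: /v1/{account_id}/{gateway_id}/custom-{slug}/{provider-path}
    # maxsplit=5 keeps the provider path (which may contain "/") in one piece.
    segments = parts.path.split("/", 5)
    if len(segments) < 6 or segments[1] != "v1" or segments[4] != f"custom-{slug}":
        raise ValueError(f"URL does not target custom-{slug}")
    provider_path = segments[5]
    query = f"?{parts.query}" if parts.query else ""
    return f"{base_url.rstrip('/')}/{provider_path}{query}"


print(upstream_url(
    "https://gateway.ai.cloudflare.com/v1/ACCOUNT_ID/GATEWAY_ID/custom-some-provider/v1/chat/completions",
    "some-provider",
    "https://api.myprovider.com",
))  # https://api.myprovider.com/v1/chat/completions
```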

### Choosing between Unified API and provider-specific endpoint

| | Unified API (`/compat`) | Provider-specific endpoint |
| - | - | - |
| **Best for** | Providers with OpenAI-compatible APIs | Providers with any API structure |
| **Request format** | Must follow the OpenAI `/chat/completions` schema | Uses the provider's native request format |
| **Path control** | Fixed to `/compat/chat/completions` | Full control over the upstream path |
| **How to specify the provider** | `model` field: `custom-{slug}/{model-name}` | URL path: `/custom-{slug}/{path}` |

Use the **Unified API** when your custom provider accepts the OpenAI-compatible `/chat/completions` request format. This is the simplest option and works well with OpenAI SDKs.

Use the **provider-specific endpoint** when your custom provider uses a non-standard API path or request format. This gives you full control over both the URL path and the request body sent to the upstream provider.

### Via Unified API

The Unified API sends requests to the provider's chat completions endpoint using the OpenAI-compatible format. Specify the model using the format `custom-{slug}/{model-name}`.

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/compat/chat/completions \
  -H "Authorization: Bearer $PROVIDER_API_KEY" \
  -H "cf-aig-authorization: Bearer $CF_AIG_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "custom-some-provider/model-name",
    "messages": [{"role": "user", "content": "Hello!"}]
  }'
```
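The same Unified API request can be assembled in Python with only the standard library, for example in a script where the OpenAI SDK is not installed. In this sketch, `build_unified_request` is a hypothetical helper, the IDs and keys are placeholders, and the request is built but not sent; pass it to `urllib.request.urlopen()` to send it.

```python
import json
import urllib.request

GATEWAY_ROOT = "https://gateway.ai.cloudflare.com/v1"


def build_unified_request(account_id, gateway_id, slug, model_name, messages,
                          provider_api_key, gateway_token):
    """Hypothetical helper: build (but do not send) a Unified API chat completions request."""
    url = f"{GATEWAY_ROOT}/{account_id}/{gateway_id}/compat/chat/completions"
    body = {
        "model": f"custom-{slug}/{model_name}",  # custom provider prefix + model name
        "messages": messages,
    }
    headers = {
        "Authorization": f"Bearer {provider_api_key}",      # $PROVIDER_API_KEY in the curl example
        "cf-aig-authorization": f"Bearer {gateway_token}",  # $CF_AIG_TOKEN in the curl example
        "Content-Type": "application/json",
    }
    return urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


req = build_unified_request(
    "ACCOUNT_ID", "GATEWAY_ID", "some-provider", "model-name",
    [{"role": "user", "content": "Hello!"}],
    provider_api_key="PROVIDER_API_KEY", gateway_token="CF_AIG_TOKEN",
)
print(req.full_url)  # https://gateway.ai.cloudflare.com/v1/ACCOUNT_ID/GATEWAY_ID/compat/chat/completions
```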

### Via provider-specific endpoint

The provider-specific endpoint gives you full control over the upstream path. Everything after `custom-{slug}/` in the URL is appended to the `base_url`.

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/custom-some-provider/v1/chat/completions \
  -H "Authorization: Bearer $PROVIDER_API_KEY" \
  -H "cf-aig-authorization: Bearer $CF_AIG_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "model-name",
    "messages": [{"role": "user", "content": "Hello!"}]
  }'
```

If `base_url` is `https://api.myprovider.com`, this request is proxied to: `https://api.myprovider.com/v1/chat/completions`
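With the provider-specific endpoint, the provider path moves into the URL and the body stays in the provider's native format. This sketch (`build_provider_request` is a hypothetical name) passes the body through unchanged. Because some examples on this page send `cf-aig-authorization` and others omit it, the sketch treats the gateway token as optional; whether you need it presumably depends on whether your gateway requires authentication.

```python
import json
import urllib.request

GATEWAY_ROOT = "https://gateway.ai.cloudflare.com/v1"


def build_provider_request(account_id, gateway_id, slug, provider_path, body,
                           provider_api_key, gateway_token=None):
    """Hypothetical helper: build (but do not send) a provider-specific endpoint request."""
    # Accept "/v1/chat/completions" or "v1/chat/completions" for the provider path.
    path = provider_path.lstrip("/")
    url = f"{GATEWAY_ROOT}/{account_id}/{gateway_id}/custom-{slug}/{path}"
    headers = {
        "Authorization": f"Bearer {provider_api_key}",
        "Content-Type": "application/json",
    }
    if gateway_token is not None:
        # Optional here because not every example on this page sends it.
        headers["cf-aig-authorization"] = f"Bearer {gateway_token}"
    # The body is sent as-is, in the provider's native format.
    return urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


req = build_provider_request(
    "ACCOUNT_ID", "GATEWAY_ID", "some-provider", "/v1/chat/completions",
    {"model": "model-name", "messages": [{"role": "user", "content": "Hello!"}]},
    provider_api_key="PROVIDER_API_KEY", gateway_token="CF_AIG_TOKEN",
)
print(req.full_url)
# https://gateway.ai.cloudflare.com/v1/ACCOUNT_ID/GATEWAY_ID/custom-some-provider/v1/chat/completions
```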

### Examples

The following examples show how to configure `base_url` and construct request URLs for different types of providers.

#### Example 1: OpenAI-compatible provider (standard `/v1/` path)

Many providers follow the OpenAI convention of hosting their API at `{domain}/v1/chat/completions`.

**Configuration:**

* `slug`: `my-openai-compat`

* `base_url`: `https://api.example-provider.com`

**Provider-specific endpoint:**

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/custom-my-openai-compat/v1/chat/completions \
  -H "Authorization: Bearer $PROVIDER_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "example-model",
    "messages": [{"role": "user", "content": "Hello!"}]
  }'
```

**URL mapping:**

| Component | Value |
| - | - |
| Gateway URL | `https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/custom-my-openai-compat/v1/chat/completions` |
| `base_url` | `https://api.example-provider.com` |
| Provider path | `/v1/chat/completions` |
| Upstream URL | `https://api.example-provider.com/v1/chat/completions` |

Since this provider is OpenAI-compatible, you could also use the Unified API:

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/compat/chat/completions \
  -H "Authorization: Bearer $PROVIDER_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "custom-my-openai-compat/example-model",
    "messages": [{"role": "user", "content": "Hello!"}]
  }'
```

#### Example 2: Provider with a non-standard API path

Some providers use API paths that don't follow the `/v1/` convention. For example, a provider might host its chat endpoint at `https://api.custom-ai.com/api/coding/paas/v4/chat/completions`.

**Configuration:**

* `slug`: `custom-ai`

* `base_url`: `https://api.custom-ai.com`

**Provider-specific endpoint:**

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/custom-custom-ai/api/coding/paas/v4/chat/completions \
  -H "Authorization: Bearer $PROVIDER_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "custom-ai-model",
    "messages": [{"role": "user", "content": "Hello!"}]
  }'
```

**URL mapping:**

| Component | Value |
| - | - |
| Gateway URL | `https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/custom-custom-ai/api/coding/paas/v4/chat/completions` |
| `base_url` | `https://api.custom-ai.com` |
| Provider path | `/api/coding/paas/v4/chat/completions` |
| Upstream URL | `https://api.custom-ai.com/api/coding/paas/v4/chat/completions` |

Note

For providers with non-standard paths, you must use the provider-specific endpoint. The Unified API only supports the `/chat/completions` path and cannot route to custom API paths.

#### Example 3: Self-hosted model with a path prefix

If you host your own model behind a reverse proxy or on a platform that adds a path prefix, include only the fixed prefix portion in `base_url` if all your endpoints share it. Otherwise, keep `base_url` as just the domain.

**Configuration (domain-only `base_url`):**

* `slug`: `internal-llm`

* `base_url`: `https://ml.internal.example.com`

**Provider-specific endpoint:**

```bash
curl https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/custom-internal-llm/serving/models/my-model:predict \
  -H "Authorization: Bearer $INTERNAL_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "instances": [{"prompt": "Summarize the following text:"}]
  }'
```

**URL mapping:**

| Component | Value |
| - | - |
| Gateway URL | `https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/custom-internal-llm/serving/models/my-model:predict` |
| `base_url` | `https://ml.internal.example.com` |
| Provider path | `/serving/models/my-model:predict` |
| Upstream URL | `https://ml.internal.example.com/serving/models/my-model:predict` |
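Because the upstream URL is simply `{base_url}/{provider-path}`, moving a shared prefix into `base_url` changes only the gateway URL, not the upstream request. The sketch checks this for the Example 3 endpoint; the prefixed `base_url` value `https://ml.internal.example.com/serving` is a hypothetical alternative configuration, and `join_upstream` is a hypothetical helper.

```python
def join_upstream(base_url: str, provider_path: str) -> str:
    """Apply the documented rule {base_url}/{provider-path}, avoiding doubled slashes."""
    return f"{base_url.rstrip('/')}/{provider_path.lstrip('/')}"


# Configuration from Example 3: domain-only base_url, full path in the gateway URL.
domain_only = join_upstream("https://ml.internal.example.com", "serving/models/my-model:predict")

# Hypothetical alternative: "/serving" moves into base_url, so the gateway URL
# would end in .../custom-internal-llm/models/my-model:predict instead.
with_prefix = join_upstream("https://ml.internal.example.com/serving", "models/my-model:predict")

print(domain_only)                 # https://ml.internal.example.com/serving/models/my-model:predict
print(domain_only == with_prefix)  # True
```

The prefixed form only works if every endpoint you call lives under `/serving`; otherwise keep the domain-only `base_url`.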

#### Example 4: Provider using OpenAI SDK with a custom base URL

When using the OpenAI SDK to connect to a custom provider through AI Gateway, set the SDK's `base_url` to the gateway's provider-specific endpoint path (up to and including the API version prefix that your provider expects).

**Configuration:**

* `slug`: `alt-provider`

* `base_url`: `https://api.alt-provider.com`

**Python (OpenAI SDK):**

```python

from openai import OpenAI

client = OpenAI(

api_key="your-provider-api-key",